Bridging the Gap Between Digital Creation and Physical Interactivity

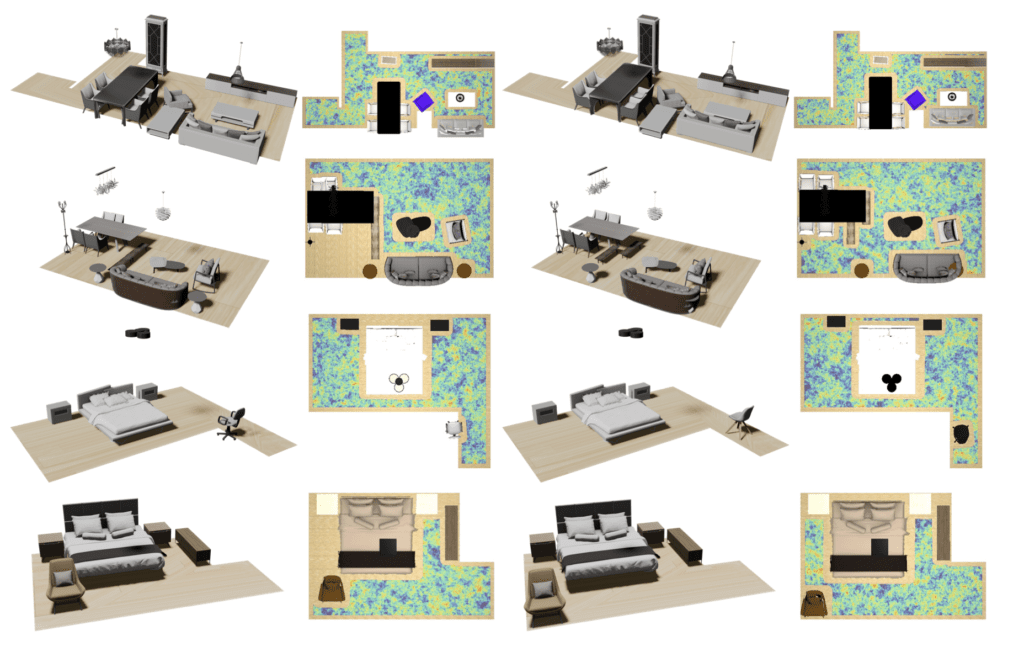

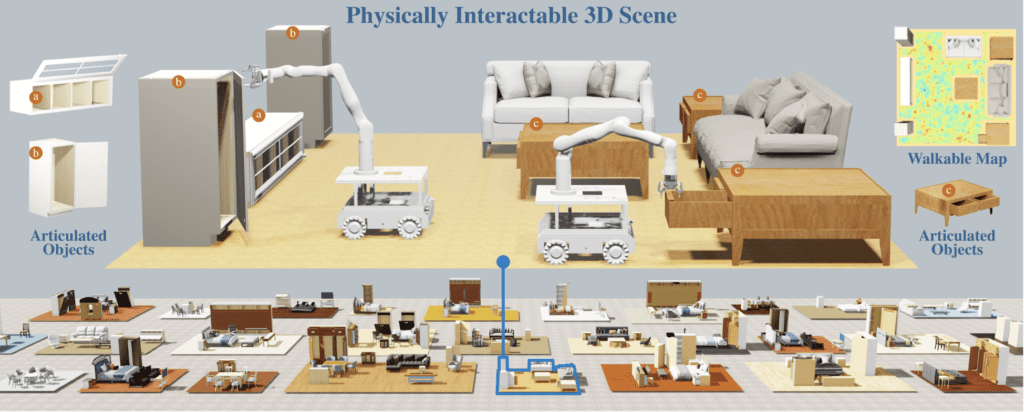

- Advanced Scene Synthesis: PhyScene introduces a conditional diffusion model designed to generate physically interactable 3D scenes that include articulated objects suitable for embodied AI tasks.

- Physics-Based Guidance: The approach adds guidance mechanisms grounded in physical constraints such as object collision and reachability, so that synthesized scenes are practically interactable as well as visually plausible.

- Empowering Embodied AI: By providing environments that support complex interactions, PhyScene aims to improve the training and development of AI agents for real-world applications that require nuanced physical interaction.
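To make the collision constraint mentioned above concrete, here is a minimal sketch of a collision cost over a floor-plan layout. It is an illustration under stated assumptions, not PhyScene's actual guidance function: the layout format (axis-aligned rectangles given as center and size) and the names `overlap_area` and `collision_cost` are hypothetical.

```python
from itertools import combinations

# Assumed layout format (hypothetical, not PhyScene's representation):
# each object is (x_center, y_center, width, depth) on the floor plane.

def overlap_area(a, b):
    """Area of intersection between two axis-aligned floor rectangles."""
    ax, ay, aw, ad = a
    bx, by, bw, bd = b
    # Overlap extent along each axis; negative means the boxes are apart.
    dx = min(ax + aw / 2, bx + bw / 2) - max(ax - aw / 2, bx - bw / 2)
    dy = min(ay + ad / 2, by + bd / 2) - max(ay - ad / 2, by - bd / 2)
    return max(dx, 0.0) * max(dy, 0.0)

def collision_cost(layout):
    """Sum of pairwise overlap areas; zero when no two objects intersect."""
    return sum(overlap_area(a, b) for a, b in combinations(layout, 2))

# Two 2x2 objects whose centers are 1 unit apart overlap in a 1x2 strip.
colliding = [(0.0, 0.0, 2.0, 2.0), (1.0, 0.0, 2.0, 2.0)]
separated = [(0.0, 0.0, 1.0, 1.0), (5.0, 5.0, 1.0, 1.0)]
print(collision_cost(colliding), collision_cost(separated))
```

A cost of this kind is zero for a collision-free layout and grows with the amount of interpenetration, which is what makes it usable as a signal for steering generation away from overlapping placements.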

Innovative Technology

PhyScene uses a conditional diffusion model that captures scene layouts and integrates guidance functions tailored for physical interactivity. This approach allows it to generate detailed scenes that AI agents can navigate and manipulate, reflecting the complexity of real-world environments.
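The general idea of steering a diffusion sampler with a guidance function can be sketched with a toy example. Everything here is an assumption made for illustration: `toy_denoiser` stands in for a learned model, the one-dimensional "layout" holds just two object positions, and the update rule is a generic gradient-guidance step rather than PhyScene's published procedure.

```python
import random

def numerical_grad(cost, x, eps=1e-4):
    """Central-difference gradient of a scalar cost at point x (a list)."""
    grad = []
    for i in range(len(x)):
        hi = x[:i] + [x[i] + eps] + x[i + 1:]
        lo = x[:i] + [x[i] - eps] + x[i + 1:]
        grad.append((cost(hi) - cost(lo)) / (2 * eps))
    return grad

def toy_denoiser(x, t):
    """Stand-in for a learned denoiser: pulls every coordinate toward 0."""
    return [0.9 * v for v in x]

def overlap_cost(x):
    """Two unit-width objects on a line collide when closer than 1 unit."""
    return max(0.0, 1.0 - abs(x[0] - x[1])) ** 2

def guided_sample(cost, dim, steps=50, scale=0.1, seed=0):
    """Reverse process: denoise, then step against the cost gradient."""
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in range(dim)]
    for t in range(steps, 0, -1):
        x = toy_denoiser(x, t)
        grad = numerical_grad(cost, x)
        x = [v - scale * g for v, g in zip(x, grad)]  # guidance step
        if t > 1:  # no noise is added on the final step
            sigma = 0.05 * t / steps
            x = [v + rng.gauss(0, sigma) for v in x]
    return x

guided = guided_sample(overlap_cost, dim=2, scale=0.1)
unguided = guided_sample(overlap_cost, dim=2, scale=0.0)
print(abs(guided[0] - guided[1]), abs(unguided[0] - unguided[1]))
```

In this toy setup the denoiser alone drives both objects to the same spot, while the guidance term keeps them apart. The `scale` parameter trades off how strongly the constraint overrides the model's own preference.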

Interactivity at the Core

Unlike traditional 3D scene synthesis, which focuses primarily on visual fidelity, PhyScene emphasizes the functional aspects of these environments. It incorporates physics-based constraints covering collision avoidance and object manipulation, so that elements within the scene are positioned in a physically plausible way.
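One simple way to test whether an agent could get to an object it is meant to interact with is a breadth-first search over an occupancy grid of the floor. The sketch below is illustrative only: the grid encoding (`#` for occupied, `.` for free) and the function names are assumptions, and the article does not state that PhyScene computes reachability this way.

```python
from collections import deque

def reachable_cells(grid, start):
    """Return all free cells connected to `start` by 4-neighbor moves.

    `grid` is a list of equal-length strings: '#' is occupied, '.' is free.
    """
    rows, cols = len(grid), len(grid[0])
    seen = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in seen:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return seen

def is_reachable(grid, start, target):
    """True if an agent at `start` can walk to `target` through free cells."""
    return target in reachable_cells(grid, start)

# A wall of furniture splits the first room in two; the second has a gap.
blocked = ["..#.",
           "..#.",
           "..#."]
open_gap = ["..#.",
            "....",
            "..#."]
print(is_reachable(blocked, (0, 0), (0, 3)))
print(is_reachable(open_gap, (0, 0), (0, 3)))
```

A layout that leaves the target cell disconnected from the agent's starting position would fail this check even if no two objects collide, which is why reachability is a separate constraint from collision avoidance.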

Research and Application

PhyScene’s development is a response to the growing need for more sophisticated training grounds for embodied AI systems, which are increasingly used in diverse fields such as robotics, virtual reality, and autonomous vehicles. By simulating realistic interactions within 3D environments, PhyScene provides a robust platform for developing and testing AI behaviors that require a deep understanding of physical spaces and objects.

Challenges and Future Directions

While PhyScene marks a significant advancement in 3D scene synthesis, the current version is limited to certain room types and larger objects. The inability to incorporate smaller, more detailed items into scenes restricts its use in tasks that involve fine-grained manipulation, such as picking up and placing small objects. Future enhancements are expected to address these limitations, broadening the scope of potential applications and further improving the system’s utility for complex embodied AI tasks.

PhyScene is a notable step forward for embodied AI, offering a tool that pairs visual realism with physical interactivity. Beyond improving how synthetic environments look, it changes how AI agents can learn from and interact with their surroundings. As the technology matures, it could open up new possibilities in automated systems and interactive technologies.