Public preview of the 7B OpenLLaMA model trained on 200 billion tokens released, with PyTorch and Jax weights available

- OpenLLaMA is an open-source reproduction of Meta AI’s LLaMA large language model, trained on the RedPajama dataset.

- Public preview of the 7B OpenLLaMA model has been released, with both PyTorch and Jax weights available for download on HuggingFace Hub.

- OpenLLaMA shows comparable performance to the original LLaMA and GPT-J across most tasks, and outperforms them in some instances.

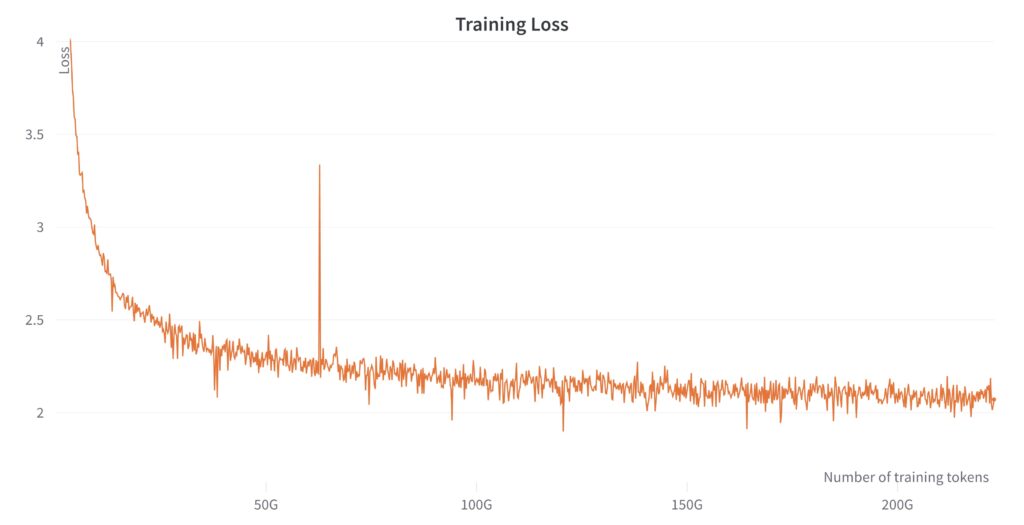

The OpenLLaMA project releases an open-source reproduction of Meta AI’s LLaMA large language model, with a permissive license for users. The public preview of the 7B OpenLLaMA model has been trained on 200 billion tokens, and both PyTorch and Jax weights of pre-trained OpenLLaMA models are provided.

The OpenLLaMA model is trained on the RedPajama dataset released by Together, a reproduction of the LLaMA training dataset containing over 1.2 trillion tokens. It follows the same preprocessing steps and training hyperparameters as the original LLaMA paper, with the only difference being the use of the RedPajama dataset instead of the original LLaMA dataset. The models are trained on cloud TPU-v4s using EasyLM, a JAX-based training pipeline developed for training and fine-tuning language models.

In terms of evaluation, OpenLLaMA was assessed on a wide range of tasks using lm-evaluation-harness, with the results comparing the original LLaMA model and GPT-J, a 6B parameter model trained on the Pile dataset by EleutherAI. OpenLLaMA demonstrates comparable performance to the original LLaMA and GPT-J across most tasks and outperforms them in certain cases. The performance of OpenLLaMA is expected to improve further once training on 1 trillion tokens is completed.

To encourage community feedback, a preview checkpoint of the weights has been released, available for download from HuggingFace Hub. The weights are provided in two formats: an EasyLM format for use with the EasyLM framework and a PyTorch format for use with the HuggingFace Transformers library. Instructions for using the weights in both frameworks can be found in their respective documentation.

The training framework EasyLM and the preview checkpoint weights are licensed permissively under the Apache 2.0 license. The current release is just a preview of the complete OpenLLaMA release, with the focus now on completing the training process on the entire RedPajama dataset. In addition to the 7B model, a smaller 3B model is in development to cater to low-resource use cases. Stay tuned for future updates and releases.