From Sensors to Collaboration Benchmarks: Meta Unveils Tools for the Next Generation of Robotics

- Meta FAIR introduces three new tools (Meta Sparsh, Meta Digit 360, and Meta Digit Plexus) to advance AI touch perception and robot dexterity.

- Partnerships with GelSight and Wonik Robotics will enable commercialization of these innovations and wider access to them.

- The new PARTNR benchmark will guide the development of socially aware, collaborative robots that interact smoothly with humans.

Meta’s Fundamental AI Research (FAIR) team has announced a set of advances in robotics focused on touch perception and dexterity. The new tools aim to create a world where robots are not only physically capable but also context-aware, making human-robot collaboration more seamless and intuitive. The announcement covers three tools: Meta Sparsh, Meta Digit 360, and Meta Digit Plexus. Through strategic partnerships, Meta plans to share these tools with the open-source community so that researchers and developers can build on them, iterate, and innovate.

Meta Sparsh: Pioneering Touch Perception

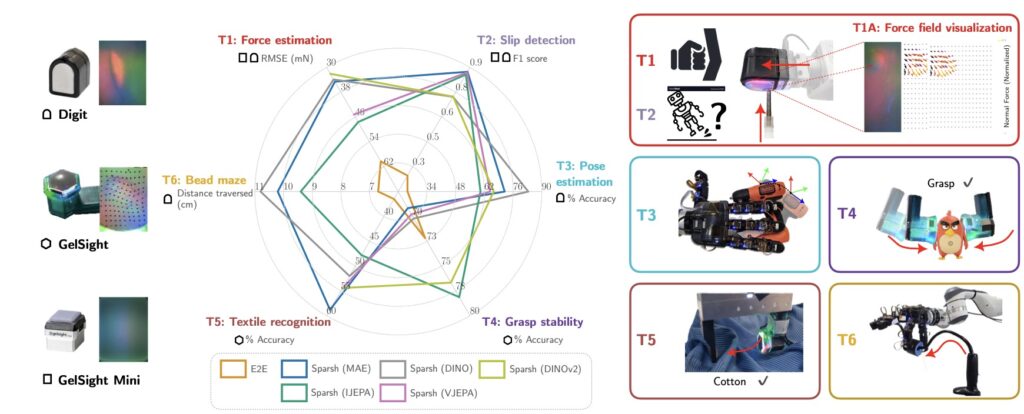

Meta Sparsh, named after the Sanskrit word for touch, is described by Meta as the first general-purpose encoder for tactile sensing. Traditional tactile sensing approaches are tailored to specific tasks and sensors, which makes them hard to scale across applications. Sparsh takes a different route: it is trained with self-supervised learning on over 460,000 tactile images, which enables it to work across diverse sensors and tasks. From grasping objects with variable textures to detecting minor shifts in pressure, Sparsh lays a foundation for robots to interpret touch with human-like subtlety. This holds promise for sectors that require delicate object handling, such as medical devices and manufacturing.
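To make the idea of a general-purpose encoder concrete, here is a toy Python sketch. It is not Sparsh and does not use any Meta API. The `toy_encoder` function is a hypothetical stand-in for a pretrained tactile encoder (its three hand-picked features replace what a real model would learn through self-supervision), and `force_head` and `slip_head` are invented task heads with made-up weights. The only point is the structure: one shared encoder, several small task-specific heads.

```python
from typing import Callable, List, Sequence

# A toy grayscale tactile image: rows of pixel intensities in [0, 1].
Image = Sequence[Sequence[float]]


def toy_encoder(image: Image) -> List[float]:
    """Hypothetical stand-in for a pretrained tactile encoder.

    A real encoder would be a learned network; here three simple
    statistics play the role of its feature vector.
    """
    pixels = [p for row in image for p in row]
    mean_intensity = sum(pixels) / len(pixels)
    peak_intensity = max(pixels)
    # Sum of absolute differences between horizontal neighbours.
    horizontal_change = sum(
        abs(row[i + 1] - row[i]) for row in image for i in range(len(row) - 1)
    )
    return [mean_intensity, peak_intensity, horizontal_change]


def linear_head(weights: Sequence[float], bias: float) -> Callable[[Sequence[float]], float]:
    """Build a small task-specific head on top of the shared features."""
    def head(features: Sequence[float]) -> float:
        return sum(w * f for w, f in zip(weights, features)) + bias
    return head


# Two illustrative tasks share the same encoder; the weights are made up.
force_head = linear_head([2.0, 0.5, 0.0], bias=0.0)
slip_head = linear_head([0.0, 0.0, 1.0], bias=-0.5)

image = [[0.0, 0.2], [0.4, 0.2]]
features = toy_encoder(image)         # computed once
force_estimate = force_head(features)  # reused by task 1
slip_score = slip_head(features)       # reused by task 2
```

In this arrangement, supporting a new task means adding another head rather than building a new model for each sensor and task pair, which is the scaling problem the paragraph above describes.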

Meta Digit 360: A Breakthrough in Robotic Fingertip Sensitivity

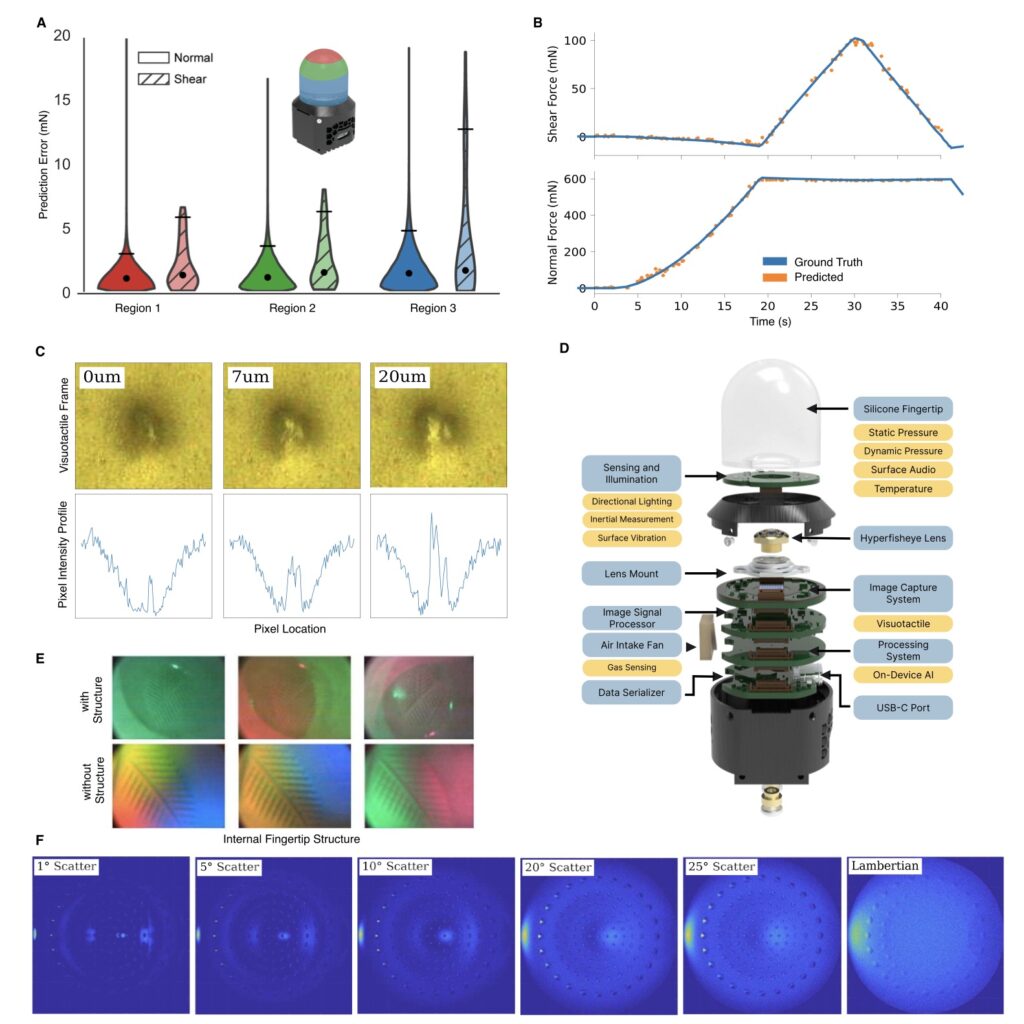

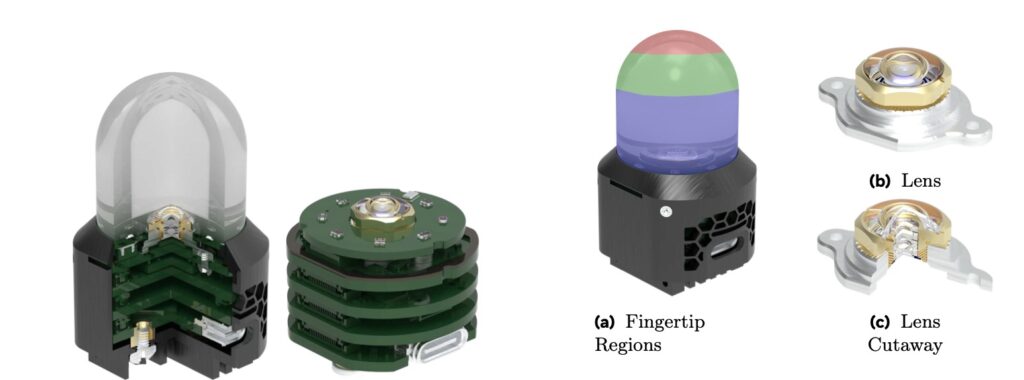

Meta Digit 360 is a fingertip sensor designed for human-level tactile precision. It captures data on 18+ sensing features, allowing it to closely mimic human tactile perception. Equipped with 8 million “taxels” (tactile pixels), this artificial fingertip can register tiny forces and provide real-time feedback, such as sensing an object’s softness or detecting surface vibrations. This multimodal sensing is intended to enable new AI capabilities, from dexterous object manipulation to realistic virtual touch in VR. By delivering precise feedback, Digit 360 also opens possibilities for medical and prosthetic applications, where touch sensitivity can greatly enhance functionality.

Meta Digit Plexus: A Platform for Tactile Integration

Meta Digit Plexus is a standardized hardware-software platform for integrating tactile sensors on a single robotic hand. It links multiple tactile sensors and consolidates their touch data into a single output, so that robots can make fast, informed decisions. This standardized structure is meant to simplify the deployment of touch-sensitive robotic systems and to enable more robust testing and experimentation. By lowering the cost of integrating tactile sensors, Digit Plexus makes it easier for researchers to pursue touch perception research in areas such as safe robot-assisted surgery and dexterous handling in supply chains.
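The consolidation step can be pictured with a short Python sketch. This is an illustration under stated assumptions, not the Digit Plexus interface: `TactileReading`, `consolidate`, and the sensor names are all hypothetical, and the merging rule (keep each sensor's most recent reading, then concatenate in a fixed order) is just one plausible choice.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Sequence


@dataclass(frozen=True)
class TactileReading:
    """Hypothetical reading from one tactile sensor on the hand."""
    sensor_id: str
    timestamp: float
    values: Sequence[float]


def consolidate(
    readings: Iterable[TactileReading], sensor_order: Sequence[str]
) -> List[float]:
    """Merge per-sensor readings into one flat frame.

    Keeps only the newest reading per sensor, then concatenates the
    values in `sensor_order` so the output layout is always the same.
    """
    latest: Dict[str, TactileReading] = {}
    for reading in readings:
        current = latest.get(reading.sensor_id)
        if current is None or reading.timestamp > current.timestamp:
            latest[reading.sensor_id] = reading

    missing = [s for s in sensor_order if s not in latest]
    if missing:
        raise ValueError(f"no reading received from: {missing}")

    frame: List[float] = []
    for sensor_id in sensor_order:
        frame.extend(latest[sensor_id].values)
    return frame


readings = [
    TactileReading("thumb", 0.0, [0.1, 0.2]),
    TactileReading("index", 0.0, [0.3]),
    TactileReading("thumb", 1.0, [0.5, 0.6]),  # newer thumb reading wins
]
frame = consolidate(readings, sensor_order=["thumb", "index"])
```

A fixed sensor order gives downstream code a stable layout to read from, and raising an error on a missing sensor avoids silently producing a shorter frame.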

PARTNR: A New Standard for Collaborative Robotics

To facilitate more meaningful human-robot collaboration, Meta introduces PARTNR (Planning And Reasoning Tasks in humaN-Robot collaboration), a benchmark for evaluating robots in collaborative tasks. Built on Habitat 3.0, the benchmark provides a realistic simulation environment in which robots interact with humans across a range of everyday tasks. With over 100,000 natural language tasks and 5,800 unique objects, PARTNR goes beyond testing isolated responses and requires coordination and adaptability in human-centered scenarios. It also supports reproducible assessments, giving researchers data for refining human-robot interaction in applications ranging from responsive personal assistants to household robots.

A Vision for Collaborative Robotics and the Path Forward

Meta FAIR’s announcements highlight an ambitious vision for the future of robotics: creating embodied AI that perceives and interacts with the physical world with human-like awareness. Through its partnerships with GelSight and Wonik Robotics, Meta FAIR aims to accelerate the real-world adoption of these innovations. GelSight will manufacture Digit 360 sensors, which will soon be available to the research community, while Wonik Robotics is collaborating with Meta to launch the next-generation Allegro Hand with integrated tactile sensing.

Meta’s recent developments point toward a future where AI robots are more adaptable, more intuitive, and better able to assist with complex human tasks. Whether in healthcare, manufacturing, or everyday interactions, Meta’s latest contributions to tactile AI and collaborative benchmarks signal a move toward intelligent, human-centric robotics. By releasing these tools and partnering with industry leaders, Meta is not only advancing machine intelligence but also fostering a collaborative community committed to exploring the potential of AI for the greater good.