Adobe introduces DiffusionGS, a breakthrough in fast and scalable image-to-3D creation.

- Adobe unveils DiffusionGS, a 3D diffusion model that generates view-consistent 3D outputs from a single 2D image.

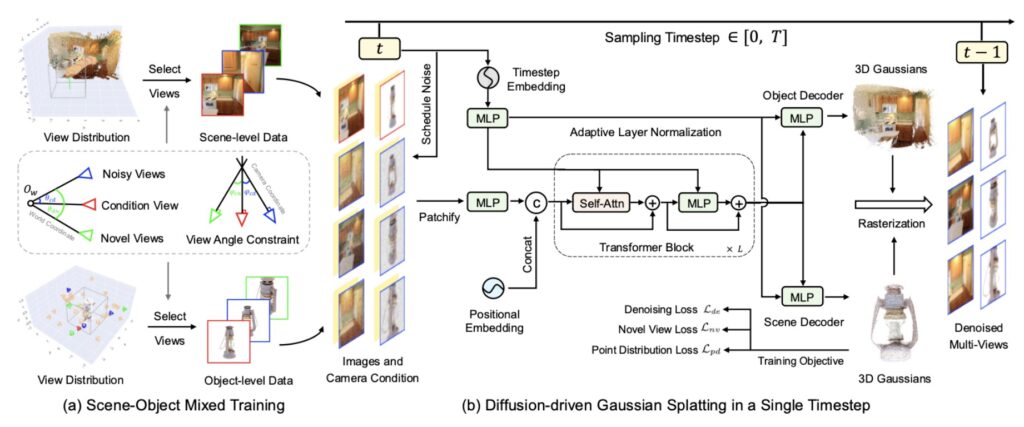

- The model uses Gaussian splatting to keep renderings consistent across viewpoints, and it accepts both object-level and scene-level inputs.

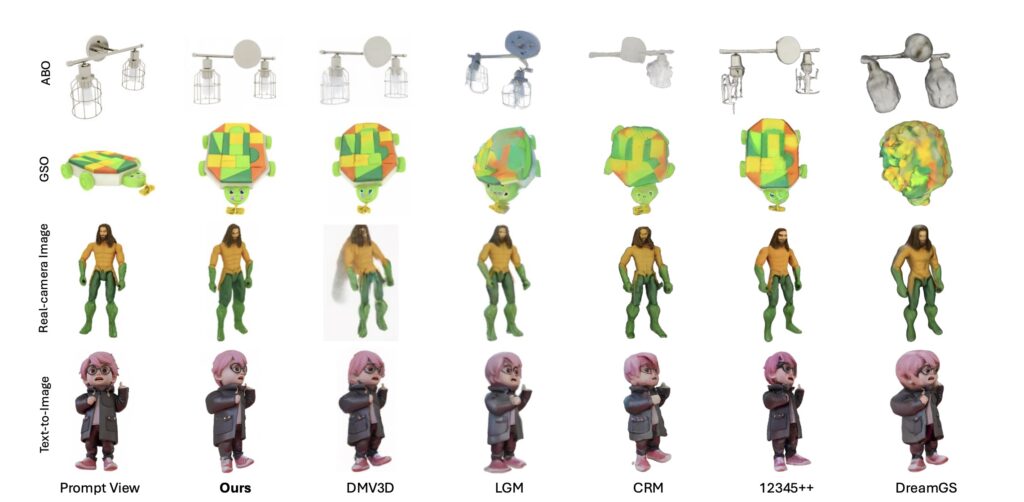

- DiffusionGS produces a 3D result in about 6 seconds on an A100 GPU, more than five times faster than existing state-of-the-art (SOTA) methods, while also scoring higher on image-quality metrics.

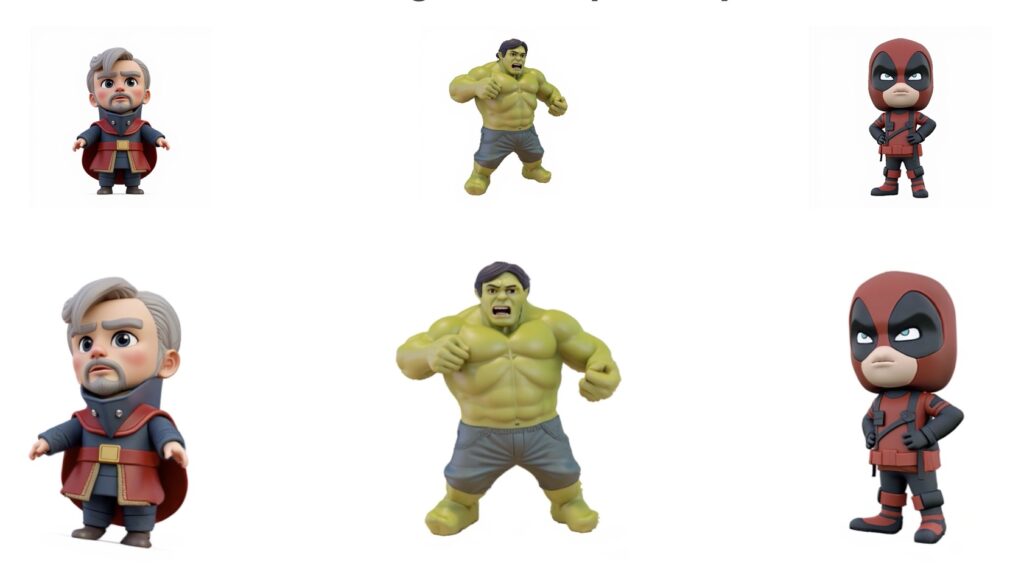

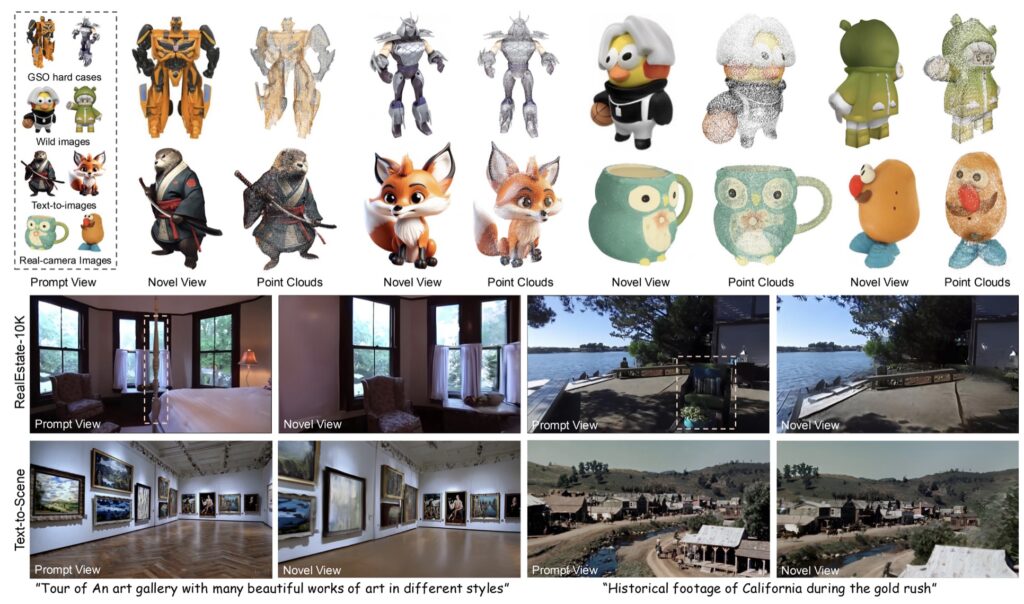

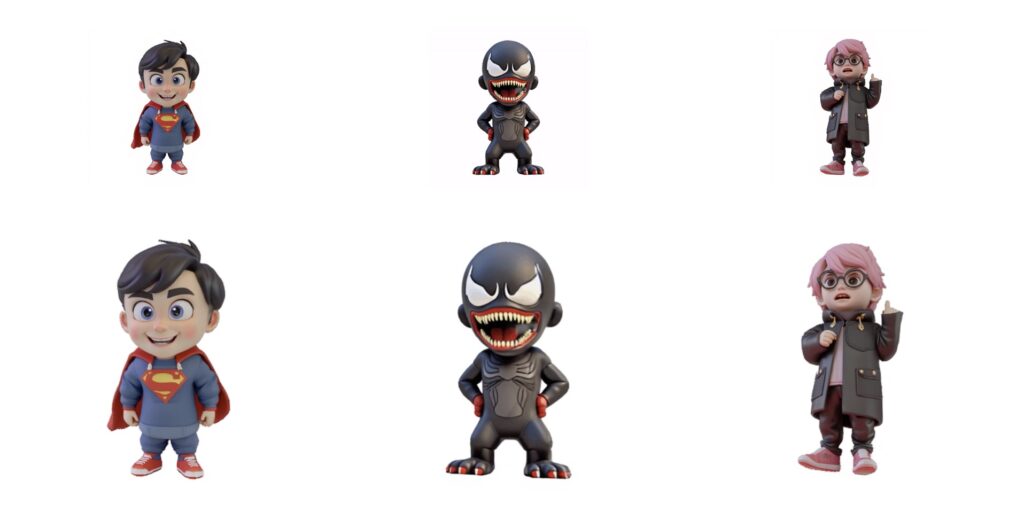

Adobe’s DiffusionGS sets a new benchmark in image-to-3D generation with a single-stage design. Traditional methods rely on 2D multi-view diffusion models, whose generated views often disagree with one another in 3D. DiffusionGS instead outputs 3D Gaussian point clouds directly, so every rendered view comes from the same underlying 3D representation and stays coherent across viewing angles. Because it is not limited to object-centric inputs, it can generate both individual objects and full scenes from a single input image.
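The article does not explain how a Gaussian point cloud becomes an image. In standard 3D Gaussian splatting, each Gaussian is projected to screen space, and the projected Gaussians covering a pixel are sorted by depth and alpha-composited front to back. The sketch below shows only that compositing step for one pixel, in pure Python. The `Gaussian2D` fields and the `splat_pixel` helper are hypothetical names, projection from 3D is omitted, and the clamping thresholds are assumptions modeled on common 3DGS rasterizers; this is the generic rendering rule, not Adobe's implementation.

```python
import math
from dataclasses import dataclass


@dataclass
class Gaussian2D:
    """A Gaussian already projected to screen space (hypothetical minimal fields)."""
    mean: tuple[float, float]            # projected center (x, y) in pixels
    inv_cov: tuple[float, float, float]  # inverse 2x2 covariance [[a, b], [b, c]] stored as (a, b, c)
    depth: float                         # view-space depth, used for sorting
    opacity: float                       # opacity in [0, 1]
    color: tuple[float, float, float]    # RGB in [0, 1]


def splat_pixel(px: float, py: float, gaussians: list[Gaussian2D]):
    """Front-to-back alpha compositing of projected Gaussians at one pixel.

    Returns the composited RGB color and the remaining transmittance
    (how much background would still show through).
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for g in sorted(gaussians, key=lambda g: g.depth):  # nearest first
        dx, dy = px - g.mean[0], py - g.mean[1]
        a, b, c = g.inv_cov
        # Gaussian falloff: exp(-0.5 * squared Mahalanobis distance)
        power = -0.5 * (a * dx * dx + 2.0 * b * dx * dy + c * dy * dy)
        alpha = min(0.99, g.opacity * math.exp(power))
        if alpha < 1.0 / 255.0:
            continue  # contribution too small to matter
        for k in range(3):
            color[k] += transmittance * alpha * g.color[k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:
            break  # pixel is effectively opaque; farther Gaussians are hidden
    return tuple(color), transmittance
```

For example, a half-transparent red Gaussian in front of an opaque blue one yields a mix of roughly half red and half blue at their shared center. Because every camera view is rendered from the same set of Gaussians by this rule, the views cannot contradict each other the way independently generated 2D views can.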

The system’s ability to generalize comes from two additions: a training strategy that mixes scene-level and object-level data, and a new camera-conditioning method called RPPC. Together, they help DiffusionGS handle complex geometry and textures with high precision.
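The article names RPPC as a new camera-conditioning method but does not give its formula. A common baseline for conditioning a diffusion model on camera pose is a per-pixel ray embedding in Plücker coordinates, which the sketch below builds for a pinhole camera. All names, the OpenCV-style axis convention, and the intrinsics parameters (`fx`, `fy`, `cx`, `cy`) are assumptions. The `reference_point` function is a hypothetical "reference-point" variant, included only to illustrate the idea of describing a ray by a concrete point on it; it should not be read as the DiffusionGS definition of RPPC.

```python
import math

Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]  # row-major 3x3 camera-to-world rotation


def dot(u: Vec3, v: Vec3) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def cross(u: Vec3, v: Vec3) -> Vec3:
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)


def pixel_ray(u: float, v: float, fx: float, fy: float, cx: float, cy: float,
              cam_to_world: Mat3, center: Vec3) -> tuple[Vec3, Vec3]:
    """World-space ray (origin, unit direction) through pixel (u, v)."""
    d_cam = ((u - cx) / fx, (v - cy) / fy, 1.0)  # camera looks along +z
    d_world = tuple(dot(row, d_cam) for row in cam_to_world)
    return center, normalize(d_world)


def plucker(origin: Vec3, d: Vec3) -> tuple[float, ...]:
    """Standard 6-D Plücker embedding: unit direction d and moment o x d."""
    return (*d, *cross(origin, d))


def reference_point(origin: Vec3, d: Vec3) -> tuple[float, ...]:
    """HYPOTHETICAL variant: direction plus the ray point closest to the world origin."""
    t = dot(origin, d)
    p = tuple(o - t * di for o, di in zip(origin, d))
    return (*d, *p)
```

Both embeddings are unchanged if the ray origin slides along the ray, so they describe the ray itself rather than a particular camera center. Computing one 6-D vector per pixel gives the model a dense, image-aligned encoding of the camera pose.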

Unmatched Speed and Quality

DiffusionGS improves efficiency as well as quality. Running on an A100 GPU, the model produces a 3D output in about 6 seconds, more than five times faster than existing SOTA methods. Image quality also improves: for scene generation, PSNR rises by up to 2.91 dB and FID drops by 75.68.
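To put these figures in perspective, the short calculation below uses the standard PSNR definition, PSNR = 10 log10(MAX² / MSE), to convert the reported 2.91 dB gain into a change in mean squared error, and it works out the baseline runtime implied by "6 seconds, more than five times faster". The helper names are illustrative. FID is not converted here: it is a distance between distributions of image features with no fixed scale, so a 75.68-point drop is only meaningful relative to the benchmark on which it was measured.

```python
import math


def psnr(mse: float, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for pixel values in [0, max_val]."""
    return 10.0 * math.log10(max_val ** 2 / mse)


def mse_ratio_for_gain(delta_db: float) -> float:
    """Factor by which MSE must shrink for PSNR to rise by delta_db."""
    return 10.0 ** (-delta_db / 10.0)


def implied_baseline_time(ours_s: float, speedup: float) -> float:
    """Runtime a baseline must have if ours_s is `speedup` times faster."""
    return ours_s * speedup


ratio = mse_ratio_for_gain(2.91)
print(f"A 2.91 dB PSNR gain means MSE falls to {ratio:.1%} of the baseline")
print(f"6 s at a 5x speedup implies baselines take at least "
      f"{implied_baseline_time(6.0, 5.0):.0f} s")
```

Because PSNR is logarithmic, a gain of about 3 dB corresponds to roughly halving the pixel-wise squared error, which is why a 2.91 dB improvement is substantial.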

Such performance has practical implications, particularly for text-to-3D applications in which users generate lifelike 3D models from text descriptions. The combination of speed, precision, and versatility makes DiffusionGS a valuable tool for industries such as gaming, animation, and virtual reality.

Shaping the Future of AI-Driven 3D Modeling

DiffusionGS is more than a technical milestone; it’s a leap forward for AI-driven creativity. By combining Gaussian splatting with diffusion modeling and a mixed scene-object training strategy, Adobe has delivered a model that is both fast and high-quality, and that works on individual objects as well as full scenes.

As industries increasingly adopt AI solutions for content creation, DiffusionGS stands out as a pioneering tool, paving the way for faster, more accessible 3D modeling workflows. For artists, developers, and researchers, this technology offers a glimpse into the future of AI-assisted creativity—efficient, accurate, and truly transformative.