Stanford and Google researchers have developed AI agents that replicate human behavior with surprising accuracy, but the work raises ethical concerns.

- AI That Thinks Like You: Using just a two-hour interview, researchers can create AI agents that mimic individual personalities with up to 85% accuracy.

- Potential Applications: These agents could revolutionize fields like policymaking, market research, and social science by simulating human responses.

- Ethical Risks: The technology raises concerns about misuse, from social engineering scams to manipulative policymaking and marketing strategies.

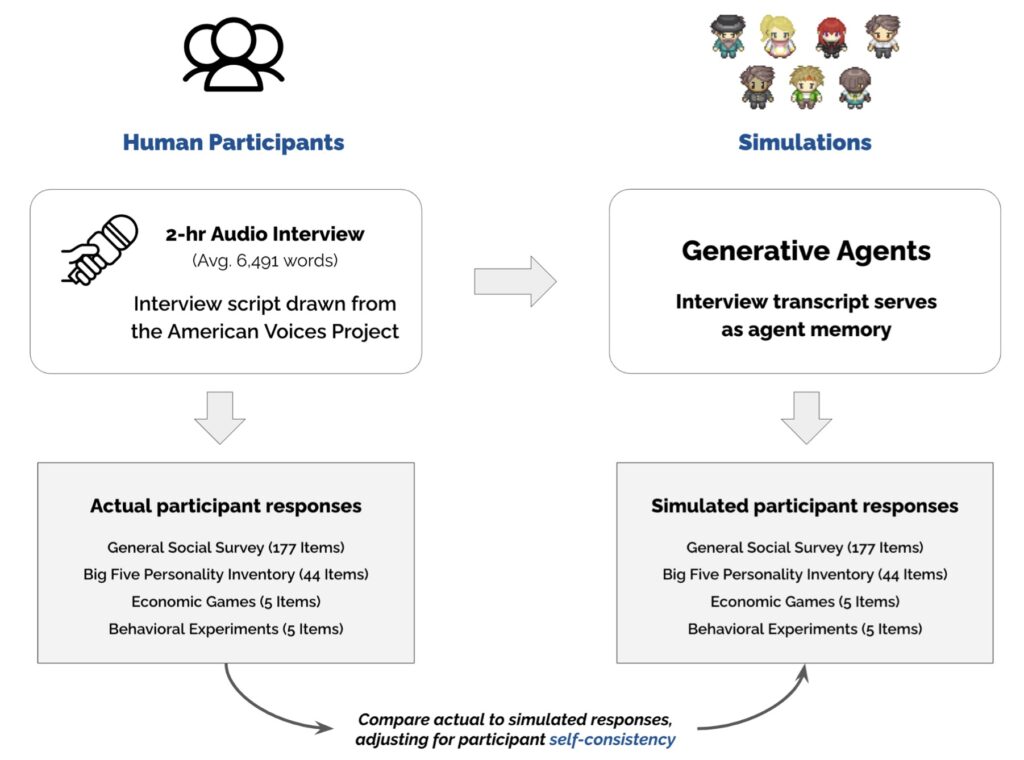

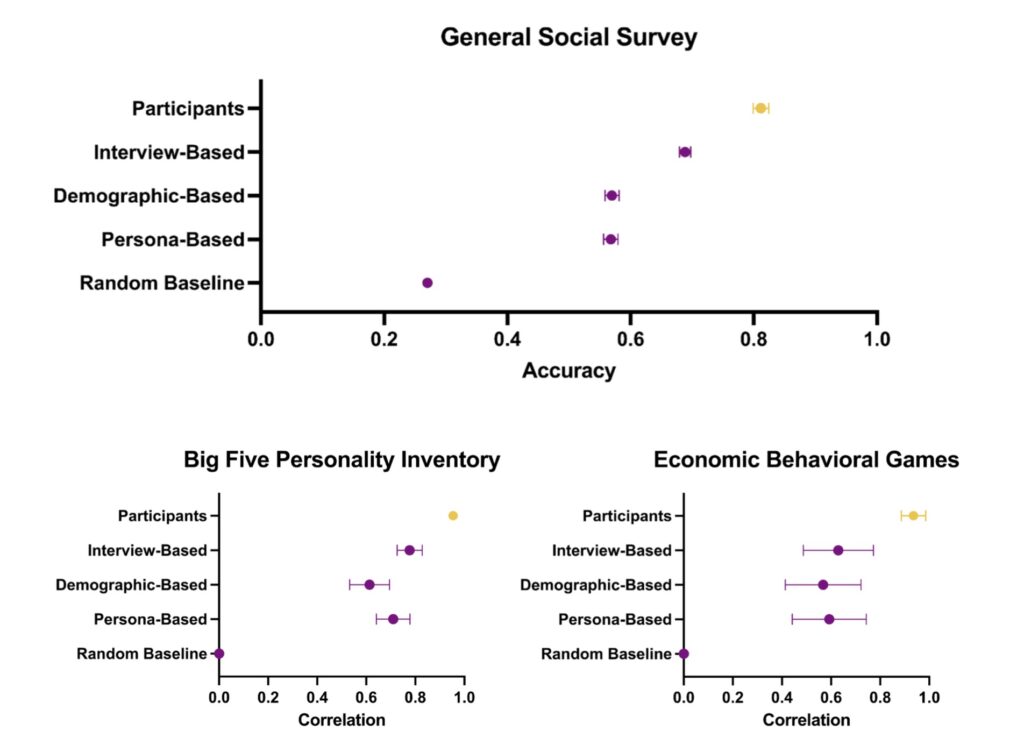

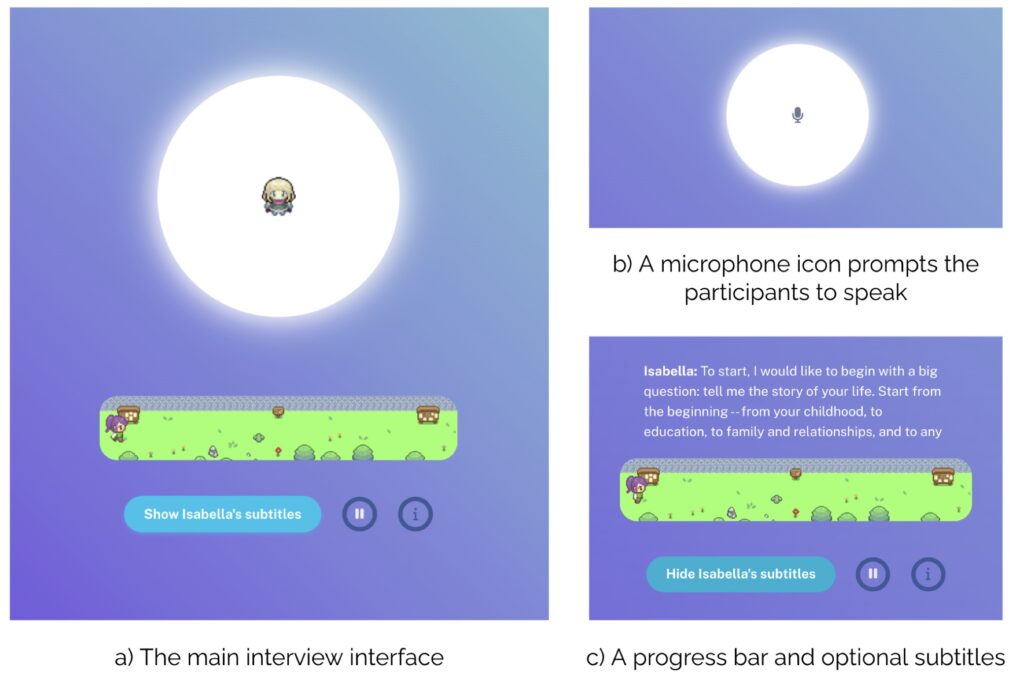

Stanford University and Google DeepMind have unveiled a groundbreaking AI system capable of creating generative agents that replicate human behavior. By conducting two-hour interviews and analyzing transcripts averaging 6,491 words, the researchers produced AI agents that answered questions and mimicked personality traits with up to 85% accuracy. This technology, documented in the study “Generative Agent Simulations of 1,000 People,” highlights the potential for AI to simulate human-like interactions across various applications.

Potential for Public Insight and Manipulation

The AI agents are designed to help policymakers, researchers, and businesses better understand public behavior. For example, instead of traditional focus groups, organizations could use AI clones to predict responses to policy changes or product launches. The agents also demonstrate promise in advancing social science research, offering insights into collective behavior and decision-making.

However, this innovation raises ethical concerns. If used irresponsibly, these AI agents could be exploited to manipulate public opinion, bypass informed consent, or engage in harmful activities like social engineering.

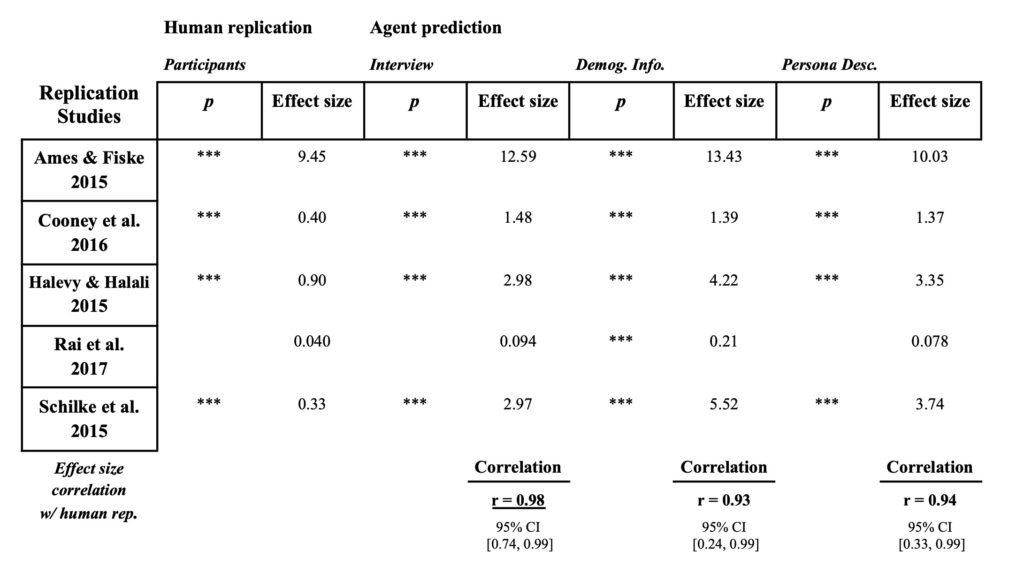

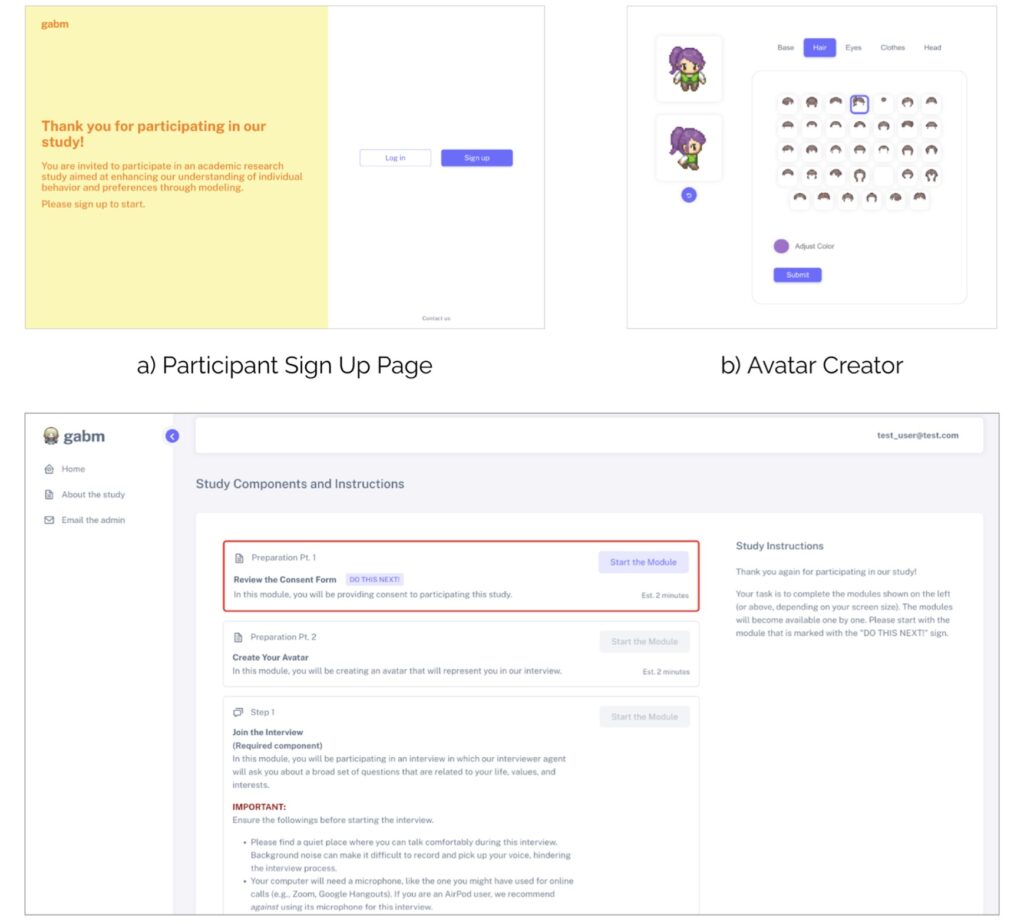

The Process: From Data to Digital Clone

Participants in the study were paid to answer questions on topics ranging from family life to political beliefs. Their responses were fed into a language model, which then generated AI agents designed to imitate their behavior. These agents were tested using established psychological measures, such as the General Social Survey (GSS) and Big Five Personality Inventory (BFI), as well as economic games like the Prisoner’s Dilemma. The agents performed well at answering survey questions, but their ability to replicate decision-making in the games lagged behind, reaching only 60% accuracy when compared with the real participants’ choices.
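As a rough illustration of what an accuracy figure like this could mean, the sketch below scores an agent by the share of survey questions on which its answer matches the participant's own. The function name and the sample answers are hypothetical and invented for illustration; the study's actual scoring method may differ.

```python
from typing import Sequence


def agreement_rate(agent_answers: Sequence[str], human_answers: Sequence[str]) -> float:
    """Return the fraction of questions where the agent matches the participant.

    This is an assumed, simplified metric, not the study's published method.
    """
    if len(agent_answers) != len(human_answers):
        raise ValueError("answer lists must be the same length")
    if not human_answers:
        raise ValueError("at least one answer is required")
    # Count the positions where both gave the same answer.
    matches = sum(a == h for a, h in zip(agent_answers, human_answers))
    return matches / len(human_answers)


# Hypothetical survey answers, made up for this example only.
participant = ["agree", "disagree", "agree", "neutral", "agree"]
agent = ["agree", "disagree", "neutral", "neutral", "agree"]

print(agreement_rate(agent, participant))  # 0.8 (4 of 5 answers match)
```

A simple match rate like this treats every question equally and ignores how consistent people are with themselves over time, which is one reason a real evaluation might need a more careful baseline.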

Risks and Ethical Implications

The ability to replicate personalities so quickly brings significant risks. AI systems trained on personal interviews could be weaponized in scams, impersonating individuals convincingly enough to deceive people who know them. Additionally, corporations or political entities could use these AI clones to simulate public sentiment, creating policies or products based on an approximation of the public’s will rather than actual feedback.

The technology also raises questions about consent and privacy. Could a corporation create AI clones using publicly available social media data without explicit permission? The implications extend far beyond research, touching on critical issues of trust and accountability in AI development.

The Future of AI-Driven Simulations

While this technology offers exciting opportunities for understanding human behavior, its potential for misuse cannot be ignored. As researchers refine these systems, there is an urgent need to establish ethical guidelines and safeguards. Ensuring transparency, consent, and oversight will be critical in preventing the exploitation of such powerful tools.

The promise of AI that “thinks like you” is as thrilling as it is unsettling. Whether this innovation becomes a force for good or a tool for harm depends on how society chooses to wield it.