How a Modality-Specific Normalization Strategy is Redefining Scalable and High-Quality Virtual Try-On

- Revolutionizing Virtual Try-On (VTON): A new single-network paradigm eliminates the need for dual networks, enhancing efficiency and scalability.

- High-Quality Results: A modality-specific normalization strategy preserves garment details while producing realistic results in both images and videos.

- E-commerce Transformation: This breakthrough makes VTON more accessible, enabling better user experiences and reducing operational costs.

Virtual Try-On (VTON) is revolutionizing e-commerce by letting users see how garments would look on them without physically trying them on. It combines realism with convenience, helping retailers reduce photoshoot costs and empowering shoppers to explore styles interactively. However, generating high-fidelity try-on results that preserve both the garment's details and the wearer's appearance has been a persistent challenge.

Early VTON methods relied on a single generative network, but these approaches struggled to preserve garment textures and keep the garment correctly aligned with the body. Dual-network paradigms addressed these issues by adding a second network dedicated to extracting garment features. While effective, running two networks carries a significant computational cost, which limits the approach's practicality for high-resolution and real-time applications.

A Single-Network Paradigm: Introducing MN-VTON

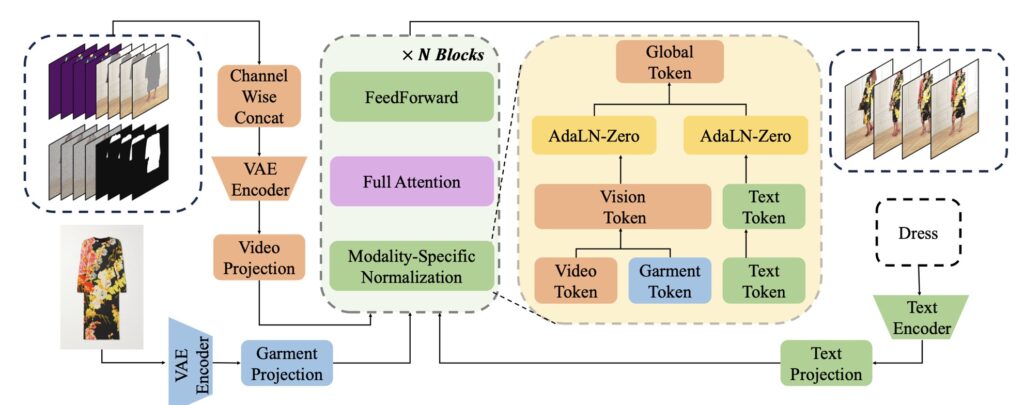

In a notable development, researchers have introduced MN-VTON, a single-network framework that rivals the quality of dual-network systems while substantially reducing computational demands. The key is a Modality-Specific Normalization (MSN) strategy that lets text, image, and video inputs be processed within the same network layers. As a result, MN-VTON preserves garment realism, texture detail, and alignment, addressing the shortcomings of earlier methods.

The MSN strategy allows the model to adapt to different input modalities without a separate feature-extraction network. This not only simplifies the architecture but also allows garment features to be fused seamlessly, maintaining high visual fidelity across use cases.
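The article does not describe MSN's internals. One common way to realize "modality-specific normalization" is to share a layer's weights across all inputs while giving each modality its own normalization parameters. The minimal sketch below assumes exactly that: the class `ModalitySpecificLayerNorm`, the helper `shared_block`, the three modality labels, and the LayerNorm-style formulation are all hypothetical illustrations, not MN-VTON's published implementation. It is written in plain Python so it runs without any deep-learning framework.

```python
import math
from dataclasses import dataclass


@dataclass
class AffineParams:
    gamma: list[float]  # per-channel scale
    beta: list[float]   # per-channel shift


class ModalitySpecificLayerNorm:
    """Hypothetical sketch: the normalization math is shared,
    but each modality owns its own scale/shift parameters."""

    def __init__(self, dim, modalities=("text", "image", "video"), eps=1e-5):
        self.dim = dim
        self.eps = eps
        # Separate (gamma, beta) lists per modality, initialized to identity.
        self.params = {m: AffineParams([1.0] * dim, [0.0] * dim) for m in modalities}

    def __call__(self, tokens, modality):
        p = self.params[modality]  # raises KeyError for an unknown modality
        out = []
        for x in tokens:
            if len(x) != self.dim:
                raise ValueError(f"expected {self.dim} channels, got {len(x)}")
            mean = sum(x) / self.dim
            var = sum((v - mean) ** 2 for v in x) / self.dim
            inv_std = 1.0 / math.sqrt(var + self.eps)
            # Normalize, then apply this modality's own scale and shift.
            out.append([g * (v - mean) * inv_std + b
                        for v, g, b in zip(x, p.gamma, p.beta)])
        return out


def shared_block(norm, weight, tagged_tokens):
    """Hypothetical helper: normalize each (modality, token) pair with its
    modality's parameters, then apply ONE weight matrix shared by all modalities."""
    out = []
    for modality, x in tagged_tokens:
        (y,) = norm([x], modality)
        out.append([sum(w * v for w, v in zip(row, y)) for row in weight])
    return out
```

For example, after `norm = ModalitySpecificLayerNorm(4)` and `norm.params["image"].gamma = [2.0] * 4`, the same input token comes out of the image path scaled twice as strongly as from the text path, while both still pass through the same shared weights. Under this assumption, each extra modality costs only two small parameter vectors rather than a whole second network, which is the kind of saving the single-network argument relies on.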

High Performance, Low Overhead

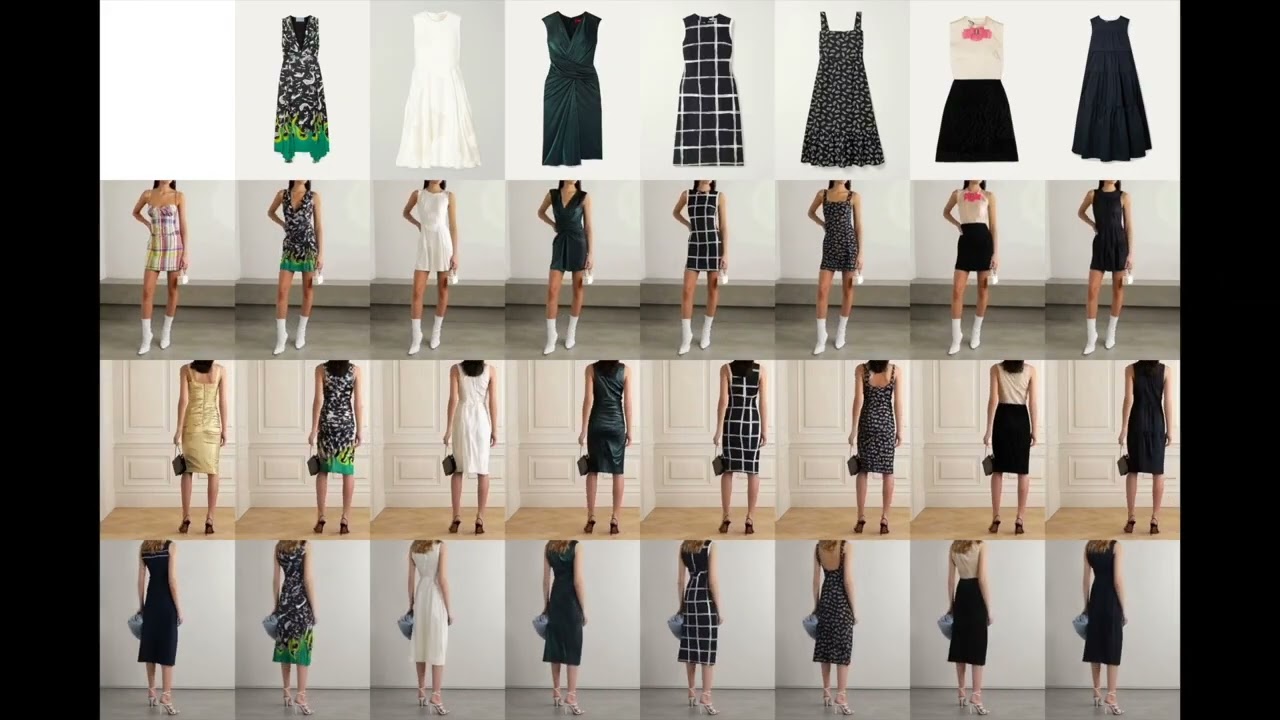

In the reported experiments, MN-VTON outperforms dual-network systems in both subjective and objective evaluations. For image-based VTON, it produces lifelike textures and preserves the subject's original pose and appearance. For video-based VTON, the model maintains temporal consistency, avoiding the frame-to-frame flickering that often plagues dynamic scenes.

The efficiency of the single-network design makes it highly scalable, opening doors for real-time applications and broader adoption in e-commerce platforms. With reduced computational costs, businesses can integrate VTON into user-friendly apps without the need for expensive hardware.

Transforming E-commerce and Beyond

The implications of MN-VTON extend far beyond technical advancements. For retailers, it means the ability to display entire collections on virtual models without costly photoshoots. For consumers, it offers a personalized and engaging shopping experience. Additionally, MN-VTON's scalability makes it a promising tool for industries such as gaming, fashion design, and content creation.

By simplifying the architecture and achieving superior results, MN-VTON is setting the stage for the next era of VTON systems. As researchers refine its capabilities, the vision of real-time, high-quality garment visualization is becoming a reality, fundamentally transforming how we shop and interact with fashion.

MN-VTON exemplifies how innovation in AI-driven frameworks can overcome existing limitations, making cutting-edge technology accessible and practical for real-world applications. This breakthrough marks the beginning of a new chapter in virtual try-on technology, redefining efficiency and quality for the modern age.