From Lyrics to Symphony: How YuE’s Breakthroughs in Long-Form Music Generation Are Outpacing Closed-Source Rivals

- YuE (乐) is the first open-source AI model capable of generating full-length songs (up to 5 minutes)—complete with vocals, instrumentals, and genre diversity—directly from lyrics, rivaling proprietary systems like Suno.ai.

- Innovative techniques—dual-token synchronization, lyrics-chain-of-thought generation, and a 3-stage training scheme—solve long-standing challenges in music AI, from polyphonic complexity to lyrical coherence.

- Ethical and industry upheaval looms: As YuE democratizes AI music creation, debates over copyright, cultural bias, and the future of human artistry intensify.

The Death Knell for Closed Music AI Systems?

The race to dominate AI-generated music just took a seismic turn. While Western platforms like Suno.ai and Udio have dazzled users with vocal snippets and instrumental loops, China’s newly unveiled YuE (乐, meaning “music” in Mandarin) has leapfrogged them by delivering full-song generation: an open-source powerhouse that transforms raw lyrics into polished, multi-minute tracks across genres, languages, and cultural styles. Built on the Llama architecture and compatible with Hugging Face tooling, YuE isn’t just a tool; it’s a paradigm shift.

Until now, startups like Suno.ai and Udio have dominated AI music generation with proprietary models that turn text into short clips. But their closed ecosystems, limited customization, and opaque data practices leave them exposed to an open challenger.

Why Music AI Is Harder Than You Think

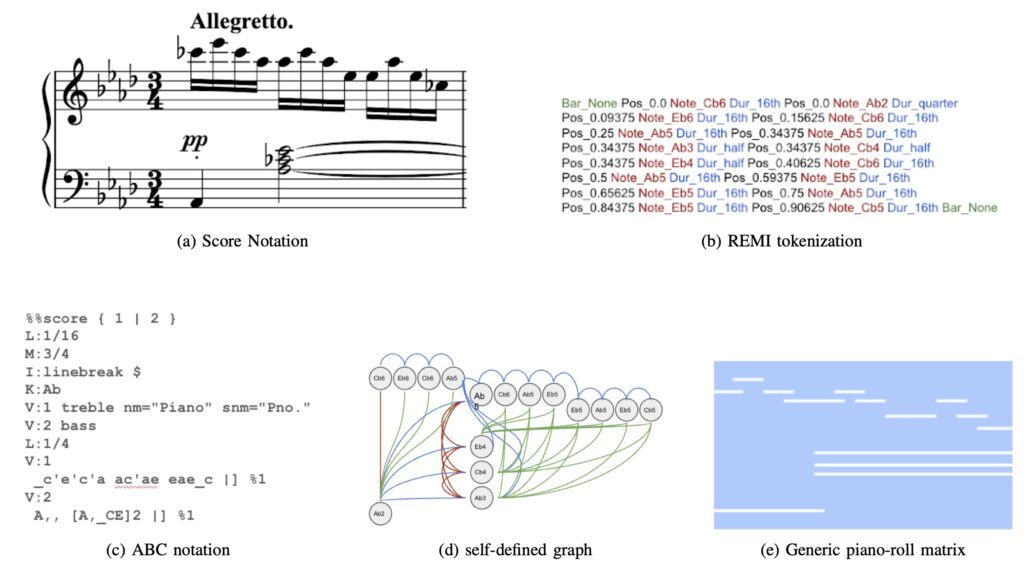

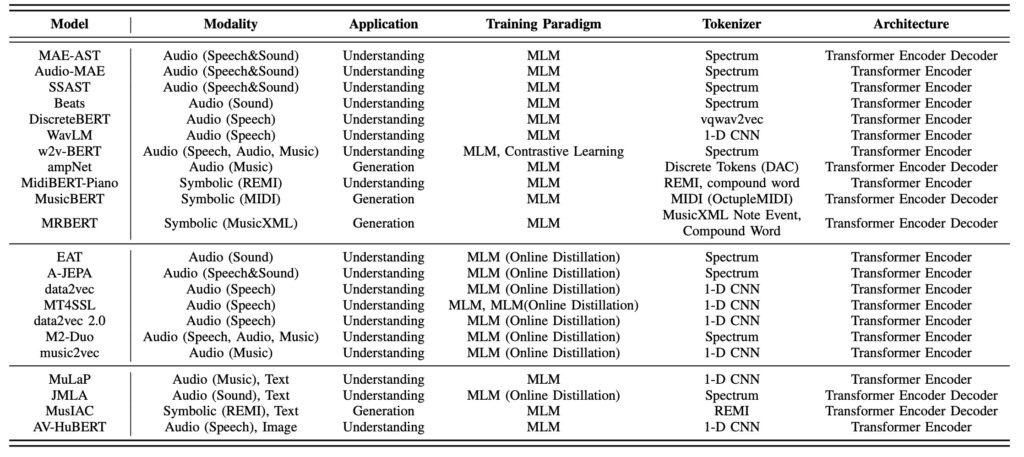

Music generation isn’t just ChatGPT with a beat. Unlike speech or text, music is a polyphonic labyrinth: multiple “voices” (instruments, vocals) overlap, cultural context shapes meaning, and structure demands coherence over minutes, not seconds. Traditional models stumble on four core challenges:

- Long-form storytelling: A 5-minute song requires modeling 10x more data than a 30-second clip.

- Polyphonic chaos: Keeping vocals, drums, and synths distinct and undistorted while they sound at the same time is akin to untangling a symphony of overlapping conversations.

- Lyrics-audio mismatch: Most datasets lack aligned lyrics and audio, forcing models to “hallucinate” connections.

- Cultural blind spots: Western-centric training data often ignores global musical traditions.
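To make the first challenge concrete, here is a back-of-the-envelope calculation. It assumes a codec that produces 50 token frames per second with one vocal and one instrumental token per frame; those rates are illustrative assumptions, not figures from this article. The point it shows: a song 10x longer needs 10x the tokens, and because full self-attention scales with the square of sequence length, it costs roughly 100x the attention compute.

```python
# Back-of-the-envelope sequence-length arithmetic for long-form music.
# ASSUMPTION: 50 token frames per second and 2 tokens per frame
# (one vocal + one instrumental). These rates are illustrative only.
FRAMES_PER_SECOND = 50
TOKENS_PER_FRAME = 2


def num_tokens(seconds: float) -> int:
    """Tokens needed to represent `seconds` of audio under the assumed rates."""
    return int(seconds * FRAMES_PER_SECOND * TOKENS_PER_FRAME)


def attention_cost_ratio(long_seconds: float, short_seconds: float) -> float:
    """Relative cost of full self-attention, which grows with length squared."""
    return (num_tokens(long_seconds) / num_tokens(short_seconds)) ** 2


clip, song = 30, 5 * 60
print(num_tokens(clip))                     # 3000
print(num_tokens(song))                     # 30000
print(num_tokens(song) / num_tokens(clip))  # 10.0
print(attention_cost_ratio(song, clip))     # 100.0
```

Linear growth in data is the easy part; the quadratic growth in attention cost is why long-form generation needs structural tricks rather than just a bigger context window.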

YuE tackles these challenges head-on with four technical innovations.

How YuE Works: Four Technical Breakthroughs

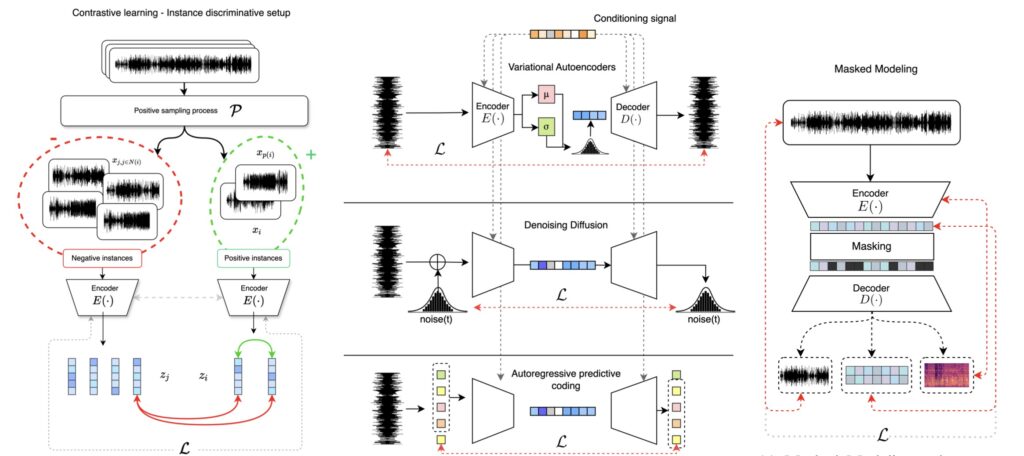

- Semantically Enhanced Audio Tokenizer:

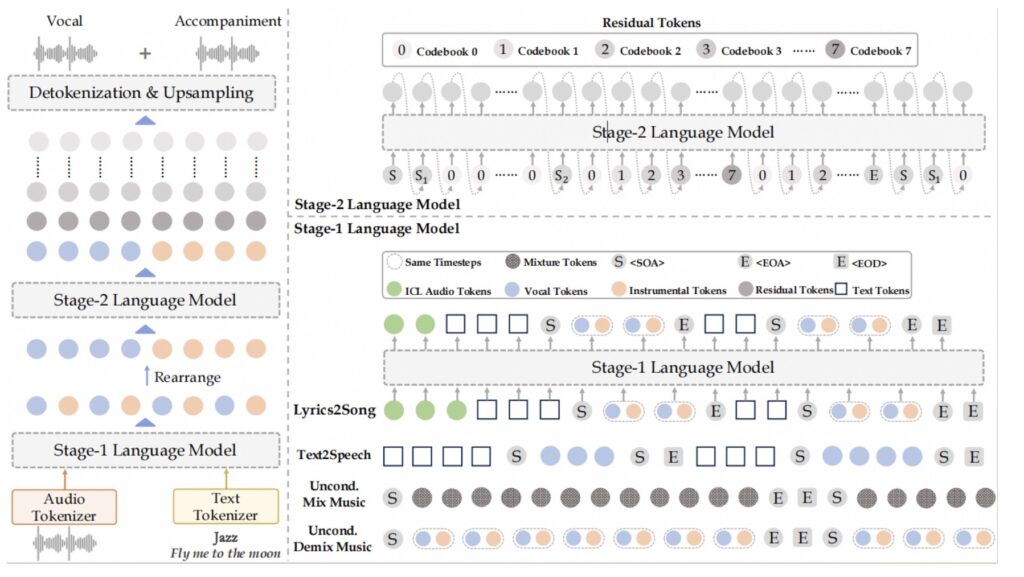

Compressing raw audio into tokens is like translating a symphony into Morse code. YuE’s tokenizer preserves vocal nuances and instrumental textures, and because its tokens carry semantic information alongside acoustic detail, the language model has less to learn from raw sound, which slashes training costs and accelerates convergence.

- Dual-Token Technique:

Vocals and instrumentals are modeled simultaneously without altering Llama’s architecture: each moment of audio is represented by a vocal token and an instrumental token placed side by side in a single sequence. Imagine two conductors leading an orchestra in sync; YuE’s dual tokens keep vocals on-beat while guitars riff in the background.

- Lyrics Chain-of-Thoughts:

Instead of generating a song in one chaotic burst, YuE “thinks” step by step (verse → chorus → bridge), guided by lyrical themes. This mimics human songwriting, ensuring structure and emotional flow.

- 3-Stage Training Scheme:

  - Stage 1: Learn basic audio patterns from 2 million music clips.

  - Stage 2: Fine-tune with lyrics-audio pairs to align words and melody.

  - Stage 3: Optimize for genre diversity (pop, rock, classical) and multilingual vocals (Mandarin, English, Spanish).

The result is a model designed to craft Billboard-worthy tracks while adhering to lyrical intent.
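The tokenizer idea above can be illustrated with a toy vector quantizer: chop a waveform into fixed-size frames and replace each frame with the index of its nearest codebook vector. This is a generic sketch of audio tokenization, not YuE’s tokenizer, which learns its codebooks and adds semantic features; the frame size and the tiny hand-written codebook below are hypothetical.

```python
from typing import List, Sequence

Vector = Sequence[float]


def frames(signal: Vector, frame_size: int) -> List[List[float]]:
    """Split a 1-D signal into non-overlapping frames, dropping leftover samples."""
    return [list(signal[i:i + frame_size])
            for i in range(0, len(signal) - frame_size + 1, frame_size)]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def tokenize(signal: Vector, codebook: Sequence[Vector], frame_size: int) -> List[int]:
    """Replace each frame with the index of its nearest codebook vector."""
    return [min(range(len(codebook)), key=lambda k: squared_distance(f, codebook[k]))
            for f in frames(signal, frame_size)]


def detokenize(tokens: Sequence[int], codebook: Sequence[Vector]) -> List[float]:
    """Approximate the original signal by concatenating the chosen codebook vectors."""
    out: List[float] = []
    for t in tokens:
        out.extend(codebook[t])
    return out


# Hypothetical 3-entry codebook over 2-sample frames: "silence", "up", "down".
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, -1.0]]
signal = [0.1, -0.1, 0.9, 1.2, -0.8, -1.1, 0.05]  # the last sample is dropped

tokens = tokenize(signal, codebook, frame_size=2)
print(tokens)                        # [0, 1, 2]
print(detokenize(tokens, codebook))  # [0.0, 0.0, 1.0, 1.0, -1.0, -1.0]
```

Six samples collapse into three integer tokens and the reconstruction is only approximate, which is the lossy trade-off the Morse-code analogy points at. A learned codec would train its codebooks on real audio instead of using hand-picked vectors.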
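The dual-token technique described above can be sketched as frame-level interleaving: at each time step, emit the vocal token and then the instrumental token, so two parallel tracks become one flat sequence that an unmodified decoder-only model like Llama can learn with ordinary next-token prediction. The function names and plain integer token IDs are hypothetical; YuE’s actual token layout may differ in detail.

```python
from typing import List, Tuple


def interleave(vocal: List[int], instrumental: List[int]) -> List[int]:
    """Merge two time-aligned token tracks into [v0, i0, v1, i1, ...]."""
    if len(vocal) != len(instrumental):
        raise ValueError("each track needs exactly one token per frame")
    merged: List[int] = []
    for v, i in zip(vocal, instrumental):
        merged.extend((v, i))
    return merged


def deinterleave(sequence: List[int]) -> Tuple[List[int], List[int]]:
    """Split a generated flat sequence back into vocal and instrumental tracks."""
    if len(sequence) % 2:
        raise ValueError("sequence must contain whole frames")
    return sequence[0::2], sequence[1::2]


vocal = [11, 12, 13]         # hypothetical vocal token IDs
instrumental = [71, 72, 73]  # hypothetical instrumental token IDs
flat = interleave(vocal, instrumental)
print(flat)                # [11, 71, 12, 72, 13, 73]
print(deinterleave(flat))  # ([11, 12, 13], [71, 72, 73])
```

Because the two tokens for a frame sit next to each other, every instrumental token is predicted with the matching vocal token already in context, which is what keeps the tracks in sync without any change to the model itself.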
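The step-by-step lyrics chain-of-thoughts idea above can be sketched as a loop over labeled lyric sections: split the lyrics on section tags such as `[verse]` and `[chorus]`, then generate each section conditioned on that section’s lyrics and on everything generated so far. The tag syntax, `parse_sections`, and the stand-in `fake_segment` function are hypothetical placeholders, not YuE’s API; a real system would call the language model where the stub returns dummy tokens.

```python
import re
from typing import Callable, List, Tuple

Section = Tuple[str, str]  # (section label, lyrics text)
SECTION_TAG = re.compile(r"^\[(\w+)\]\s*$")


def parse_sections(lyrics: str) -> List[Section]:
    """Split lyrics into (label, text) pairs using tag lines like '[verse]'."""
    sections: List[Section] = []
    label, lines = None, []
    for raw in lyrics.splitlines():
        line = raw.strip()
        match = SECTION_TAG.match(line)
        if match:
            if label is not None:
                sections.append((label, "\n".join(lines)))
            label, lines = match.group(1).lower(), []
        elif line and label is not None:
            lines.append(line)
    if label is not None:
        sections.append((label, "\n".join(lines)))
    return sections


def generate_song(lyrics: str,
                  generate_segment: Callable[[str, str, List[int]], List[int]]) -> List[int]:
    """Generate tokens section by section, each conditioned on all prior output."""
    history: List[int] = []
    for label, text in parse_sections(lyrics):
        history.extend(generate_segment(label, text, history))
    return history


def fake_segment(label: str, text: str, history: List[int]) -> List[int]:
    """Hypothetical stand-in for the model: one dummy token per lyric line."""
    return [len(history) + n for n in range(len(text.splitlines()))]


lyrics = """[verse]
Streetlights hum below
[chorus]
Sing it loud
Sing it clear
"""
print(parse_sections(lyrics))
# [('verse', 'Streetlights hum below'), ('chorus', 'Sing it loud\nSing it clear')]
print(generate_song(lyrics, fake_segment))  # [0, 1, 2]
```

Generating one section at a time keeps each step short while the growing history carries the song’s earlier material forward, which is the structural benefit the paragraph above describes.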
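The 3-stage training scheme above is a curriculum: each stage changes which data the model sees and what it is optimized for. A minimal sketch, assuming a hypothetical `Stage` record and example dictionaries with `lyrics`, `genre`, and `language` fields, shows how such a schedule might gate the data per stage; it mirrors the stage descriptions above and is not YuE’s training code.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterator, List, Tuple

Example = Dict[str, object]


@dataclass(frozen=True)
class Stage:
    name: str
    goal: str
    accepts: Callable[[Example], bool]  # which training examples this stage uses


# Hypothetical gating rules that follow the three stage descriptions.
STAGES = [
    Stage("stage1", "learn basic audio patterns", lambda ex: True),
    Stage("stage2", "align words and melody", lambda ex: bool(ex.get("lyrics"))),
    Stage("stage3", "broaden genres and vocal languages",
          lambda ex: bool(ex.get("lyrics"))
          and ex.get("genre") is not None
          and ex.get("language") is not None),
]


def curriculum(dataset: List[Example]) -> Iterator[Tuple[str, List[Example]]]:
    """Yield (stage name, examples for that stage) in training order."""
    for stage in STAGES:
        yield stage.name, [ex for ex in dataset if stage.accepts(ex)]


data = [
    {"audio": "a.wav"},                                                # audio only
    {"audio": "b.wav", "lyrics": "la la", "genre": "pop", "language": "en"},
    {"audio": "c.wav", "lyrics": "ni hao"},                            # no genre label
]
for name, examples in curriculum(data):
    print(name, len(examples))
# stage1 3
# stage2 2
# stage3 1
```

Each stage narrows to richer annotations, so the broad audio pool teaches sound first and the smaller labeled subsets teach alignment and diversity later.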

Open-Source vs. Closed Systems: A Ticking Time Bomb for Music

YuE’s release ignites a critical debate. Closed models like Suno.ai guard their tech to monetize subscriptions, but YuE’s open framework lets anyone fine-tune it for free. A student in Nairobi can generate Afrobeat anthems; an indie dev can build a punk-rock GPT. Yet this freedom comes with risks:

- Copyright chaos: If YuE was trained on unlicensed music, who owns the output? Universal Music’s lawsuit against Anthropic over song lyrics foreshadows the legal wars ahead.

- Cultural erasure: By some estimates, 90% of music AI training data is Western. YuE’s Chinese roots may help balance this, but bias persists.

- Creative disruption: Will AI democratize music or flood markets with generic tracks?

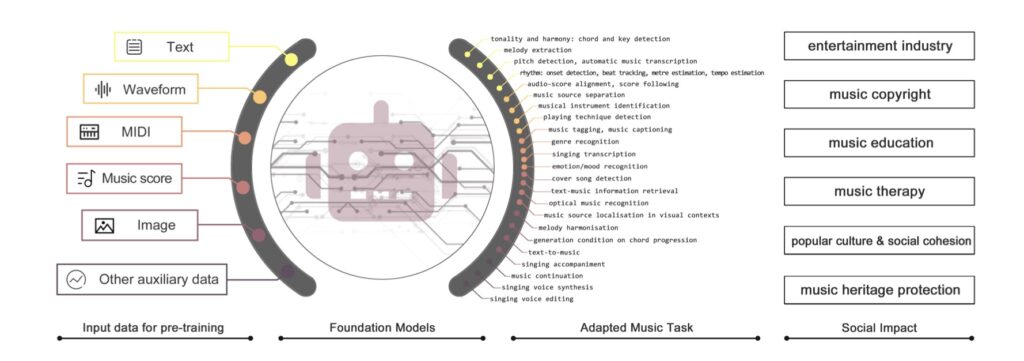

Music Agents, Ethical AI, and the End of “Composer’s Block”

YuE is just the beginning. The survey behind its development envisions “music agents”—AI that collaborates with humans, producing personalized soundtracks for games, therapy, or ads. Imagine a ChatGPT-like interface where you hum a melody, and YuE expands it into a symphony. But to get there, the field must address:

- Ethical guardrails: Transparent sourcing of training data, artist compensation, and bias audits.

- Interdisciplinary fusion: Combining musicology, AI, and ethics to preserve cultural diversity.

- Long-context modeling: Scaling to hour-long compositions (think film scores or operas).

Music’s Open-Source Revolution Has Begun

More than a tool, YuE is a manifesto. By open-sourcing what others lock behind paywalls, China challenges the West’s AI hegemony and forces a reckoning: will music remain a guarded commodity or evolve into a shared creative language? For artists, the stakes are existential; for listeners, the possibilities are endless. One thing is certain: Suno’s and Udio’s closed models just met their open-source nemesis.

Harmony or Dissonance?

YuE’s arrival marks a crossroads. For creators, it’s a liberating tool; for corporations, a disruptor; for ethicists, a Pandora’s box. As the line between human and machine-made music blurs, one truth remains: AI won’t replace artists—but artists who wield AI will replace those who don’t.