As the AI arms race intensifies, Google’s latest release signals a bold step toward a future of autonomous, multi-step AI assistants.

- Google has launched Gemini 2.0, its most advanced AI model suite yet, making it available to the public after months of testing and integration into its products.

- The release is part of a broader strategy to develop “AI agents” capable of completing complex, multi-step tasks autonomously, a goal shared by tech giants like Meta, Microsoft, and OpenAI.

- With features like native image and audio output, cost-efficient models, and advanced multimodality, Gemini 2.0 positions Google as a key player in the race to create universal AI assistants.

The artificial intelligence landscape is changing quickly, and Google is pushing to stay at the front of it. On Wednesday, the tech giant unveiled Gemini 2.0, its most powerful AI model suite to date and a milestone in its push to develop advanced AI agents. The release is more than a routine update: it is a strategic move in a high-stakes race among tech titans and startups to create AI systems that can think, plan, and act on behalf of users.

The Rise of AI Agents

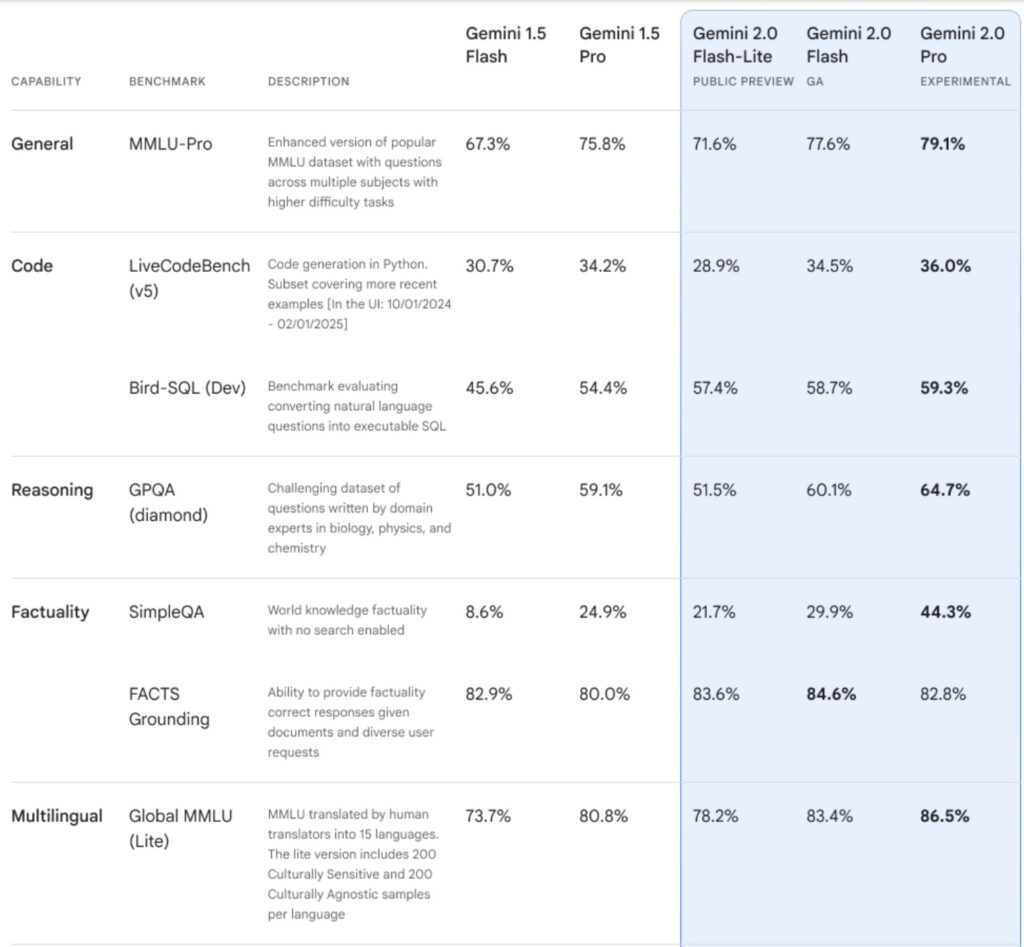

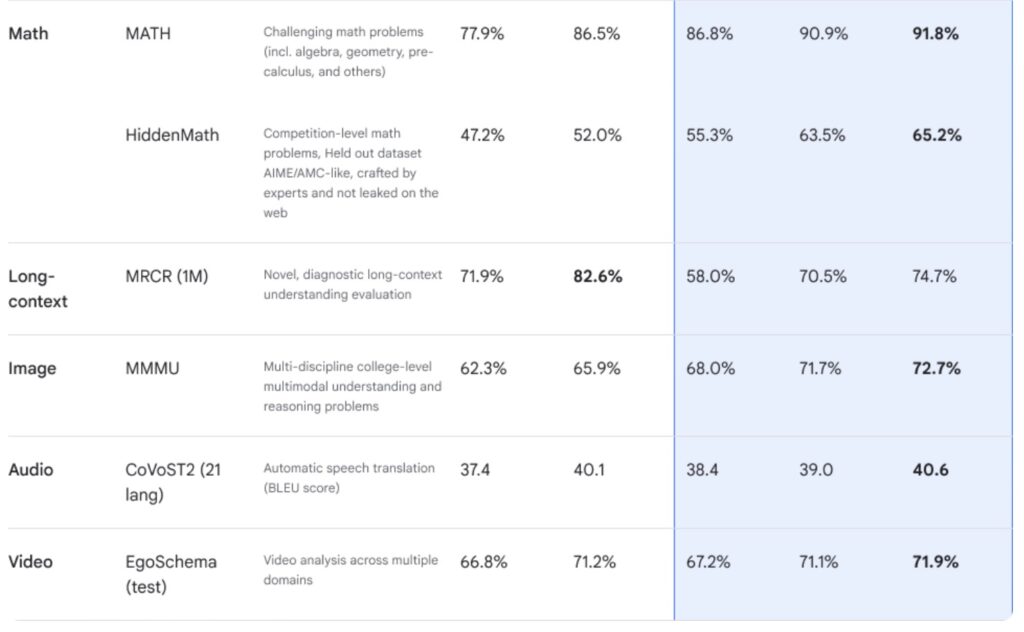

Google’s Gemini 2.0 is more than just a collection of models; it’s a glimpse into the future of AI-powered virtual assistants. The suite includes three key models: Gemini 2.0 Flash, designed for high-volume tasks; Gemini 2.0 Pro Experimental, optimized for coding; and Gemini 2.0 Flash-Lite, the most cost-efficient option yet. These models are engineered to handle a wide range of inputs, from text and images to video and audio, making them versatile tools for developers and businesses alike.

What sets Gemini 2.0 apart is its focus on “agentic AI,” meaning systems that can autonomously complete complex, multi-step tasks without requiring users to micromanage every step. This vision aligns with Google’s long-term goal of creating a “universal assistant” that can understand the world, think ahead, and take action on behalf of users. As Google stated in a December blog post, Gemini 2.0 introduces “new advances in multimodality” and “native tool use,” which the company says will enable more capable AI agents.

The AI Arms Race Heats Up

Google isn’t alone in its pursuit of agentic AI. Competitors like Meta, Amazon, Microsoft, OpenAI, and Anthropic are also racing to build AI systems that can perform intricate tasks autonomously. For instance, Anthropic, an Amazon-backed startup founded by ex-OpenAI executives, has developed AI agents capable of using computers like humans—interpreting screens, clicking buttons, entering text, and navigating websites. Jared Kaplan, Anthropic’s chief science officer, described these agents as capable of handling tasks with “tens or even hundreds of steps.”

Similarly, OpenAI recently introduced Operator, an AI agent designed to automate tasks such as planning vacations, filling out forms, and making reservations. The company also launched Deep Research, a tool that compiles complex research reports and analyzes user-defined topics. Google offers a tool of the same name, Deep Research, which it describes as a “research assistant” capable of exploring intricate subjects and generating reports on behalf of users.

These developments underscore a broader trend: the shift from single-task AI models to multi-functional, autonomous agents that can seamlessly integrate into our daily lives. As the competition intensifies, companies are investing heavily in research and development to stay ahead of the curve.

A Strategic Vision for the Future

Google’s release of Gemini 2.0 is part of a larger strategy to solidify its position as a leader in the AI space. The company has been steadily integrating AI into its products, from Search to productivity tools, and Gemini 2.0 represents the next phase of that effort. By making the models publicly available, Google is widening access to them and inviting developers to build on top of them.

CEO Sundar Pichai has emphasized the importance of execution in this competitive landscape. “In history, you don’t always need to be first, but you have to execute well and really be the best in class as a product,” he said during a strategy meeting. “I think that’s what 2025 is all about.”

The Road Ahead

As AI continues to advance, the possibilities are both exciting and daunting. On one hand, AI agents like those powered by Gemini 2.0 promise to revolutionize industries, streamline workflows, and enhance productivity. On the other hand, they raise important questions about privacy, security, and the ethical implications of autonomous systems.

For now, Google’s Gemini 2.0 represents a significant step in the quest to create intelligent, autonomous AI agents. With its cost-efficient models and focus on multimodality, the suite is positioned to shape where AI goes next and to bring the idea of a universal assistant one step closer.

One thing is clear: the future of technology will be defined by those who can innovate, execute, and deliver products that change the way we live and work. With Gemini 2.0, Google is signaling that it intends to be one of them.