How t-SNE Plots and Embedding Analysis Reveal the Hidden ‘Phases’ of LLM Reasoning

- A novel visualization technique transforms AI “chains of thought” into animated embeddings, exposing patterns in how models like R1 process information.

- Three distinct reasoning phases emerge—search, thinking, and concluding—marked by fluctuations in conceptual “jumps” between ideas.

- Interpretability tools like this could democratize AI safety research, moving beyond abstract theory to tangible analysis of how models reason.

Peering Into the Black Box: Why Visualizing AI Thought Matters

As large language models (LLMs) grow more advanced, understanding their internal reasoning—not just their outputs—has become critical. In a fascinating experiment, researchers animated the “thought process” of an AI model (R1) as it answered a simple question: “Describe how a bicycle works.” The resulting visualizations, built using text embeddings and dimensionality reduction techniques, offer a rare glimpse into how machines might “think.”

This work bridges the gap between AI’s technical complexity and human interpretability. By converting abstract reasoning steps into intuitive animations, we gain tools to analyze, critique, and ultimately improve AI systems—a vital step for safety and transparency.

From Text to Trajectory: How to Animate Machine Reasoning

The experiment’s methodology is as elegant as it is insightful:

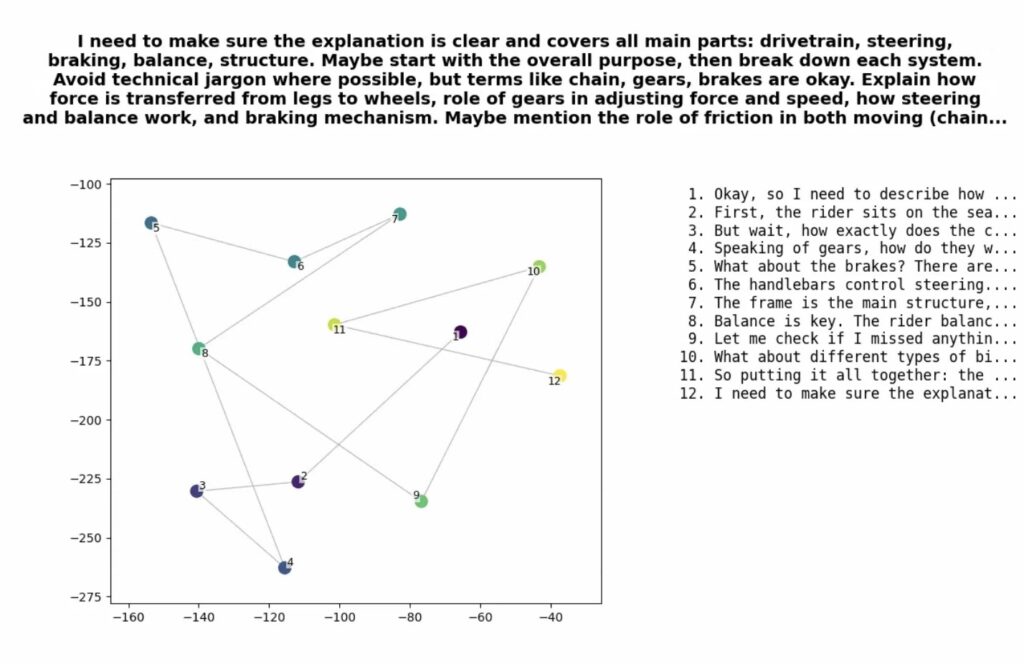

- Capturing Chains of Thought: When R1 answers a question, it generates intermediate reasoning steps (e.g., “First, recall bicycle components… then explain force transmission…”). These steps were logged as text.

- Embedding the Thoughts: Using OpenAI’s API, each text snippet was converted into a high-dimensional vector (embedding), numerically representing its semantic meaning.

- Plotting the “Mind Map”: t-SNE, a dimensionality reduction algorithm, transformed these embeddings into 2D coordinates. Animating them sequentially revealed R1’s conceptual trajectory.
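
The projection step above can be sketched in a few lines. This is a minimal illustration, not the experiment's actual code: it assumes scikit-learn's `TSNE`, and it uses random vectors as stand-ins for the real embeddings so that it runs without an API key. The helper name `project_to_2d`, the perplexity value, and the 64-dimensional stand-in vectors are all assumptions made for this example.

```python
import numpy as np
from sklearn.manifold import TSNE


def project_to_2d(embeddings, perplexity=5.0, seed=0):
    """Reduce (n_steps, n_dims) embeddings to (n_steps, 2) points with t-SNE.

    perplexity must be smaller than the number of steps; 5.0 is an
    illustrative choice for a short chain of thought, not a tuned value.
    """
    embeddings = np.asarray(embeddings, dtype=float)
    tsne = TSNE(
        n_components=2,
        perplexity=perplexity,
        init="pca",
        random_state=seed,
    )
    return tsne.fit_transform(embeddings)


# Stand-in data: 12 reasoning steps with 64-dimensional random "embeddings".
# In the experiment these vectors would come from an embedding API instead.
rng = np.random.default_rng(0)
fake_embeddings = rng.normal(size=(12, 64))

# Row i of `trajectory` is the 2D position of reasoning step i.
trajectory = project_to_2d(fake_embeddings)
```

Drawing the rows of `trajectory` one at a time, in step order, gives the kind of animated path described above.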

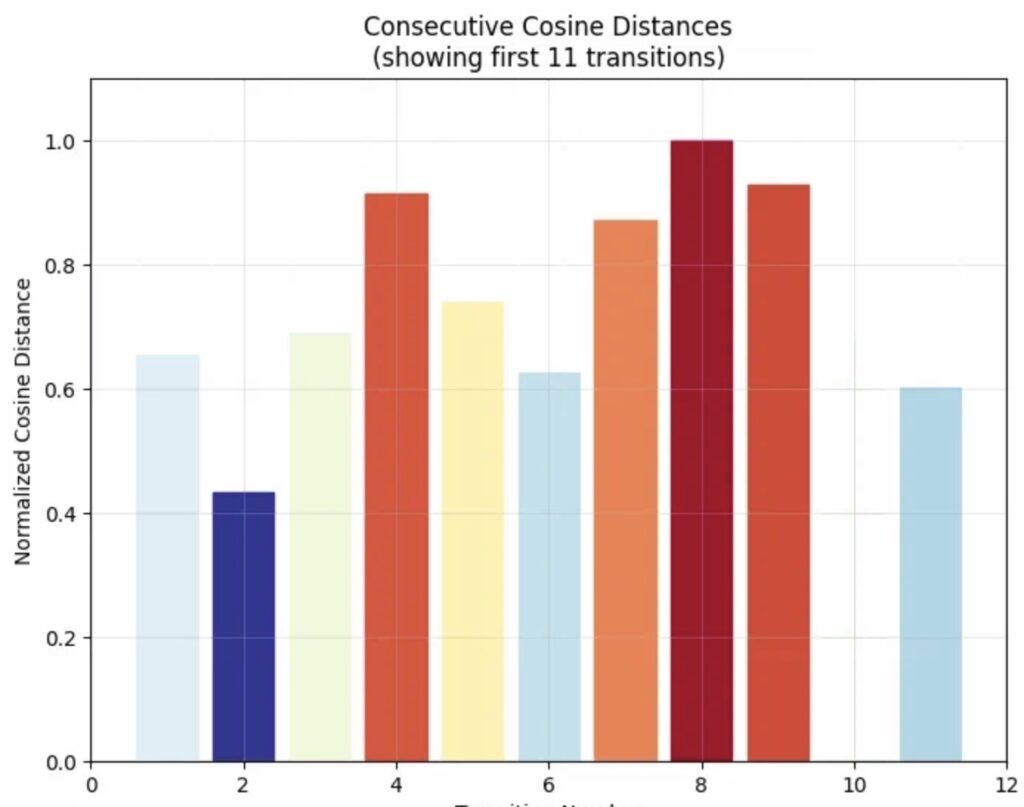

The team also analyzed consecutive distances (how “far” the model’s focus shifted from one step to the next) using cosine similarity and Euclidean distance. Because t-SNE preserves local neighborhoods rather than global distances, the 2D plots are best read as an intuitive picture; distances computed on the original embeddings give the more reliable measure of conceptual shifts.
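
As a rough sketch of the consecutive-distance idea, the snippet below measures the jump between each pair of neighboring steps in two ways. The function names and the tiny 2D vectors are invented for illustration; real embeddings have hundreds or thousands of dimensions.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity: 0 for the same direction, 1 for orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def euclidean_distance(a, b):
    """Straight-line distance between two vectors."""
    return math.dist(a, b)


def consecutive_distances(embeddings, metric):
    """Distance from each step to the next one, in order."""
    return [metric(p, q) for p, q in zip(embeddings, embeddings[1:])]


# Toy "embeddings" for three reasoning steps (made-up values).
steps = [[1.0, 0.0], [0.0, 1.0], [0.0, 2.0]]

cos_jumps = consecutive_distances(steps, cosine_distance)
euc_jumps = consecutive_distances(steps, euclidean_distance)
```

In this toy case the second jump has a cosine distance of zero, because the two vectors point the same way, while its Euclidean distance is nonzero because their lengths differ. That difference is one reason to compare both metrics.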

The Three Phases of Machine Reasoning: Search, Think, Conclude

The animations exposed a rhythmic pattern in R1’s reasoning:

- Phase 1: Search (Large conceptual jumps)

The model rapidly explores ideas, cycling through related concepts like balance, pedals, and gears, which suggests an initial brainstorming phase.

- Phase 2: Stabilization (Smaller, deliberate steps)

Jumps between embeddings shorten as R1 settles into a coherent narrative, linking components into a functional explanation.

- Phase 3: Conclusion (A spike, then resolution)

A final leap occurs as R1 transitions from detailed mechanics to a succinct summary, followed by a drop in activity, akin to a human writer polishing a conclusion.

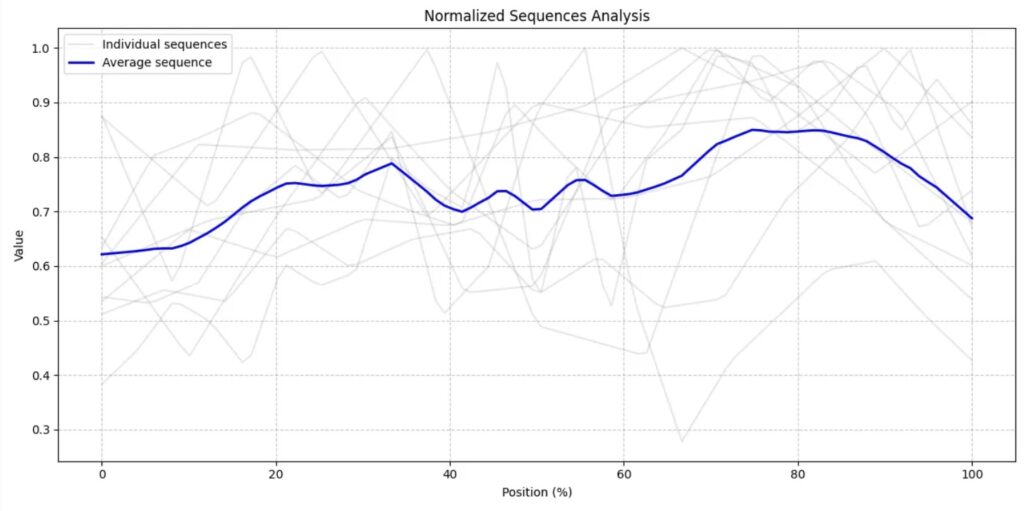

Aggregate data from 10 samples hinted at this three-stage cycle, though the researchers caution against overinterpretation: “This could be noise, or it might reflect a deeper structure in how LLMs organize knowledge,” the write-up notes.
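
The source does not say how the samples were combined. One plausible approach, sketched here purely as an assumption, is to resample each run's jump sizes onto a common relative timeline (from start to finish of the chain) and average them point by point. The function names and the numbers in `runs` are invented for illustration.

```python
def resample(series, n_points):
    """Linearly interpolate a series onto n_points evenly spaced positions.

    Assumes n_points >= 2. Position 0 maps to the first value and the last
    position maps to the final value, so runs of different lengths line up.
    """
    if len(series) == 1:
        return [series[0]] * n_points
    last = len(series) - 1
    out = []
    for i in range(n_points):
        pos = i * last / (n_points - 1)
        lo = int(pos)
        hi = min(lo + 1, last)
        frac = pos - lo
        out.append(series[lo] * (1 - frac) + series[hi] * frac)
    return out


def mean_profile(all_series, n_points=5):
    """Average several jump-size series after aligning them in relative time."""
    resampled = [resample(s, n_points) for s in all_series]
    return [sum(column) / len(column) for column in zip(*resampled)]


# Two made-up runs of consecutive jump sizes with different lengths.
runs = [
    [0.8, 0.2, 0.6],
    [1.0, 0.6, 0.2, 0.2, 0.4],
]
profile = mean_profile(runs, n_points=3)
```

A profile that starts high, dips, and then rises again near the end would be consistent with the search, stabilization, and conclusion pattern, although with only a handful of runs such a shape could easily be noise.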

Why This Matters: Simplicity as a Path to AI Safety

The project’s most significant contribution isn’t its technical novelty but its philosophy. As the researcher observes: “A.I. safety enthusiasts sometimes miss the forest for the trees.” While complex interpretability frameworks exist, accessible tools like thought animations empower broader audiences to engage with AI’s inner workings.

Imagine educators using such visualizations to debug student-facing tutors, or regulators auditing models for biased reasoning patterns. By making AI’s abstract “cognition” tangible, we foster trust and accountability.

From Bicycles to Bigger Models

This experiment leaves tantalizing questions: Do all LLMs follow similar reasoning phases? How do thought patterns shift with model size or task complexity? Early signs suggest larger models might exhibit more structured trajectories, but validation requires scaling the approach.

One thing is clear: as AI evolves, so must our tools to understand it. By transforming embeddings into animations, we’ve taken a small but profound step toward demystifying machine minds—and ensuring they remain aligned with our own.