How Wearable Glasses and Multimodal Data Are Pioneering the Future of Personal Efficiency

- A 300-Hour Window into Daily Life: The EgoLife Dataset captures six participants’ lives via AI glasses and third-person cameras, offering a rare, annotated glimpse into interpersonal dynamics.

- Beyond Memory: AI That Answers Life’s Questions: EgoLifeQA tasks enable practical assistance, from health monitoring to personalized recommendations.

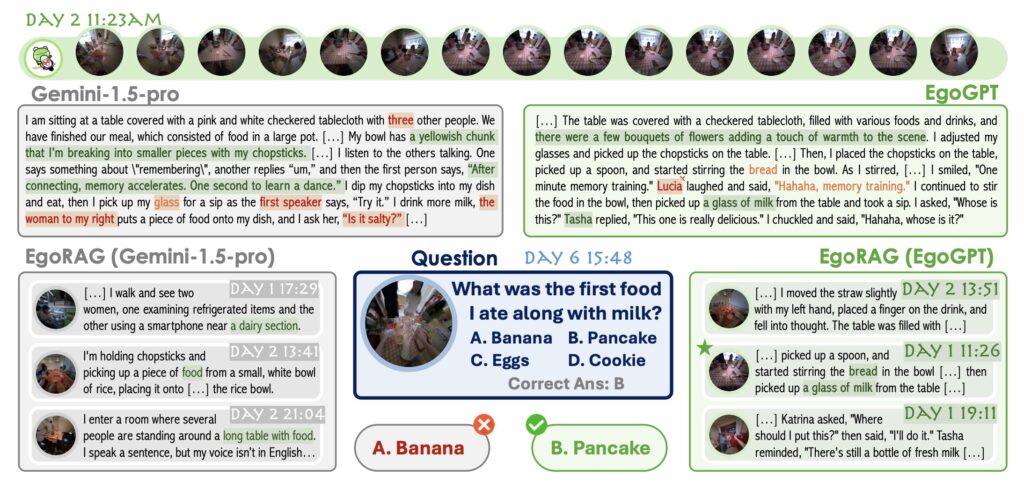

- EgoButler: The Brains Behind the Glasses: Combines EgoGPT’s omni-modal understanding and EgoRAG’s long-context retrieval to solve real-world challenges.

What if your glasses could remind you to buy milk on the way home, suggest a recipe based on your dietary habits, or even nudge you to call a friend you haven’t spoken to in weeks? This is the vision behind EgoLife, a groundbreaking project developing an AI-powered egocentric life assistant. By merging wearable technology, multimodal data, and advanced AI, EgoLife aims to transform how we navigate daily life—not just as individuals, but as interconnected members of families, workplaces, and communities.

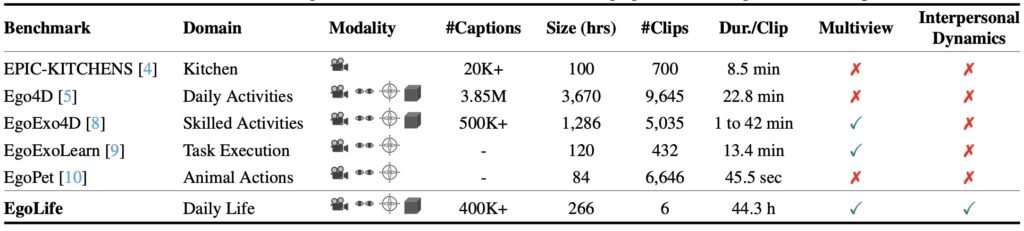

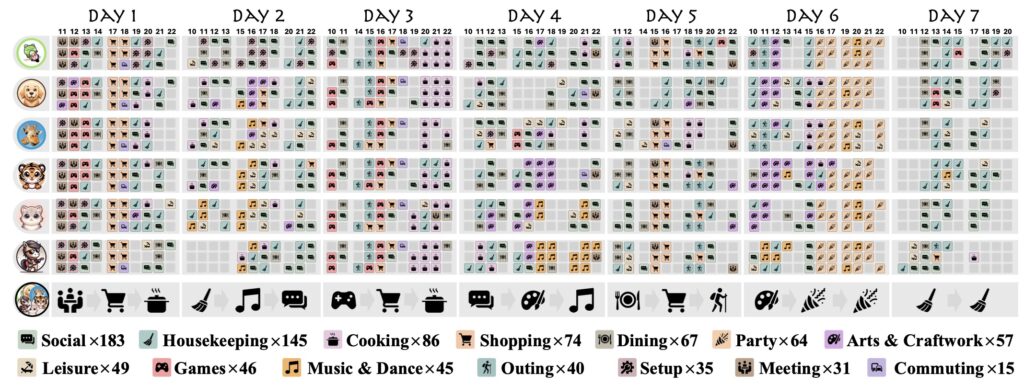

The EgoLife Dataset: A 300-Hour Mirror of Human Behavior

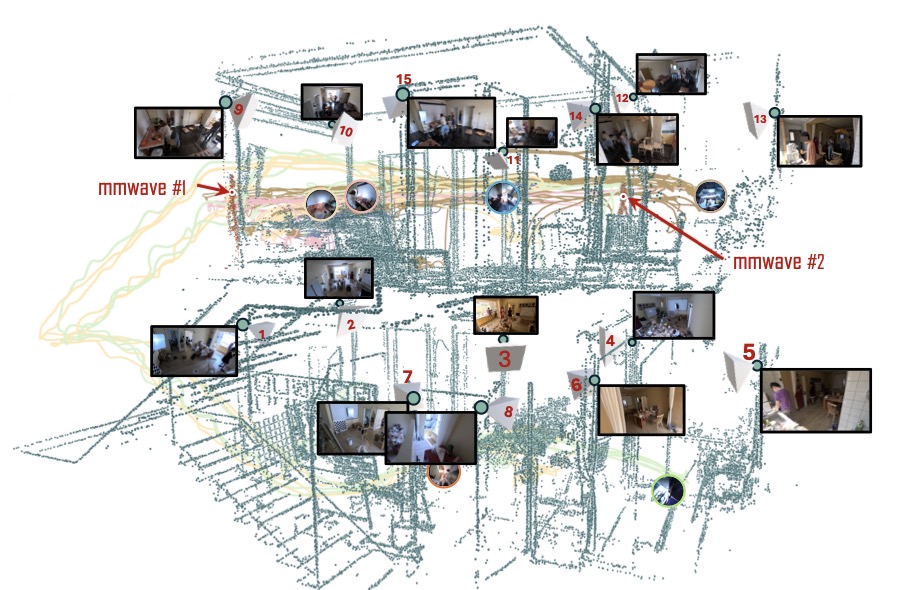

To build an AI that truly understands human life, researchers first needed to see life through our eyes—literally. Six participants lived together for a week, wearing AI glasses that recorded their every interaction: cooking meals, shopping, debating TV shows, and even moments of quiet reflection. Synchronized third-person cameras, millimeter-wave radar, and WiFi signals added layers of context, creating the EgoLife Dataset—a 300-hour treasure trove of egocentric video, audio, and sensor data, meticulously annotated for activity, emotion, and social dynamics.

This dataset isn’t just large; it’s rich. It captures the messy, unscripted reality of shared living: how people interrupt each other, collaborate on tasks, or unconsciously mimic gestures. For AI researchers, it’s a goldmine for studying synchronized human behavior and ego-exo alignment, that is, matching what a wearer sees in first person with what the third-person cameras record of the same moment.
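To make "synchronized" concrete, here is a minimal sketch of one way first-person and third-person frames could be paired by timestamp. It is an illustration only, not the EgoLife team's pipeline: the function name `align_nearest`, the `tolerance` parameter, and the sample timestamps are all hypothetical.

```python
from bisect import bisect_left

def align_nearest(ego_ts, exo_ts, tolerance=0.05):
    """Pair each ego timestamp with the nearest exo timestamp.

    Both lists hold seconds and must be sorted ascending. A pair is kept
    only if the two timestamps differ by at most `tolerance` seconds.
    Returns a list of (ego_index, exo_index) tuples.
    """
    pairs = []
    for i, t in enumerate(ego_ts):
        j = bisect_left(exo_ts, t)
        # The nearest exo frame is one of the two neighbors of position j.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(exo_ts)]
        if not candidates:
            continue
        best = min(candidates, key=lambda k: abs(exo_ts[k] - t))
        if abs(exo_ts[best] - t) <= tolerance:
            pairs.append((i, best))
    return pairs

# Hypothetical timestamps: glasses frames vs. a wall-mounted camera.
ego = [0.00, 0.05, 0.10, 0.15]
exo = [0.01, 0.11, 0.50]
pairs = align_nearest(ego, exo, tolerance=0.02)
print(pairs)
```

Frames with no close-enough counterpart are simply dropped, which is one reasonable choice when the two devices record at different rates.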

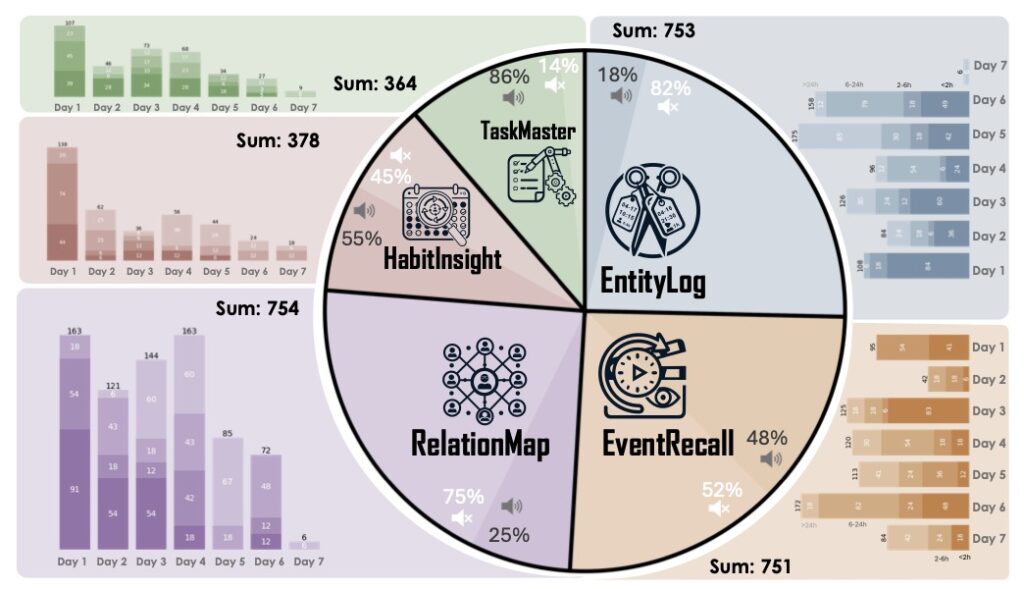

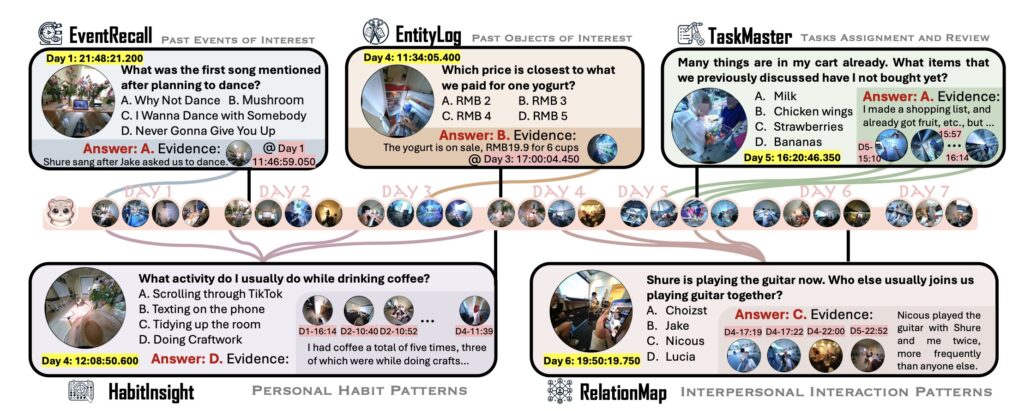

EgoLifeQA: Your AI Life Coach

How do you turn raw data into actionable insights? Enter EgoLifeQA, a suite of tasks designed to answer questions like:

- “Did I take my medication yesterday?”

- “What was the recipe we tried last Tuesday?”

- “How much screen time did I have this week compared to my partner?”

These aren’t simple queries. They require parsing hours of video, recognizing faces, interpreting mumbled conversations, and connecting events across days. Traditional AI struggles with such “ultra-long-context” challenges. EgoLifeQA is designed to test whether models can spot patterns, such as noticing that you always forget sunscreen on grocery runs, and offer timely, personalized nudges.

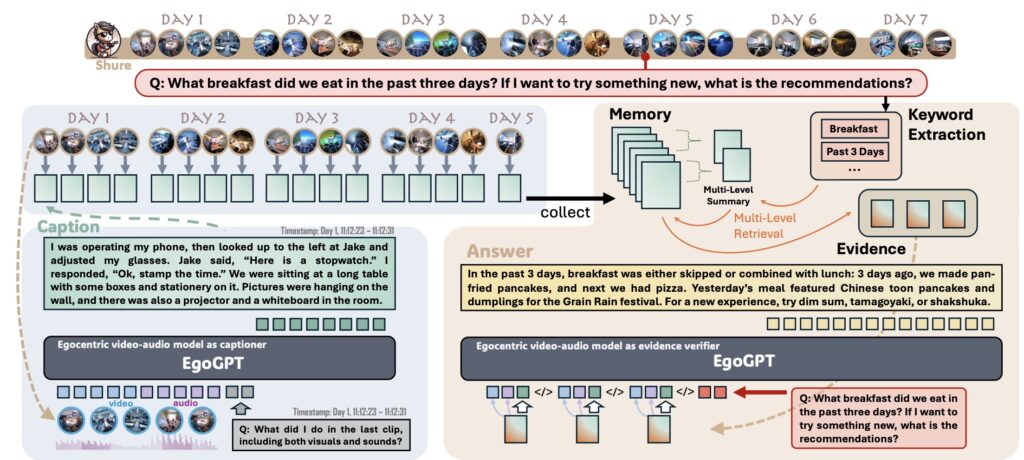

The Engine Powering the Vision

Building an assistant this sophisticated demands solving three thorny problems:

- Egocentric Data Complexity: First-person video is shaky, occluded, and full of motion blur. EgoGPT, an omni-modal model trained on egocentric data, outperforms existing systems in recognizing activities like chopping vegetables or reading subtitles on a distant screen.

- Identity Recognition: In group settings, knowing who did what is crucial. EgoButler uses voice fingerprinting and gait analysis to distinguish between participants, even when faces are hidden.

- Memory Over Months: Answering “Did I exercise enough this month?” requires sifting through weeks of data. EgoRAG, a retrieval-augmented system, acts like a photographic memory bank, pulling relevant clips from terabytes of footage in seconds.

Early tests reveal both promise and hurdles: EgoGPT excels at short-term tasks (e.g., “Find my keys”) but struggles with abstract reasoning (e.g., “Why was I stressed last Tuesday?”). Meanwhile, EgoRAG’s accuracy drops when queries span multiple sensory modalities, highlighting the need for better cross-modal integration.
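The retrieval idea behind a memory bank like this can be sketched in a few lines. The example below is a toy under stated assumptions, not EgoRAG itself: it assumes each clip already has a text caption, it ranks clips by plain word overlap where a real system would more likely use learned embeddings, and the names `Clip`, `tokenize`, and `retrieve` are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Clip:
    day: int        # day index within the recording period
    start_s: float  # clip start, in seconds since midnight
    caption: str    # text description of the clip (assumed to exist)

def tokenize(text):
    """Lowercase the text and split it into a set of punctuation-free words."""
    return {w.strip(".,?!").lower() for w in text.split() if w.strip(".,?!")}

def retrieve(clips, query, day=None, top_k=2):
    """Return up to top_k clips ranked by word overlap with the query.

    If `day` is given, only clips from that day are considered, which
    narrows the search before any scoring happens.
    """
    q = tokenize(query)
    pool = [c for c in clips if day is None or c.day == day]
    scored = [(len(q & tokenize(c.caption)), c) for c in pool]
    scored = [(s, c) for s, c in scored if s > 0]
    # Highest overlap first; ties go to the earlier clip.
    scored.sort(key=lambda sc: (-sc[0], sc[1].day, sc[1].start_s))
    return [c for _, c in scored[:top_k]]

# Hypothetical memory bank of captioned clips.
memory = [
    Clip(1, 8 * 3600, "I took my medication with breakfast"),
    Clip(1, 19 * 3600, "We cooked pasta and watched TV"),
    Clip(2, 9 * 3600, "I went for a run in the park"),
    Clip(2, 13 * 3600, "I took a nap after lunch"),
]

hits = retrieve(memory, "Did I take my medication?", day=1)
for clip in hits:
    print(clip.day, clip.start_s, clip.caption)
```

Filtering by day before scoring is the key design choice here: a question about “yesterday” only needs to search one day of footage, which keeps the candidate pool small no matter how long the full recording runs.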

From Dataset to Daily Companion

EgoLife isn’t just about technology—it’s about redefining human-AI collaboration. Future iterations could predict conflicts by analyzing tone and body language, or suggest family activities based on everyone’s interests. The team’s decision to open-source the dataset, models, and benchmarks invites global researchers to tackle unanswered questions: How do cultural norms affect behavior patterns? Can AI respect privacy while still being helpful?

A New Era of Empathetic AI

The EgoLife project marks a leap toward AI that doesn’t just compute but comprehends the subtle rhythms of work, the unspoken rules of shared spaces, and the quiet growth of habits. As lead researcher Dr. Jane Luo notes, “We’re not building a tool. We’re building a companion.” Whether it’s helping a parent manage a hectic household or aiding a therapist in understanding a patient’s routines, EgoLife’s vision of AI as a seamless, empathetic partner in human life is no longer science fiction. It’s a dataset, a model, and a pair of smart glasses away.