How Google DeepMind’s Gemini 2.0-Powered Models Are Redefining Robotics Through Embodied Reasoning and Humanoid Partnerships

- Two Breakthrough Models: Gemini Robotics (vision-language-action) and Gemini Robotics-ER (embodied reasoning) enable robots to generalize tasks, adapt dynamically, and interact safely in real-world environments.

- Human-like Capabilities: Advanced spatial understanding, multilingual interactivity, and fine motor skills let robots tackle complex tasks like origami folding and packing, and replan in real time.

- Safety & Collaboration: A layered safety framework, partnerships with robotics leaders like Apptronik, and the release of the ASIMOV dataset aim to responsibly advance AI-powered robotics.

For years, AI breakthroughs have dazzled in the digital realm, processing text, images, and video with human-like fluency. Yet the leap into the physical world, where AI must handle unpredictability, spatial complexity, and real-time interaction, has remained elusive. Google DeepMind is now tackling this challenge head-on with Gemini Robotics and Gemini Robotics-ER, two models built on the Gemini 2.0 foundation. They mark a pivotal shift: AI isn’t just thinking, it’s acting.

Gemini Robotics: The Trio of Generality, Interactivity, and Dexterity

To build robots that are genuinely helpful, Google DeepMind prioritized three core qualities:

Generality:

Unlike rigid, task-specific robots, Gemini Robotics thrives in novel scenarios. Trained on diverse datasets, it more than doubles the performance of other state-of-the-art vision-language-action models on a comprehensive generalization benchmark. Whether it encounters new objects, environments, or multilingual instructions, it adapts “out of the box.” For example, it can pivot from folding origami to packing a snack bag without retraining, a step toward general-purpose robotics.

Interactivity:

In dynamic settings, robots must respond to shifting commands and environments. Leveraging Gemini 2.0’s language understanding, the model follows conversational prompts (e.g., “Hand me the blue tool, but avoid the glass”) and adjusts its actions mid-task. If an object slips from its grasp or a person moves an item, it quickly replans and carries on, a critical skill for kitchens, factories, or hospitals.
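The replanning behavior described above can be illustrated with a generic closed-loop pattern: re-observe the world and rebuild the plan before every action, so a disturbance is absorbed on the next cycle. This is a toy sketch, not Gemini Robotics’ actual control stack; the `Step`, `plan`, and `run_closed_loop` names and the dictionary-based world state are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    """One hypothetical pick-and-place action: move `obj` to `target`."""
    obj: str
    target: str


def plan(world: dict[str, str], goal: dict[str, str]) -> list[Step]:
    """Naive planner: one move for every object not yet at its goal location."""
    return [Step(obj, tgt) for obj, tgt in goal.items() if world.get(obj) != tgt]


def run_closed_loop(
    world: dict[str, str],
    goal: dict[str, str],
    disturbances: dict[int, tuple[str, str]],
    max_cycles: int = 20,
) -> list[Step]:
    """Re-observe and replan every cycle; return the actions actually executed."""
    executed: list[Step] = []
    for cycle in range(max_cycles):
        # Simulated disturbance, e.g. a person moves an item mid-task.
        if cycle in disturbances:
            obj, location = disturbances[cycle]
            world[obj] = location
        steps = plan(world, goal)  # replan from the freshly observed state
        if not steps:
            return executed  # goal reached
        step = steps[0]
        world[step.obj] = step.target  # execute only the first step, then loop
        executed.append(step)
    raise RuntimeError("goal not reached within max_cycles")


world = {"banana": "table", "cup": "table"}
goal = {"banana": "bowl", "cup": "tray"}
# After the banana is placed (cycle 0), someone moves it back to the table.
actions = run_closed_loop(world, goal, disturbances={1: ("banana", "table")})
print([f"{s.obj}->{s.target}" for s in actions])
# ['banana->bowl', 'banana->bowl', 'cup->tray']
```

Executing only the first step of each fresh plan is what lets the loop recover: the banana is placed twice because the second plan sees it back on the table.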

Dexterity:

Precision tasks like threading a needle or grasping a coffee mug by its handle have long stumped robots. Gemini Robotics combines advanced motor control with spatial reasoning, enabling delicate multi-step actions. Partnering with Apptronik, Google DeepMind is testing these skills on Apollo, a humanoid robot designed for real-world chores.

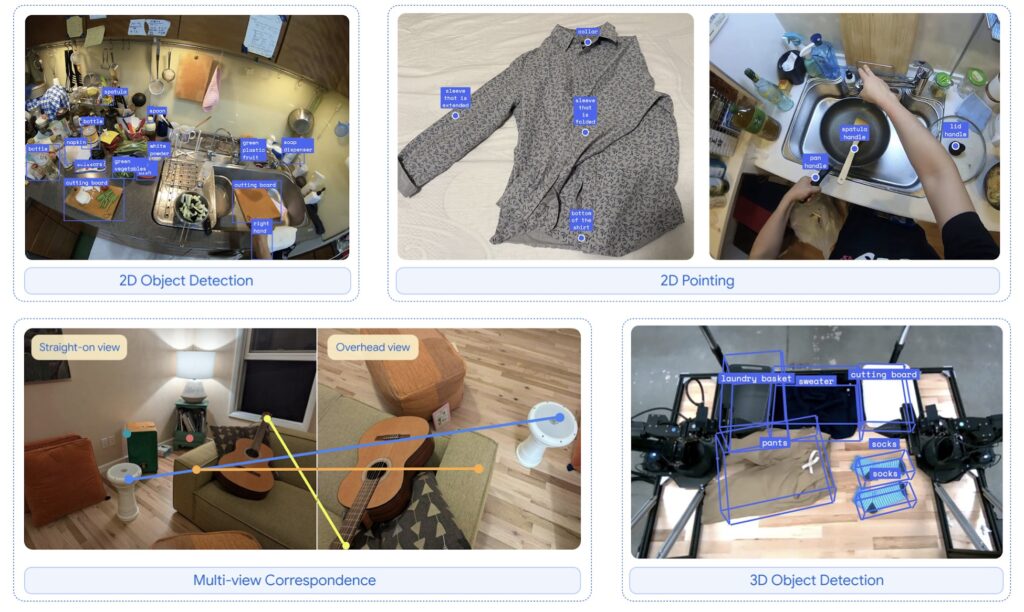

Gemini Robotics-ER: Supercharging Spatial Reasoning

While Gemini Robotics excels at direct control, Gemini Robotics-ER (Embodied Reasoning) focuses on spatial intelligence for roboticists. It strengthens Gemini 2.0’s abilities in 3D detection, trajectory planning, and code generation, achieving a 2x-3x higher success rate than Gemini 2.0 in end-to-end settings. For instance, when shown a coffee mug, it can infer an appropriate two-finger grasp for picking it up by the handle and a safe trajectory for approaching it. Where generated code alone falls short, it can use in-context learning, following the patterns of a handful of human demonstrations.
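The mug example can be made concrete with a simple geometric sketch: filter candidate grasp poses by whether the object fits between the two open fingers, pick the highest-scoring one, then approach from a pose above it so the fingers descend vertically instead of sweeping into the mug. This is an illustrative heuristic, not how Gemini Robotics-ER computes grasps; the `GraspCandidate` fields, quality scores, gripper aperture, and mug dimensions are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

Point = tuple[float, float, float]  # (x, y, z) in metres


@dataclass(frozen=True)
class GraspCandidate:
    name: str
    center: Point   # where the two fingertips should close
    width: float    # object thickness between the fingers, metres
    quality: float  # hypothetical 0..1 score, e.g. from a perception model


def select_grasp(candidates: list[GraspCandidate], max_aperture: float,
                 margin: float = 0.005) -> Optional[GraspCandidate]:
    """Keep grasps the open gripper can straddle with `margin` per side; return the best."""
    feasible = [c for c in candidates if c.width + 2 * margin <= max_aperture]
    return max(feasible, key=lambda c: c.quality, default=None)


def lerp(a: Point, b: Point, t: float) -> Point:
    """Linear interpolation between two points; t=1 returns b exactly."""
    return tuple((1 - t) * ai + t * bi for ai, bi in zip(a, b))


def approach_path(start: Point, grasp: Point, lift: float = 0.10,
                  steps: int = 5) -> list[Point]:
    """Waypoints to a pre-grasp pose `lift` m above the grasp, then a vertical descent."""
    pre_grasp = (grasp[0], grasp[1], grasp[2] + lift)
    path = [lerp(start, pre_grasp, i / steps) for i in range(1, steps + 1)]
    path.append(grasp)  # final straight-down move onto the grasp point
    return path


mug = [
    GraspCandidate("body", (0.40, 0.10, 0.05), width=0.085, quality=0.6),
    GraspCandidate("handle", (0.45, 0.10, 0.05), width=0.012, quality=0.9),
    GraspCandidate("rim", (0.40, 0.14, 0.10), width=0.006, quality=0.4),
]
best = select_grasp(mug, max_aperture=0.08)  # body is too wide for the gripper
path = approach_path(start=(0.0, 0.0, 0.30), grasp=best.center)
print(best.name, len(path))  # handle 6
```

A real planner would also check the whole arm for collisions; this sketch only keeps the fingertips above the grasp height until the final descent.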

Safety First: Building Trust in AI-Powered Robots

Physical robots introduce unique risks, from collisions to unintended force. Google DeepMind addresses this with a multi-layered approach:

- Low-Level Safety: Classic measures like collision avoidance and limits on contact force are handled by low-level, safety-critical controllers specific to each robot, which Gemini Robotics-ER can interface with.

- Semantic Safety: Inspired by Asimov’s Three Laws of Robotics, the team developed a framework for “data-driven constitutions.” These natural-language rules guide robots to prioritize human safety and ethical alignment.

- The ASIMOV Dataset: A new dataset lets researchers rigorously measure the safety implications of robotic actions in real-world scenarios, fostering industry-wide safety advancements.
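The layered approach above can be sketched as two checks in sequence: a semantic layer that vetoes actions violating written rules, followed by a low-level layer that clamps force and speed. Here each natural-language rule is paired with a hand-written predicate as a stand-in; in the approach described above, the rules are expressed in natural language and would be interpreted by a model, not by lambdas. The `Action` fields, the rules, and the numeric limits are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Callable, Optional


@dataclass(frozen=True)
class Action:
    verb: str
    obj: str
    near_human: bool
    force_n: float    # commanded gripper force, newtons
    speed_mps: float  # commanded end-effector speed, metres per second


SHARP = {"knife", "scissors"}

# Hypothetical constitution: (natural-language rule, predicate flagging a violation).
CONSTITUTION: list[tuple[str, Callable[[Action], bool]]] = [
    ("Do not move sharp objects close to a person.",
     lambda a: a.obj in SHARP and a.near_human),
    ("Do not push or strike a person.",
     lambda a: a.verb in {"push", "strike"} and a.obj == "person"),
]


def semantic_violations(action: Action) -> list[str]:
    """Semantic layer: return the text of every rule the action breaks."""
    return [text for text, violates in CONSTITUTION if violates(action)]


def apply_low_level_limits(action: Action, max_force_n: float = 20.0,
                           max_speed_mps: float = 1.0,
                           max_speed_near_human_mps: float = 0.25) -> Action:
    """Low-level layer: clamp force, and clamp speed harder when a person is near."""
    speed_cap = max_speed_near_human_mps if action.near_human else max_speed_mps
    return replace(action, force_n=min(action.force_n, max_force_n),
                   speed_mps=min(action.speed_mps, speed_cap))


def safety_filter(action: Action) -> tuple[Optional[Action], list[str]]:
    violations = semantic_violations(action)
    if violations:
        return None, violations  # vetoed: never reaches the motors
    return apply_low_level_limits(action), []


blocked, reasons = safety_filter(Action("hand_over", "knife", True, 5.0, 0.2))
print(blocked, reasons)  # None ['Do not move sharp objects close to a person.']
cmd, _ = safety_filter(Action("hand_over", "cup", True, 50.0, 1.0))
print(cmd.force_n, cmd.speed_mps)  # 20.0 0.25
```

The semantic veto runs first so a forbidden action is rejected outright, while the clamp still enforces physical limits on every action the semantic layer allows.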

Collaboration: The Path to Real-World Impact

Google DeepMind is partnering with robotics pioneers to test and refine these models:

- Apptronik: Building the next generation of humanoid robots with Gemini 2.0, including Apptronik’s Apollo, for logistics and household tasks.

- Trusted Testers: Boston Dynamics, Agility Robotics, and others are exploring Gemini Robotics-ER’s potential on their platforms.

- Responsible Innovation: Internal councils and external experts guide ethical deployment, ensuring societal benefits outweigh risks.

The Future of Embodied AI

Gemini Robotics is more than a technical milestone; it’s a vision of AI as a tangible collaborator. Imagine robots that assist in disaster recovery, elder care, or sustainable manufacturing, blending human-like intuition with machine precision. By prioritizing adaptability, safety, and partnerships, Google DeepMind is laying the groundwork for robots that don’t just exist in labs but enrich everyday life.

As these models evolve, one truth becomes clear: the line between digital intelligence and physical agency is vanishing. Welcome to the era of embodied AI.