The Smartest Models Yet with Full Tool Access and Unmatched Reasoning Power

- OpenAI o3 and o4-mini are the latest and most advanced models in the o-series, offering groundbreaking reasoning abilities and full tool integration within ChatGPT, including web search, data analysis, and image generation.

- These models excel in complex problem-solving across coding, math, science, and visual perception, setting new standards in academic benchmarks and real-world applications.

- With enhanced conversational naturalness, personalized responses, and agentic tool use, o3 and o4-mini pave the way for a more autonomous and versatile ChatGPT experience.

Artificial intelligence has taken a significant step forward with the release of OpenAI o3 and o4-mini, the latest additions to the o-series of models. These are more than incremental updates: they combine state-of-the-art reasoning with comprehensive tool access. Trained to think longer and more deeply before responding, they are the smartest models OpenAI has released to date, and they cater to everyone from curious individuals to seasoned researchers. Whether the task is a multi-step problem, visual data analysis, or creative output, o3 and o4-mini set a new benchmark for intelligence and utility in AI.

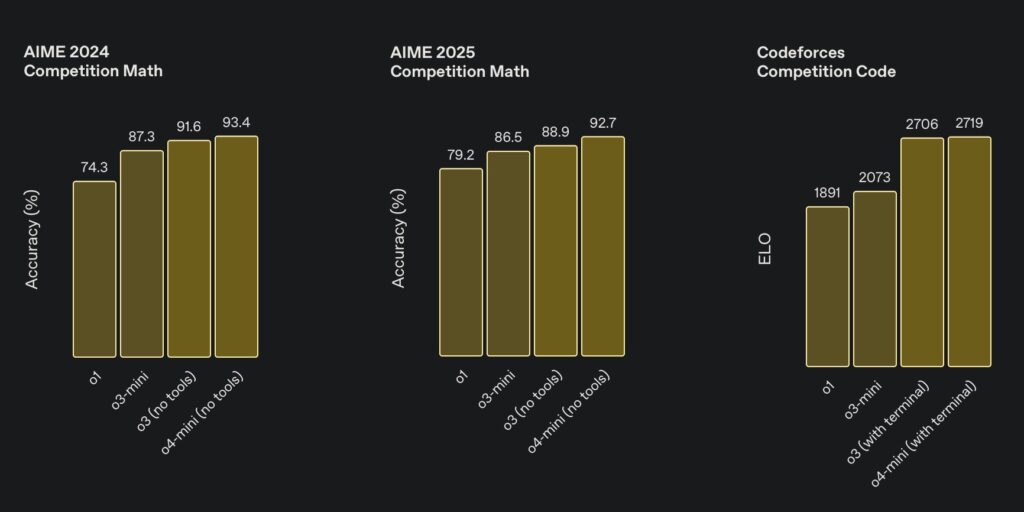

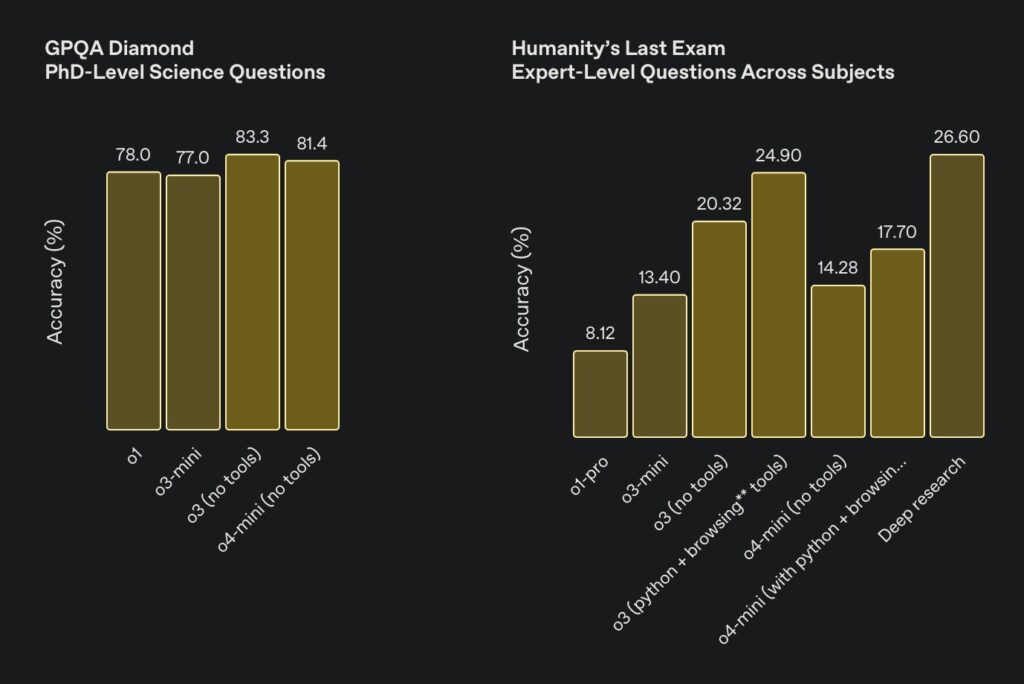

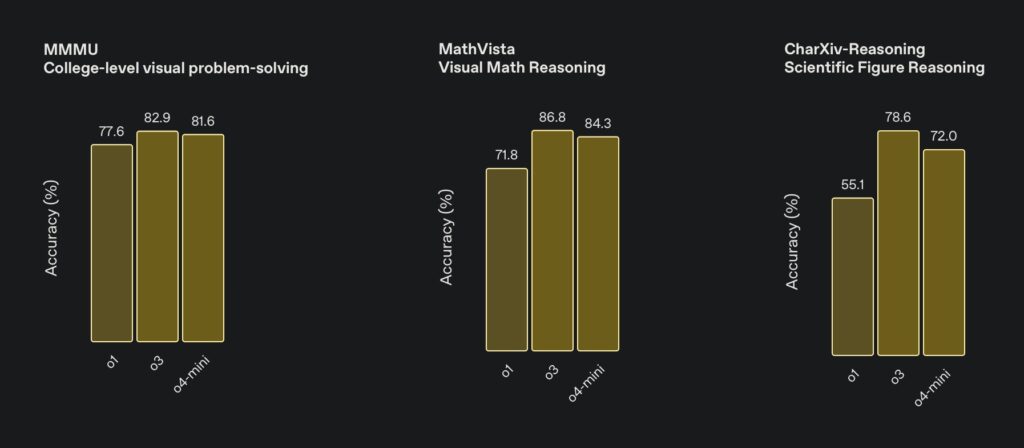

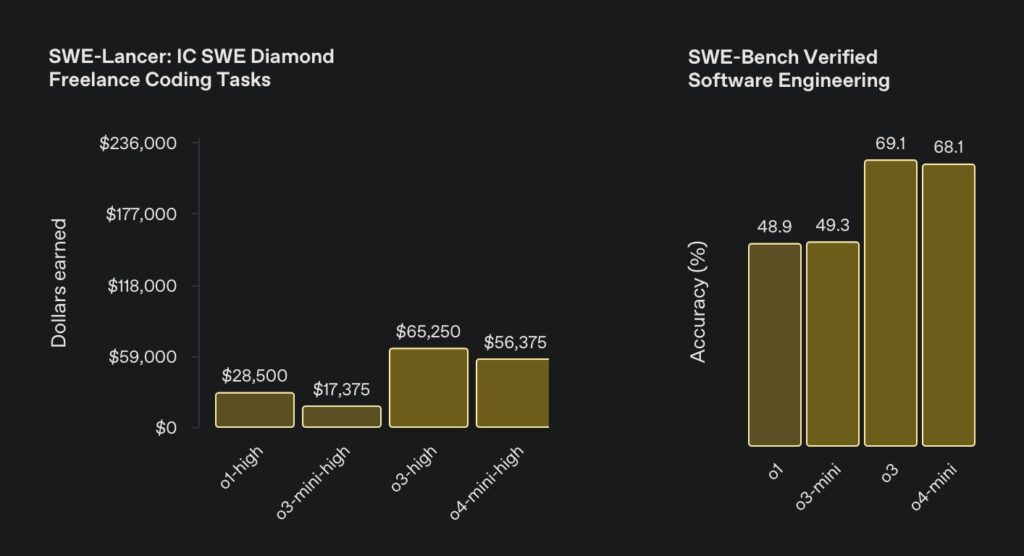

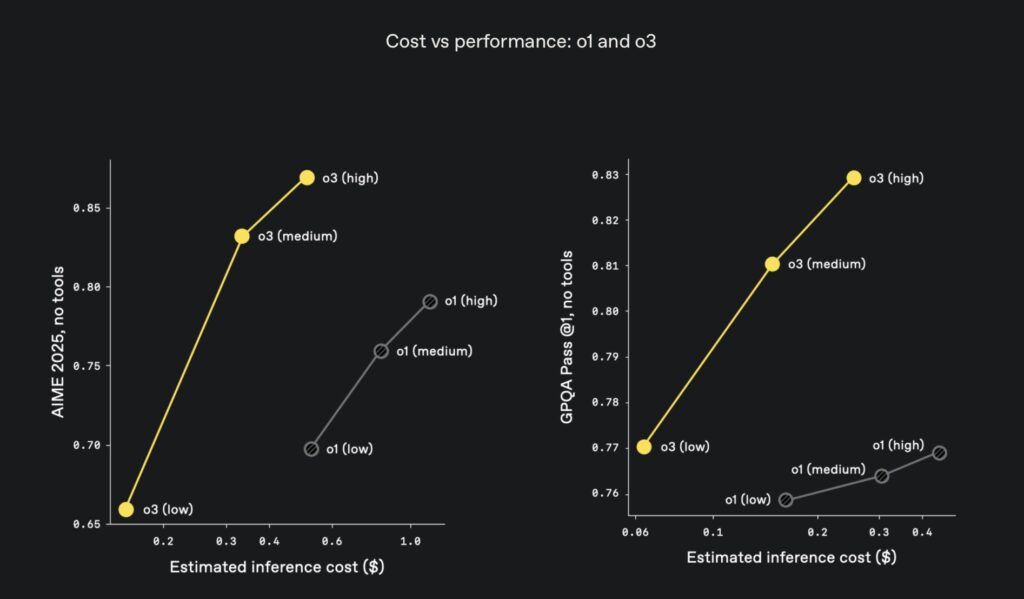

At the heart of this release is OpenAI o3, a powerful reasoning model that pushes boundaries across coding, mathematics, science, and visual perception. It has achieved state-of-the-art results on benchmarks such as Codeforces, SWE-bench, and MMMU, demonstrating its strength on complex queries where answers aren’t immediately obvious. In evaluations by external experts, o3 makes 20 percent fewer major errors than its predecessor, OpenAI o1, on difficult real-world tasks, and it does especially well in programming, business and consulting, and creative ideation. Early testers have praised its analytical rigor, noting its ability to serve as a thought partner by generating and critically evaluating novel hypotheses in fields like biology, math, and engineering. Its strong performance on visual tasks, such as interpreting images, charts, and graphics, further cements its position as a versatile tool for multifaceted analysis.

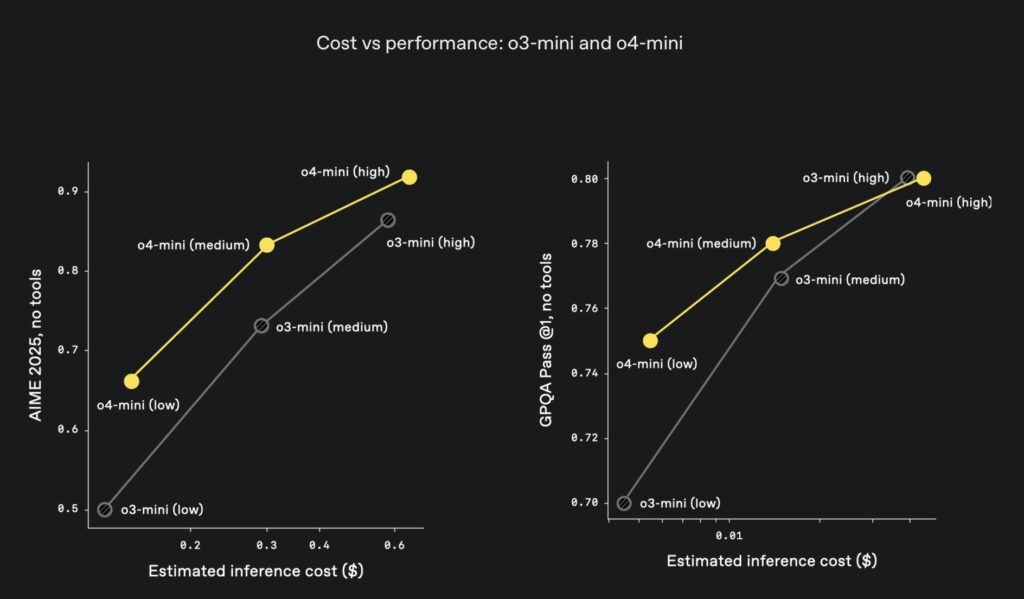

Complementing o3 is OpenAI o4-mini, a smaller model optimized for speed and cost-effectiveness. Despite its compact size, o4-mini delivers outstanding performance, especially in math, coding, and visual tasks, and it outperforms its predecessor, o3-mini, across both STEM and non-STEM domains. It has set a high bar on benchmarks like AIME 2024 and 2025, and its efficiency allows for significantly higher usage limits than o3, making it a strong choice for high-volume, high-throughput applications. Expert evaluators have noted that both models show improved instruction-following and deliver more useful, verifiable responses, thanks to their enhanced intelligence and integration of web sources. These models also feel more natural and conversational, drawing on memory and past interactions to provide personalized and contextually relevant answers.

A key innovation in the development of o3 and o4-mini lies in the scaling of reinforcement learning (RL). OpenAI has observed that large-scale RL follows the same trend as pretraining in the GPT series: more compute yields better performance. By pushing an additional order of magnitude in both training compute and inference-time reasoning, OpenAI has seen clear performance gains, with o3 outperforming o1 at equal latency and cost within ChatGPT. Allowing the models to think longer yields further improvements, which suggests that performance can keep rising with extended reasoning. The same approach was used to train both models to use tools through RL, teaching them not only how to use tools but also when to use them based on the desired outcome. This capability shines in open-ended scenarios, particularly those involving visual reasoning and multi-step workflows.

One of the most exciting advancements with these models is their ability to integrate visual inputs directly into their chain of thought. Unlike previous models that merely processed images, o3 and o4-mini think with images, unlocking a new dimension of problem-solving that combines visual and textual reasoning. Users can upload a photo of a whiteboard, a textbook diagram, or even a blurry hand-drawn sketch, and the models can interpret and manipulate the content—rotating, zooming, or transforming images as part of their reasoning process. This results in best-in-class accuracy on visual perception tasks, enabling solutions to problems that were previously out of reach. OpenAI’s visual reasoning research blog offers deeper insights into this groundbreaking capability, showcasing how these models are redefining multimodal AI.

Beyond visual reasoning, o3 and o4-mini are designed for agentic tool use, with full access to ChatGPT’s suite of tools as well as custom tools via function calling in the API. Trained to reason about problem-solving strategies, these models can choose when and how to use tools to deliver detailed, thoughtful answers in the appropriate output formats, often in under a minute. For instance, if a user asks about summer energy usage in California compared to the previous year, the model can search the web for public utility data, write Python code to create a forecast, generate a visual graph, and explain the underlying factors—all by chaining multiple tool calls. This strategic flexibility allows the models to adapt and pivot based on new information, conducting multiple web searches if needed to refine their responses.
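To make the idea of chaining tool calls concrete, here is a minimal sketch of an agent loop in Python. It does not call any real OpenAI API: `stub_model`, `search_web`, `percent_change`, and `run_agent` are all hypothetical names invented for this illustration, the "model" is a hard-coded stand-in, and the usage figures are placeholder numbers rather than real utility data. The sketch only shows the general pattern in which a model picks a tool, receives the result, and decides the next step until it can answer.

```python
import json
from typing import Callable


def search_web(query: str) -> str:
    """Hypothetical search tool. Returns canned placeholder numbers, not real data."""
    return json.dumps({"query": query, "usage": {"last_year": 100.0, "this_year": 110.0}})


def percent_change(old: float, new: float) -> str:
    """Hypothetical analysis tool: year-over-year change in percent."""
    return json.dumps({"percent_change": round((new - old) / old * 100, 1)})


# Registry mapping tool names to callables, similar in spirit to function calling.
TOOLS: dict[str, Callable[..., str]] = {
    "search_web": search_web,
    "percent_change": percent_change,
}


def stub_model(messages: list[dict]) -> dict:
    """Hard-coded stand-in for a reasoning model.

    It chooses the next step based on how many tool results it has seen so far.
    """
    results = [m for m in messages if m["role"] == "tool"]
    if len(results) == 0:
        # Step 1: look up data for the user's question.
        return {"tool": "search_web", "arguments": {"query": messages[0]["content"]}}
    if len(results) == 1:
        # Step 2: feed the search result into the analysis tool.
        usage = json.loads(results[0]["content"])["usage"]
        return {
            "tool": "percent_change",
            "arguments": {"old": usage["last_year"], "new": usage["this_year"]},
        }
    # Step 3: enough information gathered, so produce the final answer.
    change = json.loads(results[1]["content"])["percent_change"]
    return {"answer": f"Usage changed by {change}% year over year."}


def run_agent(question: str, max_steps: int = 5) -> str:
    """Run the loop: ask the model, execute the requested tool, repeat."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        step = stub_model(messages)
        if "answer" in step:
            return step["answer"]
        result = TOOLS[step["tool"]](**step["arguments"])
        messages.append({"role": "tool", "name": step["tool"], "content": result})
    raise RuntimeError("no final answer within max_steps")


if __name__ == "__main__":
    print(run_agent("How does this summer's energy usage compare to last year's?"))
```

In a real deployment the stub would be replaced by a call to the model, which would return either a tool request or a final answer. The `max_steps` cap is a design choice in this sketch to keep the loop from running indefinitely.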

This agentic approach empowers o3 and o4-mini to handle tasks requiring up-to-date information beyond their built-in knowledge, extended reasoning, synthesis, and output generation across modalities. Whether it’s synthesizing data from diverse sources or crafting creative solutions, these models are a step closer to a fully autonomous ChatGPT that can independently execute tasks on behalf of users. The implications are profound, spanning academic research, professional workflows, and everyday problem-solving. As early testers have noted, the analytical depth and practical utility of these models make them invaluable partners in navigating complex challenges.

OpenAI o3 and o4-mini are not just advancements in AI—they are a redefinition of what intelligent systems can achieve. By combining unparalleled reasoning with full tool access, conversational naturalness, and multimodal capabilities, these models are poised to transform how we interact with technology. From solving intricate scientific problems to providing quick, cost-effective answers for high-volume queries, o3 and o4-mini cater to a wide spectrum of needs with unmatched precision and versatility. As OpenAI continues to push the boundaries of compute and reinforcement learning, the future of AI looks brighter than ever, promising even more powerful tools to augment human potential.