Empowering Agents to Navigate the Web Like Humans—Faster, Smarter, and Safer

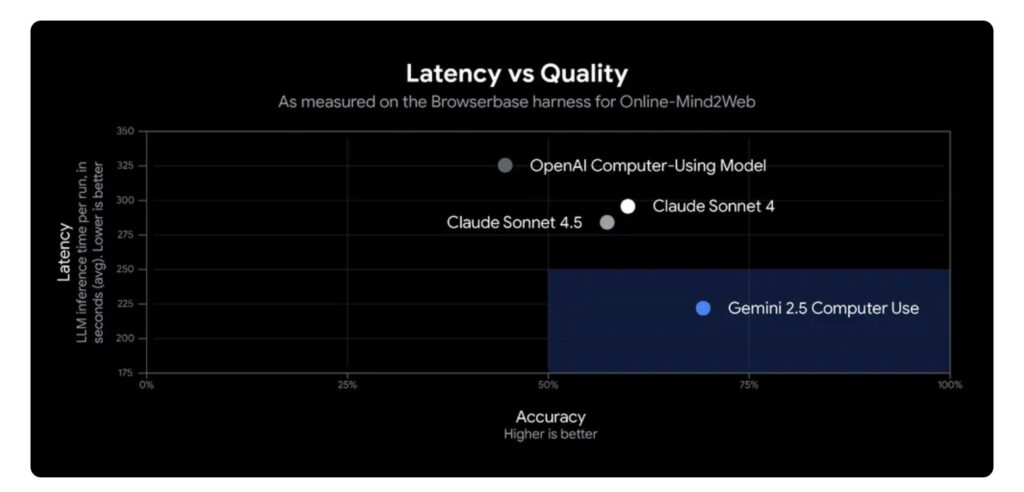

- Breaking New Ground in UI Interaction: The Gemini 2.5 Computer Use model, built on Gemini 2.5 Pro, enables AI agents to directly manipulate graphical user interfaces (UIs) through actions like clicking, typing, and scrolling, outperforming competitors on web and mobile benchmarks with lower latency.

- A Loop of Intelligent Automation: By processing screenshots, user requests, and action histories via the new `computer_use` tool in the Gemini API, the model iteratively executes tasks such as filling forms or booking appointments, all while prioritizing safety through built-in guardrails and developer controls.

- Real-World Impact and Accessibility: From speeding up software testing at Google to powering personal assistants and workflow automation for early testers, this preview model, available now via Google AI Studio and Vertex AI, marks a crucial step toward general-purpose AI agents that handle everyday digital tasks responsibly.

In the ever-evolving landscape of artificial intelligence, the line between human and machine interaction with technology is blurring faster than ever. On October 7, 2025, Google unveiled the Gemini 2.5 Computer Use model, a groundbreaking specialized AI that transforms how agents engage with digital environments. Available in public preview through the Gemini API on platforms like Google AI Studio and Vertex AI, this model leverages the visual understanding and reasoning prowess of Gemini 2.5 Pro to empower AI agents capable of interacting with user interfaces (UIs) in ways that mimic human behavior. No longer confined to structured APIs, these agents can now tackle the messy, graphical world of web pages, mobile apps, and beyond—filling forms, navigating dropdowns, and even operating behind logins. This isn’t just an incremental update; it’s a leap toward building versatile, general-purpose AI that can automate real-world digital tasks with unprecedented efficiency.

At its core, the challenge of AI interacting with software has long been split between the clean precision of APIs and the chaotic reality of graphical UIs. While APIs handle data exchanges seamlessly, many everyday digital activities—like submitting online forms or browsing e-commerce sites—demand direct engagement with visual elements. Humans do this intuitively: we click buttons, type into fields, scroll through pages, and make split-second decisions based on what we see. The Gemini 2.5 Computer Use model bridges this gap by enabling AI agents to perform these actions natively. Optimized primarily for web browsers but showing strong potential for mobile UI control, the model excels at tasks that require contextual awareness, such as extracting pet care details from a signup page at https://tinyurl.com/pet-care-signup and seamlessly adding them to a spa CRM at https://pet-luxe-spa.web.app/, complete with scheduling a follow-up appointment for October 10th after 8 a.m. with specialist Anima Lavar. Demos of such workflows, accelerated to 3X speed, showcase the model’s fluid navigation, highlighting its ability to handle complex, multi-step processes without human intervention.

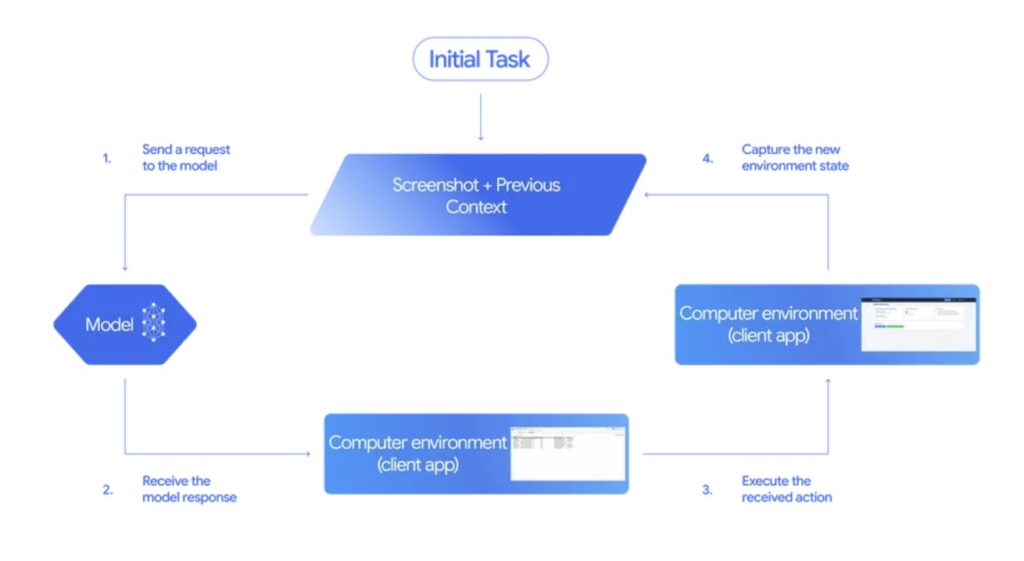

Delving into the mechanics, the model operates in an iterative loop through the `computer_use` tool in the Gemini API. Developers feed the model a user request, a screenshot of the current environment, and a history of recent actions. Optionally, they can exclude certain UI functions or add custom ones to tailor the interaction. The model then analyzes this input and responds with a function call representing a UI action, such as clicking a dropdown or typing into a form field. For sensitive actions, like making a purchase, it may instead request end-user confirmation, which keeps a human accountable for the decision. The client-side code executes the action, captures a fresh screenshot and the updated URL, and sends both back to the model. This cycle repeats until the task completes, an error occurs, or the interaction is halted by a safety response or a user decision. It's an elegant dance of perception and action, reminiscent of how humans process and respond to their screens, but executed at machine speed. Notably, while the model shines in web and mobile scenarios, it is not yet tuned for full desktop operating system control, focusing instead on the most common UI frontiers.
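To make the loop concrete, here is a minimal sketch of its control flow in Python. It does not call the Gemini API: `Action`, `Observation`, `run_agent_loop`, and the four callables (`observe`, `propose_action`, `execute`, `confirm`) are hypothetical names invented for illustration, and the model is replaced by a scripted stand-in so the example runs on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Action:
    """One proposed UI action (hypothetical shape, not the API's schema)."""
    name: str                          # e.g. "click", "type_text", or "done"
    args: dict = field(default_factory=dict)
    needs_confirmation: bool = False   # set for sensitive steps such as purchases


@dataclass
class Observation:
    """What the client sends to the model on each turn."""
    screenshot: bytes
    url: str


def run_agent_loop(
    request: str,
    observe: Callable[[], Observation],
    propose_action: Callable[[str, Observation, List[Action]], Action],
    execute: Callable[[Action], None],
    confirm: Callable[[Action], bool],
    max_steps: int = 20,
) -> Tuple[str, List[Action]]:
    """Repeat observe -> propose -> (confirm) -> execute until a stop condition."""
    history: List[Action] = []
    for _ in range(max_steps):
        obs = observe()                                  # fresh screenshot and URL
        action = propose_action(request, obs, history)   # stands in for the model call
        if action.name == "done":
            return "completed", history
        if action.needs_confirmation and not confirm(action):
            return "halted_by_user", history
        try:
            execute(action)                              # client-side execution
        except Exception:
            return "error", history
        history.append(action)
    return "step_limit_reached", history


# Dry run: a scripted iterator plays the role of the model.
script = iter([
    Action("click", {"target": "date dropdown"}),
    Action("type_text", {"text": "October 10"}),
    Action("done"),
])
status, history = run_agent_loop(
    request="Book a follow-up appointment",
    observe=lambda: Observation(screenshot=b"", url="https://example.com"),
    propose_action=lambda req, obs, hist: next(script),
    execute=lambda action: None,
    confirm=lambda action: True,
)
print(status, [a.name for a in history])
```

The `max_steps` cap is an added safeguard in this sketch rather than something the announcement describes; it keeps a misbehaving loop from running indefinitely.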

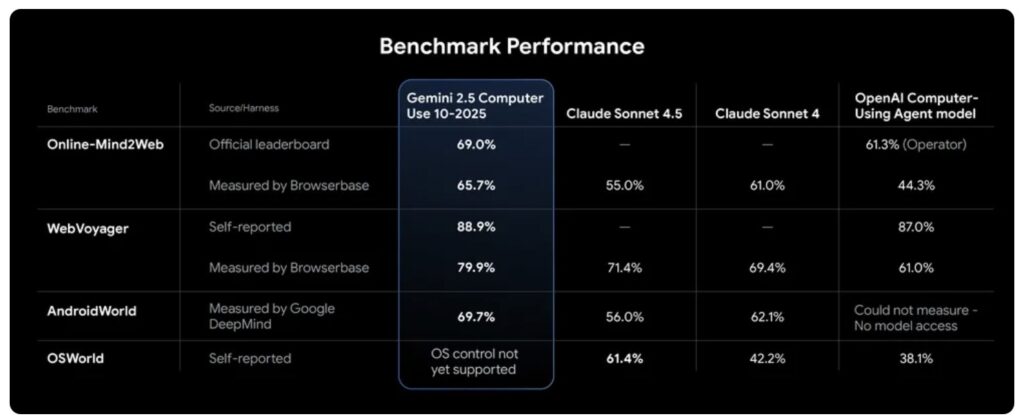

Performance is where the Gemini 2.5 Computer Use model stands out: it surpasses leading alternatives across multiple benchmarks for web and mobile control. The results, drawn from self-reported metrics, tests run by Browserbase, and Google's own assessments, show leading quality at lower latency, which makes near-real-time applications more feasible. Detailed evaluation insights are available in the Gemini 2.5 Computer Use evaluation info and Browserbase's blog post. This combination of accuracy and speed matters most in scenarios where every second counts, such as automated testing or customer service bots navigating intricate websites.

Yet, with great power comes great responsibility, and Google has approached safety as a foundational pillar from day one. AI agents wielding computer control introduce novel risks: deliberate misuse by bad actors, unpredictable behaviors from the model itself, and vulnerabilities like prompt injections or scams lurking in web environments. To counter these, the model embeds trained safety features targeting these exact threats, as outlined in the comprehensive Gemini 2.5 Computer Use System Card. Developers gain robust controls, including a per-step safety service that scrutinizes each proposed action before execution, flagging potential harms like system integrity breaches, security compromises, CAPTCHA circumventions, or unauthorized medical device manipulations. System instructions allow customization, such as mandating refusals or user confirmations for high-stakes moves. Google urges thorough testing and provides documentation on safety best practices, emphasizing that while these guardrails minimize dangers, vigilance remains essential for any deployment.
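As a rough illustration of how a developer might layer their own policy on top of these controls, the sketch below classifies each proposed action as allowed, requiring confirmation, or refused before anything is executed. Everything here is an assumption for illustration: `Decision`, `review_action`, and the keyword lists are hypothetical, and crude keyword matching is only a placeholder for whatever verdict the per-step safety service and system instructions actually provide.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    CONFIRM = "require_confirmation"
    REFUSE = "refuse"


# Hypothetical policy lists; a real deployment would not rely on keywords alone.
REFUSE_KEYWORDS = ("captcha",)
CONFIRM_KEYWORDS = ("purchase", "pay", "delete")


def review_action(action_name: str, target_text: str) -> Decision:
    """Decide how the client should treat a proposed action before running it."""
    text = f"{action_name} {target_text}".lower()
    if any(keyword in text for keyword in REFUSE_KEYWORDS):
        return Decision.REFUSE     # never execute, regardless of user input
    if any(keyword in text for keyword in CONFIRM_KEYWORDS):
        return Decision.CONFIRM    # pause and ask the end user first
    return Decision.ALLOW


for name, target in [
    ("click", "Solve CAPTCHA"),
    ("click", "Complete purchase"),
    ("type_text", "Search box"),
]:
    print(name, target, "->", review_action(name, target).value)
```

Checking refusals before confirmations means a blocked action can never be approved by a hurried click on a confirmation prompt.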

The proof of the model’s potential is already evident in its early adoption. Within Google, teams have integrated it into production for UI testing, dramatically accelerating software development cycles. It’s the engine behind Project Mariner, the Firebase Testing Agent, and enhanced agentic features in AI Mode within Google Search. Early access participants from the developer community echo this enthusiasm. For instance, Poke.com, which builds proactive AI assistants for iMessage, WhatsApp, and SMS with intricate third-party workflows, reports that Gemini 2.5 Computer Use is “far ahead of the competition, often being 50% faster and better than the next best solutions.” Autotab, a provider of drop-in AI agents, highlights its reliability: “Our agents run fully autonomously… Gemini 2.5 Computer Use outperformed other models at reliably parsing context in complex cases, increasing performance by up to 18% on our hardest evals.” Even Google’s internal payments platform team has leveraged it to rescue fragile end-to-end UI tests, rehabilitating over 60% of failing executions that once demanded days of manual fixes—slashing a 25% failure rate in the process. These testimonials illustrate a broader shift: from rigid scripting to adaptive, AI-driven automation that thrives on human-like intuition.

For developers eager to harness this technology, getting started is straightforward and immediate. The model is now in public preview, accessible via the Gemini API on Google AI Studio for experimentation and Vertex AI for enterprise-scale applications. Beginners can jump in with a demo environment hosted by Browserbase, while those ready to build should explore the reference documentation and Vertex AI enterprise guides. Whether setting up a local agent loop with Playwright or scaling in a cloud VM via Browserbase, the tools are there to turn visionary ideas into functional agents. As AI continues to weave deeper into our digital lives, the Gemini 2.5 Computer Use model isn’t just a tool—it’s a catalyst for a more intuitive, efficient, and secure future of automation.
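For a local agent loop built on Playwright, the client-side half of each step might look something like the sketch below. It assumes `page` behaves like a Playwright sync `Page` (using `page.mouse.click`, `page.keyboard.type`, `page.mouse.wheel`, `page.screenshot`, and `page.url`), and that actions arrive with pixel coordinates. The action names, the argument keys, and the `FakePage` stand-in are hypothetical and exist only so the example runs without a browser.

```python
from typing import Any


def execute_action(page: Any, name: str, args: dict) -> dict:
    """Apply one UI action to a Playwright-style page and return the new state."""
    if name == "click":
        page.mouse.click(args["x"], args["y"])
    elif name == "type_text":
        page.keyboard.type(args["text"])
    elif name == "scroll":
        page.mouse.wheel(0, args["delta_y"])   # vertical scroll only
    else:
        raise ValueError(f"unsupported action: {name}")
    # The fresh screenshot and URL are what get sent back to the model.
    return {"screenshot": page.screenshot(), "url": page.url}


class FakePage:
    """Records calls instead of driving a browser (illustration only)."""

    def __init__(self) -> None:
        self.calls = []
        self.url = "about:blank"
        self.mouse = self      # reuse one object for the mouse and keyboard roles
        self.keyboard = self

    def click(self, x, y):
        self.calls.append(("click", x, y))

    def type(self, text):
        self.calls.append(("type", text))

    def wheel(self, delta_x, delta_y):
        self.calls.append(("wheel", delta_x, delta_y))

    def screenshot(self) -> bytes:
        return b"fake-png"


page = FakePage()
state = execute_action(page, "click", {"x": 120, "y": 48})
print(page.calls, state["url"])
```

Swapping `FakePage` for a real Playwright page should leave `execute_action` unchanged, though the coordinate convention and action vocabulary would need to match what the model actually returns.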