When Media Giants Fall for Digital Deception, Real People Pay the Price

- AI’s Deceptive Power Exposed: Fox News aired fabricated videos of supposed SNAP recipients raging about benefit cuts, treating them as authentic news without verification, highlighting the growing threat of AI-generated misinformation.

- A Hasty Correction, But Bias Persists: The network overhauled its story and added a lengthy editor’s note admitting the footage was likely AI slop, yet it continued to portray welfare recipients as entitled, ignoring the program’s vital role in supporting vulnerable Americans.

- Broader Implications for Society: This incident underscores how AI can manufacture “evidence” to fuel harmful narratives, exacerbate prejudices, and erode trust in media amid economic hardships faced by millions.

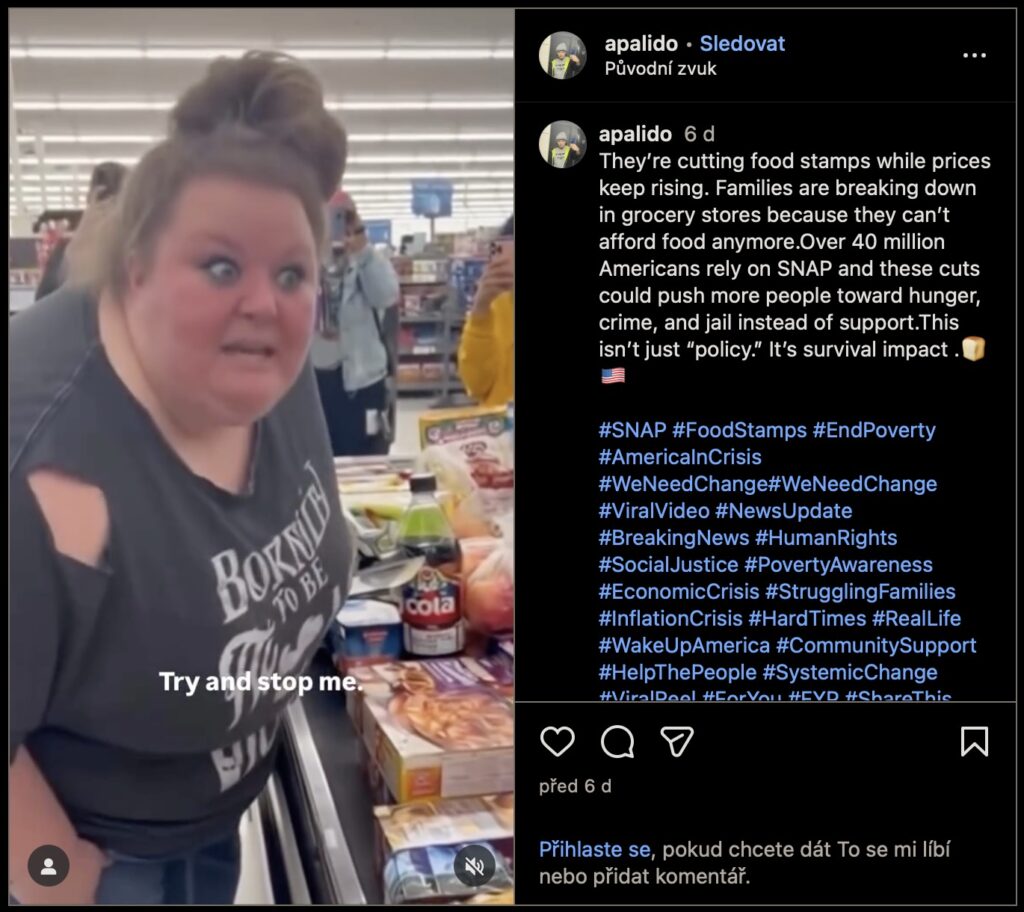

In an era where artificial intelligence blurs the line between fact and fiction, Fox News recently found itself ensnared in a web of digital deceit. Last week, a series of AI-generated “slop” videos began circulating online, peddling blatant misinformation about the Supplemental Nutrition Assistance Program (SNAP), commonly known as food stamps. These clips depicted dramatic scenes of frustration, including one where a woman in a store confronts an employee, exclaiming, “They cut my food stamps. I ain’t paying for none of this s**t. I got babies at home that gotta eat.” What started as viral fakery quickly escalated into a national news blunder when Fox News latched onto it, running a story that treated the footage as genuine evidence of widespread discontent. This not only amplified false narratives but also stirred unnecessary anger against a program that primarily aids children, the elderly, and people with disabilities—groups that rely on SNAP’s modest support to put food on the table.

The original Fox News piece was a masterclass in sensationalism, with a headline screaming, “SNAP beneficiaries threaten to ransack stores over government shutdown.” It painted a picture of entitled recipients on the brink of chaos, using the videos to argue that welfare programs spoil the poor and working class. One particularly inflammatory quote featured a woman fuming, “It is the taxpayer’s responsibility to take care of my kids,” presented without any caveat. But as scrutiny mounted, the network was forced to backpedal dramatically. Over the weekend, the story was rewritten almost entirely, with the headline softened to “AI videos of SNAP beneficiaries complaining about cuts go viral.” An extraordinary editor’s note was tacked on at the bottom, confessing that the outlet had “previously reported on some videos that appear to have been generated by AI without noting that.” Remarkably, even in the revised version, Fox retained the controversial quote, now with a disclaimer that it “appears to be generated by AI,” while still pushing the narrative that SNAP recipients are overly dependent on the program. SNAP benefits average just $177 per person per month, barely enough to cover basic grocery needs.

This fiasco didn’t just expose a lapse in journalistic standards; it revealed deeper biases at play. As Tim Miller from The Bulwark pointed out, the story would have been ridiculous even if the videos were real. “It’s outrageous to try to nit-pick a handful of people you think will play into the racial prejudice of your audience,” he remarked, cutting to the heart of how such coverage often exploits stereotypes to demonize welfare programs. Fox’s decision to highlight these clips at a time when 41 million Americans are grappling with food insecurity is particularly galling. SNAP isn’t a luxury; it’s a lifeline for families facing skyrocketing grocery prices. Yet the network chose to frame it as a burden on taxpayers, using fabricated “evidence” to justify cuts or criticism. By doing so, the network not only misled viewers but also contributed to a toxic discourse that stigmatizes those in need, potentially influencing public policy and voter sentiment.

From a broader perspective, this incident is a harbinger of AI’s dark potential in the information age. We’re entering a stupefying era where deepfakes and generated content can manufacture lifelike “proof” to bolster harmful narratives, making it increasingly difficult to distinguish reality from ruse. In the case of SNAP, these videos didn’t just spread misinformation; they weaponized empathy gaps, portraying beneficiaries as ungrateful or violent to justify dismantling social safety nets. This isn’t isolated—AI slop is proliferating across social media, from political deepfakes to fabricated health scares, eroding trust in institutions and amplifying divisions. Media outlets like Fox, with their vast reach, bear a heightened responsibility to verify sources, especially when dealing with content that preys on societal prejudices. Failure to do so doesn’t just result in corrections; it risks real-world harm, as vulnerable populations face increased scrutiny and reduced support.

The Fox News blunder serves as a wake-up call for all of us. As AI tools become more sophisticated, the onus falls on journalists, platforms, and consumers to demand transparency and critical thinking. Policymakers might need to step in with regulations on AI-generated content, while education on digital literacy could empower the public to spot fakes. In the meantime, stories like this remind us that behind the headlines are real people—parents feeding their kids, seniors stretching meager budgets—who deserve better than to be pawns in a game of manufactured outrage. If we don’t address this now, the line between truth and AI illusion will only grow fainter, with consequences that could reshape society in ways we’re only beginning to imagine.