From RGB Limitations to Superhuman Vision – Empowering Apps to See Beyond the Human Eye with Simple AI Techniques

- Demystifying Multi-Spectral Data: Discover how multi-spectral imagery captures invisible wavelengths, offering insights into vegetation health, water detection, burn scars, and material identification that traditional RGB images can’t provide.

- Gemini’s Game-Changing Approach: Learn the straightforward three-step process to map complex spectral data into formats Gemini can understand, eliminating the need for custom models and enabling rapid analysis.

- Real-World Impact and Accessibility: Explore practical examples, from correcting misclassifications in land cover to broader applications in environmental monitoring, and try it yourself with a ready-to-use Colab notebook.

In a world dominated by RGB imagery—those familiar red, green, and blue channels that mimic human vision—developers have long built applications that recognize objects, generate art, and categorize scenes. But imagine giving your app the ability to peer into invisible realms, detecting plant stress, mapping floods, or identifying minerals from space. This isn’t science fiction; it’s the promise of multi-spectral imagery, a technology that captures data across numerous electromagnetic bands, including near-infrared (NIR) and short-wave infrared (SWIR). Thanks to Google’s Gemini models and their native multimodal capabilities, this powerful tool is now within reach for everyday developers, without the hassle of specialized training or complex pipelines.

Multi-spectral imagery goes far beyond the three-color limits of standard photos. While a typical digital image assigns each pixel values for red, green, and blue, multi-spectral sensors act like enhanced cameras, recording data in multiple bands across the spectrum. This includes invisible wavelengths that reveal hidden details about our world. For instance, healthy vegetation reflects high levels of NIR light, making it a reliable indicator for assessing crop health or tracking deforestation—far more precise than relying on visible green hues alone. Water, on the other hand, absorbs infrared, allowing for accurate mapping of floodplains or even evaluating water quality. SWIR bands excel at cutting through smoke to spot burn scars from wildfires, while unique spectral “fingerprints” help identify minerals and man-made materials from afar.

The game-changing potential here is immense, especially in fields like agriculture, environmental science, and disaster management. Historically, harnessing this data demanded expert knowledge, intricate processing tools, and custom machine learning models tailored to specific tasks. But Gemini flips the script. As detailed in recent research, its reasoning engine can analyze this rich data straight out of the box, using a clever technique that bridges the gap between invisible light and the model’s pre-trained understanding of visible colors.

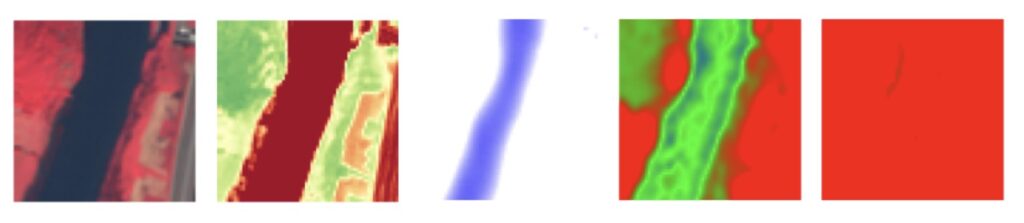

At the heart of this innovation is the creation of “false-color composite” images, where invisible spectral bands are mapped to the familiar RGB channels that models like Gemini are trained on. This isn’t about making images look natural; it’s about encoding scientific data in a digestible format. The process is refreshingly simple and boils down to three key steps. First, select the three spectral bands most relevant to your problem, such as NIR for vegetation or SWIR for fire detection. Second, normalize the data from each band to a 0–255 range and assign one band to each of the red, green, and blue channels, forming a new image. Third, and most crucially, feed this image to Gemini along with a prompt that explains what the colors represent, effectively teaching the model in real time how to interpret the custom visuals.
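The three steps can be sketched in a few lines of Python with NumPy. This is a minimal illustration rather than the method used in the research: the band names, the synthetic data, the min-max normalization, and the prompt wording are all assumptions, and the call that actually sends the image and prompt to Gemini is left out.

```python
import numpy as np

def normalize_band(band: np.ndarray) -> np.ndarray:
    """Min-max scale one band to the 0-255 range as uint8 (one possible normalization)."""
    band = band.astype(np.float64)
    lo, hi = band.min(), band.max()
    if hi == lo:
        # A constant band carries no contrast; map it to zeros.
        return np.zeros(band.shape, dtype=np.uint8)
    return np.round((band - lo) / (hi - lo) * 255).astype(np.uint8)

def false_color_composite(bands: dict, rgb_order: tuple) -> np.ndarray:
    """Steps 1 and 2: pick three bands and stack them as an (H, W, 3) image."""
    return np.dstack([normalize_band(bands[name]) for name in rgb_order])

def build_prompt(rgb_order: tuple, task: str) -> str:
    """Step 3: tell the model what each color channel encodes."""
    channels = ("red", "green", "blue")
    mapping = "; ".join(
        f"the {channel} channel shows the {band} band"
        for channel, band in zip(channels, rgb_order)
    )
    return f"This is a false-color composite, not a natural photo: {mapping}. {task}"

# Synthetic stand-in for real sensor data (hypothetical band names and values).
rng = np.random.default_rng(0)
bands = {name: rng.uniform(0, 10000, size=(64, 64)) for name in ("swir", "nir", "red")}
rgb_order = ("swir", "nir", "red")

image = false_color_composite(bands, rgb_order)
prompt = build_prompt(rgb_order, "Classify the land cover in this image.")
```

With real data, a percentile stretch is often a better choice than plain min-max scaling, because a few very bright pixels would otherwise compress the rest of the image into a narrow range.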

Gemini’s versatility shines in real-world examples, particularly with datasets like EuroSAT for land cover classification. In tests, the model accurately identified images as permanent crops, rivers, and industrial areas using standard inputs. However, challenges arise in ambiguous cases. For instance, an RGB image of a river might be misclassified as a forest due to similar green tones. Given multi-spectral pseudo-images, such as one highlighting the Normalized Difference Water Index (NDWI), together with a detailed prompt, Gemini corrects this, reasoning that the spectral data indicates water. Similarly, a forest image initially mistaken for a sea or lake is properly classified once multi-spectral inputs reveal vegetation signatures, with the model’s reasoning trace showing heavy reliance on these additional bands. These cases underscore how extra spectral data improves decisions without altering the model itself, which suggests that other input types could be integrated in the same way.
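To make the NDWI pseudo-image concrete, here is a small sketch using the common green/NIR definition, NDWI = (Green − NIR) / (Green + NIR). The article does not say which NDWI variant or which rendering was used in the tests, so the formula choice, the grayscale mapping, and the sample reflectance values below are assumptions for illustration only.

```python
import numpy as np

def ndwi(green, nir) -> np.ndarray:
    """Green/NIR water index: (Green - NIR) / (Green + NIR), in [-1, 1]."""
    green = np.asarray(green, dtype=np.float64)
    nir = np.asarray(nir, dtype=np.float64)
    total = green + nir
    out = np.zeros_like(total)
    # Leave pixels at 0 where both bands are zero instead of dividing by zero.
    np.divide(green - nir, total, out=out, where=total != 0)
    return out

def index_to_gray(index) -> np.ndarray:
    """Map index values from [-1, 1] linearly onto a 0-255 grayscale image."""
    clipped = np.clip(index, -1.0, 1.0)
    return np.round((clipped + 1.0) * 127.5).astype(np.uint8)

# Hypothetical reflectances for two pixels: open water, then dense vegetation.
green = np.array([[0.10, 0.08]])
nir = np.array([[0.02, 0.50]])

water_index = ndwi(green, nir)
pseudo_image = index_to_gray(water_index)
```

Water absorbs NIR, so it scores above zero and renders bright, while vegetation reflects NIR strongly and renders dark. The accompanying prompt would need to state this convention so the model can read the image correctly.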

This approach democratizes multi-spectral analysis, lowering barriers for developers who might lack deep remote sensing expertise. What once took weeks of prototyping can now be achieved in hours, fostering innovation in areas like precision agriculture, urban planning, and climate monitoring. Gemini’s in-context learning allows dynamic instructions via prompts, adapting to tasks from monitoring deforestation to aiding disaster response. Public data sources like NASA’s Earthdata, Copernicus Open Access Hub, or Google Earth Engine make it easy to get started, turning satellite imagery into actionable insights.

To experience this firsthand, check out the prepared Colab notebook, which demonstrates using Gemini 2.5 with multi-spectral inputs for your own remote sensing explorations. Whether you’re building an app to track crop yields or analyze environmental changes, this method empowers you to teach your applications to see the world in a whole new light. The era of AI-driven superhuman vision is here—grab some data and unlock its potential today.