Veteran iOS Photo App Developers Put OpenAI, Gemini, and Seedream to the Test – Uncovering Trade-Offs in Quality, Creativity, and Real-World Usability for Everyday Edits

- Rigorous Testing Insights: LateNiteSoft, with 15 years of experience in apps like Camera+, Photon, and REC, conducted over 600 AI image generations using simple user prompts and common photo types, highlighting each model’s strengths and weaknesses.

- Model Breakdown: OpenAI dominates creative, transformative edits but risks “AI slop”; Gemini excels at preserving realistic detail but often plays it too safe; Seedream offers a balanced, affordable middle ground with impressive versatility.

- Industry Implications: The study also covers sustainable AI billing through LateNiteSoft’s CreditProxy system, the potential of prompt classifiers to pick a model automatically, and the evolving role of AI in mobile photography, all from a self-funded company that avoids venture capital pitfalls in favor of user-focused innovation.

In the fast-paced realm of mobile photography, where apps can turn casual snaps into stunning visuals, AI is reshaping the game. LateNiteSoft has built iOS photography apps for 15 years, with hits like Camera+, Photon, and REC, and has consistently paid close attention to what users want from intuitive editing tools. When OpenAI unveiled its gpt-image-1 model earlier this year, LateNiteSoft seized the opportunity to explore AI’s potential in image editing. As a self-funded company that avoids venture capital, it prioritizes user delight over aggressive market grabs. Rather than follow the common AI startup playbook of a teaser free tier followed by a cheap “unlimited” plan whose economics depend on rapid user acquisition, it opted for sustainable pricing.

To monetize AI features fairly, LateNiteSoft developed CreditProxy, a credit-based “pay per generation” system. The company plans to offer it as a service to other developers and invites interested parties to get in touch for a trial. CreditProxy was battle-tested in MorphAI, a public proof-of-concept app marketed to Camera+ users who already enjoy traditional filters and are eager for AI upgrades. With the arrival of newer models such as Google’s Nano Banana (Gemini 2.5 Flash Image) and ByteDance’s Seedream, LateNiteSoft had to decide which to support, weighing output quality, prompt handling, and cost. After scripting quick tests and spending a great many credits, they published their findings so others can skip the same costly experiments.
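The write-up does not describe how CreditProxy works internally. As a rough illustration of the pay-per-generation idea only, here is a minimal Python sketch assuming a hypothetical in-memory ledger: credits are deducted before the slow model call and refunded if the generation fails, so users pay only for images they actually receive. The class name `CreditLedger`, the model keys, and the per-model prices are all invented for this example; they are not CreditProxy’s API or LateNiteSoft’s pricing.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, TypeVar

T = TypeVar("T")

# Hypothetical credit prices per generation; NOT LateNiteSoft's real pricing.
# Slower, more expensive models cost more credits.
PRICE_PER_GENERATION: Dict[str, int] = {
    "openai-high": 4,
    "openai-medium": 2,
    "gemini": 1,
    "seedream": 1,
}


class InsufficientCredits(Exception):
    """Raised when a user cannot afford the requested generation."""


@dataclass
class CreditLedger:
    """Illustrative in-memory ledger; not CreditProxy's actual design."""

    balances: Dict[str, int] = field(default_factory=dict)

    def top_up(self, user: str, credits: int) -> None:
        """Add purchased credits to a user's balance."""
        self.balances[user] = self.balances.get(user, 0) + credits

    def generate(self, user: str, model: str, run: Callable[[], T]) -> T:
        """Charge for one generation, call `run`, and refund if it fails."""
        cost = PRICE_PER_GENERATION[model]
        balance = self.balances.get(user, 0)
        if balance < cost:
            raise InsufficientCredits(f"{user} has {balance} credits, needs {cost}")
        # Deduct before the slow model call. A real service would do this
        # atomically (e.g. in a database transaction) to handle concurrency.
        self.balances[user] = balance - cost
        try:
            return run()
        except Exception:
            # The generation failed, so the user should not pay for it.
            self.balances[user] += cost
            raise


if __name__ == "__main__":
    ledger = CreditLedger()
    ledger.top_up("alice", 5)
    ledger.generate("alice", "gemini", lambda: b"fake-image-bytes")
    print(ledger.balances["alice"])  # 4
```

Charging up front and refunding on error is only one simple policy; a service could instead place a hold on the credits and settle the charge once the image has been delivered.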

The tests drew on LateNiteSoft’s knowledge of how Camera+ and MorphAI users actually behave, using the short, plain prompts everyday people might type: think pet owners or parents editing family photos, not AI pros. The test images covered typical subjects: pets, kids, landscapes, cars, and product shots. Generation times were fairly consistent for each model during testing: OpenAI’s high-quality mode took 80 seconds per image, Gemini 11 seconds, and Seedream 9 seconds. OpenAI’s medium-quality mode averaged 36 seconds, and LateNiteSoft offered to share those results as well if there is interest.

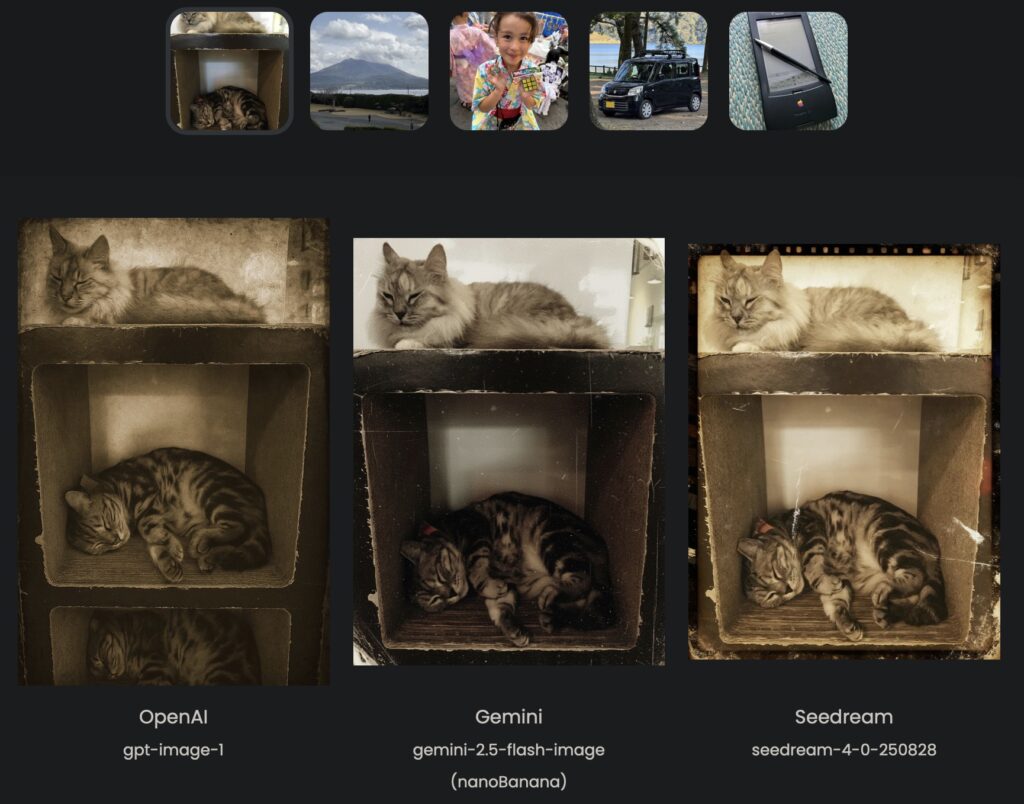

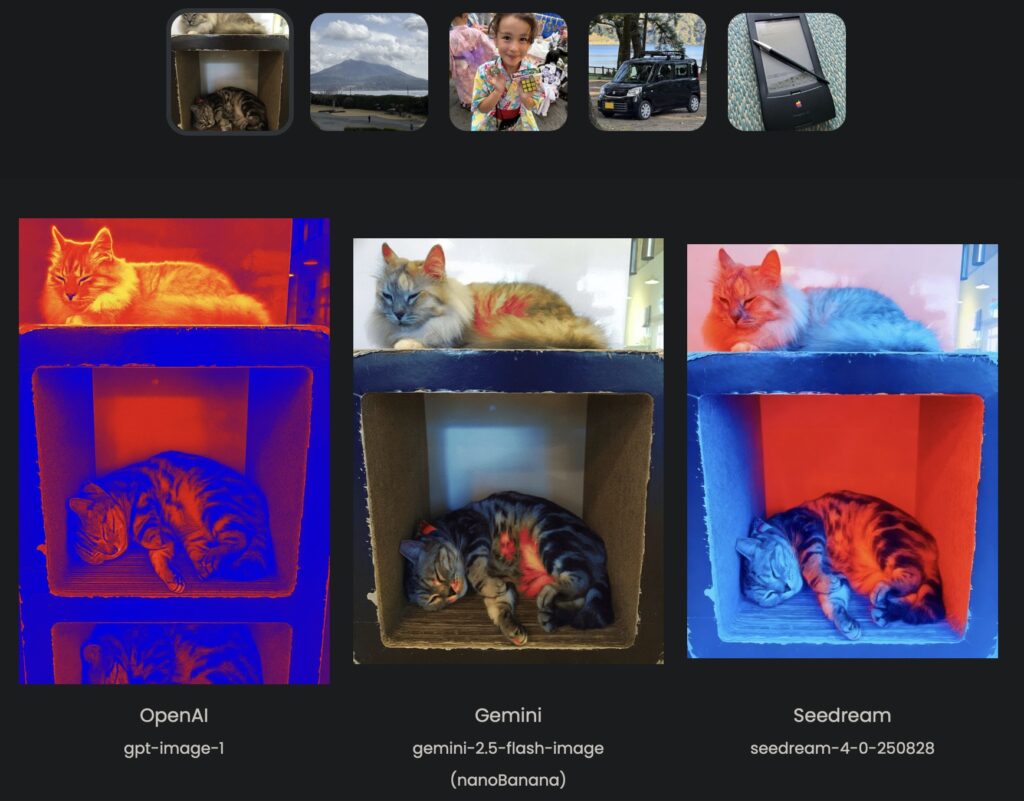

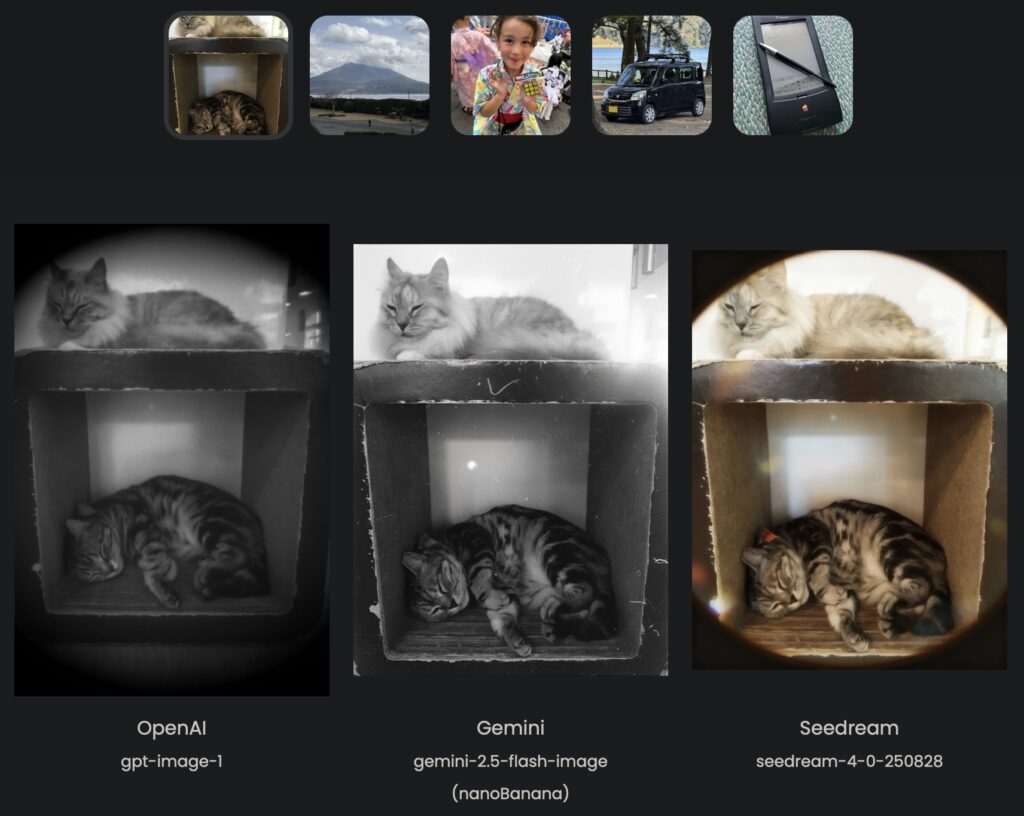

The first category was photo-realistic filters. These were once crafted by hand with Photoshop layers and Objective-C code; AI now simplifies the process, albeit at an environmental cost from its high energy use. LateNiteSoft found that Gemini did best here, preserving original details and keeping hallucinations in check, though it sometimes weakened effects for the sake of subtlety, particularly on people, where it favored realism and occasionally skipped the edit entirely. OpenAI tended to alter details excessively, producing an “AI slop” look that is especially off-putting on faces. Seedream struck a balance, adding elements such as light streaks to its thermal and long-exposure simulations, but it faltered on conceptual tasks, for example failing to recognize heat sources other than people.

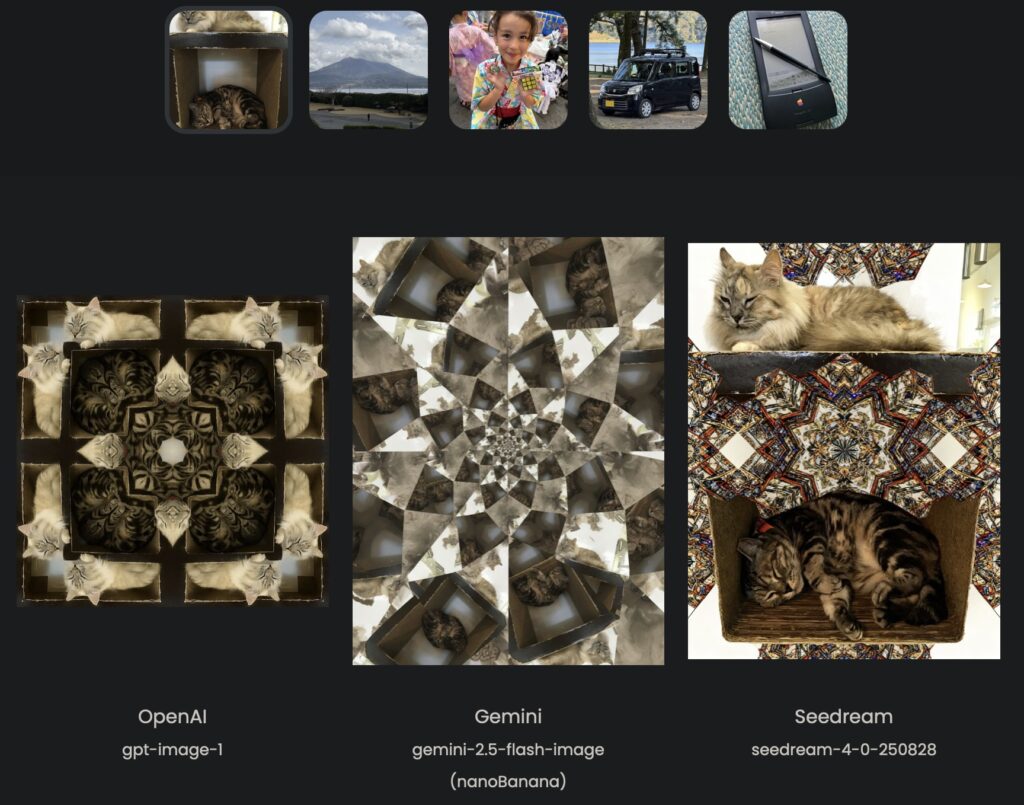

Long-exposure prompts exposed each model’s quirks: OpenAI handled landscapes and cars well, with convincing motion blur, but distorted cats and turned portraits abstract. Gemini often did little, interpreting the prompt literally, while Seedream added car-light trails that suited some scenes better than others. For “etched glass,” the vague prompt led to funny missteps, such as Gemini generating literal camera pictures. Refining the prompt to “etched glass effect” improved the results: OpenAI nailed the traditional look, Seedream created appealing glassy objects, and Gemini offered its own distinctive interpretations. In background-isolation tests, OpenAI’s habit of mangling details was again a weakness; Gemini asked for clarification when the subject was ambiguous (for example, which cat to isolate), and Seedream delivered eye-catching product shots, complete with invented reflections.

Lens effects, inspired by LateNiteSoft’s past Camera+ filter packs, showed how readily AI can fabricate wide-angle views. OpenAI hallucinated freely and strayed from the originals, whereas Gemini and Seedream stayed faithful, simulating genuine lens changes. Bokeh proved challenging: OpenAI produced a solid blur but without the characteristic circular highlights, Gemini scattered odd orbs, and Seedream delivered convincing depth. Style transfer, an older AI technique LateNiteSoft experimented with back on early GPUs, favored OpenAI’s bold artistic renditions, such as Van Gogh or Studio Ghibli styles (the latter went viral through ChatGPT). Gemini was overly cautious, especially on photos of people, possibly because of guardrails, while Seedream showed an anime bias, likely reflecting training data weighted toward Asian sources.

On portraits, caricature requests pushed Seedream toward an anime style, led OpenAI to go big on exaggeration, and drew only realistic tweaks from Gemini. OpenAI’s flair shone on creative prompts, such as a perfect 1970s vinyl cover or cyberpunk art, where it got the aspect ratio right and fully reinvented the image. Gemini’s outputs could turn creepy (e.g., face portals), and Seedream excelled at Asian-inspired styles but lagged on others. Ultimately, LateNiteSoft concluded that no single model fits every job: OpenAI for creative overhauls, Gemini for realism, and Seedream as a jack-of-all-trades with a better price-performance ratio.
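LateNiteSoft mentions experimenting with a prompt classifier that routes artistic tasks to OpenAI and realistic ones to Gemini, but does not say how it is built. The Python sketch below illustrates only the routing idea with a deliberately simple keyword classifier, assuming Seedream as the fallback for prompts that match neither category, in keeping with its jack-of-all-trades role. The keyword lists and the `classify`/`route` functions are hypothetical; a production classifier would more likely be a trained model or an LLM call.

```python
import re
from typing import Dict, FrozenSet

# Hypothetical keyword lists chosen for illustration only.
ARTISTIC_HINTS: FrozenSet[str] = frozenset({
    "style", "painting", "van", "gogh", "ghibli", "anime", "cyberpunk",
    "cartoon", "caricature", "poster", "cover", "sketch",
})
REALISTIC_HINTS: FrozenSet[str] = frozenset({
    "realistic", "photorealistic", "thermal", "filter", "film", "lens",
    "natural", "subtle",
})

# Routing table following the article's conclusions: OpenAI for creative
# overhauls, Gemini for realism, Seedream as the all-rounder fallback.
ROUTES: Dict[str, str] = {
    "artistic": "openai",
    "realistic": "gemini",
    "general": "seedream",
}


def classify(prompt: str) -> str:
    """Label a prompt by counting keyword hits; ties count as 'general'."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    artistic = len(words & ARTISTIC_HINTS)
    realistic = len(words & REALISTIC_HINTS)
    if artistic > realistic:
        return "artistic"
    if realistic > artistic:
        return "realistic"
    return "general"


def route(prompt: str) -> str:
    """Return the name of the model family that should handle `prompt`."""
    return ROUTES[classify(prompt)]


if __name__ == "__main__":
    print(route("Turn this into a Studio Ghibli style painting"))  # openai
    print(route("Apply a realistic thermal camera filter"))        # gemini
    print(route("Make my cat look happier"))                       # seedream
```

Sending ties and unmatched prompts to Seedream is a design choice in this sketch: an ambiguous request lands on the cheaper, more balanced model rather than the slowest one.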

Broadly, the study illuminates AI’s progress as a creative tool while spotlighting model biases, environmental costs, and the need for ethical monetization. LateNiteSoft’s CreditProxy pushes back against unsustainable pricing, and its prompt-classifier experiments, which route artistic tasks to OpenAI and realistic ones to Gemini, could automate model selection. Conducted on October 8 using gpt-image-1, gemini-2.5-flash-image, and seedream-4-0-250828 over a Japanese fiber connection, these tests offer valuable guidance. As AI becomes more deeply integrated into mobile apps, hands-on findings like LateNiteSoft’s will help shape a more user-centric future. What do you think? Ready to try these models yourself?