While the rest of Big Tech drafts roadmaps for the future, Elon Musk’s Colossus 2 is already online, consuming city-sized energy to train the next generation of artificial intelligence.

- Operational Supremacy: xAI has officially brought Colossus 2 online, becoming the first entity in history to operate a 1-gigawatt AI training cluster, effectively beating competitors like OpenAI and Google to this infrastructure milestone by nearly two years.

- Unmatched Scale: The facility currently houses approximately 555,000 GPUs and consumes more electricity than the peak demand of San Francisco, with immediate plans to expand capacity to 1.5 gigawatts by April 2026.

- Speed Over Bureaucracy: Reflecting a strategy of “execution at scale,” xAI used gas turbines and Tesla Megapacks to bypass traditional grid bottlenecks, allowing it to train next-generation models like Grok 5 while others are still in the planning phase.

In the high-stakes race for artificial intelligence supremacy, infrastructure is destiny. Elon Musk’s xAI has just claimed a decisive lead by bringing Colossus 2 online, a supercomputing cluster that has officially broken the gigawatt barrier. This achievement marks a pivotal moment in the history of computing; xAI is now the first company to operate a gigawatt-scale AI training cluster, a feat that provides the raw horsepower necessary to develop the next generation of “frontier” models.

To visualize the sheer magnitude of this engineering behemoth, one must look at energy consumption on a municipal scale. A single gigawatt of power is roughly sufficient to supply electricity to 750,000 to 800,000 homes. In practical terms, xAI’s new facility consumes more power than the peak electricity demand of the entire city of San Francisco. While this massive energy draw raises questions about sustainability, it provides xAI with a computational density that is currently unmatched anywhere else on Earth.
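The homes-per-gigawatt figure can be sanity-checked with simple division. The sketch below assumes an average continuous household draw of 1.25 to 1.33 kW; that range is an illustrative assumption chosen for this check, not a number from xAI or any utility, and the function name `homes_powered` is hypothetical.

```python
# Back-of-the-envelope check of the "homes per gigawatt" claim.
# ASSUMPTION: the average household draws 1.25-1.33 kW continuously.
# These values are illustrative and do not come from the article.

KW_PER_GW = 1_000_000  # 1 gigawatt = 1,000,000 kilowatts


def homes_powered(capacity_gw: float, avg_home_kw: float) -> int:
    """Return how many homes a given capacity could supply on average."""
    return int(capacity_gw * KW_PER_GW / avg_home_kw)


# A higher per-home draw yields fewer homes, so 1.33 kW gives the low end.
low_estimate = homes_powered(1.0, 1.33)
high_estimate = homes_powered(1.0, 1.25)

print(f"1 GW supplies roughly {low_estimate:,} to {high_estimate:,} homes")
```

Under those assumed household figures, the result falls inside the 750,000 to 800,000 range quoted above. A different assumed draw per home would shift the estimate proportionally.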

Leaving Competitors in the Rearview Mirror

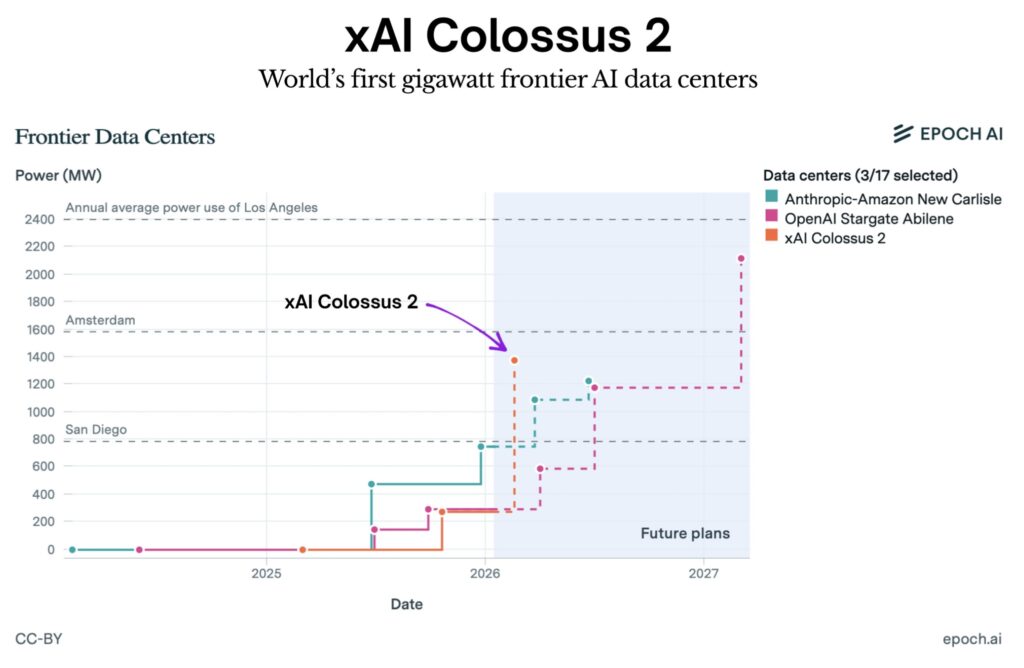

The activation of Colossus 2 highlights a stark divergence in strategy between xAI and its rivals. The prevailing narrative in Silicon Valley has been that gigawatt-scale clusters were a distant goal, with industry giants like OpenAI (via the “Stargate” project), Microsoft, and Google aiming to bring similar facilities online between 2026 and 2027. By activating this cluster now, xAI has effectively pulled the future into the present.

Independent analysis from Epoch AI underscores this disparity. While competitors remain in the planning and drafting stages, navigating complex corporate meetings and supply chain logistics, xAI is already operating at a level others hope to reach years down the line. The strategy is classic Musk: move faster than everyone else and scale before the competition finishes its paperwork. The company’s execution speed has been described as “unreal,” with the original Colossus 1 facility going from dirt to fully operational in just 122 days.

The Hardware Advantage: Bigger is Smarter

The driving force behind this massive infrastructure investment is the “Scaling Law” of artificial intelligence: the empirical observation that a model’s capabilities improve predictably as the amount of compute used to train it increases. Inside Colossus 2 sits an arsenal of approximately 555,000 specialized GPUs. To put this in perspective, estimates suggest that Meta operates roughly 150,000 GPUs, while Microsoft has around 100,000 dedicated to similar tasks. By GPU count alone, that puts xAI’s cluster at nearly four times the size of the next-largest fleet cited here.

This hardware advantage is being deployed immediately to train Grok, xAI’s large language model. With $20 billion recently raised to accelerate this specific infrastructure, the cluster will support the development of Grok 4.20 and the highly anticipated Grok 5. By possessing the largest computer, xAI can iterate faster, test complex reasoning capabilities, and deploy improved versions of its software to X’s hundreds of millions of users long before other labs can catch up.

Powering the Future

Sustaining a gigawatt-scale facility presents enormous logistical challenges, particularly regarding energy. Colossus 2 is not just a computing marvel but an energy puzzle that xAI is solving in real time. To manage the massive load and mitigate strain on the public grid, the company is diversifying its energy resources by expanding its array of gas turbines and Tesla Megapack batteries. This allows the facility to maintain consistent operations without destabilizing local power infrastructure.

The growth shows no signs of slowing down. Musk has already announced that the facility will upgrade to 1.5 gigawatts by April 2026, eventually targeting a total of 2 gigawatts. This expansion is expected to add another 250,000 GPUs to the fleet, pushing the total count to roughly 805,000. As xAI continues to scale at this breakneck pace, the industry is left playing catch-up, watching as the threshold for state-of-the-art AI is redefined in real time.
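For readers who want to check the expansion math, here is a rough sketch using only the GPU and power figures quoted in this article. It assumes the 1.5-gigawatt stage corresponds to the full post-expansion GPU count, which the article does not state explicitly. The per-GPU number is a whole-facility average (cooling, networking, and other overhead included), not a chip specification, and the function name is hypothetical.

```python
# Rough expansion arithmetic from the figures quoted in the article.
# ASSUMPTION: the 1.5 GW stage powers the full post-expansion fleet.
# The per-GPU result is a facility-wide average, not a chip power rating.

CURRENT_GPUS = 555_000      # quoted current GPU count
ADDITIONAL_GPUS = 250_000   # quoted planned addition
CURRENT_POWER_GW = 1.0      # quoted current capacity
EXPANDED_POWER_GW = 1.5     # quoted April 2026 target

WATTS_PER_GW = 1_000_000_000


def facility_watts_per_gpu(power_gw: float, gpu_count: int) -> float:
    """Average facility power available per GPU, in watts."""
    return power_gw * WATTS_PER_GW / gpu_count


projected_gpus = CURRENT_GPUS + ADDITIONAL_GPUS
watts_now = facility_watts_per_gpu(CURRENT_POWER_GW, CURRENT_GPUS)
watts_expanded = facility_watts_per_gpu(EXPANDED_POWER_GW, projected_gpus)

print(f"Projected GPU count: {projected_gpus:,}")
print(f"Facility power per GPU today: {watts_now:,.0f} W")
print(f"Facility power per GPU after expansion: {watts_expanded:,.0f} W")
```

On these numbers the fleet lands just above 800,000 GPUs, and the facility-wide power budget works out to a little under 2 kilowatts per GPU both before and after the upgrade.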