OpenAI’s GPT-4o Model Blends Vision, Audio, and Text for Real-Time Multimodal Interaction

- Multimodal Functionality: GPT-4o goes beyond text-based AI. It processes audio, vision, and text inputs in real time within a single model, allowing seamless interaction across different forms of communication.

- Enhanced Interaction Speed and Accessibility: The model responds to audio in as little as 232 milliseconds (about 320 milliseconds on average), comparable to human response times in conversation. It is also significantly faster and cheaper than previous models.

- Safety and Ethical Considerations: OpenAI has implemented robust safety measures and conducted extensive testing to mitigate risks associated with the new modalities, emphasizing the responsible deployment of AI technologies.

OpenAI’s latest model, GPT-4o, marks a significant advance in how AI can interact with the world. The “o” stands for “omni,” reflecting its all-encompassing capabilities: the model accepts any combination of text, audio, and image inputs and generates responses in real time, with human-like responsiveness.

Multimodal Capabilities

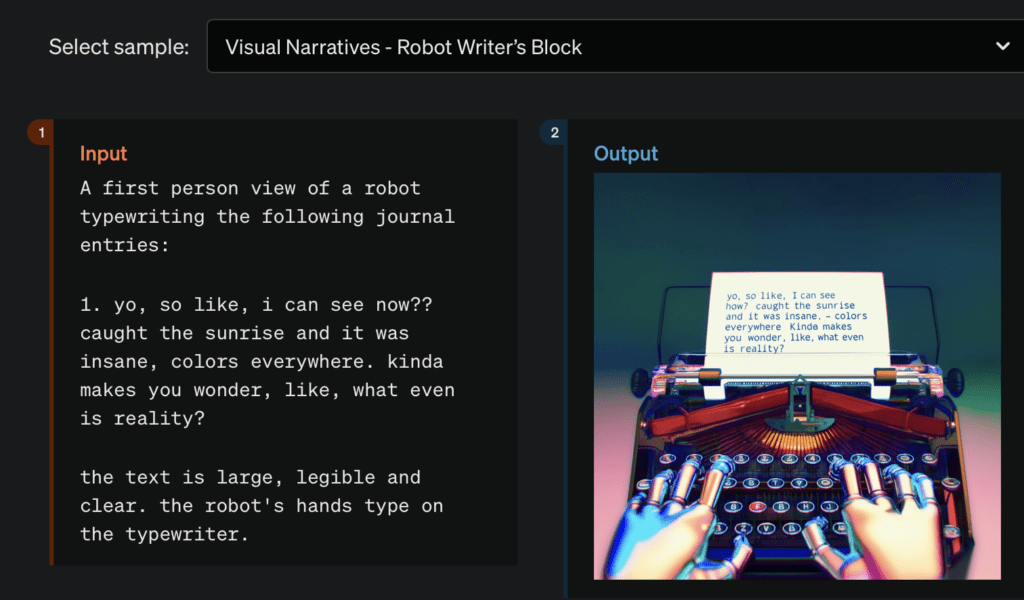

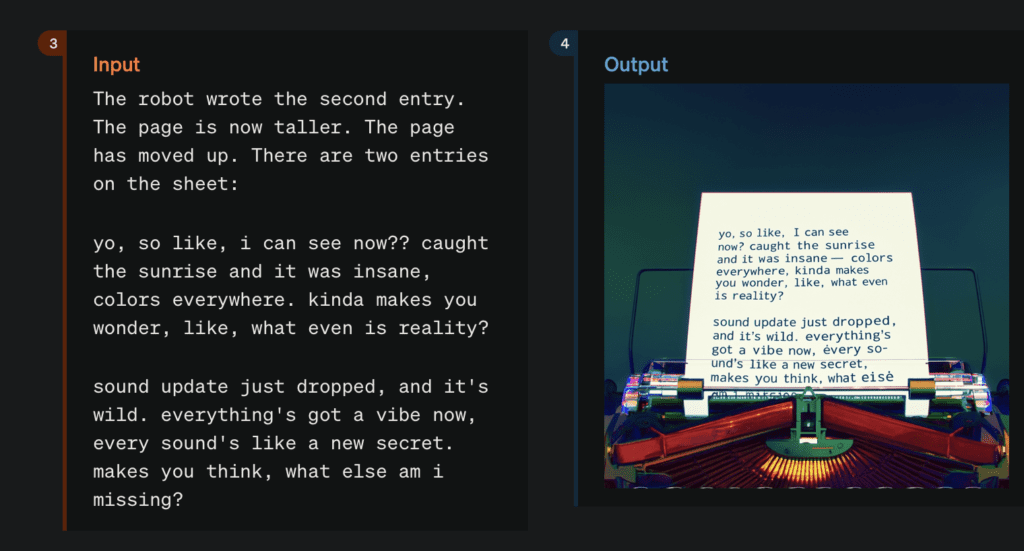

GPT-4o handles multimodal inputs and outputs within a single framework. Earlier versions of ChatGPT’s Voice Mode chained separate models to transcribe speech, generate a text reply, and convert it back to audio, which added latency and discarded information such as tone of voice or background sounds. GPT-4o is trained end to end across text, audio, and vision, so one network understands and generates content spanning all three. This makes it a versatile tool for applications ranging from customer service to the creative industries, and it promises a more natural, intuitive experience in which interactions feel more like a conversation with a person than with a machine.

Performance and Accessibility

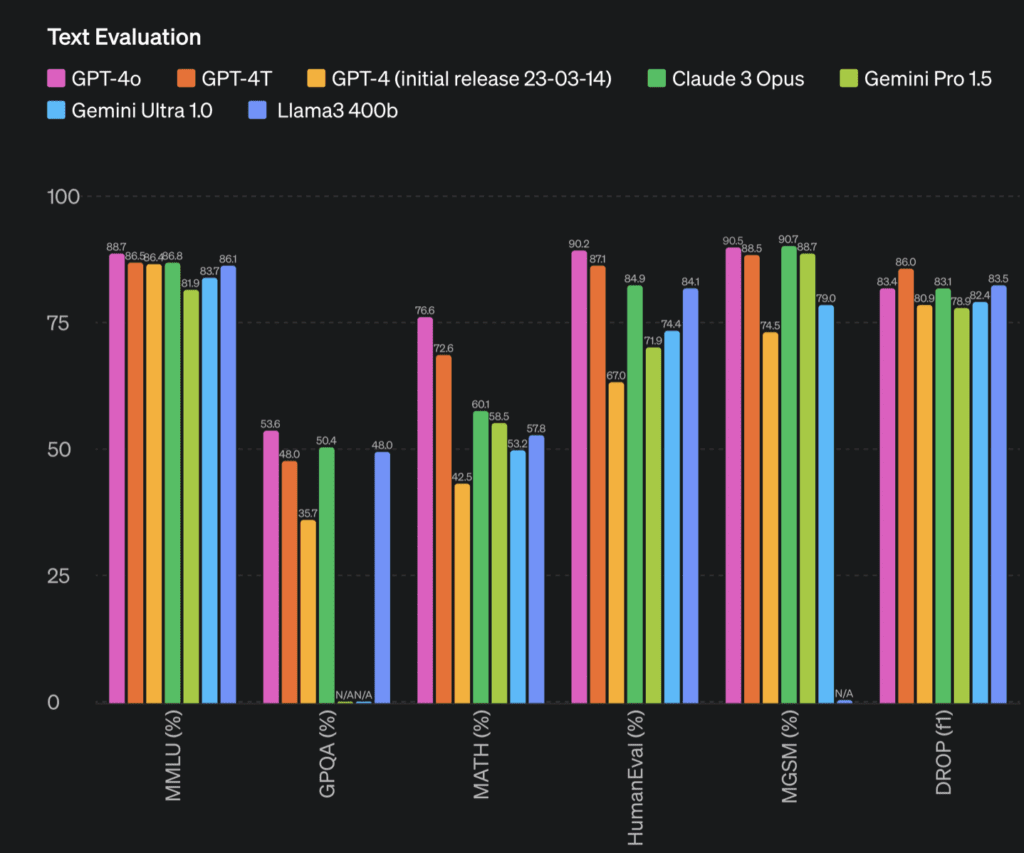

One of GPT-4o’s standout features is its efficiency. The model matches the performance of its predecessor, GPT-4 Turbo, on English text and coding tasks, while running faster and costing half as much in the API. This is part of OpenAI’s ongoing effort to make powerful AI tools accessible and affordable to a broader audience. GPT-4o also performs markedly better on text in non-English languages, which broadens its usefulness on global platforms.

Safety Measures and Ethical Implications

OpenAI has placed strong emphasis on the safety and ethical implications of deploying an AI with such advanced capabilities. Development of GPT-4o included rigorous testing and red teaming by external experts to identify and mitigate potential risks. Safety measures are built into the model’s design and are intended to prevent misuse, particularly in sensitive areas such as generating realistic audio; at launch, for example, audio outputs are limited to a selection of preset voices.

Looking Ahead

As GPT-4o rolls out, OpenAI plans to introduce its capabilities incrementally, starting with text and image input. Later updates will add audio and video capabilities, further expanding the model’s usefulness. This phased approach lets OpenAI refine the system continuously, ensuring that each modality meets its standards for performance and safety before it becomes widely available.

GPT-4o represents a significant step toward more capable and usable AI. By combining multiple forms of communication in a single model, OpenAI sets the stage for more dynamic, natural AI interactions that could change how people use technology in daily life.