OpenAI Introduces a New, Affordable AI Model

- OpenAI launches GPT-4o mini, its most cost-efficient small model to date.

- The model supports text and vision in the API today, with support for text, image, video, and audio inputs and outputs planned for future updates.

- GPT-4o mini is significantly cheaper than GPT-3.5 Turbo, making AI more accessible for a broad range of applications.

OpenAI has announced the release of GPT-4o mini, a new model designed to make artificial intelligence more accessible and affordable. The release marks a significant step in OpenAI’s mission to democratize AI by lowering the cost of using advanced models. GPT-4o mini is priced at just 15 cents per million input tokens and 60 cents per million output tokens, more than 60% cheaper than GPT-3.5 Turbo, the company’s previous low-cost option.
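To make the per-token pricing concrete, the Python sketch below converts the launch prices quoted above into a cost estimate for a workload. The constants reflect launch pricing only and may change; `estimate_cost_usd` and the example workload are hypothetical illustrations, not part of any OpenAI SDK.

```python
# GPT-4o mini launch prices from the announcement, in US dollars per one
# million tokens. These are launch figures only; current prices may differ.
INPUT_USD_PER_MILLION = 0.15   # 15 cents per million input tokens
OUTPUT_USD_PER_MILLION = 0.60  # 60 cents per million output tokens


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: estimated charge in USD at launch pricing."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens * INPUT_USD_PER_MILLION
            + output_tokens * OUTPUT_USD_PER_MILLION) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests, each with a 2,000-token
    # prompt and a 500-token reply.
    requests = 10_000
    cost = estimate_cost_usd(requests * 2_000, requests * 500)
    print(f"${cost:.2f}")
```

For that hypothetical workload of 20 million input tokens and 5 million output tokens, the estimate comes to $6.00, split evenly between input and output because output tokens cost four times as much as input tokens.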

Expanding AI Accessibility

GPT-4o mini is a smaller, cost-efficient variant of OpenAI’s powerful GPT-4o model. It aims to broaden the scope of AI applications by lowering the financial barriers to entry. With its low cost and reduced latency, GPT-4o mini is ideal for a wide range of tasks, including applications that require chaining or parallelizing multiple model calls, passing large volumes of context to the model, and interacting with customers through real-time text responses.

Technical Capabilities

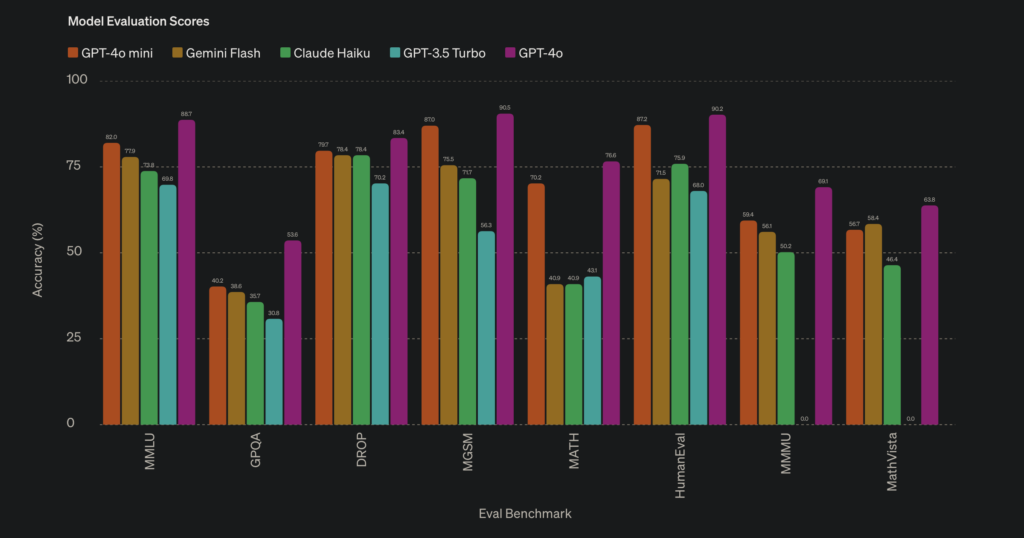

GPT-4o mini supports a context window of 128K tokens and up to 16K output tokens per request. It shares GPT-4o’s improved tokenizer, which makes handling non-English text more cost-efficient. According to OpenAI, the model outperforms other small models on benchmarks covering textual intelligence, multimodal reasoning, and math and coding proficiency. For instance, it scored 82% on the MMLU benchmark, ahead of competing small models such as Gemini Flash and Claude Haiku.
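Because a prompt and the model’s reply must fit within these limits together, a quick budget check before sending a request shows how the two numbers interact. This is a minimal sketch under stated assumptions: it takes "128K" and "16K" to mean 128,000 and 16,384 tokens, assumes input and output share the context window, and expects token counts to come from a tokenizer such as OpenAI’s `tiktoken` library. `fits_in_context` is a hypothetical helper.

```python
# Assumed limits: "128K" context window and "16K" output tokens, taken here
# as 128,000 and 16,384 tokens. Verify against OpenAI's model documentation.
CONTEXT_WINDOW_TOKENS = 128_000
MAX_OUTPUT_TOKENS = 16_384


def fits_in_context(prompt_tokens: int, max_output_tokens: int) -> bool:
    """Hypothetical helper: does the prompt plus the reserved reply budget fit?"""
    if prompt_tokens < 0 or max_output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # The reply budget alone may not exceed the per-request output cap.
    if max_output_tokens > MAX_OUTPUT_TOKENS:
        return False
    # Assumption: input and output tokens share one context window.
    return prompt_tokens + max_output_tokens <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    print(fits_in_context(100_000, 16_000))  # 116,000 tokens in total
    print(fits_in_context(120_000, 16_000))  # 136,000 tokens in total
```

Under these assumptions, a 100,000-token prompt still leaves room for a near-maximum reply, while a 120,000-token prompt does not.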

Safety and Reliability

OpenAI says it built safety measures into GPT-4o mini from the ground up. During pre-training, it filters out information it does not want the model to learn from or output, and it aligns the model’s behavior with OpenAI’s policies using reinforcement learning from human feedback (RLHF). External experts tested the model to identify potential risks, which OpenAI says it has addressed.

Availability and Future Developments

GPT-4o mini is now available in the Assistants API, Chat Completions API, and Batch API. In ChatGPT, Free, Plus, and Team users can access it starting today, and Enterprise users will gain access next week. This rollout reflects OpenAI’s commitment to making the benefits of AI widely available.
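For developers, calling the model through the Chat Completions API amounts to sending a JSON body that names `gpt-4o-mini` as the model. The sketch below builds such a request with Python’s standard library but does not send it; the helper name `build_chat_request` is hypothetical, and OpenAI’s official `openai` Python package offers a higher-level client for the same endpoint.

```python
import json
import os
import urllib.request

# Chat Completions endpoint; "gpt-4o-mini" is the model name used in the API.
CHAT_COMPLETIONS_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a GPT-4o mini request."""
    body = {
        "model": "gpt-4o-mini",
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        CHAT_COMPLETIONS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("Summarize GPT-4o mini in one sentence.",
                             os.environ.get("OPENAI_API_KEY", ""))
    # Sending requires a valid API key and network access:
    # with urllib.request.urlopen(req) as resp:
    #     reply = json.load(resp)
    #     print(reply["choices"][0]["message"]["content"])
    print(req.get_method(), req.full_url)
```

Keeping request construction separate from sending makes the payload easy to inspect or reuse, for example as the `body` of an entry in a Batch API input file.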

Looking ahead, OpenAI plans to add support for image, video, and audio inputs and outputs to GPT-4o mini, further extending its multimodal abilities. This evolution aligns with OpenAI’s vision of creating AI models that can seamlessly interact with various types of media, making them more versatile and useful in diverse applications.

Implications for the AI Industry

The introduction of GPT-4o mini represents a significant advancement in the AI industry. By offering a powerful yet affordable model, OpenAI is poised to accelerate the adoption of AI across different sectors. Developers can now build and scale AI applications more efficiently and at a lower cost, potentially leading to a surge in innovative solutions powered by AI.

Moreover, GPT-4o mini’s emphasis on safety and ethical considerations sets a new standard for AI development. As AI continues to integrate into daily life, ensuring that these models operate securely and responsibly becomes increasingly important. OpenAI’s proactive approach in this regard is commendable and likely to influence industry practices.

GPT-4o mini not only advances cost-efficient intelligence but also underscores OpenAI’s dedication to making AI technology accessible, safe, and effective for a wide audience. The model’s release marks a promising step towards a future where AI is seamlessly integrated into various aspects of our digital experiences, driving innovation and improving efficiency across multiple domains.