Bridging the Embodiment Gap for Dexterous Robot Manipulation

- DexUMI is a framework that uses the human hand as a universal interface for transferring dexterous manipulation skills to different robot hands, achieving an average success rate of 86% across four tasks on two robot hands.

- Through hardware adaptation (a wearable hand exoskeleton) and software adaptation (high-fidelity video inpainting), DexUMI minimizes the kinematic and visual gaps between human and robotic hands.

- DexUMI still faces limitations in hardware design, software precision, and the design of existing robot hands, all of which point to directions for future work.

Robotics has long aspired to replicate the dexterity of the human hand, which performs a wide range of tasks with precision and ease. Transferring these skills to dexterous robot hands has proven difficult because of the substantial embodiment gap: differences in kinematics, tactile feedback, visual appearance, and contact surface shapes. DexUMI is a framework that addresses these disparities by treating the human hand as the natural, universal interface for robotic manipulation. It combines hardware and software adaptations to narrow the gap between human and robot hands and to make skill transfer work across different robotic platforms.

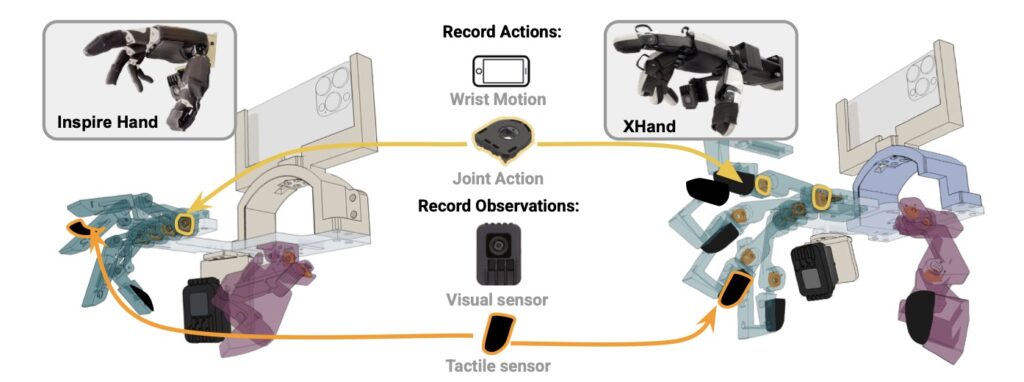

At its core, DexUMI tackles the embodiment gap on two fronts. On the hardware side, it introduces a wearable hand exoskeleton whose design is optimized for a specific target robot hand. The user wears the exoskeleton and interacts with objects directly during data collection, which provides the intuitive haptic feedback that teleoperation systems often lack. Because the exoskeleton constrains human motion to the feasible kinematics of the target robot hand, the spatial mismatches that trouble traditional methods are avoided and the recorded actions transfer directly to the robot. The exoskeleton also measures joint angles with encoders rather than relying on less accurate visual tracking, and it carries tactile sensors so that the recorded tactile signals mirror what the robot hand would sense. In the reported experiments, this data collection process was 3.2 times more efficient than teleoperation.
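
To make the encoder-to-robot step concrete, here is a minimal sketch of how a raw encoder reading might be turned into a motor command for one joint. It assumes a simple linear mapping and clamps the angle to the robot's joint limits; the `JointSpec` fields, the encoder resolution, and the motor range are all hypothetical values chosen for illustration, not DexUMI's actual calibration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class JointSpec:
    """Hypothetical per-joint calibration; every value is illustrative."""
    counts_per_rev: int  # encoder counts per full revolution (assumed)
    zero_count: int      # encoder reading at the joint's zero pose (assumed)
    min_rad: float       # lower joint limit of the robot hand (assumed)
    max_rad: float       # upper joint limit of the robot hand (assumed)
    motor_min: int       # motor command at min_rad (assumed)
    motor_max: int       # motor command at max_rad (assumed)


def encoder_to_angle(count: int, spec: JointSpec) -> float:
    """Convert a raw encoder count to a joint angle in radians."""
    return (count - spec.zero_count) * 2.0 * math.pi / spec.counts_per_rev


def angle_to_motor(angle: float, spec: JointSpec) -> int:
    """Clamp the angle to the joint limits, then map it linearly to a motor command."""
    clamped = min(max(angle, spec.min_rad), spec.max_rad)
    frac = (clamped - spec.min_rad) / (spec.max_rad - spec.min_rad)
    return round(spec.motor_min + frac * (spec.motor_max - spec.motor_min))


# Example: a 12-bit encoder on a joint that sweeps 0 to 90 degrees.
SPEC = JointSpec(counts_per_rev=4096, zero_count=0,
                 min_rad=0.0, max_rad=math.pi / 2,
                 motor_min=0, motor_max=1000)
command = angle_to_motor(encoder_to_angle(512, SPEC), SPEC)
```

A real hand would likely need a per-joint calibration fitted from data rather than a fixed linear map, especially for underactuated fingers where one motor drives several coupled joints.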

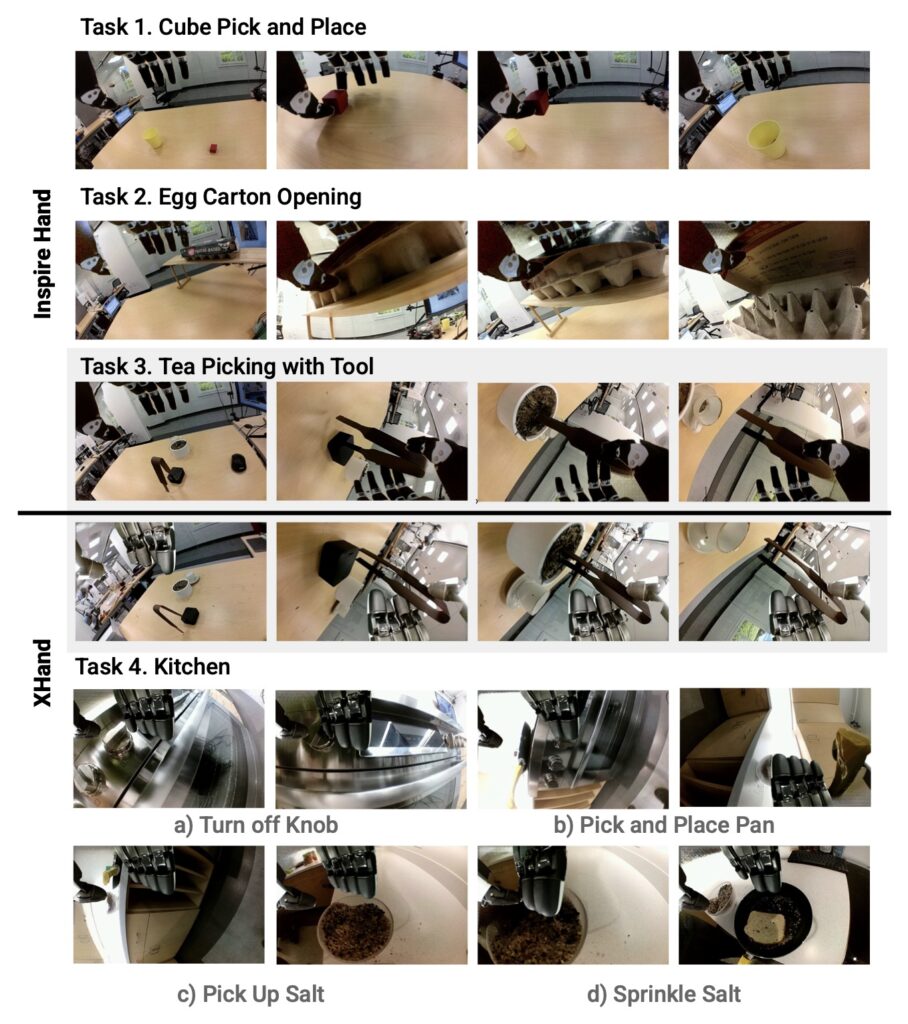

Complementing the hardware, DexUMI’s software adaptation bridges the visual observation gap. A data processing pipeline segments the human hand and exoskeleton out of each demonstration video and replaces them with high-fidelity images of the corresponding robot hand. This inpainting step keeps the training data visually consistent with what the robot's camera sees at deployment, despite the stark visual differences between human and robotic hands. The result is a dataset in which the robot effectively learns from human demonstrations as if it were observing its own actions, a critical step in effective skill transfer.
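
The replacement step can be pictured as per-pixel mask compositing. The sketch below is a simplification under stated assumptions: a static `background_plate` stands in for a real video inpainting model, images are plain nested lists of RGB tuples, and the function and parameter names are hypothetical rather than taken from DexUMI's code.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
Mask = List[List[bool]]


def replace_hand(frame: Image, hand_mask: Mask, background_plate: Image,
                 robot_frame: Image, robot_mask: Mask) -> Image:
    """Swap the human hand and exoskeleton for the robot hand, pixel by pixel.

    hand_mask marks hand/exoskeleton pixels in the demonstration frame;
    robot_mask marks robot-hand pixels in the matching robot frame.
    All inputs are assumed to share the same height and width.
    """
    out: Image = []
    for y, row in enumerate(frame):
        out_row: List[Pixel] = []
        for x, pixel in enumerate(row):
            if robot_mask[y][x]:
                # Robot hand goes on top of everything else.
                out_row.append(robot_frame[y][x])
            elif hand_mask[y][x]:
                # Human hand removed: fall back to the background (assumed static here).
                out_row.append(background_plate[y][x])
            else:
                # Untouched scene pixel.
                out_row.append(pixel)
        out.append(out_row)
    return out
```

This version always draws the robot hand in front, so it ignores cases where an object should occlude the hand; a production pipeline would need to handle occlusion and lighting explicitly.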

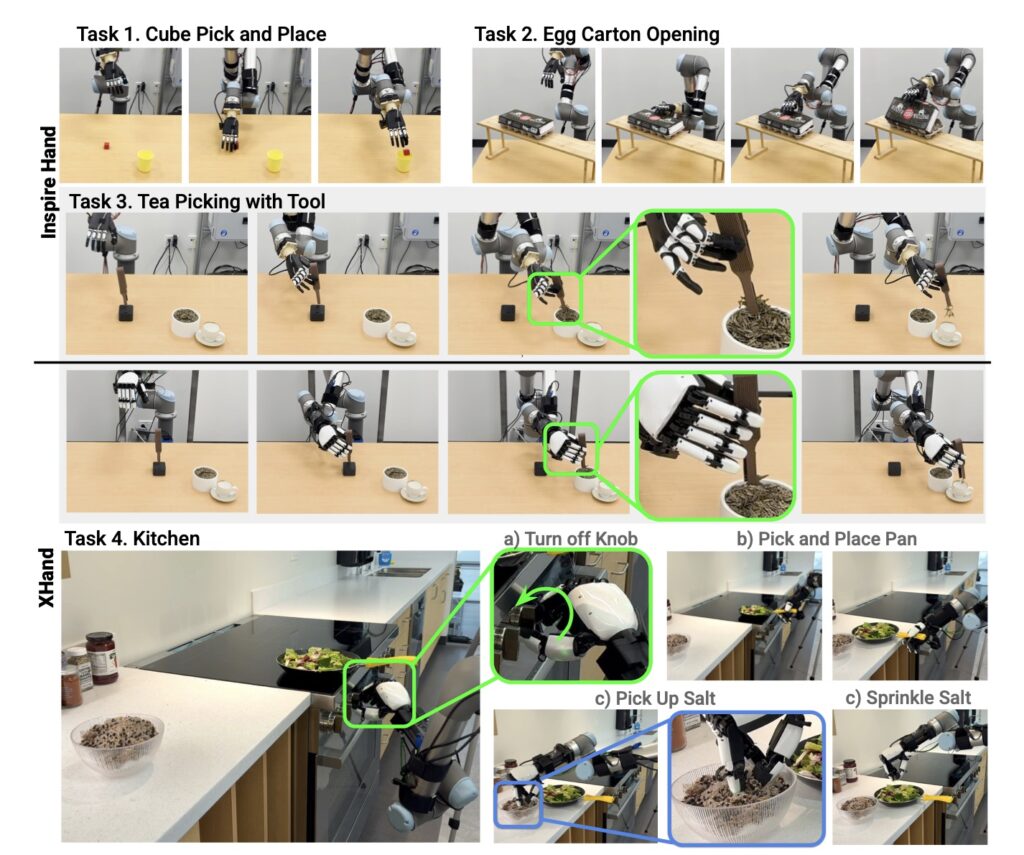

DexUMI was evaluated in real-world experiments on two dexterous robot hands: the 6-DoF Inspire Hand and the 12-DoF XHand. Across four tasks, including complex, long-horizon tasks that require multi-finger coordination, it achieved an average success rate of 86%. These results suggest that the approach generalizes across hardware designs, from underactuated to fully actuated hands, despite the engineering trade-offs each design makes in degrees of freedom, motor ranges, and actuation mechanisms. The tasks ranged from closing tweezers with fine-grained precision to handling intricate multi-contact scenarios, showing that human dexterity can be transferred to quite different robotic systems.

However, the journey to perfecting this framework is not without its hurdles. Hardware adaptation challenges include the need for robot-specific exoskeleton tuning to ensure wearability, which currently requires manual optimization. Future directions could involve fully automated design processes or the integration of generative models to streamline this step. Wearability itself remains a concern, as users sometimes face limitations in finger movement due to material constraints and 3D printing strength issues, suggesting a potential shift towards softer materials like TPU for enhanced comfort. Tactile sensor reliability also poses a problem, with current resistive and electromagnetic sensors showing sensitivity to attachment methods and pressure-induced drift, particularly under the greater force exerted by human hands. Exploring alternative sensor technologies, such as vision-based or capacitive options, could offer a solution.

On the software side, inpainting quality is the main limitation: the pipeline struggles to reproduce illumination effects and can leave blurred areas. The current reliance on real-world robot hardware for image data could be reduced by developing generative models that synthesize images of robot hand poses directly from motor values. DexUMI also requires a fixed camera position, which limits flexibility, though training image generation models to account for varying camera angles could expand its applicability.

Beyond these adaptations, the existing design of commercial robot hands introduces further complexities. Issues like backlash and friction reduce precision in motor movements, leading to discrepancies in fingertip positioning that affect action mapping and inpainting accuracy. Size differences between human and robot hands can also complicate wearability, especially when a robot hand is significantly larger, making certain joint configurations unattainable. An intriguing future direction might involve a reverse design paradigm, where a comfortable and operable exoskeleton is designed first, serving as the blueprint for the robot hand itself, thus prioritizing human ergonomics from the outset.

Despite these challenges, DexUMI marks a meaningful step forward in how dexterous skills are transferred from human to machine. By using the human hand as the manipulation interface, it achieves high success rates on real robot hands and also raises questions about the future of robot design and human-robot interaction. As researchers refine hardware wearability, improve software fidelity, and address the limitations of current robotic hardware, frameworks like DexUMI could bring robots closer to the intricate capabilities of the human hand and open up applications that are only beginning to be explored.