Human-Driven Perturbations Unlock Efficiency and Robustness in Robotic Imitation Learning

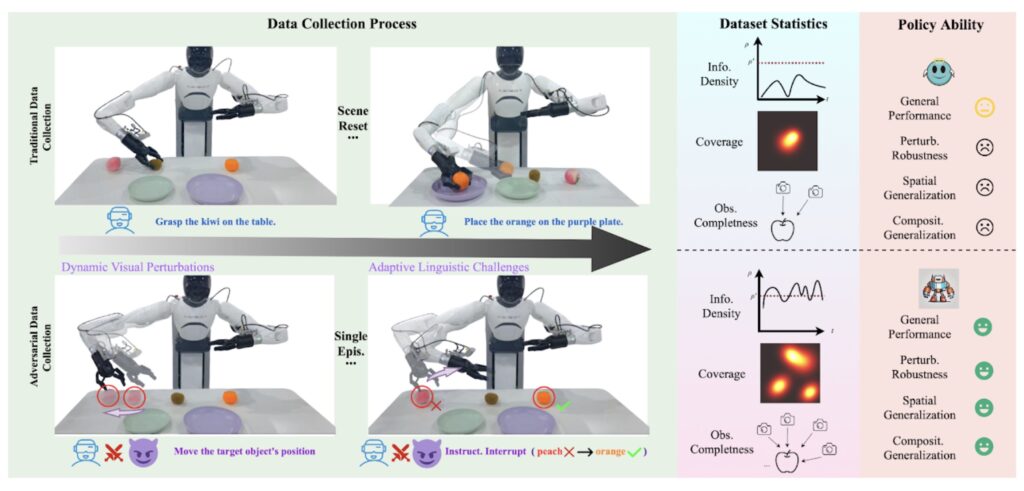

- Adversarial Data Collection (ADC) introduces a Human-in-the-Loop framework that maximizes the information density of robotic demonstrations, reducing reliance on large datasets while improving task performance.

- By dynamically altering object states, environmental conditions, and linguistic commands during demonstrations, ADC compresses diverse failure-recovery behaviors and task variations into a small number of demonstrations, improving generalization and robustness.

- Models trained on just 20% of the ADC-collected data outperform models trained on a full dataset gathered the traditional way, suggesting that strategic data acquisition is key to scalable, real-world robotic learning.

The field of robotic manipulation is at a crossroads. While advancements in artificial intelligence (AI) and machine learning have unlocked unprecedented capabilities, the challenge of data efficiency remains a significant bottleneck. Real-world robotic tasks, such as grasping objects or assembling components, require vast amounts of high-quality data to ensure robust performance. However, collecting this data is labor-intensive, costly, and often impractical to scale. Enter Adversarial Data Collection (ADC), a transformative approach that redefines how robots learn by prioritizing quality over quantity.

The Data Dilemma in Robotic Learning

Traditional robotic imitation learning relies on static demonstrations, where a human operator performs a task while the robot passively records the actions. These demonstrations are then sliced into sequential units, each containing visual inputs, language instructions, and corresponding actions. While this approach has been the backbone of robotic learning, it suffers from inherent inefficiencies. Visual frames are often redundant, language instructions repetitive, and actions highly correlated, diluting the informational value of each demonstration.
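To make the redundancy argument concrete, here is a minimal Python sketch, assuming a toy representation in which each camera frame is a small tuple standing in for an image. The `Step` record, `slice_demonstration`, and `distinct_fraction` are hypothetical names for illustration only, not part of any ADC codebase, and the distinct-value ratio is just a crude proxy for informational density.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


# Hypothetical per-step unit: (visual input, language instruction, action).
@dataclass(frozen=True)
class Step:
    frame: Tuple[int, ...]      # toy stand-in for an image observation
    instruction: str            # language command active at this step
    action: Tuple[float, ...]   # e.g., an end-effector displacement


def slice_demonstration(frames: Sequence[Tuple[int, ...]],
                        instructions: Sequence[str],
                        actions: Sequence[Tuple[float, ...]]) -> List[Step]:
    """Zip time-synchronized streams into sequential training units."""
    if not (len(frames) == len(instructions) == len(actions)):
        raise ValueError("frames, instructions, and actions must have equal length")
    return [Step(f, i, a) for f, i, a in zip(frames, instructions, actions)]


def distinct_fraction(steps: List[Step]) -> Dict[str, float]:
    """Crude redundancy proxy: share of distinct values in each modality.
    Values near 0 mean the demonstration mostly repeats itself."""
    if not steps:
        raise ValueError("empty demonstration")
    n = len(steps)
    return {
        "frames": len({s.frame for s in steps}) / n,
        "instructions": len({s.instruction for s in steps}) / n,
        "actions": len({s.action for s in steps}) / n,
    }


if __name__ == "__main__":
    # A static 10-step demo: the scene barely changes, the command never does.
    frames = [(0,)] * 5 + [(1,)] * 5
    instructions = ["grasp the orange"] * 10
    actions = [(0.0, 0.0, -0.01)] * 10
    print(distinct_fraction(slice_demonstration(frames, instructions, actions)))
    # {'frames': 0.2, 'instructions': 0.1, 'actions': 0.1}
```

Real camera frames almost never repeat pixel-for-pixel, so a practical measure would compare visual embeddings under a similarity threshold; exact matching simply keeps the sketch short.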

Moreover, real-world complexity (varying object configurations, lighting conditions, and task constraints) demands highly diverse data to ensure generalization. Synthetic data, though scalable, falls short because of idealized physics and kinematics, creating a domain gap that hinders real-world application. Even with low-cost teleoperation systems, minor environmental changes require costly re-collection by human operators, making scalability a persistent challenge.

A Paradigm Shift

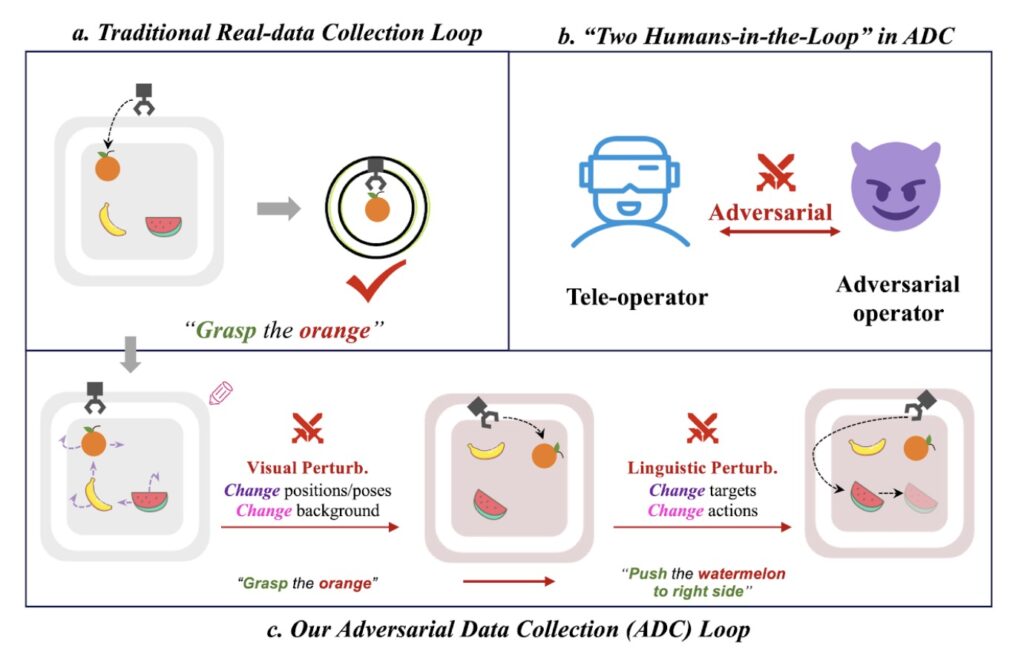

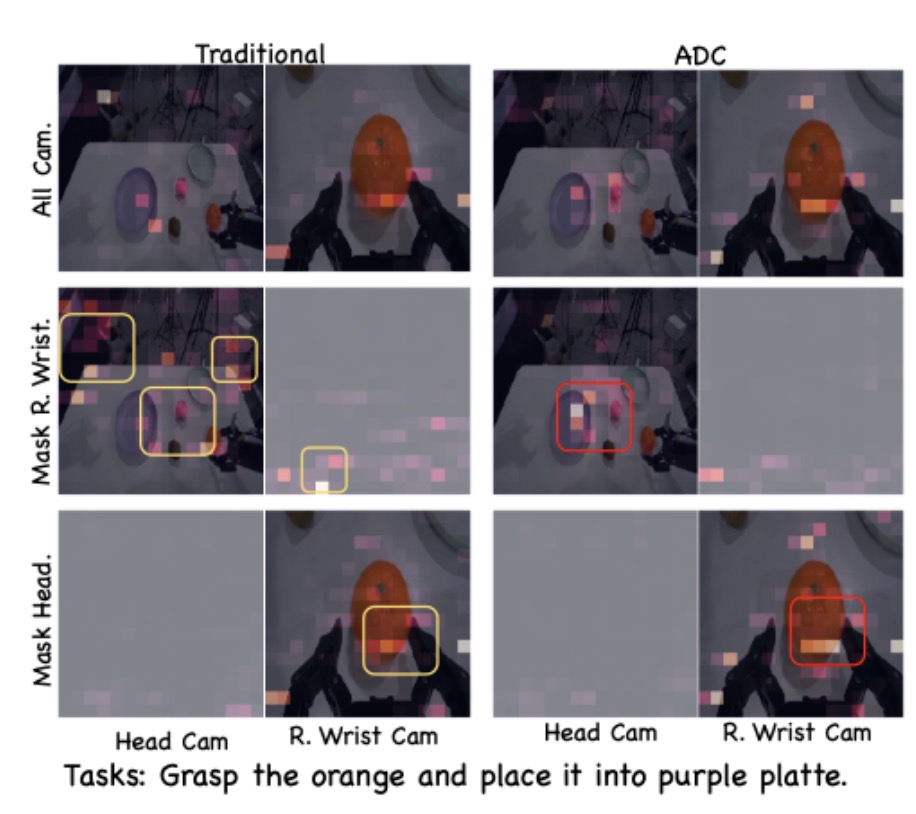

ADC addresses these limitations by introducing a Human-in-the-Loop (HiL) framework that leverages real-time, bidirectional interactions between humans and the environment. Unlike conventional methods, ADC adopts a collaborative perturbation paradigm. During a single demonstration, an adversarial operator dynamically alters object states, environmental conditions, and linguistic commands. Simultaneously, the teleoperator adapts their actions to overcome these evolving challenges.

This process compresses diverse failure-recovery behaviors, compositional task variations, and environmental perturbations into a small number of demonstrations. For example, a single ADC demonstration might include segments where objects are repositioned, lighting conditions change, or the language instruction is modified mid-task. Because these adversities are present in the training data, ADC-trained models acquire robustness to environmental uncertainty and to compositional variations in language.
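The collaborative perturbation loop can be pictured as an event timeline. In this minimal Python sketch, the adversarial operator's interventions are modeled as hypothetical `Perturbation` events (object moves, lighting changes, instruction edits) applied at fixed timesteps, and each recorded step captures the context active at that moment. The teleoperator's adaptive control is not simulated: steps within a short window after a perturbation are merely labeled as recovery steps. All names, labels, and the window size are assumptions for illustration, not the authors' implementation.

```python
from dataclasses import dataclass
from typing import Dict, List


# Hypothetical event emitted by the adversarial operator during collection.
@dataclass(frozen=True)
class Perturbation:
    t: int        # timestep at which the operator intervenes
    kind: str     # one of "instruction", "object", "lighting" (illustrative labels)
    value: str    # new command text, object placement, or lighting preset


# One recorded step: the context the teleoperator (and later the policy) sees.
@dataclass(frozen=True)
class RecordedStep:
    t: int
    instruction: str
    object_pose: str
    lighting: str
    recovering: bool  # True within `recovery_window` steps of a perturbation


def record_episode(base_instruction: str, length: int,
                   perturbations: List[Perturbation],
                   recovery_window: int = 3) -> List[RecordedStep]:
    """Replay a perturbation timeline and record the active context at each step."""
    state = {"instruction": base_instruction, "object": "initial", "lighting": "normal"}
    events: Dict[int, List[Perturbation]] = {}
    for p in perturbations:
        if p.kind not in state:
            raise ValueError(f"unknown perturbation kind: {p.kind}")
        events.setdefault(p.t, []).append(p)

    steps: List[RecordedStep] = []
    last_perturbation = None
    for t in range(length):
        for p in events.get(t, []):
            state[p.kind] = p.value  # the scene or command changes mid-episode
            last_perturbation = t
        recovering = last_perturbation is not None and t - last_perturbation < recovery_window
        steps.append(RecordedStep(t, state["instruction"], state["object"],
                                  state["lighting"], recovering))
    return steps


def coverage(steps: List[RecordedStep]) -> Dict[str, int]:
    """Count how many distinct conditions a single episode exposes."""
    return {
        "instructions": len({s.instruction for s in steps}),
        "object_poses": len({s.object_pose for s in steps}),
        "lighting": len({s.lighting for s in steps}),
        "contexts": len({(s.instruction, s.object_pose, s.lighting) for s in steps}),
        "recovery_steps": sum(s.recovering for s in steps),
    }


if __name__ == "__main__":
    timeline = [
        Perturbation(3, "object", "moved_left"),
        Perturbation(6, "lighting", "dim"),
        Perturbation(9, "instruction", "grasp the orange and place it in the basket"),
    ]
    episode = record_episode("grasp the orange", 12, timeline)
    print(coverage(episode))
    # {'instructions': 2, 'object_poses': 2, 'lighting': 2, 'contexts': 4, 'recovery_steps': 9}
```

With an empty timeline, `coverage` reports a single context and zero recovery steps; the perturbed episode covers four distinct (instruction, object pose, lighting) contexts in twelve steps, which is the sense in which each ADC demonstration carries more variation.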

The Power of Strategic Adversity

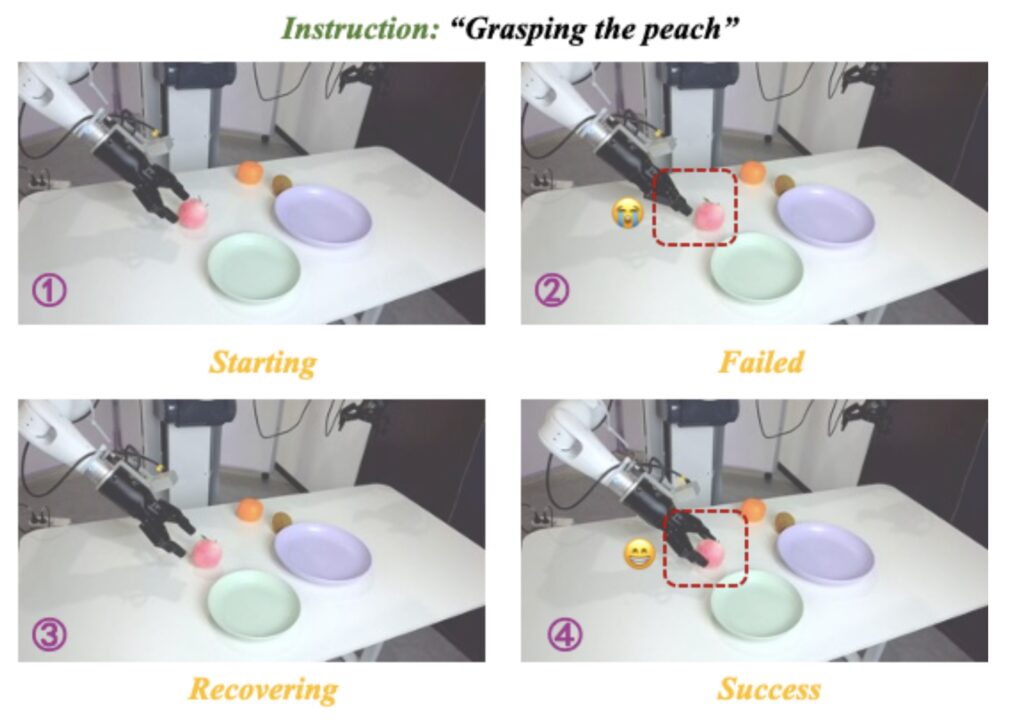

The reported results are striking. Models trained on just 20% of the demonstration volume collected through ADC significantly outperform models trained on full datasets collected the traditional way. These models show stronger compositional generalization to unseen task instructions, greater robustness to perceptual perturbations, and emergent error-recovery capabilities.

For instance, in a task where a robot is instructed to “grasp the orange,” an ADC-trained model can adapt when the orange is moved, the lighting changes, or the instruction is altered to “grasp the orange and place it in the basket.” According to the authors, these capabilities do not emerge from scale-driven data collection alone, which highlights the potential of ADC.

A New Benchmark for Robotic Learning

To further advance the field, the research team is curating a large-scale ADC-Robotics dataset comprising real-world manipulation tasks with adversarial perturbations. This benchmark, which will be open-sourced, aims to facilitate advances in robotic imitation learning by providing a rich, diverse, and high-quality dataset for researchers and developers.

ADC represents a paradigm shift in robotic learning, demonstrating that strategic adversity during data acquisition is fundamental to efficient and robust AI. By integrating real-time human adaptation with physics-constrained perturbations, ADC bridges the gap between data-centric learning paradigms and practical robotic deployment.

As the field of robotics continues to evolve, the insights from ADC compel a re-examination of robotic data ecosystems. Purposeful perturbation during acquisition, not just algorithmic innovation, may hold the key to generalizable embodied intelligence. In a world where data is often equated with quantity, ADC reminds us that quality, orchestrated through human-environment interplay, is the true cornerstone of progress.

Adversarial Data Collection is more than just a new method—it’s a philosophy that challenges the status quo of robotic learning. By embracing adversity and prioritizing the informational density of data, ADC paves the way for scalable, efficient, and robust robotic systems capable of thriving in the complexities of the real world. As we look to the future, ADC stands as a testament to the power of human collaboration and strategic innovation in shaping the next generation of AI.