How Neural Timing and Synchronization Could Unlock the Next Generation of Artificial Intelligence

- Biological brains rely on timing and synchronization for flexible, adaptive intelligence, but modern AI systems have largely discarded these principles for efficiency.

- The Continuous Thought Machine (CTM) introduces a new neural network architecture that explicitly incorporates neural timing, neuron-level models, and synchronization as core computational elements.

- By reintroducing temporal dynamics and internal recurrence, CTMs offer a promising path toward more general, reasoning-capable AI that more closely mirrors the workings of the human brain.

For decades, artificial intelligence has drawn inspiration from the human brain, yet the resemblance between artificial neural networks (NNs) and their biological counterparts has grown increasingly superficial. While brains are dynamic, ever-changing systems where the timing and synchronization of neural activity are fundamental to cognition, modern AI has favored static, simplified abstractions. This trade-off has enabled the remarkable scaling of deep learning, but at the cost of discarding the very properties that make biological intelligence so flexible and adaptable.

The Missing Ingredient: Time

Biological brains are the product of hundreds of millions of years of evolution, which has produced intricate mechanisms such as spike-timing-dependent plasticity (STDP) and neuronal oscillations. These mechanisms give rise to rich neural dynamics, where the precise timing of spikes and the synchronization of neural populations play a central role in learning, memory, and reasoning. In contrast, most modern neural networks reduce each neuron's activity to a single static value: the output of an activation function, which carries no information about when or in what pattern the neuron fired.

This simplification has been a double-edged sword. On one hand, it has enabled the training of massive models on vast datasets, leading to breakthroughs in image recognition, language understanding, and more. On the other, it has created a fundamental gap between the general, flexible intelligence of humans and the narrow, task-specific prowess of machines. The absence of temporal processing and synchronization in AI models may be a key reason why current systems struggle with tasks that require reasoning, adaptation, and creativity.

Introducing the Continuous Thought Machine

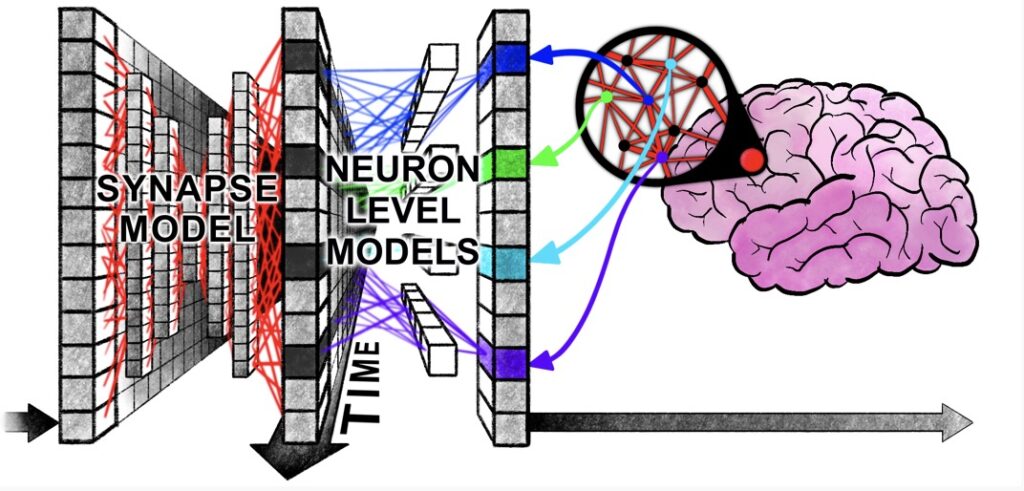

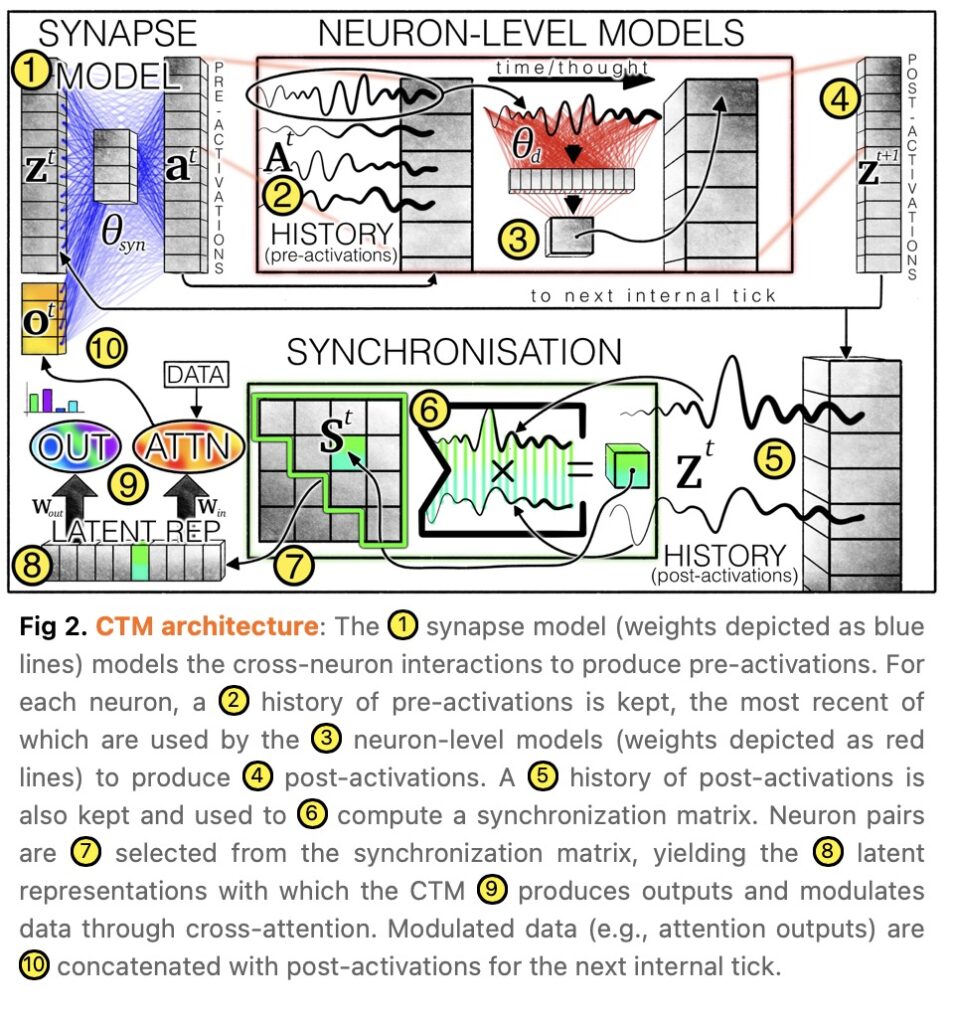

To address this gap, we present the Continuous Thought Machine (CTM), a novel neural network architecture that places neural timing and synchronization at the heart of computation. The CTM is built on three foundational ideas:

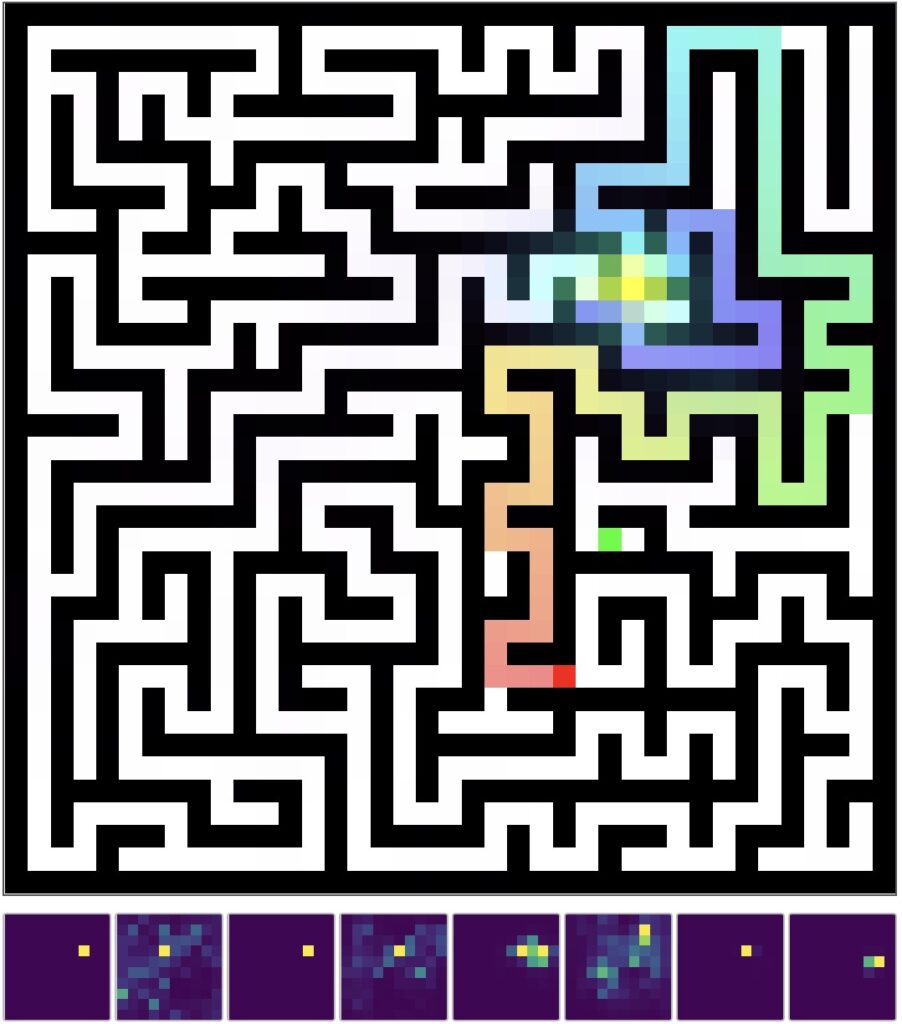

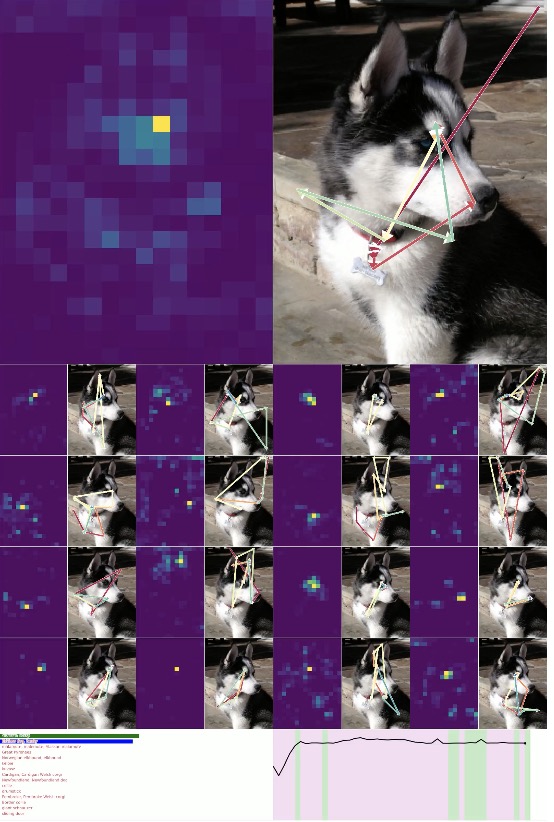

First, it introduces a decoupled internal dimension—a timeline along which thought can unfold within the artificial system. Unlike traditional recurrent neural networks (RNNs) or transformers, which process data step-by-step according to the sequence of the input, the CTM operates along its own self-generated sequence of “internal ticks.” This allows the model to iteratively build and refine its representations, even for static data like images or mazes.
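To make the idea of internal ticks concrete, here is a minimal sketch in plain Python. It is not the CTM implementation: the function name `run_internal_ticks`, the tanh update rule, and the random weights are all assumptions chosen for illustration. The point it shows is only that the loop runs over internal steps while the input `x` stays fixed.

```python
import math
import random


def run_internal_ticks(x, num_ticks=8, num_neurons=4, seed=0):
    """Refine a hidden state over `num_ticks` internal steps for one static input.

    Illustrative only: the update rule and weights are hypothetical,
    not taken from the CTM itself.
    """
    rng = random.Random(seed)
    # Random placeholder weights; a trained model would learn these.
    w_rec = [[rng.uniform(-0.5, 0.5) for _ in range(num_neurons)]
             for _ in range(num_neurons)]
    w_in = [[rng.uniform(-0.5, 0.5) for _ in range(len(x))]
            for _ in range(num_neurons)]

    z = [0.0] * num_neurons  # internal state before the first tick
    history = []
    for _ in range(num_ticks):
        # The loop index is an internal tick, not a position in the data:
        # the same static input x is seen on every tick.
        z = [
            math.tanh(
                sum(w_rec[i][j] * z[j] for j in range(num_neurons))
                + sum(w_in[i][k] * x[k] for k in range(len(x)))
            )
            for i in range(num_neurons)
        ]
        history.append(z)
    return history


# One static input, eight internal ticks of refinement.
history = run_internal_ticks([1.0, -0.5, 0.25], num_ticks=8)
```

The number of ticks is a free parameter here, which is what "decoupled" means in practice: the amount of internal computation does not depend on the length or structure of the input.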

Second, the CTM employs neuron-level models (NLMs). Instead of using a single, static activation function, each neuron in the CTM has its own internal weights and processes a history of incoming signals. This mid-level abstraction allows each neuron to develop its own temporal dynamics, much like real neurons in the brain.
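A neuron-level model can be sketched as a small object that keeps a short history of incoming signals and applies its own private weights to it. The class name `NeuronLevelModel`, the single weighted sum followed by tanh, and the history length of 5 are assumptions for illustration; the actual per-neuron model in the CTM may be more elaborate.

```python
import math
import random
from collections import deque


class NeuronLevelModel:
    """One neuron with private weights over a sliding history of inputs.

    Hypothetical sketch: a single weighted sum plus tanh stands in for
    whatever per-neuron model the CTM actually uses.
    """

    def __init__(self, history_len, rng):
        self.weights = [rng.uniform(-1.0, 1.0) for _ in range(history_len)]
        self.bias = rng.uniform(-1.0, 1.0)
        # Fixed-length buffer: appending drops the oldest signal.
        self.history = deque([0.0] * history_len, maxlen=history_len)

    def step(self, incoming):
        """Record one incoming signal and return the neuron's new activation."""
        self.history.append(incoming)
        total = self.bias + sum(w * h for w, h in zip(self.weights, self.history))
        return math.tanh(total)


rng = random.Random(0)
neurons = [NeuronLevelModel(history_len=5, rng=rng) for _ in range(3)]

# Every neuron receives the same signal but responds through its own weights.
signal = [0.1, 0.4, -0.2, 0.9, 0.0, -0.7]
outputs = [[n.step(s) for n in neurons] for s in signal]
```

Because each neuron owns its weights and its history, two neurons fed the same signal can respond with different temporal profiles, which is the property the paragraph above describes.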

Third, the CTM uses neural synchronization as a representation. Rather than relying solely on the magnitude of neural activations, the CTM tracks how pairs of neurons synchronize over time. This measure of synchronization becomes the latent representation with which the model observes, attends to, and predicts outcomes. In other words, the CTM makes decisions not just based on what is active, but on how activity is coordinated across the network.
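One simple way to quantify how pairs of neurons synchronize is the inner product of their activation traces across ticks. The sketch below uses that measure as an assumption; the text above does not specify the exact formula, and the function names `synchronization_matrix` and `upper_triangle` are illustrative.

```python
def synchronization_matrix(history):
    """Pairwise synchronization from a list of per-tick activations.

    history[t][i] is the activation of neuron i at tick t. Entry (i, j) of
    the result is the inner product of the traces of neurons i and j.
    (Assumed measure, chosen for simplicity.)
    """
    num_neurons = len(history[0])
    return [
        [sum(step[i] * step[j] for step in history) for j in range(num_neurons)]
        for i in range(num_neurons)
    ]


def upper_triangle(matrix):
    """Flatten the unique pairs (i <= j) of a symmetric matrix into a vector."""
    n = len(matrix)
    return [matrix[i][j] for i in range(n) for j in range(i, n)]


# Three neurons over three ticks: neurons 0 and 1 move together,
# neuron 2 moves in exact opposition to both.
history = [
    [1.0, 1.0, -1.0],
    [-1.0, -1.0, 1.0],
    [1.0, 1.0, -1.0],
]
sync = synchronization_matrix(history)
representation = upper_triangle(sync)
# sync[0][1] is 3.0 (in phase); sync[0][2] is -3.0 (out of phase)
```

Note that the sign of each entry depends on how two traces relate over time, not on whether either neuron is strongly active at any single tick. A vector like `representation` is the kind of quantity that could then be fed to downstream attention or output layers.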

Recurrence, Reasoning, and the Future of AI

The field of AI stands at a crossroads. While scaling up existing models has produced impressive results, each further gain demands more computation and more data, and the approach may be nearing its practical limits. There is a growing recognition that true reasoning, as opposed to simple input-output mapping, requires models that can accumulate and process information over time.

Recurrence, the ability to revisit and refine internal states, is re-emerging as a key ingredient for advanced AI. Modern text generation models, for example, feed their own intermediate generations back in as input, which acts as a form of recurrence and allows more complex reasoning at test time. Yet even these advances fall short of the rich temporal dynamics found in biological brains.

The CTM pushes this frontier further by decoupling internal recurrence from the data itself, enabling a form of sequential thought that is not tied to the structure of the input. Its neuron-level models and synchronization-based representations bring us closer to the way real brains process information—through the interplay of timing, history, and coordination.

Toward Truly Intelligent Machines

The Continuous Thought Machine is a step toward bridging the gap between the efficiency of modern AI and the temporal richness of biological brains. By reintroducing time as a central component of computation, CTMs offer a new paradigm for building machines that can reason, adapt, and perhaps one day rival the flexibility of human intelligence.

The journey is just beginning. Technical details, code, and demonstrations are available for those who wish to explore the inner workings of the CTM. As we continue to refine these models, the hope is that by embracing the lessons of biology—timing, synchronization, and dynamic computation—we can unlock the next generation of artificial intelligence.