Enhanced multi-modal reasoning and visual instruction-following capabilities with minimal additional parameters

- LLaMA-Adapter V2 unlocks more learnable parameters and introduces an early fusion strategy for better visual knowledge incorporation.

- A joint training paradigm optimizes disjoint groups of learnable parameters for improved multi-modal reasoning and instruction-following capabilities.

- Expert models like captioning and OCR systems are incorporated during inference to enhance image understanding without incurring training costs.

LLaMA-Adapter V2 is a parameter-efficient visual instruction model that builds on the original LLaMA-Adapter to improve multi-modal reasoning and visual instruction-following. It unlocks more learnable parameters, such as the normalization, bias, and scale parameters, so that the instruction-following ability is distributed across the entire LLaMA model rather than confined to the adapters. It also introduces an early fusion strategy that feeds visual tokens only into the early LLM layers, which helps the model incorporate visual knowledge.
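To make the two ideas above concrete, here is a minimal pure-Python sketch. It assumes the unlocked bias and scale act on a frozen linear layer as `y = scale * (W x + bias)`, starting at 0 and 1 so the layer initially behaves like the pretrained one. The class `ScaledBiasLinear` and the helper `receives_visual_tokens` are hypothetical names used for illustration and are not taken from the released code.

```python
from typing import List


class ScaledBiasLinear:
    """Linear layer with a frozen weight plus a learnable bias and scale.

    Computes y = scale * (W @ x + bias). With bias = 0 and scale = 1
    (the initial values used here) it reproduces the frozen layer exactly.
    This formulation is an assumption made for illustration.
    """

    def __init__(self, weight: List[List[float]]):
        self.weight = weight                  # frozen pretrained weight, shape (out, in)
        self.bias = [0.0] * len(weight)       # learnable, initialised to 0
        self.scale = [1.0] * len(weight)      # learnable, initialised to 1

    def forward(self, x: List[float]) -> List[float]:
        out = []
        for row, b, s in zip(self.weight, self.bias, self.scale):
            wx = sum(w * xi for w, xi in zip(row, x))  # frozen matrix-vector product
            out.append(s * (wx + b))                   # learnable shift, then rescale
        return out


def receives_visual_tokens(num_layers: int, num_early_layers: int) -> List[bool]:
    """Early fusion: only the first `num_early_layers` layers see visual tokens."""
    return [layer < num_early_layers for layer in range(num_layers)]
```

Because only `bias` and `scale` would be trained, the number of new parameters grows with the output dimension of each layer rather than with the size of its weight matrix, which is what keeps the approach parameter-efficient.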

LLaMA-Adapter V2 employs a joint training paradigm that optimizes disjoint groups of learnable parameters: one group is trained on image-text pairs and the other on instruction-following data. Keeping the groups separate mitigates interference between image-text alignment and instruction-following, so the model achieves strong multi-modal reasoning with only small-scale image-text and instruction datasets.
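The joint training idea can be sketched as follows: each batch updates only the parameter group tied to its data type, and the two groups never overlap. The parameter names, the group membership, and the plain gradient step are all assumptions chosen for illustration. They are not the authors' training code.

```python
from typing import Dict, Set

# Hypothetical parameter names, split into two disjoint groups.
VISUAL_GROUP: Set[str] = {"visual_projection", "early_gate"}
INSTRUCTION_GROUP: Set[str] = {"late_adapter_prompt", "norm", "bias", "scale"}
assert VISUAL_GROUP.isdisjoint(INSTRUCTION_GROUP)


def active_group(batch_kind: str) -> Set[str]:
    """Return the names of the parameters a batch of this kind may update."""
    if batch_kind == "image_text":
        return VISUAL_GROUP
    if batch_kind == "instruction":
        return INSTRUCTION_GROUP
    raise ValueError(f"unknown batch kind: {batch_kind!r}")


def sgd_step(
    params: Dict[str, float],
    grads: Dict[str, float],
    batch_kind: str,
    lr: float = 0.5,
) -> Dict[str, float]:
    """Apply one gradient step to the active group and leave the rest untouched."""
    active = active_group(batch_kind)
    return {
        name: (value - lr * grads[name]) if name in active else value
        for name, value in params.items()
    }
```

Alternating `image_text` and `instruction` batches through `sgd_step` would train both groups in the same run while guaranteeing that neither data type overwrites parameters reserved for the other.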

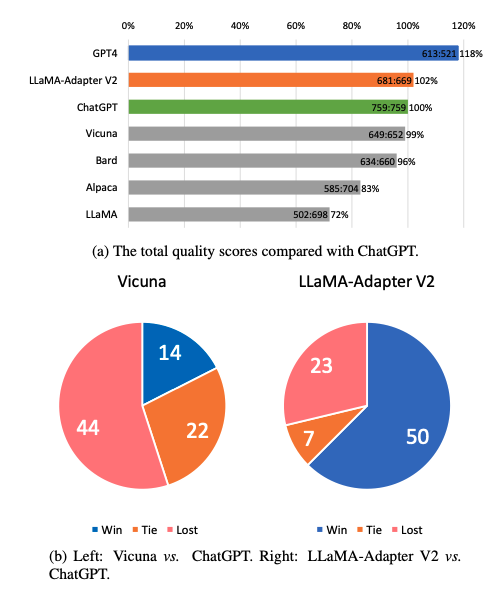

During inference, LLaMA-Adapter V2 incorporates additional expert models, such as captioning and OCR systems, to further enhance its image understanding without incurring any training cost. Compared to the original LLaMA-Adapter, LLaMA-Adapter V2 can follow open-ended multi-modal instructions while adding only 14M parameters on top of LLaMA. The updated framework also exhibits stronger language-only instruction-following and performs well in chat interactions.
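One plausible way to plug expert models in at inference time is to run them on the image and place their text output in the prompt as extra context. The sketch below assumes exactly that. The prompt layout, the function `build_prompt`, and the `Expert` callable type are hypothetical, and the summary above does not specify how the expert output is actually combined with the instruction.

```python
from typing import Callable, Optional

# An expert is any callable that maps raw image bytes to text (hypothetical interface).
Expert = Callable[[bytes], str]


def build_prompt(
    instruction: str,
    image: bytes,
    captioner: Optional[Expert] = None,
    ocr: Optional[Expert] = None,
) -> str:
    """Prepend expert outputs to the instruction; no model weights are touched."""
    context = []
    if captioner is not None:
        context.append(f"Image caption: {captioner(image)}")
    if ocr is not None:
        text = ocr(image)
        if text:  # skip the OCR line when no text was detected
            context.append(f"Text in image: {text}")
    return "\n".join(context + [f"Instruction: {instruction}", "Response:"])
```

Since the experts only contribute text to the prompt, swapping in a stronger captioner or OCR system requires no retraining. The same design also means that an incorrect caption or OCR result is passed straight to the language model.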

Despite these improvements, LLaMA-Adapter V2 still lags behind LLaVA in visual understanding and is susceptible to inaccurate information provided by the expert systems. Planned future work includes integrating more expert systems and fine-tuning LLaMA-Adapter V2 with a multi-modal instruction dataset or with other PEFT methods, such as LoRA, to further enhance its visual instruction-following capabilities. The code and models are publicly available for further development and research.