Recurrent Memory Transformer Enables Unprecedented Context Length in NLP Models

- Researchers have applied recurrent memory to BERT, extending the model’s context length to an impressive two million tokens.

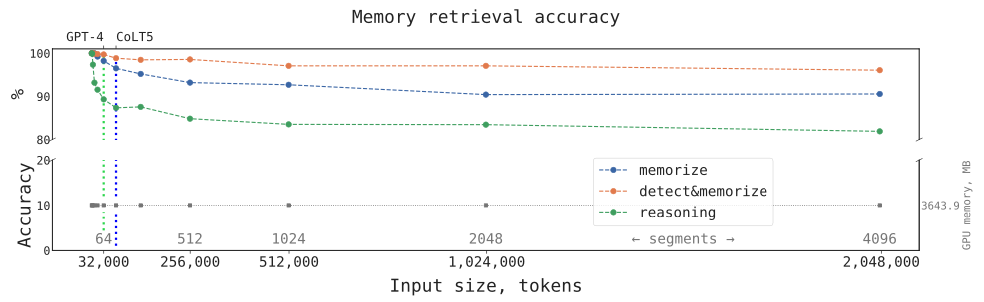

- The method maintains high memory retrieval accuracy while allowing for the storage and processing of local and global information.

- The Recurrent Memory Transformer (RMT) can enhance long-term dependency handling in NLP tasks and facilitate large-scale context processing in memory-intensive applications.

A recent technical report presents a significant breakthrough in natural language processing: extending BERT’s effective context length to an unprecedented two million tokens. Using the Recurrent Memory Transformer (RMT) architecture, the researchers increased the model’s context length while maintaining high memory retrieval accuracy, which could improve the handling of long-term dependencies in natural language understanding and generation tasks.

The RMT architecture addresses the quadratic complexity of the attention operation, which makes Transformer models increasingly expensive to apply to long inputs. RMT stores memory as special tokens and passes it from one input segment to the next through segment-level recurrence. With this design, the RMT-augmented BERT model can be trained to solve tasks on sequences of up to seven times its original input length of 512 tokens.
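The segment-level recurrence can be illustrated with a toy Python sketch. It assumes a single attention layer with identity query/key/value projections standing in for BERT, and memory tokens prepended to each segment; `attend` and `rmt_forward` are hypothetical names, not code from the report. Each segment is processed together with the current memory, and the output states at the memory positions become the memory for the next segment.

```python
import math
from typing import List, Tuple

Vector = List[float]


def attend(tokens: List[Vector]) -> List[Vector]:
    """Toy single-head self-attention with identity Q/K/V projections (no learned weights)."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        # Scaled dot-product scores of this query against every token.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]  # numerically stable softmax
        total = sum(weights)
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) / total for j in range(d)])
    return out


def rmt_forward(
    sequence: List[Vector], segment_len: int, memory: List[Vector]
) -> Tuple[List[Vector], List[Vector]]:
    """Process a long sequence segment by segment, carrying memory tokens between segments."""
    num_mem = len(memory)
    outputs: List[Vector] = []
    for start in range(0, len(sequence), segment_len):
        segment = sequence[start:start + segment_len]
        hidden = attend(memory + segment)  # hypothetical layout: [memory; segment]
        memory = hidden[:num_mem]          # updated memory states feed the next segment
        outputs.extend(hidden[num_mem:])   # per-token outputs for this segment
    return outputs, memory


if __name__ == "__main__":
    seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5], [2.0, 0.0]]
    outs, final_mem = rmt_forward(seq, segment_len=2, memory=[[0.0, 0.0]])
    print(len(outs), final_mem)
```

Because each call to `attend` sees only `len(memory) + segment_len` tokens, the work per segment stays constant and the total grows linearly with the number of segments; information from earlier segments reaches later ones only through the memory states.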

The study found that the trained RMT successfully extrapolates to tasks of different lengths, including those exceeding one million tokens, while the required computation scales only linearly with input length. In addition, an analysis of attention patterns revealed the memory operations that allow RMT to handle exceptionally long sequences effectively.

The application of RMT to BERT demonstrates that handling long texts with Transformers does not necessarily require large amounts of memory. By combining recurrence with memory, the quadratic complexity can be reduced to linear, and models trained on long inputs can extrapolate their abilities to substantially longer texts.
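A rough way to see the quadratic-to-linear reduction is to count the query-key scores a single attention layer computes. This is a back-of-the-envelope sketch, not a measurement from the report: it ignores feed-forward layers and other overheads, rounds a final partial segment up to a full one, and treats `segment_len` and `num_mem` (and the function names) as illustrative assumptions.

```python
def full_attention_scores(n: int) -> int:
    """Query-key pairs scored by one full self-attention layer over n tokens."""
    return n * n


def rmt_attention_scores(n: int, segment_len: int, num_mem: int) -> int:
    """Query-key pairs scored when n tokens are split into segments, each with num_mem memory tokens."""
    num_segments = -(-n // segment_len)  # ceiling division: a partial last segment counts as full
    return num_segments * (segment_len + num_mem) ** 2


if __name__ == "__main__":
    for n in (7 * 512, 2_000_000):
        full = full_attention_scores(n)
        rmt = rmt_attention_scores(n, segment_len=512, num_mem=10)
        print(f"{n:>9} tokens: full={full:,} rmt={rmt:,} ratio={full / rmt:.1f}x")
```

Doubling `n` doubles the RMT count but quadruples the full-attention count, which is the linear versus quadratic behavior described above.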

The synthetic tasks explored in this study mark a first milestone toward enabling RMT to generalize to tasks with unseen properties, and they pave the way for further increases in effective context size. Future work aims to adapt the recurrent memory approach to the most commonly used Transformers, further extending their capabilities in natural language processing tasks.