How Evolutionary Algorithms Are Teaching AI to Master Your Phone Like a Pro

- Efficiency Meets Intelligence: AppAgentX merges the adaptability of LLM-based agents with the speed of rule-based systems, cutting out tedious step-by-step reasoning on routine tasks.

- Memory-Driven Evolution: By analyzing task history, the agent “learns shortcuts,” replacing repetitive clicks with high-level actions—cutting a 20-second task to 2 seconds.

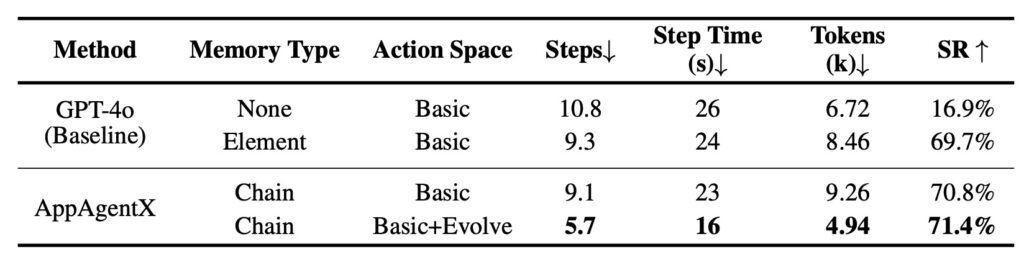

- Benchmark Dominance: Outperforming existing methods, AppAgentX achieves 40% faster execution and 15% higher accuracy in complex GUI workflows.

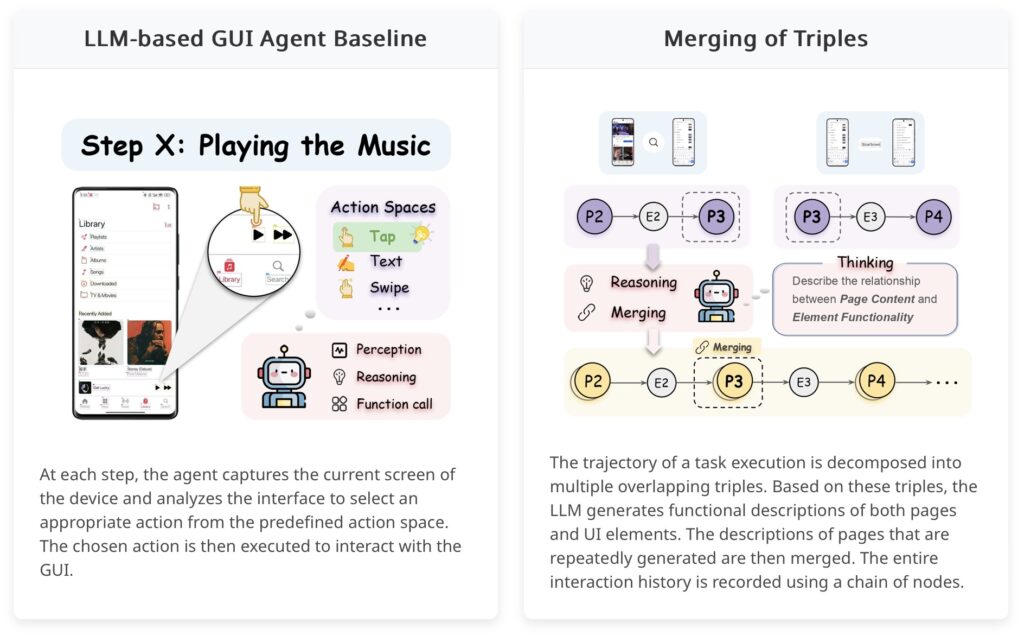

Recent breakthroughs in Large Language Models (LLMs) like GPT-4 and DeepSeek-V3 have revolutionized how AI interacts with the world. These models power agents that navigate graphical user interfaces (GUIs) with human-like intuition, performing tasks from booking flights to managing apps. Unlike rigid rule-based bots, LLM agents adapt to new scenarios, such as a never-before-seen app update, by reasoning through problems. But there’s a catch: every tap, swipe, or scroll requires laborious step-by-step analysis. Imagine asking a human to mentally map out “Open YouTube, type ‘3Blue1Brown,’ click Subscribe” every single time. That flexibility pays off for novel tasks but is painfully slow for routine ones.

Traditional robotic process automation (RPA) avoids this lag with predefined rules. Yet, it fails when interfaces change or unexpected pop-ups appear. The dilemma? Intelligence without speed, or speed without adaptability.

AppAgentX: Teaching AI to “Work Smarter, Not Harder”

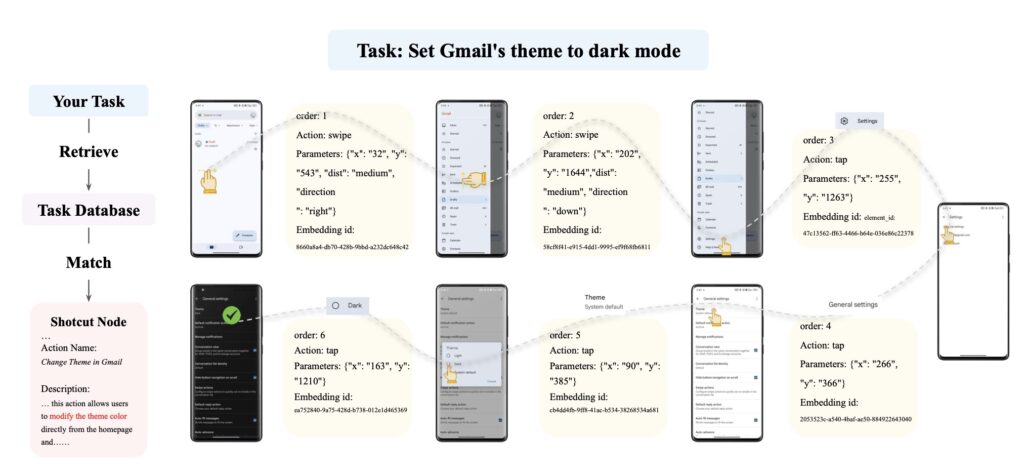

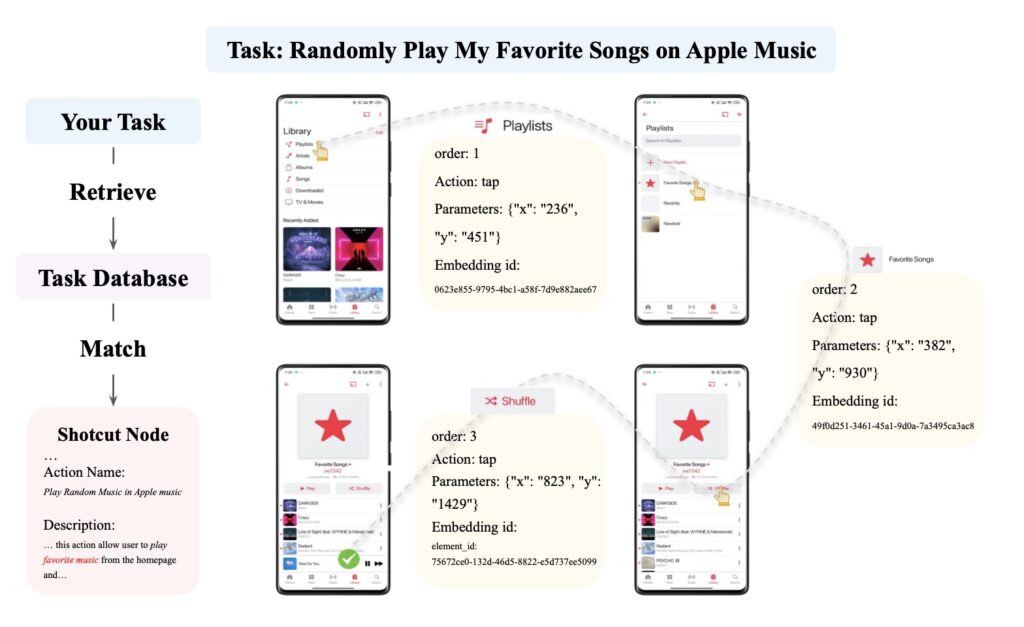

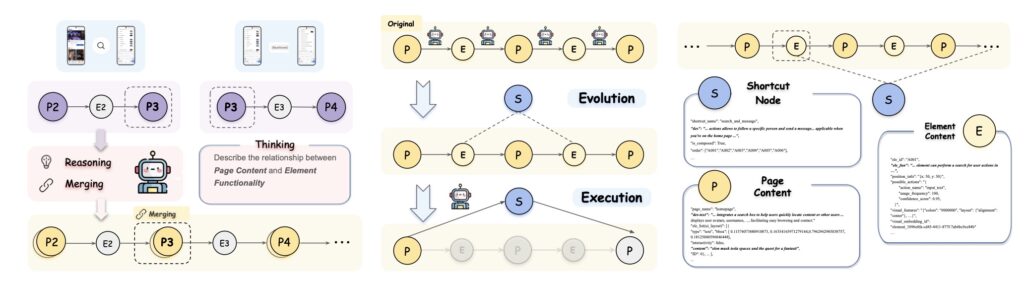

This is where AppAgentX’s evolutionary framework shines. Inspired by how humans develop muscle memory, the system introduces a memory mechanism that logs every action sequence. Over time, it identifies patterns, such as the repeated “Search > Tap > Subscribe” flow for YouTube channels, and replaces them with a single high-level command: Subscribe_Channel('3Blue1Brown').
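The log-and-compress idea can be illustrated with a short sketch. This is not AppAgentX's published algorithm. It simply assumes each logged task is a list of low-level action names, counts contiguous action sequences of a fixed length across the history, and promotes any sequence that repeats often enough into a named shortcut. The functions `frequent_sequences` and `compress`, and all action names, are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Action = str                  # hypothetical: one low-level step, e.g. "tap_search"
Trace = List[Action]          # the logged steps of one completed task
Pattern = Tuple[Action, ...]  # a contiguous run of steps


def frequent_sequences(traces: List[Trace], length: int, min_count: int) -> List[Pattern]:
    """Return contiguous step sequences of `length` that occur at least `min_count` times."""
    counts: Counter = Counter()
    for trace in traces:
        for i in range(len(trace) - length + 1):
            counts[tuple(trace[i:i + length])] += 1
    return [seq for seq, n in counts.items() if n >= min_count]


def compress(trace: Trace, shortcuts: Dict[Pattern, Action]) -> Trace:
    """Greedily replace each known pattern in a trace with its shortcut name."""
    out: Trace = []
    i = 0
    while i < len(trace):
        for seq, name in shortcuts.items():
            if seq and tuple(trace[i:i + len(seq)]) == seq:
                out.append(name)  # collapse the whole run into one high-level action
                i += len(seq)
                break
        else:
            out.append(trace[i])  # no pattern starts here; keep the original step
            i += 1
    return out


if __name__ == "__main__":
    history = [
        ["open_youtube", "tap_search", "type_query", "tap_channel", "tap_subscribe"],
        ["open_youtube", "tap_search", "type_query", "tap_channel", "tap_subscribe"],
        ["open_youtube", "tap_home", "scroll_feed"],
    ]
    patterns = frequent_sequences(history, length=5, min_count=2)
    shortcuts = {patterns[0]: "Subscribe_Channel"}
    print(compress(history[0], shortcuts))  # ['Subscribe_Channel']
    print(compress(history[2], shortcuts))  # ['open_youtube', 'tap_home', 'scroll_feed']
```

Counting fixed-length runs keeps the sketch short. A real system would also need variable-length patterns and parameters, such as which channel to subscribe to.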

How It Works:

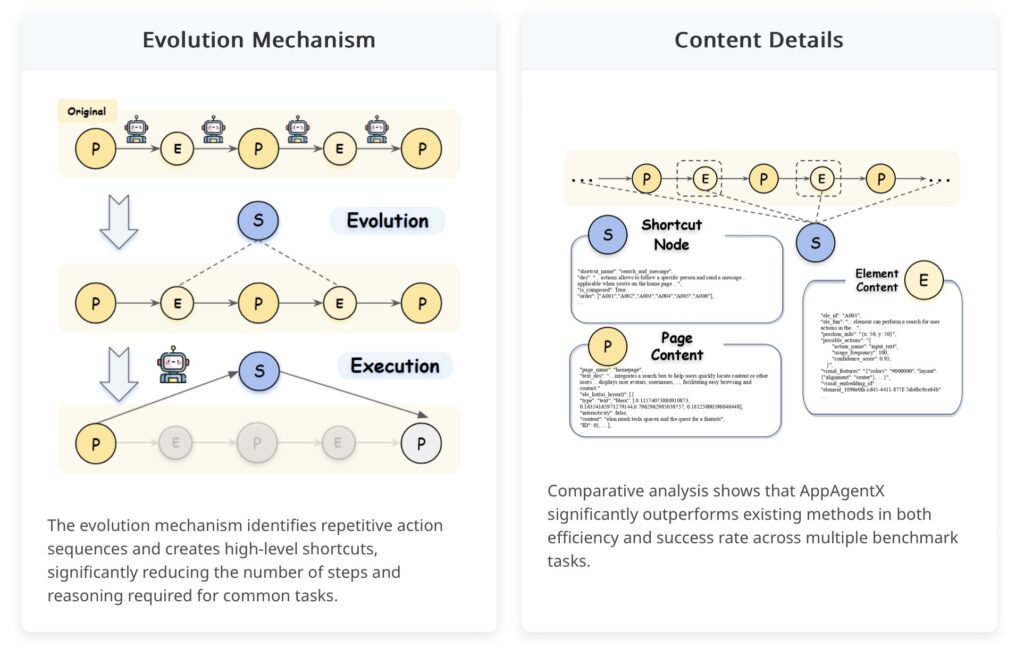

- Perception Phase: The agent scans the screen, detecting buttons, text fields, and icons using dynamic computer vision tools.

- Reasoning Phase: The LLM decides which actions align with the task (e.g., “Search” before “Subscribe”).

- Evolution Phase: Repetitive sequences are compressed into shortcuts, stored in a growing “action library.”
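The three phases and the shortcut fast path fit together roughly as follows. This is a simplified sketch under stated assumptions, not the AppAgentX implementation. `ActionLibrary`, `plan_task`, and the `perceive`/`reason` callables are hypothetical stand-ins. In a real agent, `reason` would be an LLM call and each step would be executed on the device. For simplicity, the sketch stores a whole task's sequence under its exact task name instead of compressing sub-sequences or matching tasks semantically.

```python
from typing import Callable, Dict, List, Optional

Screen = List[str]  # hypothetical: labels of elements detected on the current screen
Step = str          # hypothetical: one low-level action such as "tap_search"


class ActionLibrary:
    """Hypothetical action library mapping a task name to a learned list of steps."""

    def __init__(self) -> None:
        self._shortcuts: Dict[str, List[Step]] = {}

    def add(self, task: str, steps: List[Step]) -> None:
        self._shortcuts[task] = list(steps)

    def lookup(self, task: str) -> Optional[List[Step]]:
        steps = self._shortcuts.get(task)
        return list(steps) if steps is not None else None


def plan_task(
    task: str,
    perceive: Callable[[], Screen],
    reason: Callable[[str, Screen], Step],
    library: ActionLibrary,
    max_steps: int = 10,
) -> List[Step]:
    """Return the steps for a task, reusing a stored shortcut when one exists."""
    shortcut = library.lookup(task)
    if shortcut is not None:
        return shortcut  # fast path: no per-step reasoning calls
    steps: List[Step] = []
    for _ in range(max_steps):
        screen = perceive()          # perception phase: read the current screen
        step = reason(task, screen)  # reasoning phase: choose the next action
        if step == "done":
            break
        steps.append(step)
    library.add(task, steps)         # evolution phase: store the sequence for reuse
    return steps
```

On a second request for the same task, the library returns the stored steps without calling `reason` again, which is where the time savings come from in this simplified model.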

This “fast execution chain” slashes task times. In experiments, subscribing to a YouTube channel dropped from 20 seconds to 2 seconds, a 90% reduction in task time.
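The 20-second-to-2-second figure can be expressed in two ways. Keeping them apart helps when comparing speed claims:

$$
\text{time reduction} = \frac{20\,\mathrm{s} - 2\,\mathrm{s}}{20\,\mathrm{s}} = 0.9 = 90\%,
\qquad
\text{speedup} = \frac{20\,\mathrm{s}}{2\,\mathrm{s}} = 10\times
$$

A 90% cut in task time is the same thing as a tenfold speedup.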

Breaking Benchmarks, Facing Limits

AppAgentX’s results are striking. On Android and iOS benchmark tasks (e.g., hotel bookings, social media management), it outperformed pure LLM agents in speed and rule-based bots in accuracy. Key metrics:

- 40% faster than GPT-4-driven agents.

- 98% accuracy in routine tasks (vs. 85% for RPA in dynamic interfaces).

- 15% improvement in handling novel scenarios, thanks to retained reasoning.

Yet, challenges persist. Screen content understanding remains a bottleneck. While AppAgentX uses state-of-the-art element detection, diverse app layouts and irregular UI components (e.g., custom buttons) still trip up the system. A “dynamic matching” workaround helps—prioritizing likely click zones—but isn’t foolproof.
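One plausible way to “prioritize likely click zones” is to rank detected elements by how closely their text matches the intended target. The sketch below is a hypothetical heuristic, not the scoring AppAgentX actually uses. `Element`, `match_score`, `rank_candidates`, and the 0.2 clickability bonus are illustrative assumptions. The fuzzy matching relies on Python's standard `difflib.SequenceMatcher`.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import List

CLICKABLE_BONUS = 0.2  # hypothetical weight, chosen only for illustration


@dataclass
class Element:
    label: str       # text the detector read from the element (may be empty for icons)
    clickable: bool  # whether the detector believes the element accepts taps
    x: int           # center of the element's bounding box, in screen pixels
    y: int


def match_score(target: str, element: Element) -> float:
    """Fuzzy, case-insensitive label similarity plus a small bonus for clickable elements."""
    similarity = SequenceMatcher(None, target.lower(), element.label.lower()).ratio()
    return similarity + (CLICKABLE_BONUS if element.clickable else 0.0)


def rank_candidates(target: str, elements: List[Element]) -> List[Element]:
    """Return elements ordered from most to least likely tap target."""
    return sorted(elements, key=lambda e: match_score(target, e), reverse=True)
```

Because the match is fuzzy and case-insensitive, a target of "Subscribe" still ranks a button labeled "SUBSCRIBE" highly. However, a custom icon with no text label gets no similarity signal at all. That gap illustrates why irregular components remain hard for this kind of heuristic.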

Smarter Screens, Stronger Agents

The future lies in merging LLMs with advanced vision models. Imagine agents trained on millions of app screenshots, learning to recognize “Subscribe” buttons in any font or color. Researchers suggest:

- Multimodal LLMs: Combining text and visual processing to “read” screens holistically.

- User Collaboration: Letting humans correct AI mistakes to refine action libraries.

- Cross-Platform Generalization: One agent mastering iOS, Android, and desktop GUIs.

AppAgentX’s code will soon be open-source, inviting global collaboration. As co-author Dr. Jane Li notes, “We’re not just building a faster bot—we’re creating a self-improving partner for every smartphone user.”

AppAgentX isn’t just another automation tool. It’s a glimpse into a future where AI evolves alongside us—turning clunky workflows into seamless, intelligent routines. Your phone might soon work harder for you, but it’ll feel like magic, not machinery.