Ferret-UI Bridges the Gap in Mobile UI Understanding with Advanced Multimodal LLM Integration

- Enhanced UI Screen Understanding: Ferret-UI introduces a novel approach to processing mobile UI screens by dividing them into sub-images based on aspect ratio, enabling finer detail magnification and improved object recognition.

- Comprehensive Training on UI Tasks: The model is trained on a diverse dataset covering both basic and advanced UI tasks, from icon recognition to function inference, ensuring versatile comprehension and interaction capabilities.

- Benchmarking Excellence: In comparative evaluations, Ferret-UI outperforms most existing open-source UI-focused MLLMs and also surpasses GPT-4V on elementary UI tasks, setting a new standard in the field.

Apple’s latest innovation, Ferret-UI, marks a significant advancement in mobile user interface (UI) understanding and interaction. General-domain Multimodal Large Language Models (MLLMs) often struggle to comprehend and interact with UI screens; Ferret-UI is a specialized MLLM built for the intricacies of mobile UI elements.

Rethinking UI Screen Processing

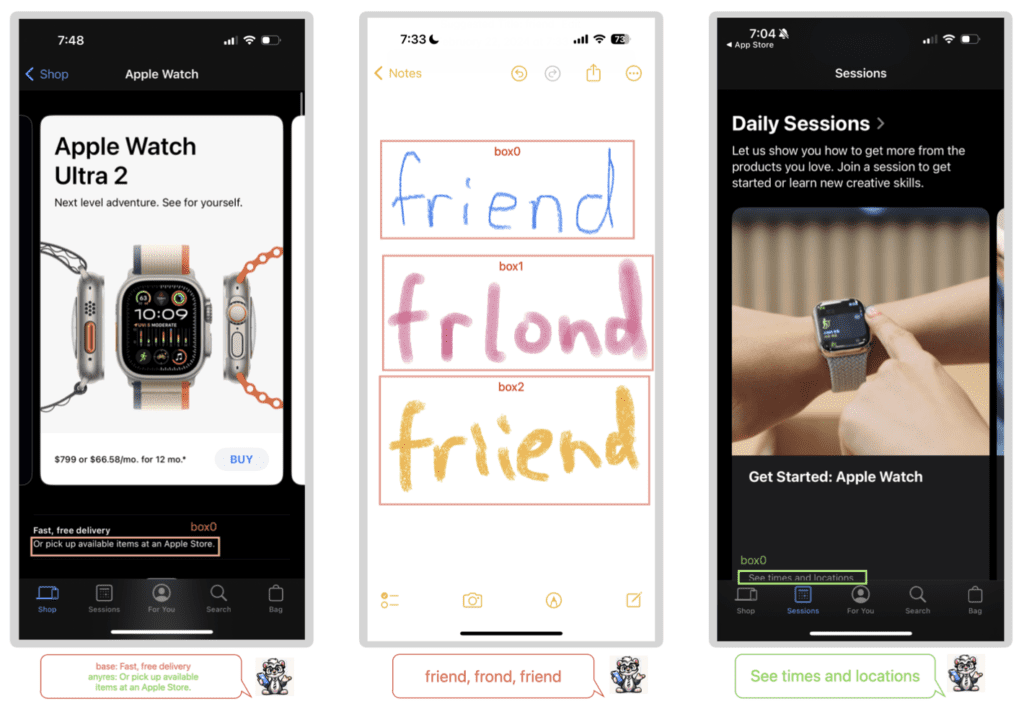

Ferret-UI processes UI screens with an approach termed “any resolution.” Based on each screen’s original aspect ratio, it divides the screen into two sub-images: portrait screens are split horizontally into top and bottom halves, and landscape screens are split vertically into left and right halves. Each sub-image is encoded separately, which effectively magnifies small, detail-rich components such as icons and text before the model interprets them. This finer-grained view of UI elements supports more accurate, context-aware answers to questions about a screen.
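The aspect-ratio split described above can be sketched in a few lines. This is a minimal illustration, not Ferret-UI's code: the function names `split_by_aspect_ratio` and `crop` are hypothetical, images are modeled as plain row-major pixel grids, and treating a square screen as portrait is an assumption. Resizing each sub-image to the encoder's input size and encoding it are not shown.

```python
from typing import List, Tuple

# A crop box as (left, top, right, bottom) in pixels; right/bottom are exclusive.
Box = Tuple[int, int, int, int]


def split_by_aspect_ratio(width: int, height: int) -> List[Box]:
    """Hypothetical helper: halve a screen along its longer side.

    Portrait screens (assumed to include square ones) get a horizontal cut,
    giving top and bottom halves; landscape screens get a vertical cut,
    giving left and right halves.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if height >= width:
        mid = height // 2
        return [(0, 0, width, mid), (0, mid, width, height)]
    mid = width // 2
    return [(0, 0, mid, height), (mid, 0, width, height)]


def crop(image: List[List[int]], box: Box) -> List[List[int]]:
    """Crop a row-major 2D pixel grid to the given box."""
    left, top, right, bottom = box
    return [row[left:right] for row in image[top:bottom]]


# A 1170x2532 portrait screenshot is cut at y = 1266 into top and bottom halves.
print(split_by_aspect_ratio(1170, 2532))
```

Splitting along the longer side keeps each sub-image closer to square, so less detail is lost when it is resized to a fixed square encoder input.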

Diverse and Targeted Training

The foundation of Ferret-UI’s proficiency is a training regime that spans a wide range of UI tasks, from elementary ones such as icon recognition and finding text on screen to advanced ones such as detailed screen description, conversations about perceiving and interacting with a screen, and function inference. This breadth lets Ferret-UI do more than identify the UI elements the operating system already exposes: it can reason about what those elements mean and how a user might interact with them.

Setting New Benchmarks

Benchmark evaluations underscore Ferret-UI’s effectiveness: it outperforms most existing open-source UI-focused MLLMs and even surpasses GPT-4V on elementary UI tasks. These results validate its specialized training and screen-processing approach, and suggest it could substantially change how developers and users interact with mobile UIs.

Beyond Aspect Ratio Concerns

Splitting screens by aspect ratio does raise the question of computational overhead, since each screen produces extra sub-images for the model to encode. Ferret-UI’s value, however, extends well beyond these visual adjustments: its contextually rich understanding of UI components has transformative potential for app development, accessibility features, and user experience.
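To put that overhead in rough numbers, here is a back-of-the-envelope sketch. It assumes each encoded view becomes a fixed number of visual tokens, and uses a 336×336 input with 14-pixel patches (a common CLIP ViT-L/14 configuration) purely as an illustrative assumption, not as Ferret-UI's confirmed setup. Whether the full screen is encoded alongside the two sub-images is exposed as a parameter rather than asserted; both helper names are hypothetical.

```python
def patch_tokens(image_side: int, patch_size: int) -> int:
    """Visual tokens for one square view split into non-overlapping patches."""
    if image_side % patch_size != 0:
        raise ValueError("image_side must be a multiple of patch_size")
    per_side = image_side // patch_size
    return per_side * per_side


def visual_token_count(tokens_per_view: int,
                       num_sub_images: int = 2,
                       include_full_image: bool = True) -> int:
    """Total visual tokens when every view is encoded separately.

    include_full_image is an assumption: set it to False if only the
    sub-images are sent to the language model.
    """
    views = num_sub_images + (1 if include_full_image else 0)
    return views * tokens_per_view


per_view = patch_tokens(336, 14)          # 24 x 24 patches = 576 tokens
print(visual_token_count(per_view))       # full screen + 2 sub-images = 1728
print(visual_token_count(per_view, include_full_image=False))  # 1152
```

Under these assumptions, the visual input grows two- to threefold relative to encoding a single resized screenshot, which is the price paid for the magnified detail.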

Ferret-UI represents a forward-thinking solution to the nuanced challenges of mobile UI comprehension and interaction. By tailoring MLLM capabilities specifically to the mobile UI context, Apple’s Ferret-UI sets a new precedent in the field and promises to improve how users and developers engage with mobile interfaces across a wide range of applications.