Apple introduces AI advancements with on-device and server models for enhanced user experience

- Apple unveils a 3 billion parameter on-device language model and a more powerful server-based language model.

- The new models aim to support diverse user tasks, from text generation to image creation, integrated across Apple devices.

- Apple emphasizes responsible AI development with privacy-focused design and robust evaluation methods.

Apple announced its latest AI models at the 2024 Worldwide Developers Conference (WWDC), marking a significant push into the AI space. The company is building out both on-device and server-based AI capabilities, with the aim of changing how users interact with devices across its ecosystem.

Key AI Innovations

On-Device Language Model (SLM): Apple’s new on-device model has roughly 3 billion parameters and a vocabulary of 49,000 tokens. It is designed to handle everyday tasks directly on the user’s device, which keeps data local and reduces latency.

Server-Based Language Model (LLM): The server-based model is larger and more capable, with a vocabulary of 100,000 tokens. It is built to handle more complex tasks and runs on Apple’s Private Cloud Compute infrastructure, which is designed to protect user data that is processed in the cloud.

Visual and Multimodal Capabilities: In addition to the language models, Apple is incorporating diffusion models for image generation, extending its generative features beyond text.

Practical Applications and Performance

Apple’s AI advancements are aimed at everyday tasks across its devices. According to Apple, the models are fine-tuned for experiences such as writing and refining text, prioritizing and summarizing notifications, creating images, and taking in-app actions, and these capabilities are integrated directly into the operating systems rather than offered as a separate app.

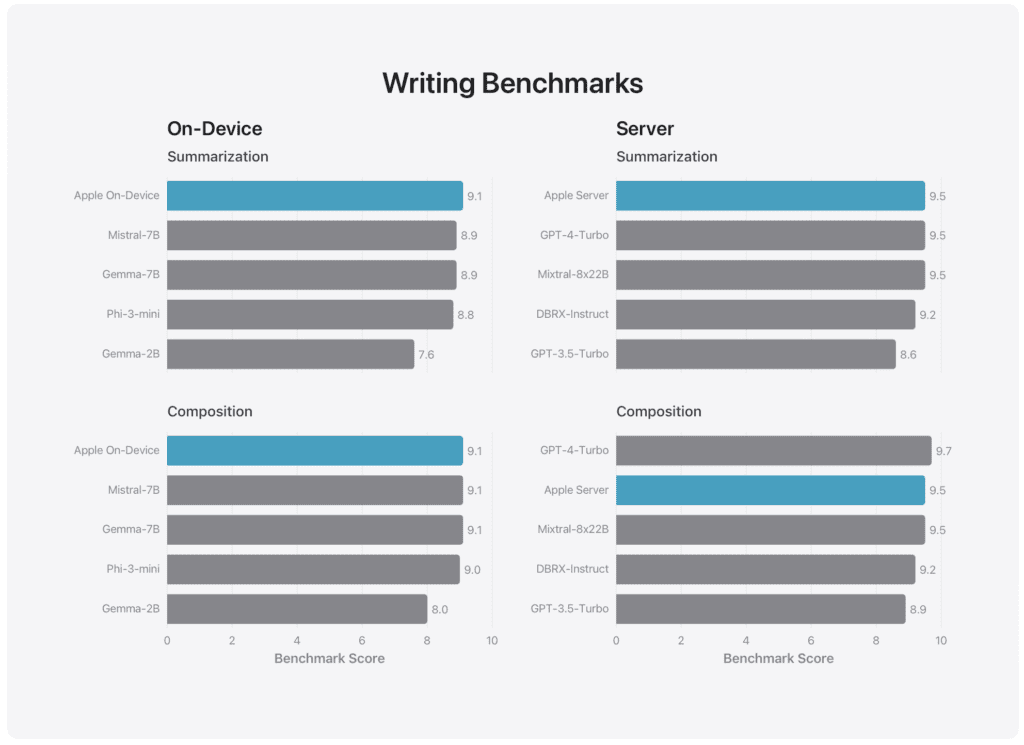

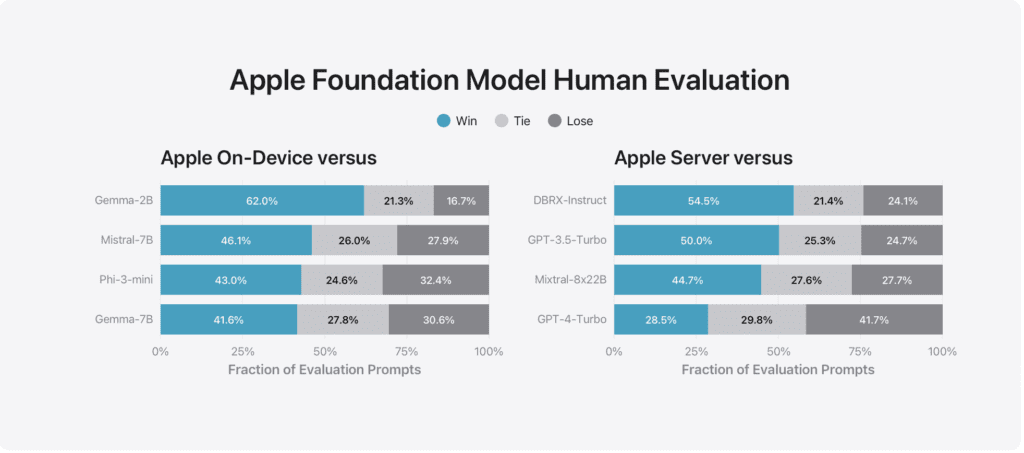

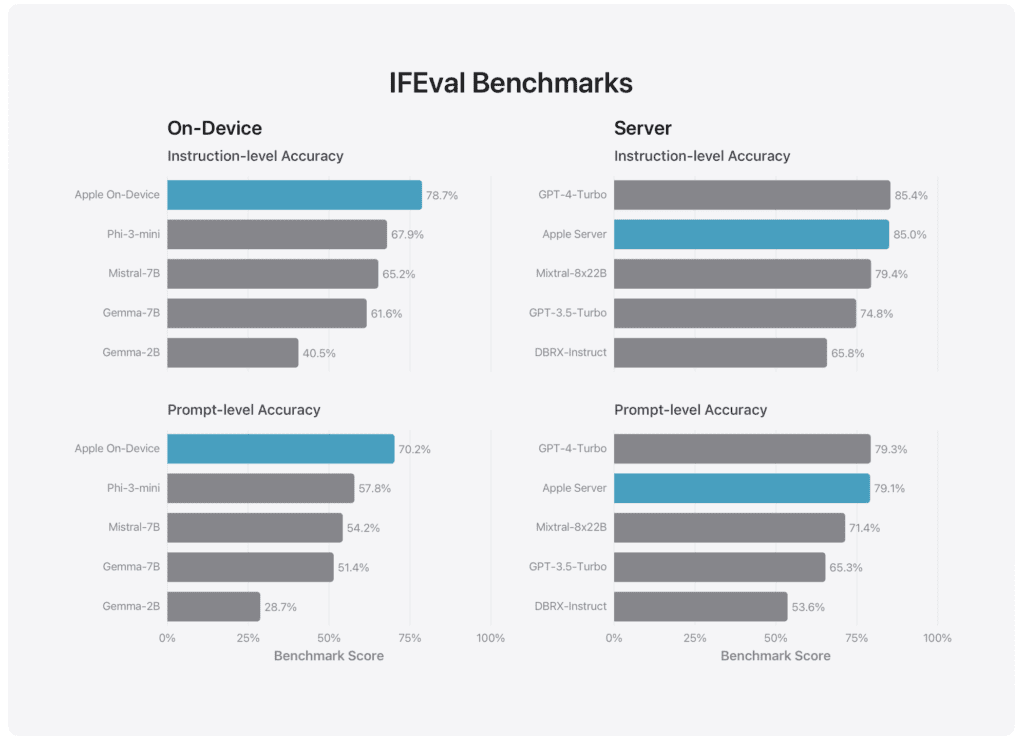

In Apple’s reported evaluations, the on-device model outperformed the 7B parameter Mistral model despite having fewer than half as many parameters. The server-based model surpassed GPT-3.5 and Mixtral 8x22B, although it still falls short of GPT-4.

Responsible AI Development

Apple has prioritized responsible AI development, emphasizing user privacy and ethical considerations. The company says it does not use users’ private personal data or user interactions to train its foundation models, and it employs rigorous evaluation methods to mitigate misuse.

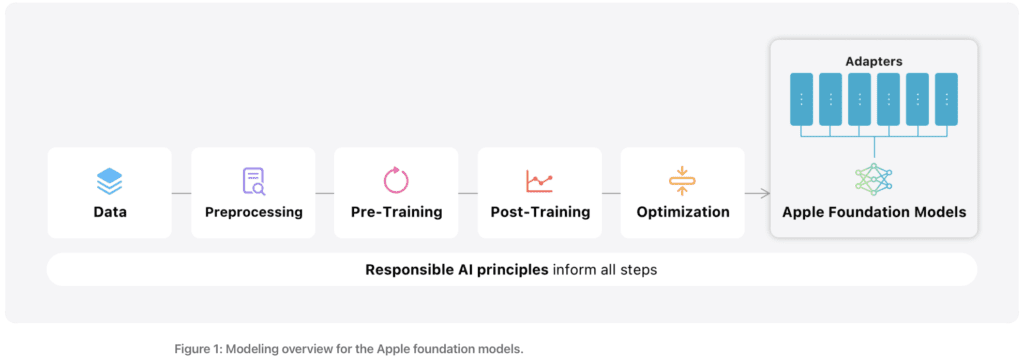

Training and Optimization: Apple’s foundation models are trained using the AXLearn framework, incorporating both human-annotated and synthetic data. Optimization techniques, such as low-bit palletization and grouped-query-attention, ensure the models run efficiently on Apple devices and in the cloud.
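To make the idea behind low-bit palletization (also spelled palettization) more concrete, here is a minimal sketch in plain Python. It is not Apple’s implementation: it assumes the simplest possible variant, a 1-D k-means clustering of scalar weights into a shared lookup table, and the function names `palettize`, `reconstruct`, and `mean_squared_error` are hypothetical names chosen for illustration.

```python
import random


def palettize(weights, bits=4, iters=10):
    """Map each weight to one of 2**bits shared values (the palette).

    Returns (palette, indices). Storing one small integer index per weight
    plus a single palette is what makes the representation low-bit.
    Illustrative sketch only, not Apple's actual method.
    """
    if not weights:
        raise ValueError("weights must be non-empty")
    if bits < 1:
        raise ValueError("bits must be at least 1")
    k = 2 ** bits
    lo, hi = min(weights), max(weights)
    # Start from k evenly spaced values spanning the weight range.
    palette = [lo + (hi - lo) * j / (k - 1) for j in range(k)]

    def assign(values):
        # Index of the nearest palette entry for every weight.
        return [min(range(k), key=lambda j: abs(w - palette[j])) for w in values]

    for _ in range(iters):
        indices = assign(weights)
        # 1-D k-means update: move each entry to the mean of its members.
        for j in range(k):
            members = [w for w, i in zip(weights, indices) if i == j]
            if members:
                palette[j] = sum(members) / len(members)
    # Final assignment so the indices match the updated palette.
    return palette, assign(weights)


def reconstruct(palette, indices):
    """Rebuild approximate weights by looking each index up in the palette."""
    return [palette[i] for i in indices]


def mean_squared_error(original, approx):
    """Average squared difference between original and reconstructed weights."""
    return sum((a - b) ** 2 for a, b in zip(original, approx)) / len(original)


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic weights for demonstration; not taken from any real model.
    weights = [rng.gauss(0.0, 0.02) for _ in range(2000)]
    palette, indices = palettize(weights, bits=4)
    approx = reconstruct(palette, indices)
    print(len(palette), "palette entries")
    print("reconstruction MSE:", mean_squared_error(weights, approx))
```

With `bits=4`, each weight is stored as a 4-bit index instead of a 16-bit or 32-bit float, at the cost of a small reconstruction error. Production systems typically apply this per layer or per group of weights and may mix bit widths; this sketch treats all weights as one group for simplicity.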

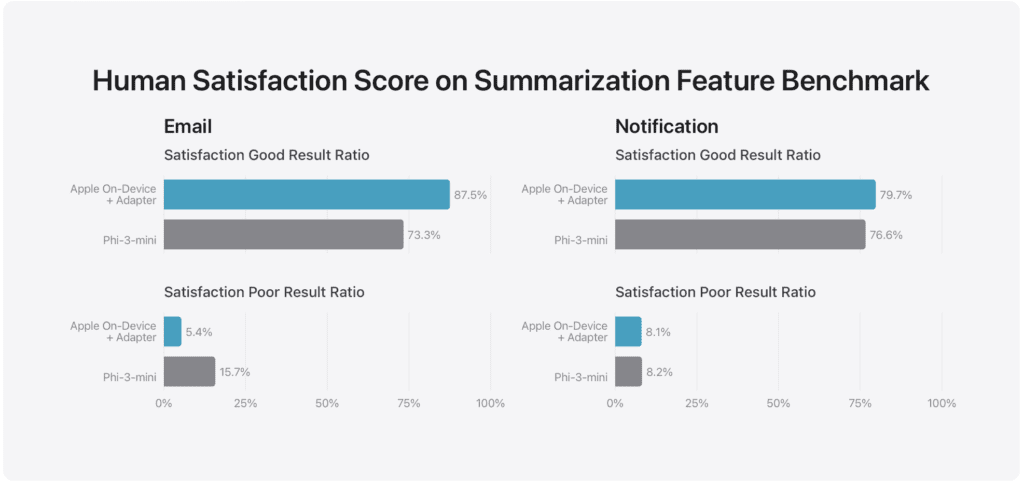

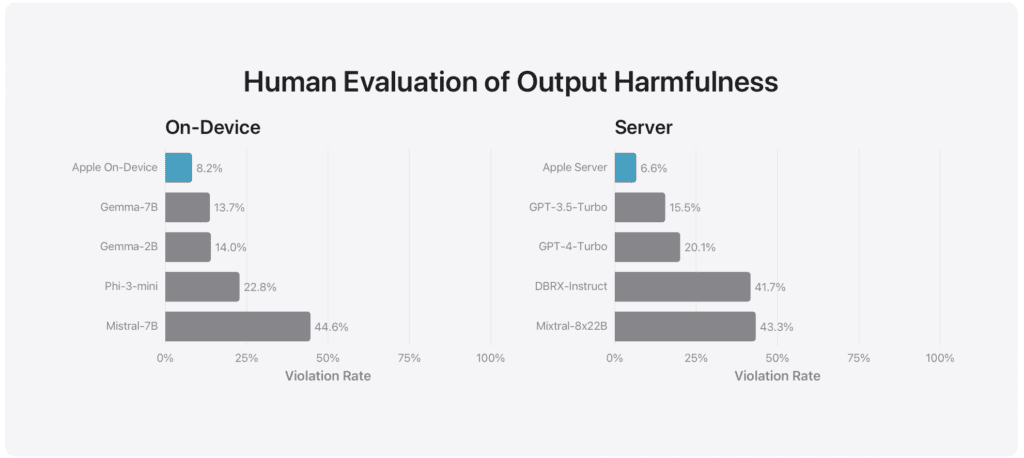

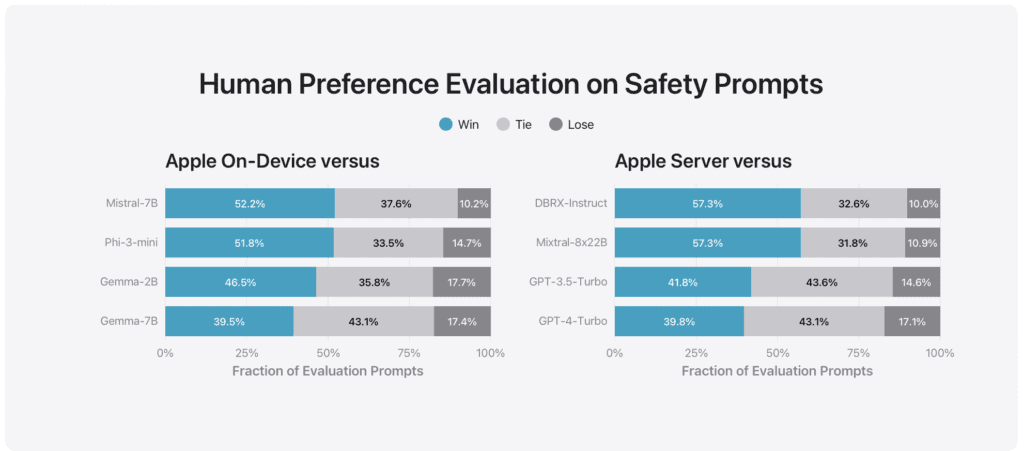

Evaluation and Safety: Apple uses a comprehensive set of real-world prompts to evaluate model performance, focusing on tasks like summarization, classification, and coding. Human graders play a crucial role in assessing the models’ helpfulness and safety, and Apple reports lower violation rates on adversarial prompts for its models than for the competing models it tested.
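As a rough illustration of how human grades of this kind can be turned into headline numbers, the sketch below tallies a violation rate and a side-by-side preference summary. The label names (`"violation"`, `"safe"`, `"win"`, `"tie"`, `"loss"`), the function names, and the sample data are all assumptions made up for this example; they do not come from Apple’s evaluation or results.

```python
from collections import Counter


def violation_rate(labels):
    """Fraction of graded responses that were marked as violations."""
    if not labels:
        raise ValueError("labels must be non-empty")
    counts = Counter(labels)
    return counts["violation"] / len(labels)


def preference_summary(votes):
    """Share of side-by-side comparisons graded as a win, tie, or loss."""
    if not votes:
        raise ValueError("votes must be non-empty")
    counts = Counter(votes)
    return {outcome: counts[outcome] / len(votes) for outcome in ("win", "tie", "loss")}


if __name__ == "__main__":
    # Made-up grader output, for illustration only.
    safety_labels = ["safe", "safe", "violation", "safe", "safe", "safe", "safe", "safe"]
    helpfulness_votes = ["win", "win", "tie", "loss", "win", "tie"]
    print("violation rate:", violation_rate(safety_labels))
    print("preferences:", preference_summary(helpfulness_votes))
```

A lower violation rate on adversarial prompts means fewer responses were judged harmful, while the win/tie/loss split shows how often graders preferred one model’s answer over another’s.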

Future Developments

Apple describes the two language models as part of a broader family of generative models, which also includes a coding model for building intelligence into Xcode and a diffusion model for visual expression. The company says it will share more information about this family of models in the future.

Apple’s introduction of advanced on-device and server-based AI models marks a significant step forward in enhancing the functionality and responsiveness of its devices. By balancing powerful capabilities with a strong commitment to privacy and responsible AI development, Apple is poised to offer its users a more intuitive and secure digital experience. With continuous advancements on the horizon, Apple’s AI models are set to redefine the future of personal and professional technology use.