How Hierarchical Multimodal Learning Powers the Future of Mobile Robots

- Astra introduces a dual-model architecture, Astra-Global and Astra-Local, for robot navigation in complex indoor environments, where traditional modular systems often fall short.

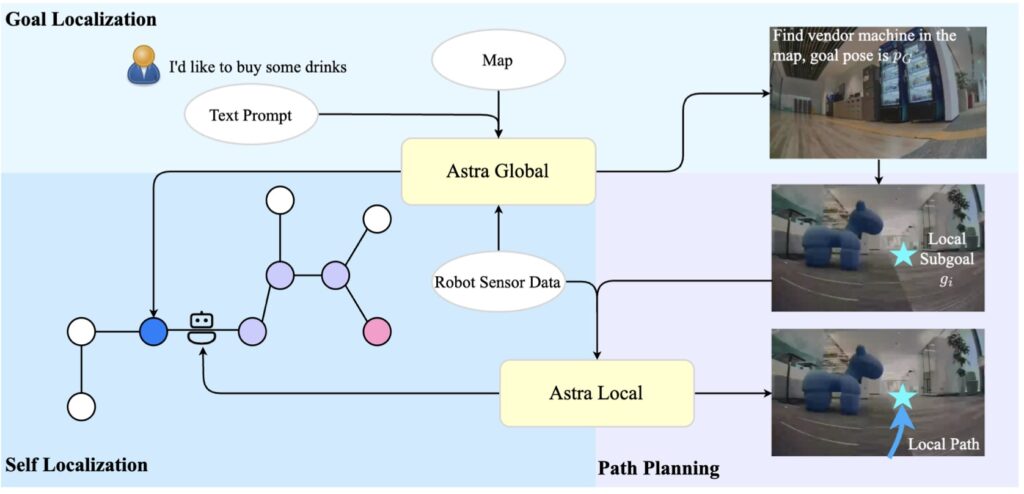

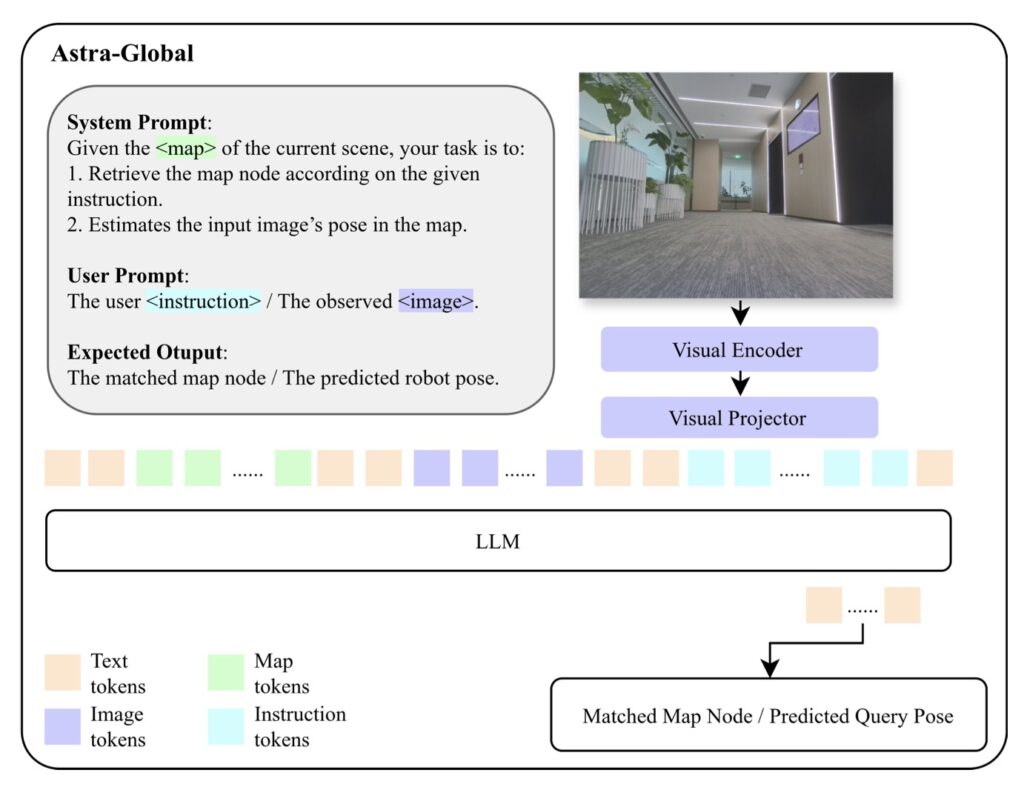

- Astra-Global, a multimodal large language model (LLM), handles goal localization and self-localization using a hybrid topological-semantic map, while Astra-Local, a multi-sensor, multi-task network, handles local path planning and odometry estimation.

- Real-world deployments show Astra’s high mission success rates, with ongoing plans to enhance robustness, generalization, and human-robot interaction for broader applications.

Modern robotics is at a turning point. As environments grow more complex (think sprawling warehouses, busy offices, or cluttered homes), traditional navigation systems often stumble. These older approaches, built on fragmented modules or rigid rule-based frameworks, struggle to adapt to new settings or unexpected challenges. Astra was developed to address these limitations. It applies hierarchical multimodal learning in a dual-model system: Astra-Global and Astra-Local. Rather than an incremental upgrade, it is a comprehensive rethink of how robots understand and move through the world, and it achieves high end-to-end mission success rates in diverse indoor spaces.

Let’s start with the core challenges of robot navigation. First, there’s goal localization: figuring out where to go when the target isn’t a simple coordinate but a natural language prompt like “go to the kitchen” or a goal image. Then, there’s self-localization, where a robot must pinpoint its own position in a map, often in repetitive environments like warehouses with few distinct landmarks. Traditional systems might lean on artificial markers like QR codes, but that’s a clunky workaround. Finally, path planning splits into global and local tasks: charting a broad route from start to finish, then refining it along the way to avoid obstacles. Astra addresses all three with two cooperating models rather than a chain of separate modules.

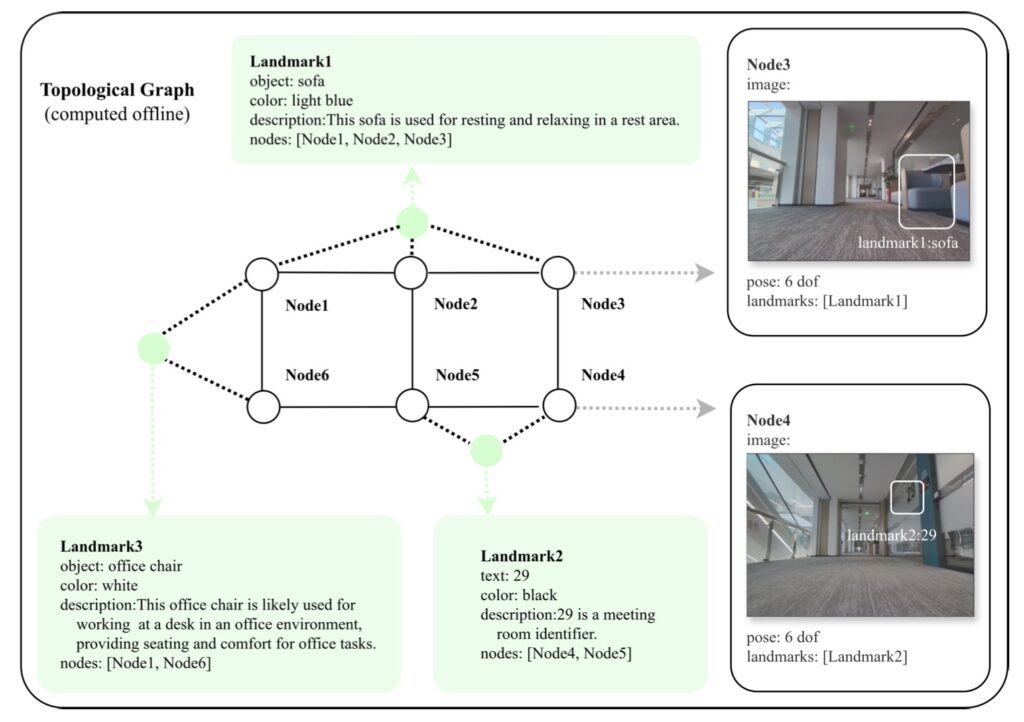

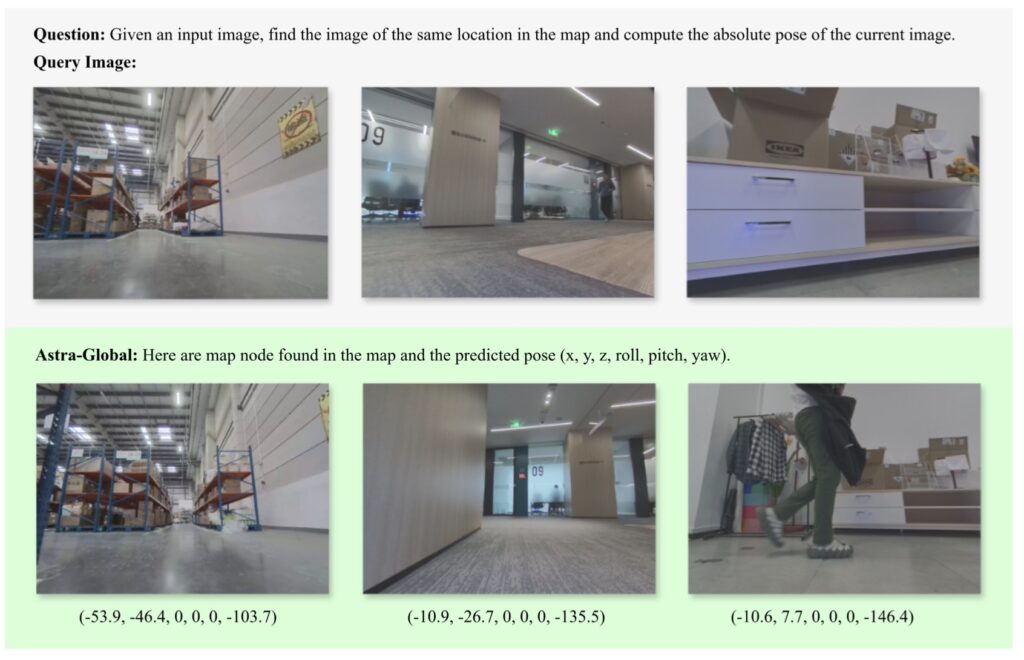

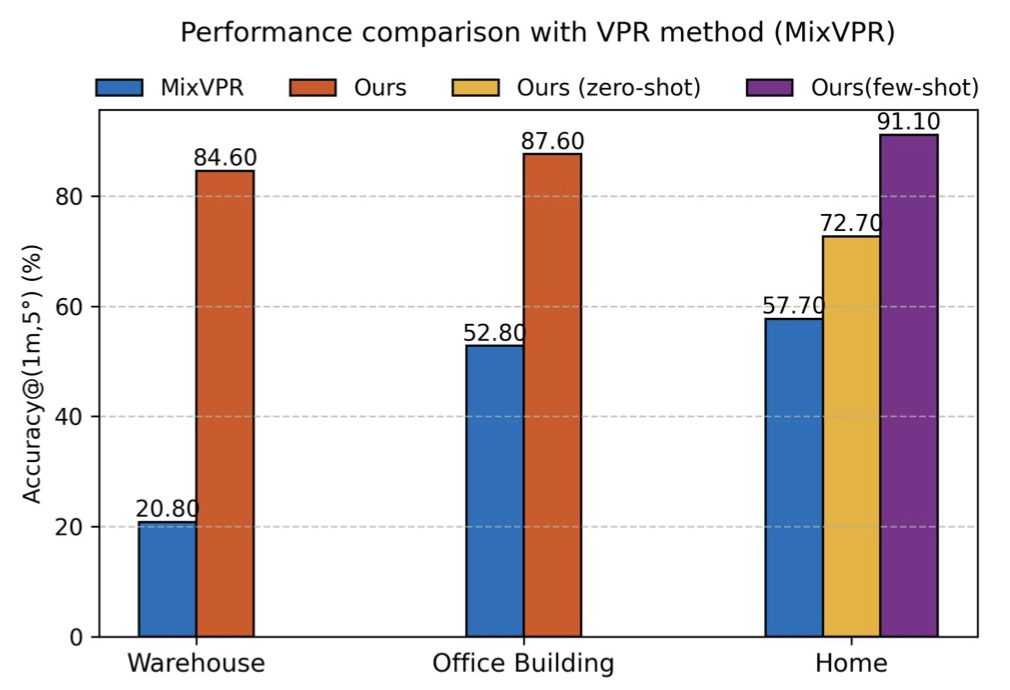

Astra-Global, the first pillar of this architecture, is a multimodal LLM that processes both vision and language inputs for localization. It operates on a pre-built hybrid topological-semantic map, which pairs spatial structure with semantic context. Given a query, whether a text description or an image, it uses this map to locate the goal or the robot’s own position. Unlike traditional visual place recognition methods, which often falter in unfamiliar settings, Astra-Global performs well across diverse environments and generalizes to unseen scenarios with little to no extra data. Imagine a robot finding its way to a target from nothing more than a text instruction or a snapshot of the location: that is the kind of task Astra-Global is built for.
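To make the idea of a hybrid topological-semantic map more concrete, here is a minimal sketch in Python. It is not Astra's implementation: the `MapNode` and `TopoSemanticMap` names, the label sets, and the keyword-overlap matching are all assumptions made for illustration. In particular, the keyword match is only a stand-in for the multimodal LLM that Astra-Global uses to interpret queries, and breadth-first search is just one simple way to get a global route over the graph.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class MapNode:
    """One place in the map: a rough position plus semantic labels (hypothetical)."""
    name: str
    position: tuple[float, float]
    labels: set[str] = field(default_factory=set)


class TopoSemanticMap:
    """Toy map: nodes carry semantics, edges carry traversability."""

    def __init__(self) -> None:
        self.nodes: dict[str, MapNode] = {}
        self.edges: dict[str, set[str]] = {}

    def add_node(self, node: MapNode) -> None:
        self.nodes[node.name] = node
        self.edges.setdefault(node.name, set())

    def connect(self, a: str, b: str) -> None:
        # Traversable links are undirected in this sketch.
        self.edges[a].add(b)
        self.edges[b].add(a)

    def localize_goal(self, query: str) -> str | None:
        """Return the node whose labels overlap the query words the most.

        Stand-in for the multimodal LLM; returns None if nothing matches.
        """
        words = set(query.lower().split())
        best, best_score = None, 0
        for name in sorted(self.nodes):  # sorted so ties resolve deterministically
            score = len(words & self.nodes[name].labels)
            if score > best_score:
                best, best_score = name, score
        return best

    def route(self, start: str, goal: str) -> list[str]:
        """Breadth-first search: the path with the fewest hops, or [] if none."""
        parents: dict[str, str | None] = {start: None}
        queue = deque([start])
        while queue:
            current = queue.popleft()
            if current == goal:
                path = []
                node: str | None = current
                while node is not None:
                    path.append(node)
                    node = parents[node]
                return path[::-1]
            for nxt in sorted(self.edges[current]):
                if nxt not in parents:
                    parents[nxt] = current
                    queue.append(nxt)
        return []


# Small made-up floor plan for demonstration.
demo_map = TopoSemanticMap()
demo_map.add_node(MapNode("lobby", (0.0, 0.0), {"lobby", "entrance", "reception"}))
demo_map.add_node(MapNode("corridor", (5.0, 0.0), {"corridor", "hallway"}))
demo_map.add_node(MapNode("kitchen", (5.0, 4.0), {"kitchen", "sink", "fridge"}))
demo_map.connect("lobby", "corridor")
demo_map.connect("corridor", "kitchen")

goal = demo_map.localize_goal("go to the kitchen")
print(goal, demo_map.route("lobby", goal))
```

The point of the sketch is the division of labor: semantic labels answer "which place does this query mean?", while the graph structure answers "how do I get there?".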

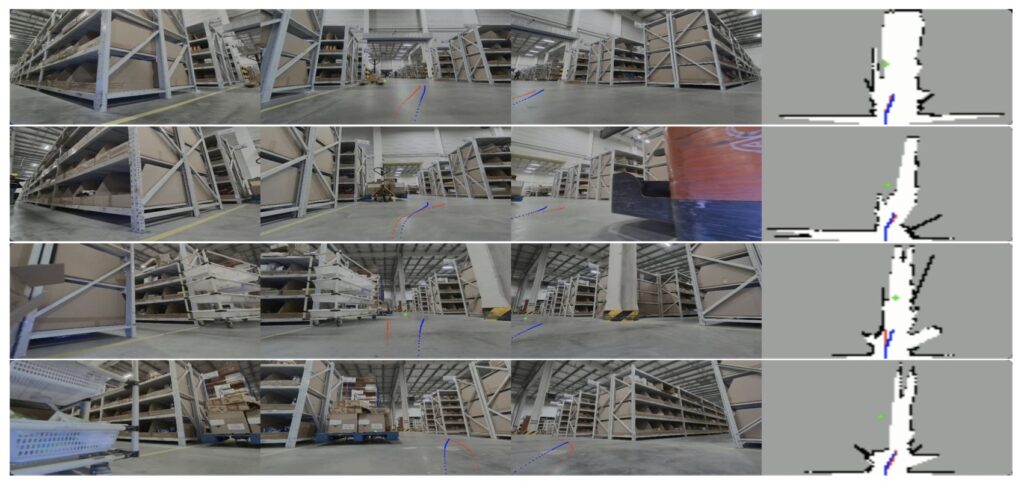

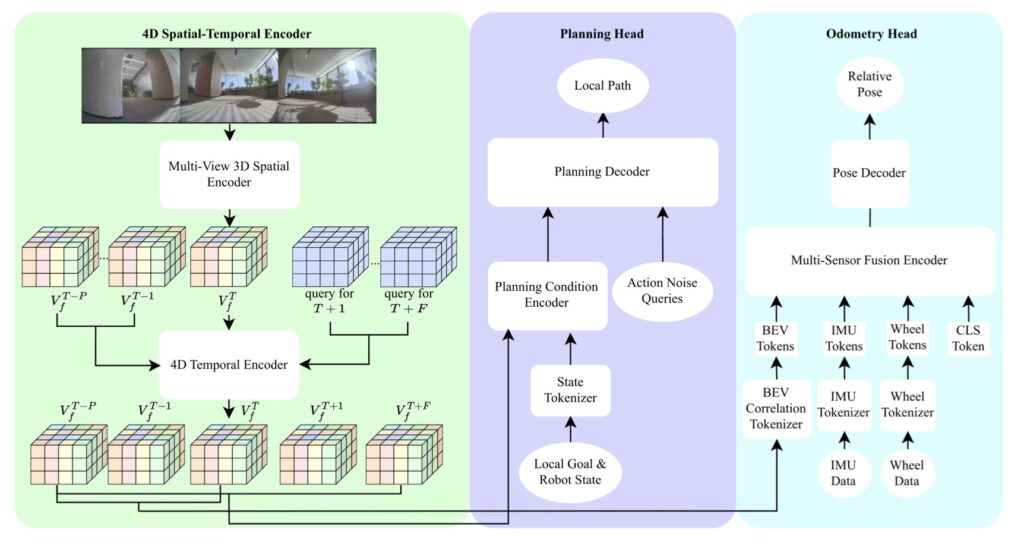

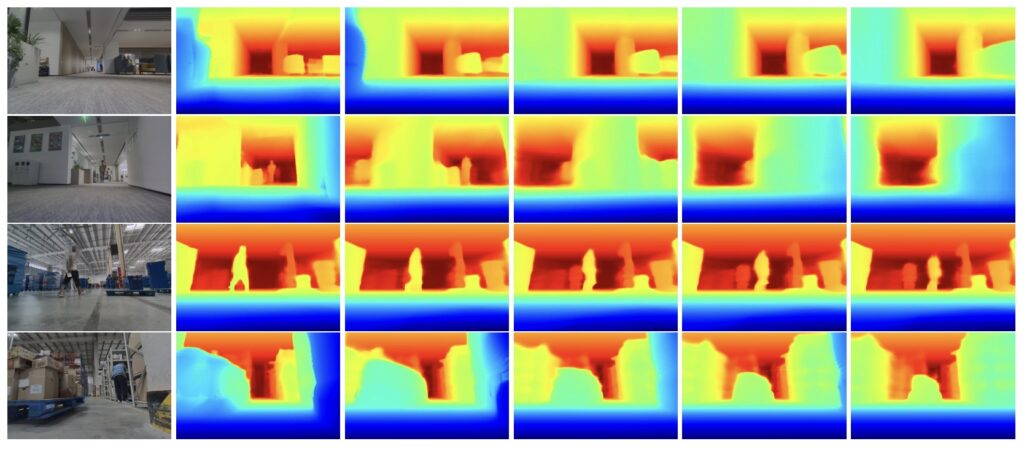

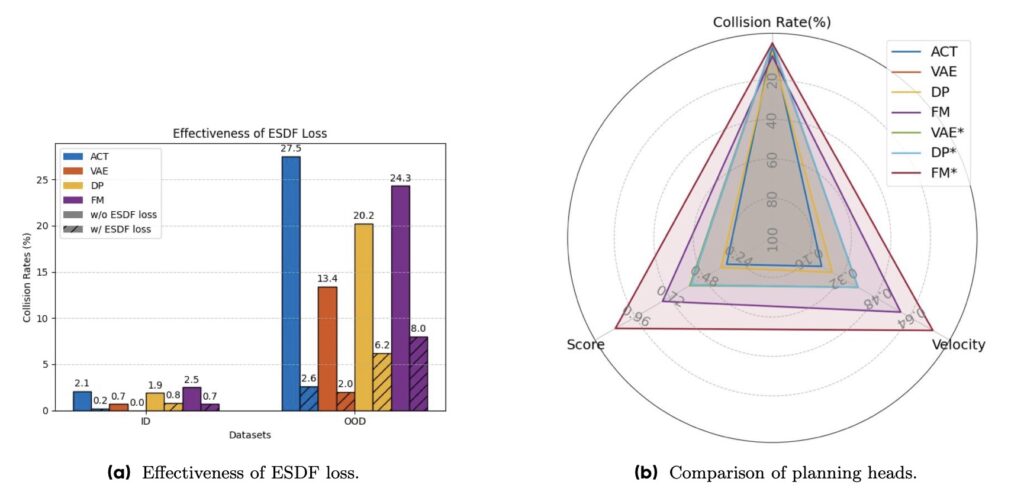

Meanwhile, Astra-Local focuses on the nitty-gritty of movement. This multi-sensor, multi-task network handles local path planning and odometry estimation, so the robot moves smoothly and safely toward its waypoints. At its heart is a 4D spatial-temporal encoder, pretrained with self-supervised learning, which produces robust features for the downstream tasks. The planning head combines flow matching with a novel masked ESDF (Euclidean Signed Distance Field) loss to minimize collision risk, producing precise local trajectories even in tight or cluttered spaces. The odometry head uses a transformer encoder to fuse data from multiple sensors and predict the robot’s relative pose with high accuracy. Because it draws on several sensors at once, Astra-Local can adapt to the real-time dynamics of its surroundings.
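The article does not spell out how the masked ESDF loss is formulated, so the following is only a rough sketch of the general idea behind an ESDF-based collision penalty. Everything specific here is an assumption: the brute-force distance computation, the squared-hinge penalty, the `margin` parameter, and the reading of "masked" as "ignore cells where the distance field is not trusted". An ESDF stores, for each cell, the distance to the nearest obstacle boundary, positive in free space and negative inside obstacles.

```python
import math


def signed_distance_field(occupancy):
    """Brute-force ESDF on a small grid, in units of cells.

    Free cells get the positive distance to the nearest occupied cell;
    occupied cells get the negative distance to the nearest free cell.
    Assumes the grid contains at least one occupied and one free cell.
    """
    rows, cols = len(occupancy), len(occupancy[0])
    cells = [(r, c) for r in range(rows) for c in range(cols)]
    occupied = [(r, c) for r, c in cells if occupancy[r][c]]
    free = [(r, c) for r, c in cells if not occupancy[r][c]]
    esdf = [[0.0] * cols for _ in range(rows)]
    for r, c in cells:
        inside = bool(occupancy[r][c])
        others = free if inside else occupied
        d = min(math.hypot(r - r2, c - c2) for r2, c2 in others)
        esdf[r][c] = -d if inside else d
    return esdf


def masked_esdf_loss(waypoints, esdf, mask, margin=1.5):
    """Mean squared hinge on clearance, over waypoints in masked-in cells.

    A waypoint is penalized only if its clearance is below `margin`
    (a hypothetical safety distance). Masked-out cells contribute nothing.
    """
    total, count = 0.0, 0
    for r, c in waypoints:
        if not mask[r][c]:
            continue  # cell is not trusted in this sketch, so skip it
        shortfall = max(0.0, margin - esdf[r][c])
        total += shortfall ** 2
        count += 1
    return total / count if count else 0.0


# 5x5 grid with a single obstacle in the middle.
grid = [[0] * 5 for _ in range(5)]
grid[2][2] = 1
esdf = signed_distance_field(grid)
full_mask = [[True] * 5 for _ in range(5)]

far_path = [(0, c) for c in range(5)]   # keeps two rows away from the obstacle
near_path = [(1, c) for c in range(5)]  # brushes past the obstacle

far_loss = masked_esdf_loss(far_path, esdf, full_mask)
near_loss = masked_esdf_loss(near_path, esdf, full_mask)
print(far_loss, near_loss)
```

In a learned planner, a differentiable version of this penalty would be added to the training objective so that generated trajectories are pushed away from obstacles. Here it only scores two fixed paths, and the path that passes next to the obstacle receives the larger penalty.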

The results are encouraging. Deployed on in-house mobile robots, Astra has demonstrated a high end-to-end mission success rate across varied indoor environments, from structured offices to chaotic storage facilities. It’s not just about getting from point A to B; it’s about doing so reliably, even when the setting throws curveballs like repetitive layouts or sudden obstacles. Traditional systems often require extensive customization or artificial aids such as QR codes, and Astra’s adaptability stands out by comparison.

But no innovation is without room for growth, and the team behind Astra is already eyeing the future. For Astra-Global, one limitation is the current map representation. While it strikes a decent balance between information loss and efficiency, it sometimes misses critical semantic details needed for pinpoint localization. The plan is to explore alternative map compression techniques that preserve more context without bogging down performance. Another challenge is that the model currently relies on single-frame observations, which can fail in featureless or highly repetitive spaces—think long, identical corridors where even a person might get turned around. To counter this, future iterations will enable active exploration and incorporate temporal reasoning, using sequential observations to build a more robust understanding of the environment over time.
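To see why sequential observations help in repetitive spaces, consider a toy example. The sketch below is a textbook discrete Bayes filter over the nodes of a corridor. It is an illustration of temporal reasoning in general and not the approach planned for Astra, which the article does not describe. The corridor layout, the `hit` and `miss` probabilities, and the assumption that the robot advances exactly one node per step are all made up for the example.

```python
def normalize(belief):
    """Scale a list of non-negative weights so they sum to 1."""
    total = sum(belief)
    return [b / total for b in belief]


def predict(belief):
    """Motion update: the robot moves one node forward (and stays at the end)."""
    n = len(belief)
    shifted = [0.0] * n
    for i, b in enumerate(belief):
        shifted[min(i + 1, n - 1)] += b
    return shifted


def correct(belief, appearance, observed, hit=0.9, miss=0.1):
    """Measurement update: favor nodes whose appearance matches the observation."""
    weighted = [
        b * (hit if a == observed else miss)
        for b, a in zip(belief, appearance)
    ]
    return normalize(weighted)


# A corridor where four of five nodes look identical.
appearance = ["wall", "wall", "wall", "door", "wall"]
uniform = [1.0 / len(appearance)] * len(appearance)

# Single frame: seeing "wall" leaves four nodes equally likely.
single_frame = correct(uniform, appearance, "wall")

# Sequence: the robot then advances twice, seeing "wall" and then "door".
belief = single_frame
for observation in ["wall", "door"]:
    belief = correct(predict(belief), appearance, observation)

best_node = belief.index(max(belief))
print([round(b, 3) for b in single_frame])
print([round(b, 3) for b in belief], best_node)
```

After one frame the belief is spread almost evenly over the four wall-like nodes. After three frames it concentrates on the node with the door, because only one motion history is consistent with the whole sequence.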

For Astra-Local, real-world testing has revealed a small but noticeable fallback rate, often due to the model’s struggles with out-of-distribution scenarios or the occasional misfire of a rule-based fallback system in edge cases. The roadmap includes bolstering the model’s resilience to these unusual situations and reimagining the fallback mechanism as a more seamless part of the overall system. Beyond that, there’s a push to weave in instruction-following capabilities, paving the way for natural human-robot interactions. Picture a robot that not only navigates flawlessly but also responds to casual commands or collaborates in dynamic, human-centric spaces—that’s the vision.
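The article does not describe how the rule-based fallback works, so the next sketch is purely hypothetical. It shows one simple way a safety rule could sit behind a learned planner: accept the learned trajectory if every waypoint keeps a minimum clearance from known obstacles, and otherwise hold position. The `PlanResult` type, the `safe_distance` threshold, and the hold-position behavior are assumptions. The `fallback_rate` helper shows how a fallback rate like the one mentioned above could be measured.

```python
import math
from dataclasses import dataclass


@dataclass
class PlanResult:
    trajectory: list  # list of (x, y) waypoints in meters
    source: str       # "learned" or "fallback"


def min_clearance(trajectory, obstacles):
    """Smallest distance between any waypoint and any obstacle point."""
    return min(math.dist(p, o) for p in trajectory for o in obstacles)


def plan_with_fallback(learned_trajectory, obstacles, current_position,
                       safe_distance=0.5):
    """Accept the learned plan only if it passes a simple clearance rule."""
    is_safe = bool(learned_trajectory) and (
        not obstacles
        or min_clearance(learned_trajectory, obstacles) >= safe_distance
    )
    if is_safe:
        return PlanResult(list(learned_trajectory), "learned")
    # Hypothetical fallback behavior: stay where we are.
    return PlanResult([current_position], "fallback")


def fallback_rate(results):
    """Fraction of planning cycles that ended in the fallback branch."""
    return sum(r.source == "fallback" for r in results) / len(results)


obstacles = [(1.0, 0.0)]
wide_berth = [(0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]  # stays 1 m from the obstacle
too_close = [(0.0, 0.0), (0.8, 0.1), (2.0, 0.0)]   # passes about 0.22 m away

results = [
    plan_with_fallback(wide_berth, obstacles, (0.0, 1.0)),
    plan_with_fallback(too_close, obstacles, (0.0, 0.0)),
]
print([r.source for r in results], fallback_rate(results))
```

A gate like this is easy to reason about, but it also illustrates the limitation noted above: a fixed rule can trigger in edge cases where the learned plan was actually fine, which is one motivation for integrating the fallback more tightly with the rest of the system.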

Looking at the bigger picture, Astra represents more than just a technical leap; it’s a glimpse into the future of robotics. As demands for adaptive, general-purpose robots grow, systems like Astra could redefine how we interact with machines in everyday life. From assisting in warehouses to supporting tasks at home, the potential applications are vast. The ongoing enhancements—whether it’s refining map representations, boosting robustness, or enabling richer communication—promise to make Astra even more versatile. For now, it stands as a testament to what’s possible when hierarchical multimodal learning meets real-world challenges, proving that the next generation of mobile robots isn’t just coming—it’s already here.