Replacing Stateless Retrieval with a Structured Framework for Long-Term Reasoning

- The Problem with “Digital Amnesia”: Current AI agents rely on shallow retrieval methods that treat memory as a simple storage bin, often failing to connect the dots over time or to distinguish facts from inferences.

- The HINDSIGHT Solution: A new architecture turns memory into a reasoning tool by organizing it into four structured networks (facts, experiences, entities, beliefs) and managing it through three operations: Retain, Recall, and Reflect.

- Proven Results: With an open-source 20B model, HINDSIGHT raises accuracy on long-term memory benchmarks from 39% (a full-context baseline on the same backbone) to over 83% and outperforms full-context GPT-4o.

We are increasingly demanding that our AI agents behave less like stateless search engines and more like long-term partners. Whether in customer support, personal companionship, or complex workflow automation, we expect these digital assistants to remember past interactions, track knowledge about the world, and maintain stable perspectives over time. We want a distinct personality that learns; instead, we often get a chatbot that forgets what was said only minutes ago.

The current generation of agent memory systems struggles to meet this demand because it is built around short-context Retrieval-Augmented Generation (RAG) pipelines. These designs treat memory as an external “hard drive”: a layer that extracts salient snippets from a conversation, stores them in vector or graph-based formats, and simply retrieves the top few relevant items to paste into the prompt of an otherwise stateless model. While this helps with basic personalization, it blurs the line between raw evidence and the AI’s inferences, struggles to organize information over long horizons, and offers almost no support for agents that need to explain why they believe what they believe.

HINDSIGHT: Memory as a Reasoning Substrate

To bridge this gap, researchers have developed a new architecture called HINDSIGHT. It shifts the paradigm by treating agent memory not as a passive storage bin but as a structured, first-class substrate for reasoning.

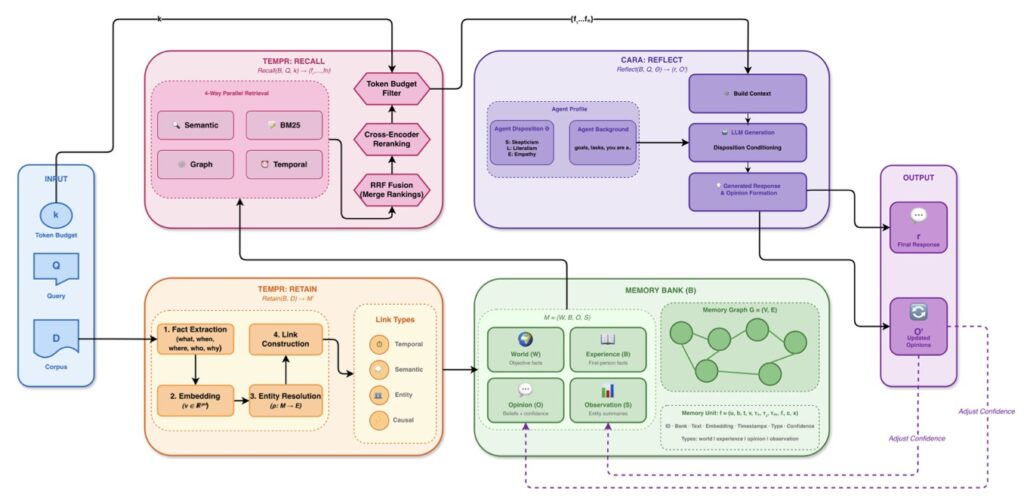

Instead of throwing all data into a single bucket, HINDSIGHT organizes an agent’s long-term memory into four logical networks:

- World Facts: Objective truths about the environment.

- Agent Experiences: A log of what the agent has done and seen.

- Synthesized Entity Summaries: Consolidated profiles of people, places, or objects.

- Evolving Beliefs: The agent’s current opinions or working theories, which can change over time.

This structured approach allows the system to distinguish between what it knows happened (evidence) and what it thinks about it (inference).
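To make the four-network layout concrete, here is a minimal Python sketch of how such a memory bank might be represented. All class and method names (`Fact`, `Experience`, `EntitySummary`, `Belief`, `MemoryBank`, `justify`) are hypothetical illustrations, not HINDSIGHT's actual API. The key assumption is that each belief stores the IDs of the facts or experiences it rests on, so evidence and inference stay in separate networks and any belief can be traced back to its sources.

```python
# Hypothetical sketch of a four-network memory bank; not the HINDSIGHT API.
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Fact:
    """World fact: an objective statement about the environment (evidence)."""
    id: str
    text: str


@dataclass
class Experience:
    """Agent experience: something the agent did or observed (evidence)."""
    id: str
    text: str


@dataclass
class EntitySummary:
    """Synthesized profile of a person, place, or object."""
    name: str
    summary: str
    source_ids: List[str] = field(default_factory=list)


@dataclass
class Belief:
    """Evolving belief: an inference that cites the evidence it rests on."""
    id: str
    text: str
    confidence: float
    evidence_ids: List[str] = field(default_factory=list)


@dataclass
class MemoryBank:
    facts: Dict[str, Fact] = field(default_factory=dict)
    experiences: Dict[str, Experience] = field(default_factory=dict)
    entities: Dict[str, EntitySummary] = field(default_factory=dict)
    beliefs: Dict[str, Belief] = field(default_factory=dict)

    def is_evidence(self, item_id: str) -> bool:
        # Facts and experiences count as evidence; beliefs are inferences.
        return item_id in self.facts or item_id in self.experiences

    def justify(self, belief_id: str) -> List[str]:
        """Return the text of every piece of evidence a belief cites."""
        out = []
        for eid in self.beliefs[belief_id].evidence_ids:
            if eid in self.facts:
                out.append(self.facts[eid].text)
            elif eid in self.experiences:
                out.append(self.experiences[eid].text)
        return out


bank = MemoryBank()
bank.facts["f1"] = Fact("f1", "Alice lives in Berlin.")
bank.experiences["e1"] = Experience("e1", "Alice asked me for vegan restaurant tips.")
bank.entities["Alice"] = EntitySummary(
    "Alice", "Lives in Berlin; interested in vegan food.", ["f1", "e1"])
bank.beliefs["b1"] = Belief("b1", "Alice is probably vegan.", 0.7, ["e1"])
print(bank.justify("b1"))  # ['Alice asked me for vegan restaurant tips.']
```

Keeping beliefs in their own network, with explicit links to evidence, means the agent can revise or drop a belief without touching the facts and experiences that support it.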

Retain, Recall, Reflect: The Three Pillars

The HINDSIGHT framework is governed by three specific operations that manage how information flows through the system:

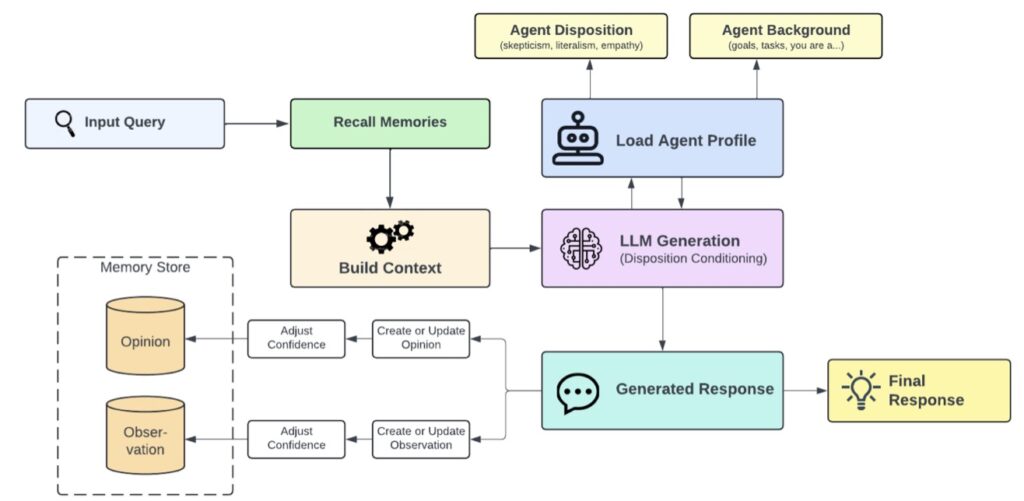

- Retain: This operation handles how information is added to the memory bank, incrementally turning conversational streams into structured data.

- Recall: This governs how the agent accesses relevant information when prompted.

- Reflect: Perhaps the most critical innovation, this layer allows the agent to reason over its memory bank. It produces answers and updates information in a traceable way.
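The three operations can be sketched as methods on a toy memory bank. This is an illustration under simplifying assumptions, not HINDSIGHT's implementation: Retain naively splits text into sentence-level memories, Recall ranks by word overlap in place of vector or graph retrieval, and Reflect takes its conclusion from the caller where a real system would ask a language model. All names, including `HypotheticalMemoryBank`, are hypothetical.

```python
# Hypothetical sketch of Retain / Recall / Reflect; not the HINDSIGHT API.
import re
from dataclasses import dataclass, field
from typing import List, Set


def _tokens(text: str) -> Set[str]:
    # Lowercased word tokens; a stand-in for embeddings or graph lookups.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class Memory:
    id: int
    text: str
    kind: str  # "fact" / "experience" (evidence) or "belief" (inference)
    evidence_ids: List[int] = field(default_factory=list)


class HypotheticalMemoryBank:
    def __init__(self) -> None:
        self.items: List[Memory] = []

    def retain(self, utterance: str, kind: str = "fact") -> List[int]:
        """Retain: split an utterance into sentence-level memories."""
        ids = []
        for sentence in re.split(r"(?<=[.!?])\s+", utterance.strip()):
            if sentence:
                mem = Memory(len(self.items), sentence, kind)
                self.items.append(mem)
                ids.append(mem.id)
        return ids

    def recall(self, query: str, k: int = 3) -> List[Memory]:
        """Recall: rank stored memories by word overlap with the query."""
        q = _tokens(query)
        scored = [(len(q & _tokens(m.text)), m) for m in self.items]
        scored = [(s, m) for s, m in scored if s > 0]
        # Highest overlap first; ties broken by insertion order.
        scored.sort(key=lambda pair: (-pair[0], pair[1].id))
        return [m for _, m in scored[:k]]

    def reflect(self, question: str, conclusion: str) -> Memory:
        """Reflect: store a belief that cites the evidence it was drawn from.

        A real system would have an LLM derive `conclusion` from the
        recalled evidence; the caller supplies it here to stay runnable.
        """
        evidence = [m for m in self.recall(question) if m.kind != "belief"]
        belief = Memory(len(self.items), conclusion, "belief",
                        [m.id for m in evidence])
        self.items.append(belief)
        return belief


bank = HypotheticalMemoryBank()
bank.retain("Bob moved to Lisbon in March. Bob works remotely.")
bank.retain("I recommended a Lisbon coworking space to Bob.", kind="experience")
belief = bank.reflect("Where does Bob work?", "Bob likely works from Lisbon.")
print(belief.evidence_ids)  # → [0, 1, 2]
```

Because Reflect filters out earlier beliefs and records `evidence_ids`, the agent can later answer "why do you think that?" by pointing to stored facts and experiences rather than to its own previous inferences.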

By implementing these as explicit operations, the architecture separates the raw evidence of a conversation from synthesized summaries. This creates a “temporal, entity-aware memory layer” that allows the AI to look back and understand the evolution of a conversation, rather than just grabbing keywords.
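One way to picture a temporal, entity-aware layer is to index each memory by the entities it mentions and by when it was recorded, then read an entity's memories in time order. The sketch below is a hypothetical illustration (`TimedMemory` and `timeline` are not from HINDSIGHT). It shows why ordering matters: a keyword search for "coffee" would surface only Carol's outdated preference, while the timeline shows that the preference changed.

```python
# Hypothetical sketch of entity-scoped, time-ordered recall; not the HINDSIGHT API.
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet, List


@dataclass(frozen=True)
class TimedMemory:
    text: str
    when: date
    entities: FrozenSet[str]


def timeline(memories: List[TimedMemory], entity: str) -> List[str]:
    """Return the memories that mention `entity`, oldest first."""
    hits = [m for m in memories if entity in m.entities]
    hits.sort(key=lambda m: m.when)
    return [f"{m.when.isoformat()}: {m.text}" for m in hits]


memories = [
    TimedMemory("Carol now prefers tea.", date(2024, 6, 2), frozenset({"Carol"})),
    TimedMemory("Carol drinks coffee every morning.", date(2024, 1, 15), frozenset({"Carol"})),
    TimedMemory("Dan joined the project.", date(2024, 3, 1), frozenset({"Dan"})),
]
for line in timeline(memories, "Carol"):
    print(line)
# 2024-01-15: Carol drinks coffee every morning.
# 2024-06-02: Carol now prefers tea.
```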

Unprecedented Performance Gains

The impact of this structured memory is not just theoretical. On key long-horizon conversational memory benchmarks such as LongMemEval and LoCoMo, the gains are large.

Using an open-source 20B model, HINDSIGHT lifted overall accuracy from 39%, the score of a full-context baseline with the same backbone, to 83.6%. It also outperformed full-context GPT-4o. With a larger backbone model, HINDSIGHT reached 91.4% on LongMemEval and nearly 90% on LoCoMo, well ahead of the strongest prior open systems, which hovered around 75%. These results indicate that the structure of memory matters as much as the size of the model.

The Future of Resilient Agents

HINDSIGHT paves the way for even more robust AI applications. Future development aims to move beyond fixed pipelines by jointly optimizing fact extraction, graph construction, and retrieval—potentially using reinforcement learning loops to explore the interplay between retaining, recalling, and reflecting.

Furthermore, there is immense potential in integrating this memory architecture with richer tool-use and workflow orchestration. Researchers also plan to extend the opinion and belief layers to support “controlled forgetting” and time-aware mechanisms. By moving away from thin retrieval layers and embracing structured, reflective memory, we are one step closer to AI agents that truly understand the world they interact with.