The First Open-Source, Native 1-Bit LLM at Scale

- BitNet b1.58 2B4T is the first open-source, native 1-bit Large Language Model (LLM) at the 2-billion parameter scale, trained on 4 trillion tokens.

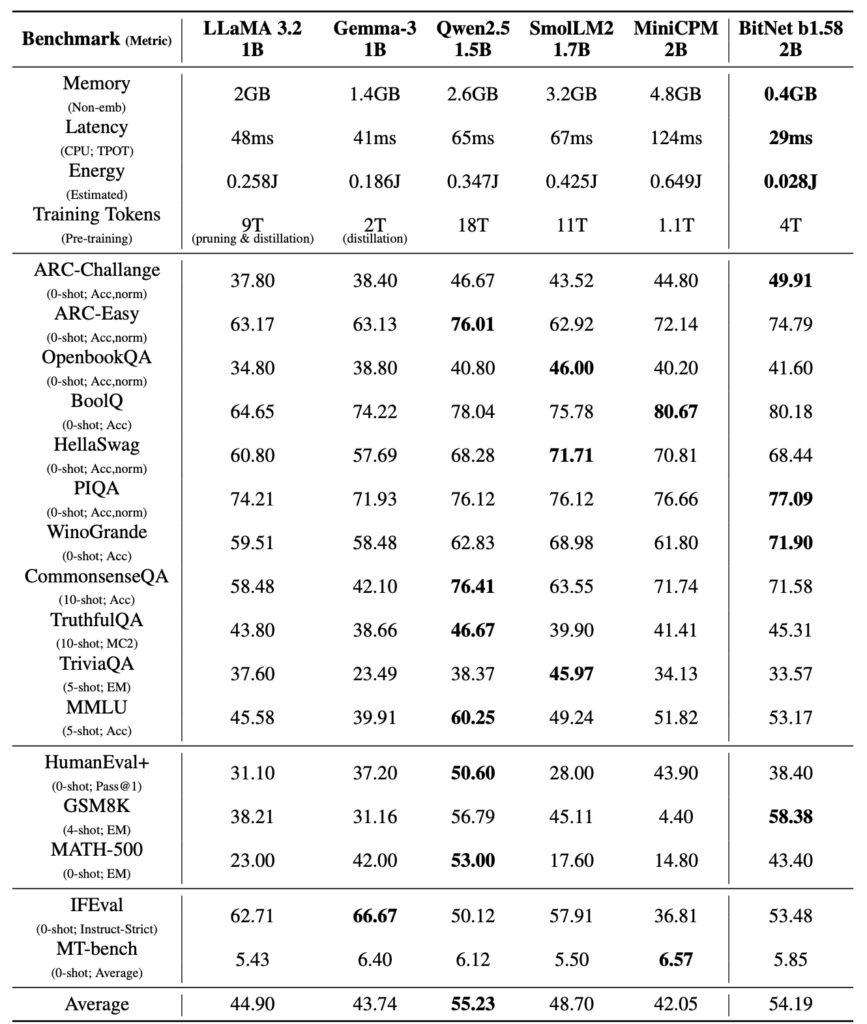

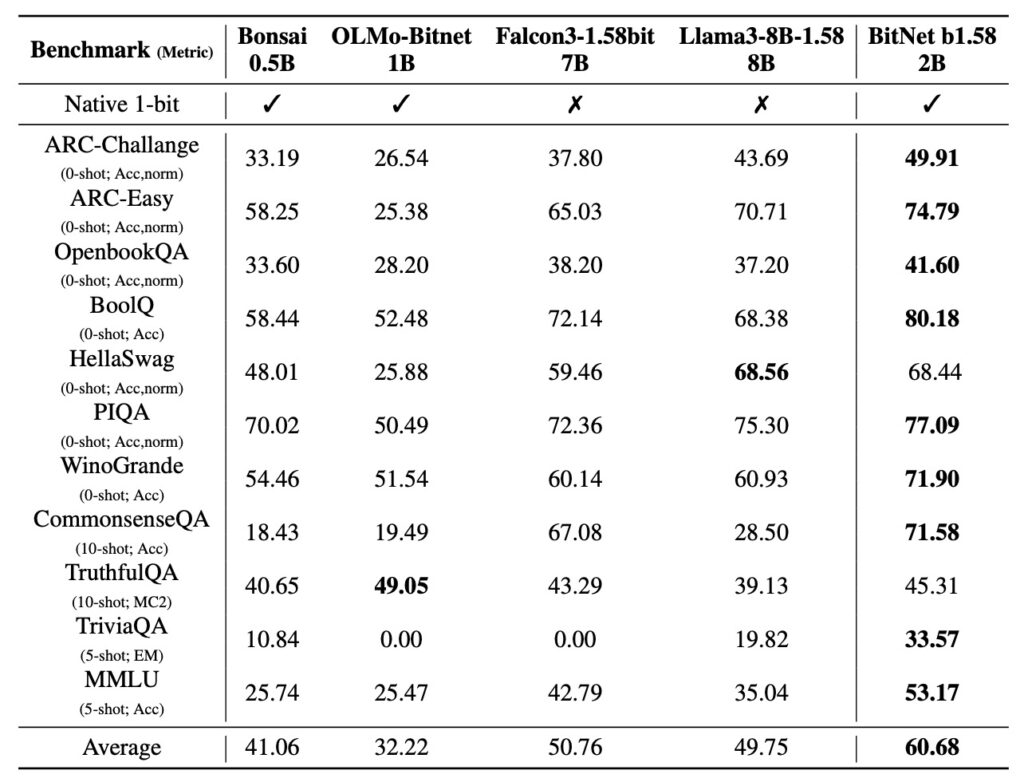

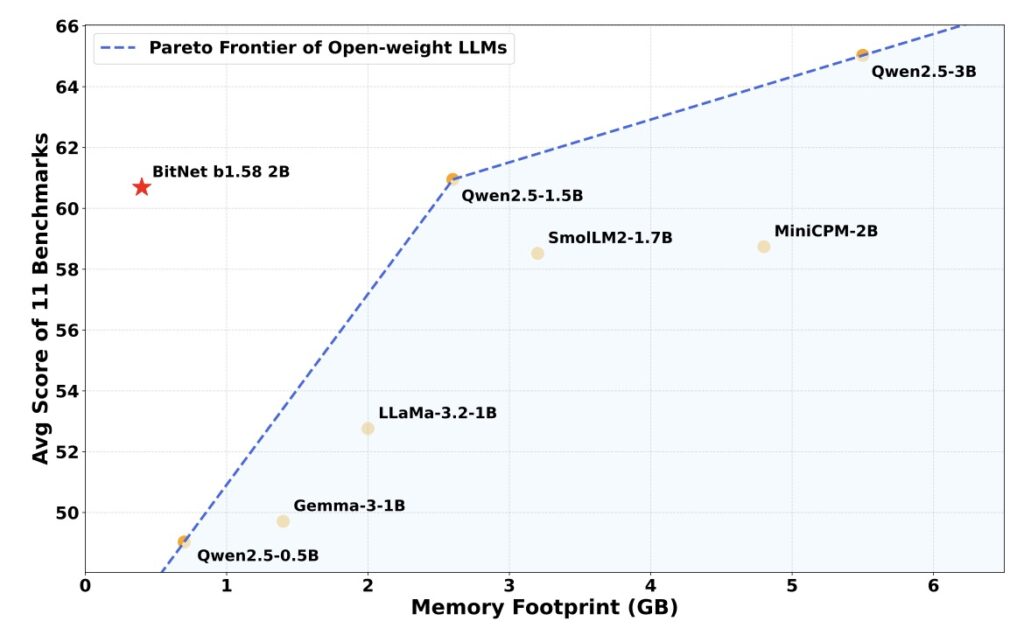

- It achieves performance comparable to state-of-the-art full-precision models while drastically reducing memory usage, energy consumption, and inference latency.

- The model is freely available on Hugging Face, with open-source implementations for both GPU and CPU, paving the way for efficient AI deployment in resource-constrained environments.

The rapid evolution of Large Language Models (LLMs) has transformed fields like natural language processing, code generation, and conversational AI. However, the computational demands of these models often put them out of reach for many users and applications. BitNet b1.58 2B4T tackles this challenge directly, offering a highly efficient, open-source alternative without compromising performance.

This article explores the technical innovations behind BitNet b1.58 2B4T, its performance benchmarks, and its potential to democratize AI by enabling deployment in resource-constrained environments.

The Challenge of Efficiency in LLMs

Open-source LLMs have been instrumental in democratizing AI, fostering innovation, and enabling research across diverse domains. However, their adoption is often hindered by the substantial computational resources required for deployment and inference.

State-of-the-art LLMs typically demand:

- Large memory footprints for storing model weights.

- High energy consumption during training and inference.

- Significant latency, making real-time applications impractical.

These challenges are particularly pronounced in edge devices, resource-constrained environments, and real-time systems. Addressing these limitations requires innovative approaches to model design and training.

1-Bit Quantization: A Game-Changer

1-bit quantization is a radical yet promising answer to the efficiency challenges of LLMs. By constraining model weights (and potentially activations) to binary values {-1, +1} or ternary values {-1, 0, +1}, this approach drastically reduces memory requirements and enables highly efficient bitwise computations. The "b1.58" in the model's name refers to the ternary case: a weight that takes one of three values carries log2(3) ≈ 1.58 bits of information.
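To make the idea concrete, here is a minimal sketch of ternary weight quantization in plain Python. It assumes an absmean-style quantizer (scale by the mean absolute weight, round, then clip to [-1, 1]); the article itself does not spell out the exact scheme, so the function names and details below are illustrative rather than the model's actual implementation. The second function shows why ternary weights are cheap at inference time: a dot product needs only additions and subtractions, plus one final rescale.

```python
import math


def absmean_ternary_quantize(weights, eps=1e-8):
    """Map float weights to ternary values in {-1, 0, +1}.

    Assumed scheme (illustrative): divide by the mean absolute value,
    round to the nearest integer, and clip to [-1, 1].
    Returns (ternary_weights, scale) so that w is roughly scale * t.
    """
    scale = sum(abs(w) for w in weights) / len(weights)
    ternary = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return ternary, scale


def ternary_dot(ternary, activations, scale):
    """Dot product with ternary weights: add, subtract, or skip each input."""
    acc = 0.0
    for t, x in zip(ternary, activations):
        if t == 1:
            acc += x
        elif t == -1:
            acc -= x
        # t == 0 contributes nothing, so the input is skipped entirely
    return scale * acc


# Information content of one three-valued weight, in bits.
bits_per_ternary_weight = math.log2(3)

# Hypothetical example weights and activations.
weights = [0.9, -0.05, -1.2, 0.4, 0.02, -0.6]
activations = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]

ternary, scale = absmean_ternary_quantize(weights)
print(ternary)  # [1, 0, -1, 1, 0, -1]
print(round(bits_per_ternary_weight, 3))  # 1.585
print(ternary_dot(ternary, activations, scale))
```

Small weights collapse to 0 and large ones saturate at -1 or +1, so most of the per-weight precision is discarded and only the shared scale keeps the overall magnitude.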

While previous efforts in 1-bit quantization have shown promise, they often fall short in two key areas:

- Post-Training Quantization (PTQ): Applying quantization to pre-trained full-precision models often leads to significant performance degradation.

- Native 1-Bit Models: Models trained from scratch with 1-bit weights have been limited to smaller scales, failing to match the capabilities of larger, full-precision counterparts.

BitNet b1.58 2B4T addresses these limitations by introducing a native 1-bit LLM trained at an unprecedented scale of 2 billion parameters.

BitNet b1.58 2B4T: A Technical Breakthrough

BitNet b1.58 2B4T is the first open-source, native 1-bit LLM trained on a massive corpus of 4 trillion tokens. Training at this scale is what allows the model to reach performance on par with leading full-precision LLMs of similar size.

Key Features and Innovations:

- Performance Parity: Comprehensive evaluations across benchmarks for language understanding, mathematical reasoning, coding proficiency, and conversational ability demonstrate that BitNet b1.58 2B4T matches the performance of state-of-the-art full-precision models.

- Efficiency Gains: The model offers substantial reductions in:

  - Memory Footprint: Requires significantly less memory to store weights.

  - Energy Consumption: Operates with lower energy requirements, making it environmentally friendly.

  - Inference Latency: Enables faster response times, ideal for real-time applications.

- Open-Source Accessibility: The model weights are freely available on Hugging Face, along with optimized inference implementations for both GPU (via custom CUDA kernels) and CPU (via the bitnet.cpp library).
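As a rough illustration of the memory-footprint point, the back-of-the-envelope calculation below estimates how much storage the weights of a 2-billion-parameter model need at different bit widths. It is an idealized sketch: it assumes every parameter is quantized, and it ignores embeddings kept at higher precision, scaling factors, activations, and the KV cache, so real measurements will differ. The helper name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Idealized weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# 2 billion parameters, per the article; assumed here to be fully quantized.
NUM_PARAMS = 2_000_000_000

formats = [
    ("16-bit float", 16),
    ("8-bit integer", 8),
    ("2-bit packed ternary", 2),       # a simple way to store {-1, 0, +1}
    ("1.58-bit ideal ternary", 1.58),  # information-theoretic lower bound
]

for label, bits in formats:
    print(f"{label:>24}: {weight_memory_gb(NUM_PARAMS, bits):.3f} GB")
```

Under these assumptions, 16-bit weights take about 4 GB, while ternary weights fit in roughly 0.4 to 0.5 GB depending on how they are packed. That is roughly an order-of-magnitude reduction in weight storage.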

Democratizing AI with BitNet b1.58 2B4T

BitNet b1.58 2B4T represents a paradigm shift in the development and deployment of LLMs. By proving that high performance can be achieved with extreme quantization, it challenges the necessity of full-precision weights in large-scale models.

Implications for the AI Ecosystem:

- Wider Accessibility: The reduced computational requirements make advanced AI capabilities accessible to a broader audience, including researchers, developers, and organizations with limited resources.

- Edge Applications: The model’s efficiency enables deployment on edge devices, unlocking new possibilities for real-time and on-device AI applications.

- Sustainability: Lower energy consumption aligns with global efforts to reduce the environmental impact of AI technologies.

BitNet b1.58 2B4T is a testament to the potential of 1-bit quantization in overcoming the efficiency challenges of LLMs. As the first open-source, native 1-bit LLM at the 2-billion parameter scale, it sets a new standard for performance and accessibility in AI.

By making advanced AI capabilities more efficient and widely available, BitNet b1.58 2B4T paves the way for a future where powerful language models can be deployed in even the most resource-constrained environments. This innovation not only democratizes AI but also inspires further research and development in the quest for sustainable and efficient AI solutions.