Transforming Single Images into Decomposable 3D Models with Unprecedented Precision

- PartCrafter introduces a groundbreaking approach to 3D modeling by generating multiple semantically meaningful and geometrically distinct 3D meshes from a single RGB image, bypassing the limitations of traditional monolithic or two-stage methods.

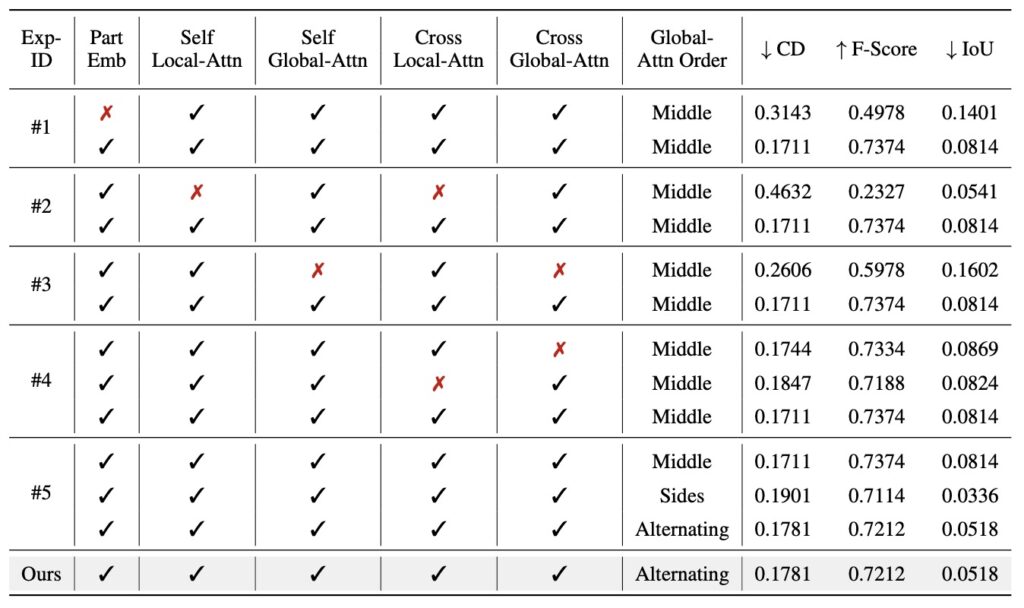

- Leveraging a compositional latent space and a hierarchical attention mechanism, PartCrafter ensures both local detail and global coherence, enabling part-aware synthesis of complex objects and scenes.

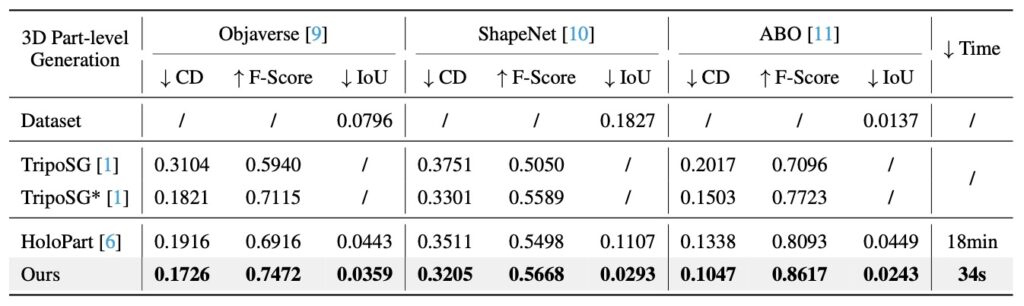

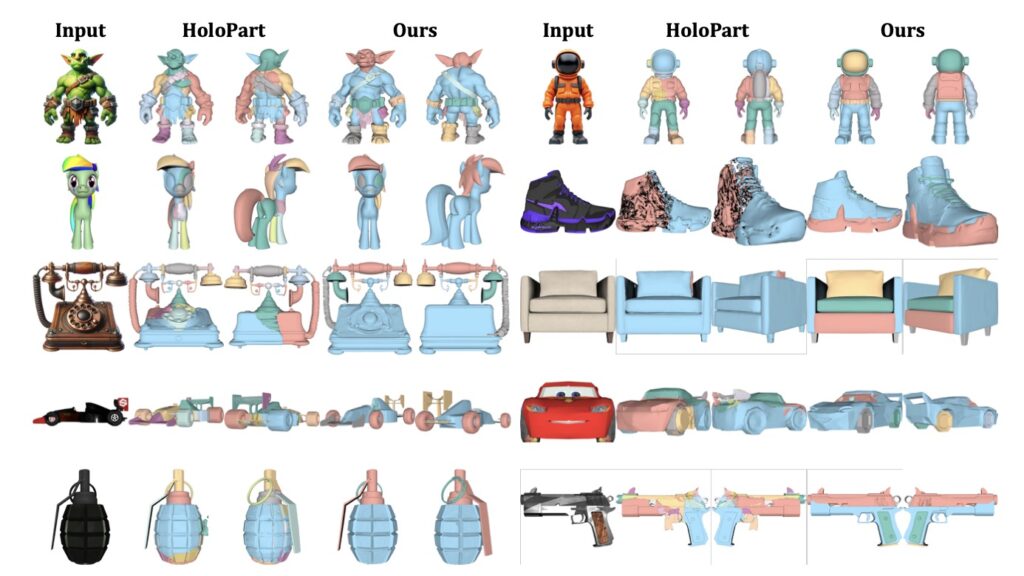

- Supported by a newly curated dataset with part-level annotations, PartCrafter outperforms existing models in creating editable, decomposable 3D assets; the code and data are slated for release to support future work.

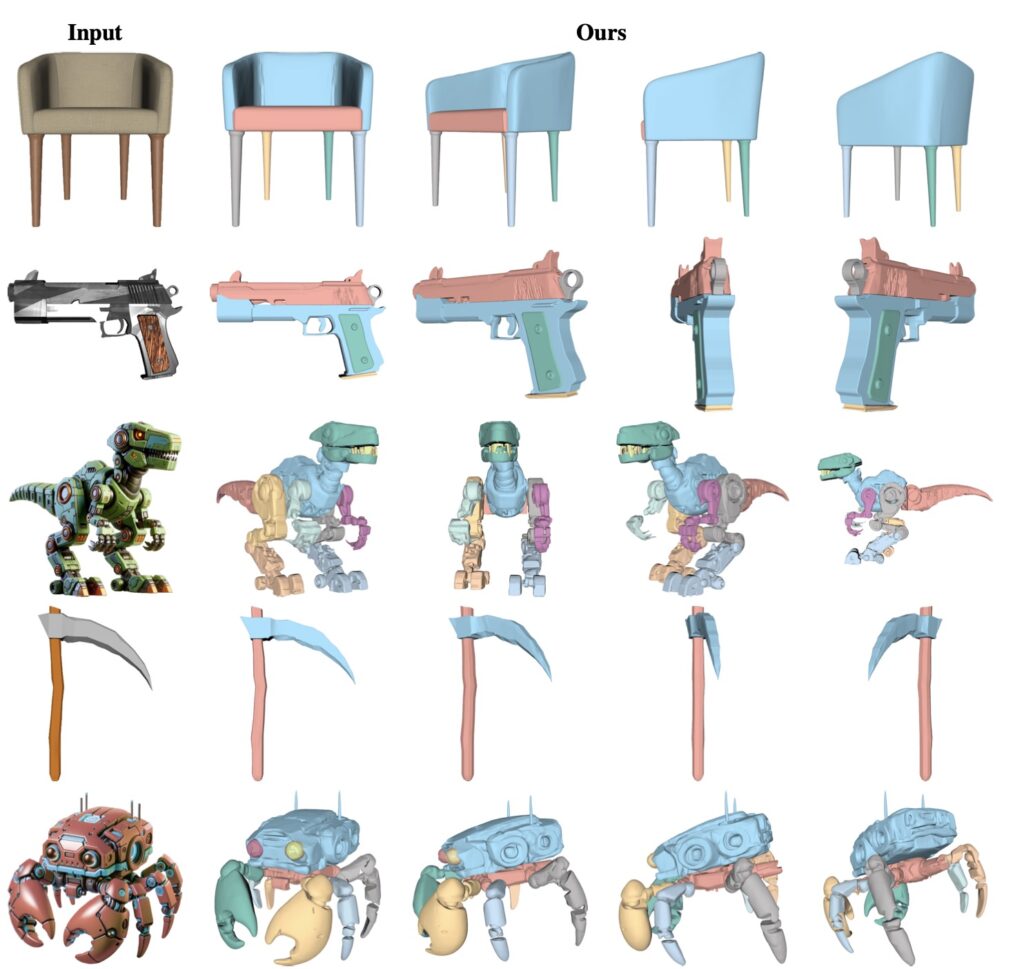

Imagine a world where a single photograph can be transformed into a fully structured, editable 3D model, with each part—be it the legs of a chair or the wheels of a car—distinctly crafted and ready for manipulation. This is no longer a distant dream but a reality brought to life by PartCrafter, the first structured 3D generative model of its kind. Unlike conventional methods that either produce a single, indivisible 3D shape or rely on cumbersome two-stage processes involving image segmentation followed by reconstruction, PartCrafter offers a unified, end-to-end solution. By simultaneously denoising multiple 3D parts from just one RGB image, it paves the way for generating both individual objects and intricate multi-object scenes with remarkable precision and flexibility.

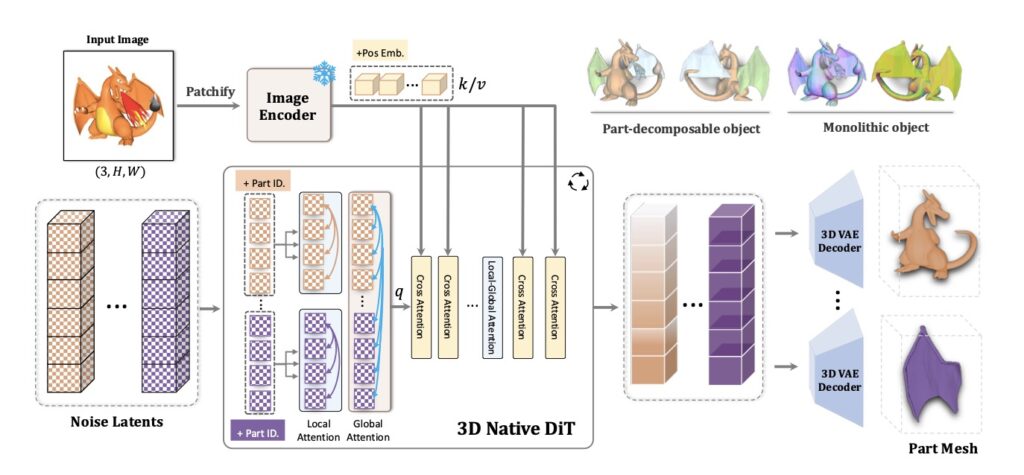

At the heart of PartCrafter lies an observation about how humans perceive and interact with the world: through objects and their parts. These semantically coherent units are the building blocks of higher-level cognitive tasks like language, planning, and reasoning. While most neural networks struggle to grasp such structured, symbol-like entities, PartCrafter embraces this compositional nature. It builds upon the foundation of pretrained 3D mesh diffusion transformers (DiT), inheriting their weights, encoder, and decoder, and introduces two key innovations. First, it employs a compositional latent space in which each 3D part is represented by its own set of disentangled latent tokens, distinguished from the other parts' tokens by a learnable part identity embedding. Second, it incorporates a local-global denoising transformer with a hierarchical attention mechanism: information flows within each part to capture detailed local features, and across parts to maintain global coherence. Combined with image conditioning, this design aims to keep each generated part independent yet semantically consistent with the whole.
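To make the two ideas concrete, here is a minimal sketch in plain Python. It is not PartCrafter's implementation: it assumes the latent tokens of all parts are concatenated into one sequence, that a part identity embedding is simply added to each token of that part, and that "local" and "global" attention can be expressed as boolean masks over that sequence. All function names (`part_ids`, `local_mask`, `global_mask`, `add_part_embedding`) are hypothetical, and how the real model interleaves the two attention types is not covered here.

```python
from typing import List

def part_ids(num_parts: int, tokens_per_part: int) -> List[int]:
    """Part index of every token when the per-part latents are concatenated."""
    return [p for p in range(num_parts) for _ in range(tokens_per_part)]

def local_mask(ids: List[int]) -> List[List[bool]]:
    """Local attention: token i may attend to token j only within the same part."""
    return [[a == b for b in ids] for a in ids]

def global_mask(ids: List[int]) -> List[List[bool]]:
    """Global attention: every token may attend to every token of every part."""
    n = len(ids)
    return [[True] * n for _ in range(n)]

def add_part_embedding(
    tokens: List[List[float]],
    ids: List[int],
    part_table: List[List[float]],
) -> List[List[float]]:
    """Add each part's identity vector (assumed learnable) to its tokens."""
    return [
        [t + e for t, e in zip(token, part_table[pid])]
        for token, pid in zip(tokens, ids)
    ]

# Two parts with three latent tokens each.
ids = part_ids(num_parts=2, tokens_per_part=3)   # [0, 0, 0, 1, 1, 1]
local = local_mask(ids)      # block-diagonal: attention stays inside a part
global_ = global_mask(ids)   # dense: attention crosses part boundaries
```

In an attention layer, a mask like `local` would confine each token to its own part, while `global_` would let parts exchange information; alternating or combining the two is one plausible reading of "hierarchical attention".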

The limitations of existing 3D generation models are well-documented. Diffusion-based approaches have excelled at creating entire object meshes from scratch or from images, but they often lack part-level decomposition, restricting their use in practical applications like texture mapping, animation, or scene editing. Two-stage pipelines, which segment the image into parts before reconstructing each part in 3D, try to address this but suffer from segmentation errors, high computational costs, and scalability challenges. PartCrafter avoids the separate segmentation stage. Its compositional architecture allows for the joint synthesis of multiple distinct 3D parts, each tied to a dedicated set of latent variables. This means parts can be independently edited, removed, or added without affecting the rest of the object, a level of flexibility that monolithic and two-stage methods do not offer.
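The bookkeeping behind "one dedicated set of latents per part" can be sketched as follows. This is an illustration under assumptions, not PartCrafter's API: the `Latents` layout, the part names, and the helpers `remove_part` and `replace_part` are all hypothetical, and decoding the latents back into meshes is out of scope.

```python
from typing import Dict, List

# Hypothetical layout: part name -> that part's list of latent tokens.
Latents = Dict[str, List[List[float]]]

def remove_part(latents: Latents, name: str) -> Latents:
    """Return a copy without the named part; the other parts are untouched."""
    return {k: v for k, v in latents.items() if k != name}

def replace_part(latents: Latents, name: str, new_tokens: List[List[float]]) -> Latents:
    """Return a copy in which only the named part's tokens are swapped."""
    if name not in latents:
        raise KeyError(name)
    updated = dict(latents)
    updated[name] = new_tokens
    return updated

chair: Latents = {
    "seat": [[0.1, 0.2]],
    "back": [[0.3, 0.4]],
    "legs": [[0.5, 0.6]],
}
stool = remove_part(chair, "back")                      # seat and legs only
taller = replace_part(chair, "legs", [[0.9, 0.9]])      # seat and back reused as-is
```

Because each part owns its tokens, an edit touches one dictionary entry and leaves the rest unchanged, which is the property the paragraph above describes.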

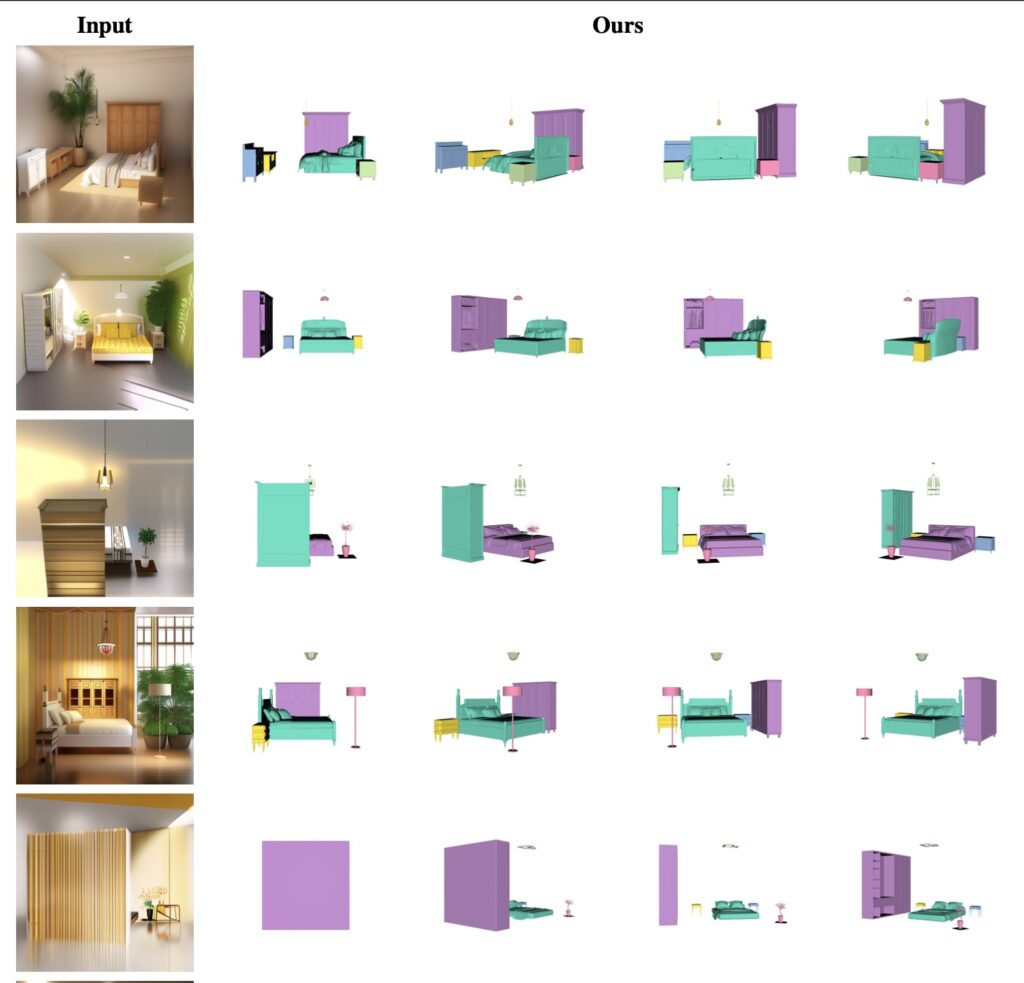

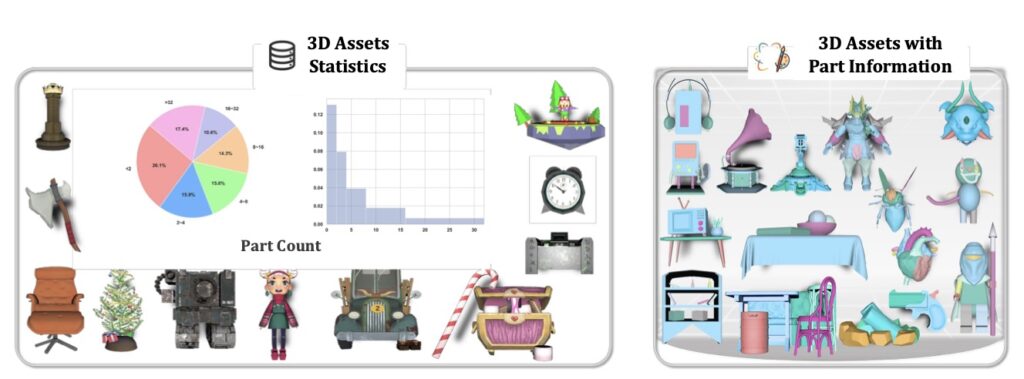

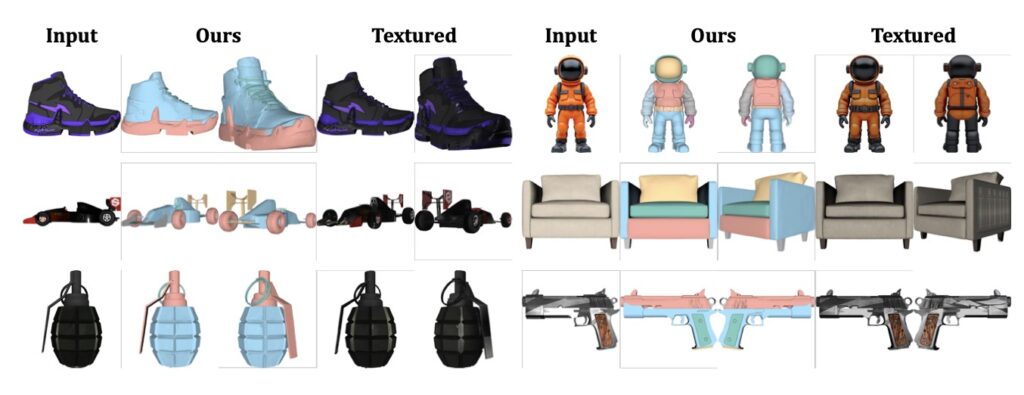

To train the model, the team behind PartCrafter curated a dataset by mining part-level annotations from large-scale 3D object repositories like Objaverse, ShapeNet, and the Amazon Berkeley Objects (ABO) dataset. Unlike prior efforts that flattened these assets into single meshes, the curated dataset retains their modular components, yielding a collection of part-annotated 3D models. For scene-level generation, the model also leverages the 3D-FRONT dataset so that it can handle complex multi-object environments. According to the reported experiments, PartCrafter not only outperforms existing methods in generating decomposable 3D meshes but also synthesizes parts that are not directly visible in the input image, thanks to its part-aware generative priors.
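As a rough illustration of what "retaining modular components" could look like during curation, the sketch below keeps only assets that still carry several separately stored parts. The `Asset` record, the `keep_part_annotated` helper, and the `min_parts`/`max_parts` thresholds are assumptions made for this example; the article does not describe the actual filtering rules.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Asset:
    asset_id: str
    part_names: List[str]  # one entry per separately stored sub-mesh

def keep_part_annotated(
    assets: List[Asset],
    min_parts: int = 2,    # hypothetical: a single mesh carries no part structure
    max_parts: int = 16,   # hypothetical: cap to keep training sequences manageable
) -> List[Asset]:
    """Keep assets whose part count falls inside the chosen range."""
    return [a for a in assets if min_parts <= len(a.part_names) <= max_parts]

catalog = [
    Asset("chair-01", ["seat", "back", "legs"]),
    Asset("rock-07", ["rock"]),                  # flattened: only one mesh
    Asset("car-03", ["body", "wheels"]),
]
usable = keep_part_annotated(catalog)
```

Here `usable` holds `chair-01` and `car-03`; `rock-07` is dropped because it has no part-level decomposition to learn from.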

The implications of PartCrafter are vast. For industries ranging from gaming and animation to architecture and product design, the ability to generate structured 3D assets from a single image opens up new avenues for creativity and efficiency. Imagine animators tweaking individual components of a character model without rebuilding the entire structure, or architects editing specific elements of a virtual building with ease. PartCrafter’s disentangled latent tokens and local-global attention mechanism make such tasks not just possible but intuitive. Moreover, by eliminating the need for brittle segmentation-then-reconstruction pipelines, it offers a more robust and scalable solution for 3D understanding and synthesis.

Of course, no innovation is without its challenges. PartCrafter’s training dataset, while groundbreaking, is relatively small at 50,000 part-level data points compared to the millions used for typical 3D object generation models. This limitation suggests room for growth, and future work could focus on scaling up the training process with larger, higher-quality datasets to further enhance the model’s capabilities. Nevertheless, the current achievements of PartCrafter stand as a testament to the power of integrating structural understanding into the generative process. With the promise of releasing both the code and training data, the team invites the broader research and development community to build upon this foundation, potentially revolutionizing how we interact with and create in the 3D space.

In a landscape where 3D modeling has often been constrained by rigid workflows and computational inefficiencies, PartCrafter emerges as a beacon of innovation. It not only validates the feasibility of part-level generation but also redefines what’s possible in 3D design. As we look to the future, the question isn’t whether structured 3D generation will transform industries—it’s how quickly we can harness tools like PartCrafter to make that transformation a reality. What potential applications do you see for such a technology in your own field or interests? I’d love to hear your thoughts!