Advancing 3D Content Creation through a Generation-Reconstruction Cycle

- Cycle3D combines 2D diffusion-based generation with 3D reconstruction for superior image-to-3D conversion.

- The framework enhances the quality and consistency of 3D content.

- Extensive experiments show Cycle3D outperforms state-of-the-art methods.

Creating high-quality, consistent 3D content from a single-view image has long been a challenging task with applications in robotics, gaming, and architecture. Traditionally, this process required significant manual effort and expertise in complex computer graphics software. Recent advances have produced automated methods that aim to simplify it. Among these, Cycle3D is a framework that combines 2D diffusion-based generation with 3D reconstruction to improve the quality and consistency of image-to-3D results.

The Cycle3D Framework

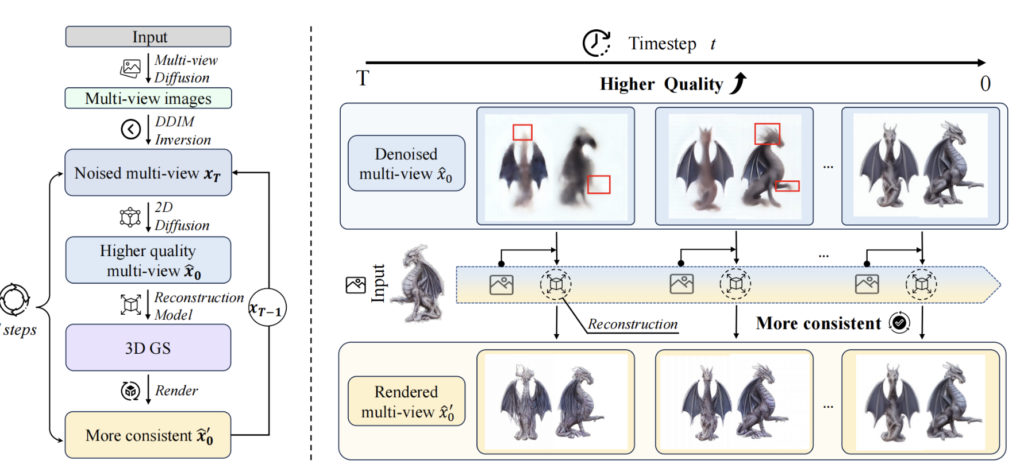

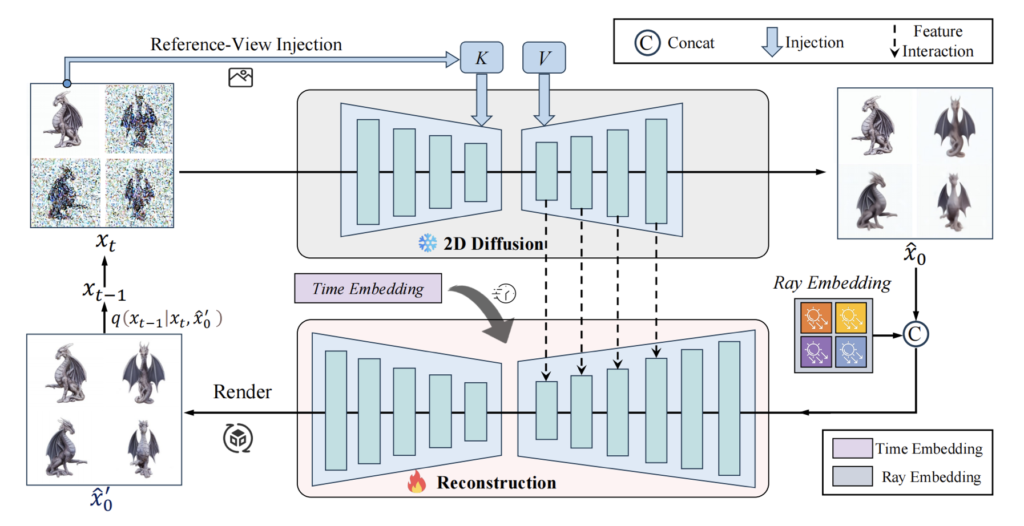

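Cycle3D addresses a limitation of two-stage image-to-3D pipelines, which first generate multi-view images with a diffusion model and then pass them to a feed-forward model for 3D reconstruction. The generated multi-view images are often low-quality and inconsistent with one another, which degrades the final reconstruction. Instead of running the two stages once in sequence, Cycle3D integrates a 2D diffusion-based generation module and a feed-forward 3D reconstruction module in a cycle that repeats throughout the multi-step diffusion process. This iterative approach is designed to yield higher quality and consistency in the final 3D reconstruction.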

Generation and Reconstruction Cycle

The core of Cycle3D lies in its ability to cyclically use both 2D and 3D models:

- 2D Diffusion Model: During the denoising process, the 2D model generates high-quality multi-view images. It can also steer the generated content and inject reference-view information into unseen views, which improves diversity and texture consistency.

- 3D Reconstruction Model: This model works in tandem with the 2D model, correcting 3D inconsistencies and improving reconstruction quality as the diffusion process evolves.
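The interplay between the two models can be illustrated with a toy loop. This is a minimal sketch under strong assumptions, not the Cycle3D implementation: each "view" is a single number rather than an image, `denoise_views` is a hypothetical stand-in for the 2D diffusion model, `reconstruct_and_render` is a hypothetical stand-in for feed-forward 3D reconstruction followed by re-rendering, and the noise schedule is invented for illustration. It only shows the control flow in which the reconstruction step corrects the denoiser's per-view estimates before the next diffusion step.

```python
import random


def denoise_views(noisy_views, reference, rng):
    """Toy stand-in for the 2D diffusion model (hypothetical).

    Each view is pulled toward the reference value, but with an
    independent per-view error that mimics multi-view inconsistency.
    """
    return [0.5 * v + 0.5 * reference + rng.gauss(0.0, 0.1) for v in noisy_views]


def reconstruct_and_render(clean_estimates):
    """Toy stand-in for 3D reconstruction plus re-rendering (hypothetical).

    All views are fused into one shared value and rendered back out,
    so the rendered views agree with each other by construction.
    """
    shared = sum(clean_estimates) / len(clean_estimates)
    return [shared] * len(clean_estimates)


def spread(views):
    """Inconsistency measure: gap between the most different views."""
    return max(views) - min(views)


def run_cycle(reference, num_views=4, num_steps=10, seed=0, use_reconstruction=True):
    rng = random.Random(seed)
    # Start every view from pure noise.
    views = [rng.gauss(0.0, 1.0) for _ in range(num_views)]
    for step in range(num_steps):
        # Invented linear schedule: noise shrinks to zero at the last step.
        noise_level = 1.0 - (step + 1) / num_steps
        # Generation: per-view clean estimates from the 2D model.
        estimates = denoise_views(views, reference, rng)
        # Reconstruction: replace them with consistent re-rendered views.
        if use_reconstruction:
            estimates = reconstruct_and_render(estimates)
        # Re-noise the (corrected) estimates for the next denoising step.
        views = [e + noise_level * rng.gauss(0.0, 0.1) for e in estimates]
    return views


cycled = run_cycle(reference=1.0, use_reconstruction=True)
plain = run_cycle(reference=1.0, use_reconstruction=False)
print("spread with reconstruction:   ", spread(cycled))
print("spread without reconstruction:", spread(plain))
```

In this toy setting the views produced with the reconstruction step agree exactly, because the final step adds no noise to the re-rendered views, while the views produced without it keep the denoiser's independent per-view errors. A real system would replace both stand-ins with learned networks operating on images and a 3D representation.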

Experimental Results

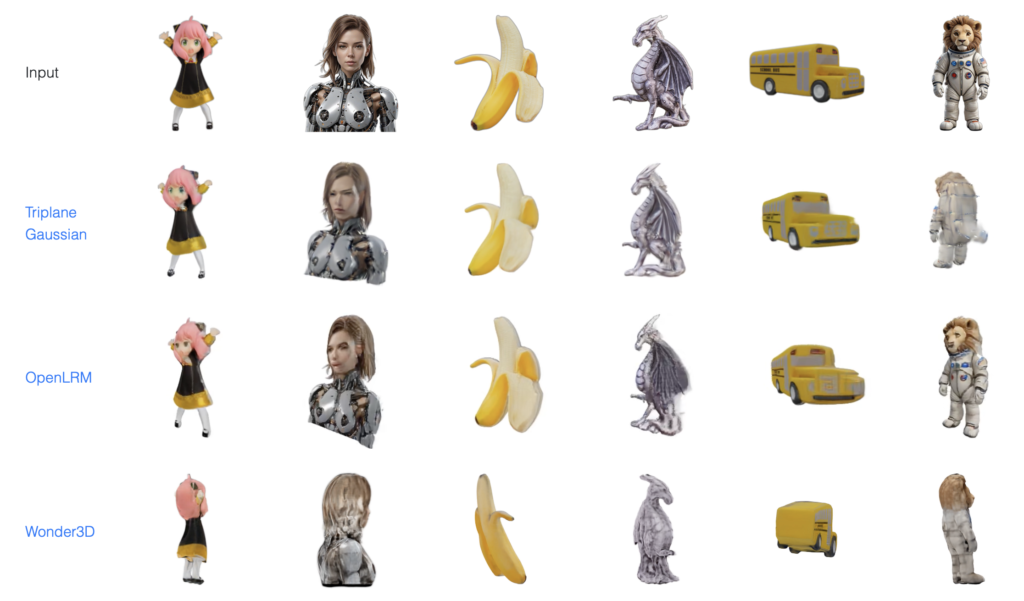

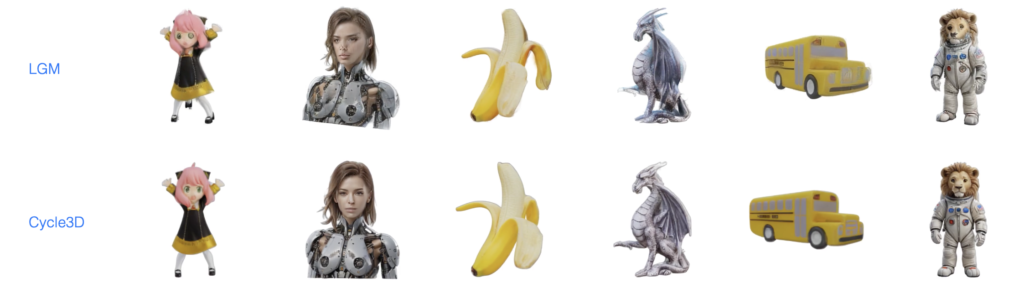

In the reported experiments, Cycle3D outperforms existing state-of-the-art methods in both generation quality and consistency. Because generation and reconstruction are interleaved rather than run once in sequence, the resulting 3D assets are less prone to common problems such as texture inconsistency and low resolution.

Applications and Impact

The ability to generate high-quality and diverse 3D assets automatically has broad implications across various industries:

- Robotics: Improved 3D models can enhance robotic vision and interaction capabilities.

- Gaming: High-quality 3D content can lead to more immersive and visually stunning game environments.

- Architecture: Architects can benefit from rapid prototyping and visualization of designs with consistent and accurate 3D models.

Cycle3D advances 3D computer vision by merging 2D diffusion-based generation with 3D reconstruction in a cyclical process. This approach addresses the limitations of earlier two-stage models and offers a practical way to generate high-quality, consistent 3D content from single-view images. As demand for automated and efficient 3D content creation grows, the generation-reconstruction cycle provides a strong reference point for further work and applications in these fields.