How a Chinese Startup Outperformed DALL-E 3 and Stable Diffusion—and Why It’s Just the Beginning

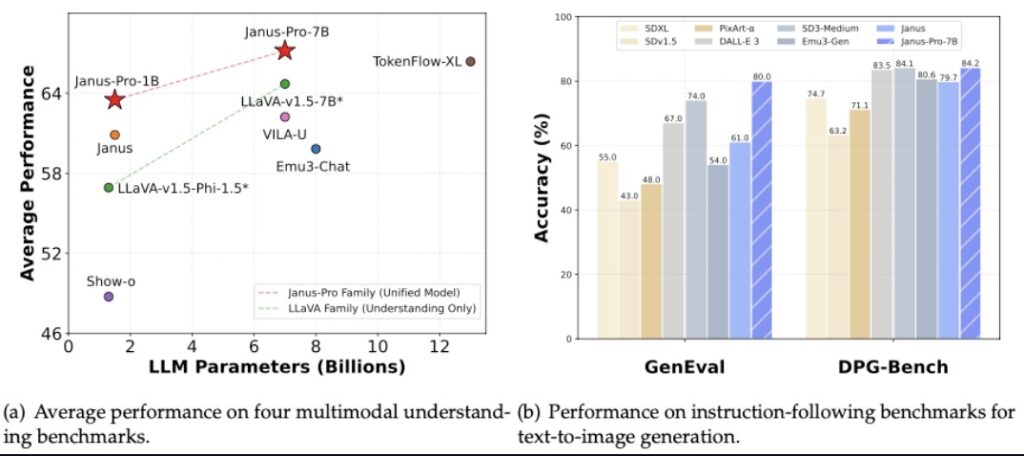

- Benchmark Dominance: Janus-Pro-7B surpasses OpenAI’s DALL-E 3 and Stability AI’s Stable Diffusion on the GenEval and DPG-Bench image-generation benchmarks, redefining what open-source AI can achieve.

- Architectural Innovation: A novel framework decouples visual encoding to resolve conflicts between understanding and generation, offering unmatched flexibility.

- Market Shockwaves: GPU demand surges, China’s AI prowess accelerates, and mainstream media misinformation clouds the narrative, while developers reap the rewards of open-source innovation.

DeepSeek, the Chinese AI startup that recently stunned the tech world with its R1 model and DeepSeek-V3-powered assistant, has done it again. Enter Janus-Pro-7B, a multimodal AI model that not only generates images but beats industry giants like OpenAI’s DALL-E 3 and Stability AI’s Stable Diffusion on key benchmarks. This isn’t just another incremental upgrade—it’s a seismic shift in how AI handles both understanding and creating visual content.

Why Janus-Pro-7B Matters

At its core, Janus-Pro-7B is built on a novel autoregressive framework that unifies multimodal tasks while sidestepping the pitfalls of earlier models. Traditional systems often force a single visual encoder to juggle understanding (e.g., analyzing an image) and generation (e.g., creating one), leading to inefficiencies. Janus-Pro solves this by decoupling visual encoding into separate pathways—one for comprehension (using SigLIP-L, a vision encoder supporting 384×384 resolution) and another for generation (leveraging a specialized tokenizer with a 16x downsample rate). The result? A single transformer architecture that balances flexibility, speed, and precision.
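To make the decoupling concrete, here is a minimal sketch of the idea rather than DeepSeek’s actual code. The helper names (`select_pathway`, `generation_token_count`) are hypothetical, and the 384×384 generation resolution is an assumption, since the article states that figure only for the understanding encoder. Under that assumption, a 16x downsample rate turns an image into a 24×24 grid, or 576 discrete tokens.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VisualPathway:
    """Describes one of the two visual pathways (illustrative only)."""
    name: str
    purpose: str


# Two separate pathways feed one shared transformer, as the article describes.
UNDERSTANDING = VisualPathway("SigLIP-L encoder", "image features for comprehension")
GENERATION = VisualPathway("discrete image tokenizer", "image tokens for generation")


def select_pathway(task: str) -> VisualPathway:
    """Route a task to its visual pathway (hypothetical helper, not a real API)."""
    if task == "understand":
        return UNDERSTANDING
    if task == "generate":
        return GENERATION
    raise ValueError(f"unknown task: {task!r}")


def generation_token_count(height: int, width: int, downsample: int = 16) -> int:
    """Count the discrete tokens for an image at the given downsample rate."""
    if height % downsample or width % downsample:
        raise ValueError("image sides must be multiples of the downsample rate")
    return (height // downsample) * (width // downsample)


if __name__ == "__main__":
    print(select_pathway("generate").name)
    # Assumed 384x384 generation resolution: 24 * 24 = 576 tokens
    print(generation_token_count(384, 384))
```

The point of the sketch is that neither pathway has to compromise for the other: the comprehension side can use features tuned for semantics, while the generation side works with a compact token grid that an autoregressive transformer can predict one token at a time.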

Benchmark results tell the story: Janus-Pro outperforms rivals on GenEval and DPG-Bench, benchmarks that evaluate image quality, stability, and alignment with text prompts. DeepSeek credits this leap to 72 million high-quality synthetic images added to its training data, blended with real-world datasets. The 7-billion-parameter model also boasts faster training speeds and richer detail retention, cementing its status as a next-gen contender.

Broader Implications: GPU Demand, Open-Source Surges, and Media Noise

- GPU Demand Will Not Slow Down

Janus-Pro’s release underscores a harsh reality: AI’s hunger for compute power is insatiable. As models grow more complex, especially multimodal ones, the race for GPUs intensifies. Nvidia and Oracle’s recent stock dips after DeepSeek-V3’s success hint at market jitters, but long-term demand remains bulletproof.

- Open Source (and China) Are Closing the Gap

OpenAI isn’t “done for,” but the playing field is leveling. DeepSeek’s open-sourcing of Janus-Pro and R1 gives developers free access to cutting-edge tools, democratizing innovation. Meanwhile, China’s AI ecosystem, once seen as lagging, is proving it can outpace Western rivals. The DeepSeek-V3 assistant topping Apple’s U.S. App Store is a symbolic victory in this quiet tech Cold War.

- Misinformation Clouds the Conversation

Mainstream media narratives around AI are increasingly polarized. Is Janus-Pro’s rise a threat? A fluke? A PR stunt? The truth lies in the benchmarks and technical reports, but sensationalism risks muddying the waters. DeepSeek’s transparency with its methodology (e.g., synthetic data balancing) offers a counterpoint—if observers bother to look.

The Open-Source Gift That Keeps on Giving

By open-sourcing Janus-Pro and R1, DeepSeek isn’t just flexing technical muscle—it’s fueling a developer revolution. Startups and researchers now have free access to models rivaling billion-dollar proprietary systems. This accelerates global AI progress but also pressures giants like OpenAI to innovate faster or risk irrelevance.

Yet challenges remain. Janus-Pro’s reliance on synthetic data raises questions about bias and authenticity, while its decoupled architecture demands careful fine-tuning. Still, its simplicity and effectiveness suggest a blueprint for future multimodal systems.

The Future Is Multimodal—and Unpredictable

DeepSeek’s Janus-Pro-7B isn’t just a model; it’s a manifesto. It proves that open-source AI can outgun closed systems, that China’s tech sector is a force to watch, and that the next breakthrough might come from anywhere. As GPU markets wobble and media narratives clash, one truth emerges: the AI race is far from over—it’s just getting interesting.

For developers, the message is clear: experiment, iterate, and embrace the chaos. For the rest of us? Buckle up.