Unleashing Creativity in Computer Graphics with Intuitive, Precision-Driven Hair Generation

- Precision Meets User-Friendliness: Text- and image-based methods for generating hair strands often fall short in control and detail, whereas sketch-based input offers a flexible, intuitive alternative that lets users specify exact hairstyles with simple strokes.

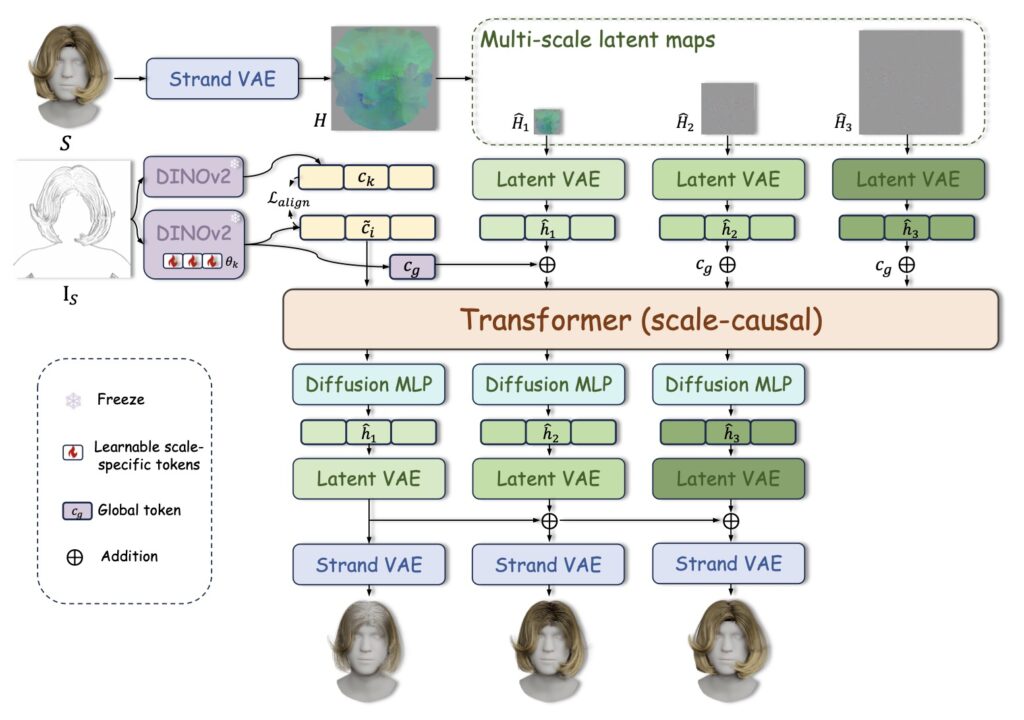

- Innovative Framework Breakthroughs: StrandDesigner introduces a learnable upsampling strategy and multi-scale adaptive conditioning using transformers and diffusion heads, tackling complex strand interactions and ensuring consistent, realistic results across various sketch patterns.

- Superior Performance and Future Horizons: Backed by experiments on benchmark datasets, this approach outperforms existing models in realism and precision, paving the way for extensions like multi-view sketches and dynamic hair motion in virtual reality and beyond.

In the ever-evolving world of computer graphics and virtual reality, creating lifelike hair has long been a holy grail for digital artists, game developers, and VR creators. Imagine designing a video game character’s flowing locks or a virtual avatar’s intricate curls with the same ease as doodling on a napkin. That’s the promise of realistic hair strand generation, a technology that can transform flat, uninspired digital models into vibrant, believable humans. High-quality strands don’t just add visual flair—they elevate immersion in everything from blockbuster movies to interactive simulations. Yet, for years, the tools available have been clunky, relying on vague text prompts or cumbersome image references that often lead to unpredictable results. Enter StrandDesigner, a groundbreaking framework that’s flipping the script by putting the power of sketches directly into users’ hands.

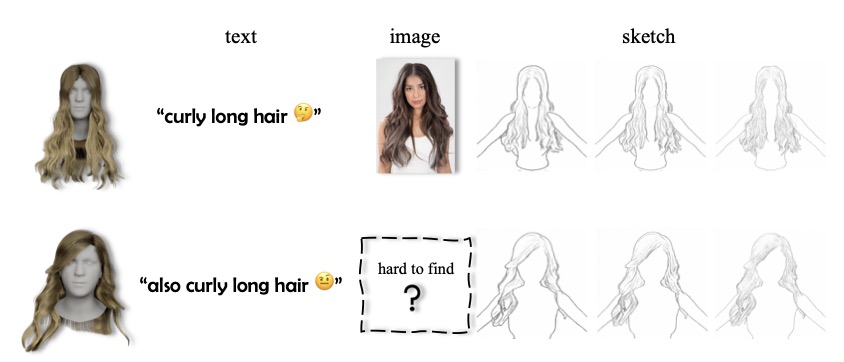

The challenges in hair generation stem from the inherent complexity of hair itself: strands twist, curl, and interact in ways that defy simple descriptions. Recent advancements, like diffusion models, have made strides by generating hairstyles from text prompts. For instance, models such as HAAR use latent diffusion to create guiding strand features based on descriptions, then upsample them with techniques like nearest-neighbor or bilinear interpolation. These methods are impressive, showing how generative AI can breathe life into digital content. However, they come with significant drawbacks. Text-based conditioning creates a “one-to-many” mapping: a prompt like “curly long hair” could yield countless variations in curl tightness, length, and style, making precise control nearly impossible. Ambiguities in language further complicate things, as words often fail to capture the nuanced details needed for high-fidelity strands.
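To make the fixed upsampling step concrete, here is a minimal sketch of nearest-neighbor and bilinear interpolation on a small 2D grid of scalar values. It is an illustration only, not HAAR's code: the function names, the scalar grid standing in for a map of guiding strand features, and the corner-aligned sampling convention are all assumptions.

```python
def upsample_nearest(grid, factor):
    """Nearest-neighbor upsampling: repeat every cell `factor` times per axis."""
    out = []
    for row in grid:
        wide = [value for value in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def upsample_bilinear(grid, out_h, out_w):
    """Bilinear upsampling with corner alignment (an assumed convention):
    the four corners of the output coincide with the corners of the input."""
    in_h, in_w = len(grid), len(grid[0])
    out = []
    for i in range(out_h):
        # Map the output row index to a fractional input row.
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        ty = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            tx = x - x0
            # Blend horizontally on the two bracketing rows, then vertically.
            top = grid[y0][x0] * (1 - tx) + grid[y0][x1] * tx
            bottom = grid[y1][x0] * (1 - tx) + grid[y1][x1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        out.append(row)
    return out


# Hypothetical 2x2 feature grid (one scalar per guide strand).
features = [[0.0, 2.0],
            [4.0, 6.0]]
blocky = upsample_nearest(features, 2)      # 4x4, piecewise constant
smooth = upsample_bilinear(features, 3, 3)  # 3x3, linearly blended
```

Both rules are fixed formulas: nearest-neighbor copies values, and bilinear blends them with weights that depend only on position. Neither is trained on hair data, which is the contrast with a learnable upsampling strategy.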

Image-based approaches offer more detail but sacrifice user-friendliness. Capturing real hairstyles through photographs is time-consuming, requiring specific angles, lighting, and poses that may not translate well to 3D models. Building diverse datasets for training these models is resource-heavy, and static images can’t fully convey hair’s dynamic nature. This is where sketches shine as a compelling alternative. With binarized strokes—simple black-and-white lines—users can intuitively draw the desired shape, flow, and contours of a hairstyle. Sketches provide geometric precision without the hassle of real-world photography, allowing for easy modifications to experiment with new designs. They’re flexible, accessible, and bridge the gap between artistic vision and technical execution, making them ideal for customization in fields like digital content creation.
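As a rough illustration of what "binarized strokes" means in practice, the sketch below thresholds a grayscale drawing into a stroke mask. The 0 to 255 intensity range, the threshold of 128, the dark-stroke-on-light-paper convention, and the function names are assumptions made for this example; the source does not specify how its sketches are binarized.

```python
def binarize(gray, threshold=128):
    """Turn a grayscale image (rows of 0-255 ints) into a binary stroke mask.
    Assumes dark strokes on a light background: 1 = stroke, 0 = background."""
    return [[1 if pixel < threshold else 0 for pixel in row] for row in gray]


def stroke_coverage(mask):
    """Fraction of pixels that belong to a stroke."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


# Hypothetical 3x4 scan of a pencil sketch: one dark diagonal stroke.
scan = [
    [250,  30, 240, 245],
    [248, 235,  25, 250],
    [252, 244, 238,  40],
]
mask = binarize(scan)
coverage = stroke_coverage(mask)
```

Because the mask holds only stroke geometry, editing a hairstyle amounts to flipping pixels by drawing or erasing lines, with no lighting or pose to recapture.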

StrandDesigner is the first framework to harness this sketch-based approach for generating realistic 3D hair strands, addressing the limitations of its predecessors while balancing ease of use with detailed control. At its core, the system takes binarized sketch images as input, enabling users to specify hairstyles with fine-grained accuracy. This isn’t just about drawing lines; it’s about creating a system that understands and extrapolates from those strokes to produce physically plausible hair. The framework tackles key hurdles, such as modeling intricate strand interactions and handling diverse sketch patterns, through two major innovations. First, a learnable strand upsampling strategy encodes 3D strands into multi-scale latent spaces, allowing the model to build from coarse outlines to detailed, high-resolution hair. Second, a multi-scale adaptive conditioning mechanism employs a transformer architecture equipped with diffusion heads, ensuring consistency across different levels of granularity—from broad shapes to individual strand behaviors.
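The coarse-to-fine idea can be pictured with a simple image pyramid. The sketch below is a generic illustration of multi-scale conditioning inputs and is not StrandDesigner's architecture: the 2x2 average pooling, the function names, and the choice to build the pyramid from the sketch itself are assumptions, and the learned components (latent encoders, transformer, diffusion heads) are omitted.

```python
def avg_pool2(image):
    """Halve resolution by averaging non-overlapping 2x2 blocks.
    A trailing odd row or column is dropped."""
    height, width = len(image), len(image[0])
    return [
        [
            (image[y][x] + image[y][x + 1] + image[y + 1][x] + image[y + 1][x + 1]) / 4.0
            for x in range(0, width - 1, 2)
        ]
        for y in range(0, height - 1, 2)
    ]


def build_pyramid(image, levels):
    """Return up to `levels` versions of `image`, ordered coarse to fine."""
    pyramid = [image]
    for _ in range(levels - 1):
        finest = pyramid[-1]
        if len(finest) < 2 or len(finest[0]) < 2:
            break  # cannot pool any further
        pyramid.append(avg_pool2(finest))
    return pyramid[::-1]


# Hypothetical 4x4 binary sketch with a single diagonal stroke.
sketch = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]
pyramid = build_pyramid(sketch, levels=3)
sizes = [(len(level), len(level[0])) for level in pyramid]
```

In a coarse-to-fine generator, the coarsest level would guide the overall silhouette and each finer level would add detail, which is the intuition behind keeping the output consistent across levels of granularity.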

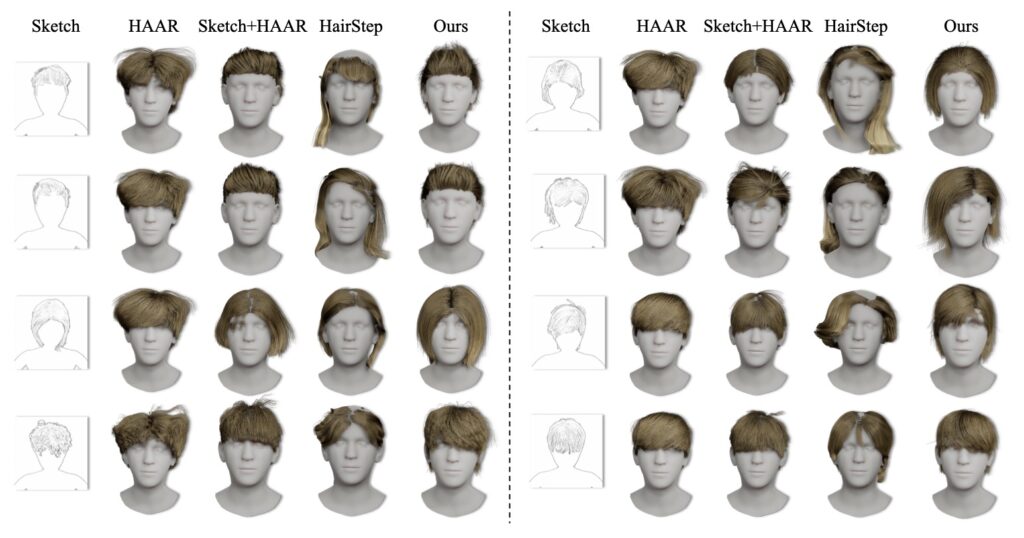

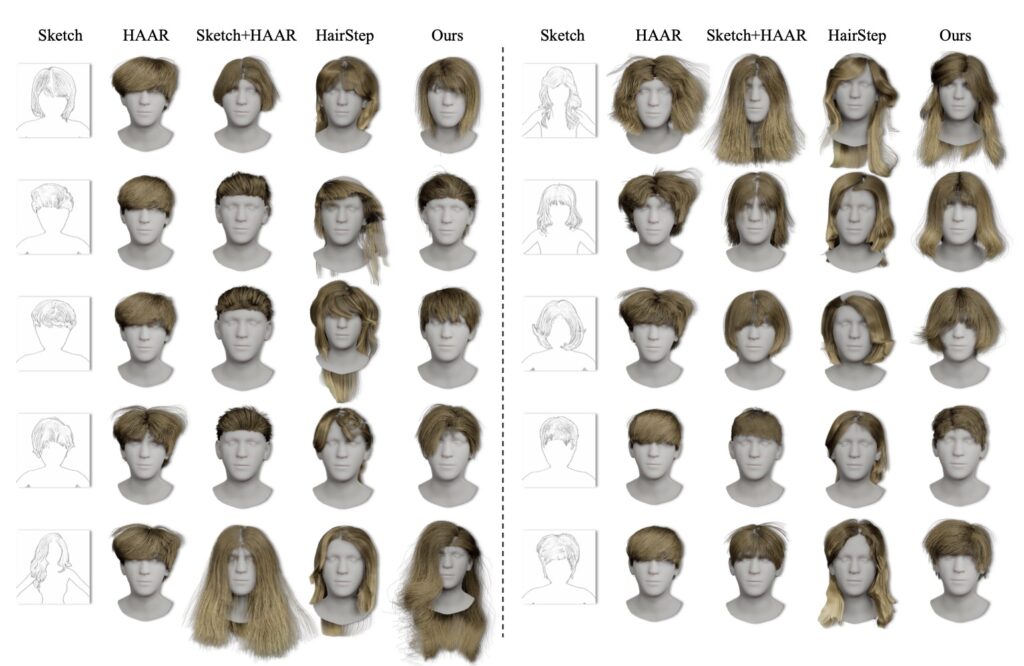

What sets StrandDesigner apart is its ability to generate strands that not only match the sketch’s contours but also maintain realism, like natural curl patterns and physical interactions. Experiments on several benchmark datasets demonstrate its edge over existing methods. Compared to text-guided HAAR and image-based HairStep, StrandDesigner scores better on quantitative metrics in two respects: geometric fidelity (the generated hair aligns more closely with the intended design) and semantic alignment (the output respects the sketch’s overall intent). Qualitative results are equally compelling: side-by-side comparisons reveal strands that look more lifelike, with better handling of variations like straight versus wavy hair or short crops versus long tresses. These improvements aren’t just academic; they translate to practical gains in industries where visual authenticity is key, such as film production, where directors can sketch custom hairstyles for characters, or VR training simulations, where realistic avatars enhance user engagement.

Looking beyond the lab, StrandDesigner’s implications ripple into broader applications. In virtual reality, it could enable personalized avatars that reflect users’ real-world appearances or wild creative visions, fostering more immersive social experiences. For game developers, it means faster prototyping of character designs without relying on expensive 3D modeling software. Even in fields like fashion and cosmetology, digital sketches could preview hairstyles before a single snip. Of course, no innovation is without room for growth. Future extensions might incorporate multi-view sketch inputs, allowing users to draw from different angles for even more accurate 3D representations, or integrate dynamic strand motion synthesis to simulate hair movement in wind or during actions. As AI continues to democratize creative tools, StrandDesigner stands as a beacon of what’s possible when technology meets human intuition—turning simple sketches into strands of digital magic that feel astonishingly real.