Claude-3.7 Dominates Super Mario Bros, But What Does This Mean for the Future of AI Testing?

- Claude-3.7 Sonnet shines in real-time challenges such as Super Mario Bros, outperforming rivals like GPT-4o and Gemini-1.5-Pro through strategic planning and adaptability.

- Games aren’t just fun—they’re AI evaluation goldmines, offering dynamic environments to test memory, reflexes, and problem-solving under pressure.

- The LMGames initiative invites developers to experiment with open-source agents across classics like Tetris and 2048, bridging the gap between AI research and play.

When Anthropic revealed that its Claude-3.7 Sonnet model had made real progress in Pokémon Red, battling gym leaders and earning badges, the AI community raised an eyebrow. Pokémon? Really? But beneath the nostalgia lies a serious question: can mastering retro games teach us how to build smarter, more adaptable AI?

The Pokémon Paradigm: From Toy Benchmark to Tactical Triumph

Anthropic’s experiment equipped Claude-3.7 with pixel inputs, button controls, and a basic “memory” system, enabling it to navigate Pallet Town and beyond. An earlier model, Claude-3.0 Sonnet, famously got stuck in the starting house; the upgraded model executed 35,000 actions and defeated three gym leaders. Its “extended thinking” capability, similar to OpenAI’s o3-mini, lets Claude spend extra compute on complex tasks such as strategizing against Lt. Surge, Pokémon Red’s third gym leader. Skeptics may dismiss Pokémon as a “toy benchmark,” but it is part of a growing trend: Street Fighter, Pictionary, and now Super Mario Bros are becoming AI testing battlegrounds.
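The loop Anthropic describes (look at the screen, decide, press a button, remember what happened) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Anthropic's harness: `AgentMemory`, `run_episode`, and the callables passed into it are hypothetical names, and `choose_button` stands in for whatever model call a real agent would make.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical sketch; not Anthropic's actual harness.
# Game Boy buttons; the real action format is not described in the source.
BUTTONS = ("up", "down", "left", "right", "a", "b", "start", "select")


@dataclass
class AgentMemory:
    """Hypothetical rolling notes the agent carries between steps."""
    max_notes: int = 5
    notes: List[str] = field(default_factory=list)

    def add(self, note: str) -> None:
        self.notes.append(note)
        # Keep only the most recent notes so the context stays bounded.
        if len(self.notes) > self.max_notes:
            self.notes = self.notes[-self.max_notes:]


def run_episode(
    get_frame: Callable[[], bytes],
    choose_button: Callable[[bytes, List[str]], str],
    press: Callable[[str], None],
    steps: int,
    memory: AgentMemory,
) -> int:
    """Observe pixels, ask the policy for a button, press it, record a note.

    Returns the number of valid button presses performed.
    """
    pressed = 0
    for step in range(steps):
        frame = get_frame()                          # raw screen pixels
        button = choose_button(frame, memory.notes)  # stand-in for a model call
        if button not in BUTTONS:
            # Invalid actions are logged but never sent to the game.
            memory.add(f"step {step}: ignored invalid action {button!r}")
            continue
        press(button)
        pressed += 1
        memory.add(f"step {step}: pressed {button}")
    return pressed
```

Keeping memory as a short rolling list is one simple design choice: it bounds how much history is fed back to the model on each step, at the cost of forgetting older events.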

Enter LMGames: Where AI Meets Real-Time Chaos

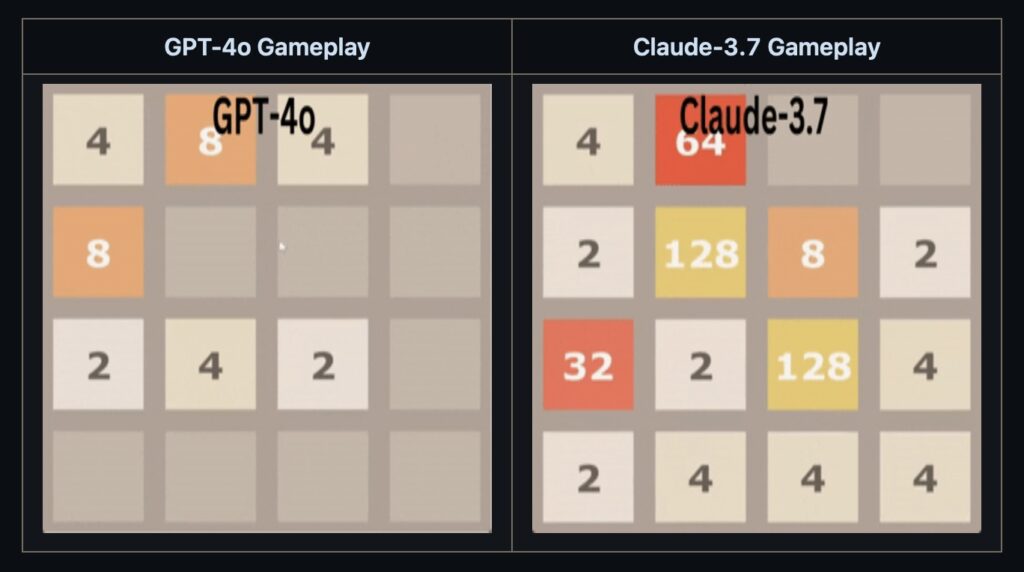

But Pokémon’s turn-based gameplay is child’s play compared to the split-second decisions required in Super Mario Bros. That’s where the LMGames team enters the fray. By building gaming agents for real-time platformers and puzzles, they’ve uncovered striking differences in model performance:

- Claude-3.7 Sonnet excels while relying on only “simple heuristics,” mastering jumps, enemy evasion, and power-up timing.

- Claude-3.5 struggles with complex maneuvers, highlighting the leap between versions.

- GPT-4o and Gemini-1.5-Pro lag behind, suggesting current limits in real-time spatial reasoning.

The team’s open-source repo now supports Super Mario Bros, 2048, and Tetris, with more games slated for release. Developers can customize agents, tweak prompts, and test hypotheses—a playground for probing AI’s limits.
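As an illustration of what “tweaking prompts” might look like, the sketch below turns a list of simple heuristics into a prompt and maps the model's free-text reply onto a fixed action set. Everything here is an assumption for illustration: the action names, the heuristics, `build_prompt`, and `parse_action` are hypothetical and are not taken from the LMGames repository.

```python
import re
from typing import Sequence

# Hypothetical action vocabulary for a Mario-style platformer agent.
ACTIONS = ("move_right", "move_left", "jump", "run_jump", "wait")

# Hypothetical "simple heuristics" a developer might tweak; not LMGames' prompt.
DEFAULT_HEURISTICS = (
    "If an enemy is directly ahead, jump.",
    "If there is a gap ahead, use run_jump.",
    "Otherwise, keep moving right.",
)


def build_prompt(state: str, heuristics: Sequence[str] = DEFAULT_HEURISTICS) -> str:
    """Assemble a prompt from a state description and editable rules of thumb."""
    rules = "\n".join(f"- {h}" for h in heuristics)
    return (
        "You control Mario.\n"
        f"Rules of thumb:\n{rules}\n"
        f"Current state: {state}\n"
        f"Reply with exactly one of: {', '.join(ACTIONS)}."
    )


def parse_action(reply: str, fallback: str = "move_right") -> str:
    """Return the first known action word in a free-text reply, else the fallback."""
    for token in re.findall(r"[a-z_]+", reply.lower()):
        if token in ACTIONS:
            return token
    return fallback
```

Falling back to a safe default when a reply cannot be parsed matters in a real-time game: the character keeps moving instead of stalling while the agent waits for a usable answer.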

Why Games Matter: Beyond High Scores and Nostalgia

Games aren’t just about leaderboards. They’re microcosms of real-world challenges:

- Dynamic environments demand adaptability (e.g., Tetris’s falling blocks).

- Time pressure tests decision-making speed (e.g., avoiding Mario’s Hammer Bros).

- Long-term planning is essential (e.g., 2048’s tile-merging strategy).
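The tile-merging rule behind 2048 shows why planning matters. Under the standard rules, tiles slide in the chosen direction, equal neighbours merge, and a tile created by a merge cannot merge again in the same move. A minimal Python sketch of one row sliding left (the function name `merge_row_left` is hypothetical):

```python
from typing import List, Tuple


def merge_row_left(row: List[int]) -> Tuple[List[int], int]:
    """Slide one 2048 row to the left and merge equal neighbours once.

    0 marks an empty cell. Returns the new row and the points gained.
    """
    tiles = [t for t in row if t != 0]  # slide: drop empty cells
    merged: List[int] = []
    score = 0
    i = 0
    while i < len(tiles):
        if i + 1 < len(tiles) and tiles[i] == tiles[i + 1]:
            # Each tile merges at most once per move.
            merged.append(tiles[i] * 2)
            score += tiles[i] * 2
            i += 2
        else:
            merged.append(tiles[i])
            i += 1
    merged.extend([0] * (len(row) - len(merged)))  # pad back to the row width
    return merged, score
```

For example, `merge_row_left([4, 4, 8, 0])` returns `([8, 8, 0, 0], 8)` rather than producing a 16 tile, so building large tiles takes a sequence of moves set up in advance.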

As LMGames’ mission states, these environments offer “new perspectives for AI evaluations,” forcing models to balance instinct and foresight. Crucially, they also redefine human roles: developers design challenges, while AI agents reveal unexpected behaviors—like Claude-3.7’s knack for pixel-perfect jumps.

The Future of Play: AI’s Next Level

Anthropic’s Pokémon feat and LMGames’ Mario experiments mark a turning point. As AI models grow more capable, games provide measurable, engaging benchmarks free from real-world risks. Yet questions linger: How much compute is too much for a high score? Can agents generalize skills across games?

One thing’s clear: the fusion of AI and gaming is no game. It’s a proving ground for the adaptive, creative systems of tomorrow—and you’re invited to play.