A new turnkey solution promises to bring high-performance AI inference to standard air-cooled data centers, eliminating the need for exotic infrastructure retrofits.

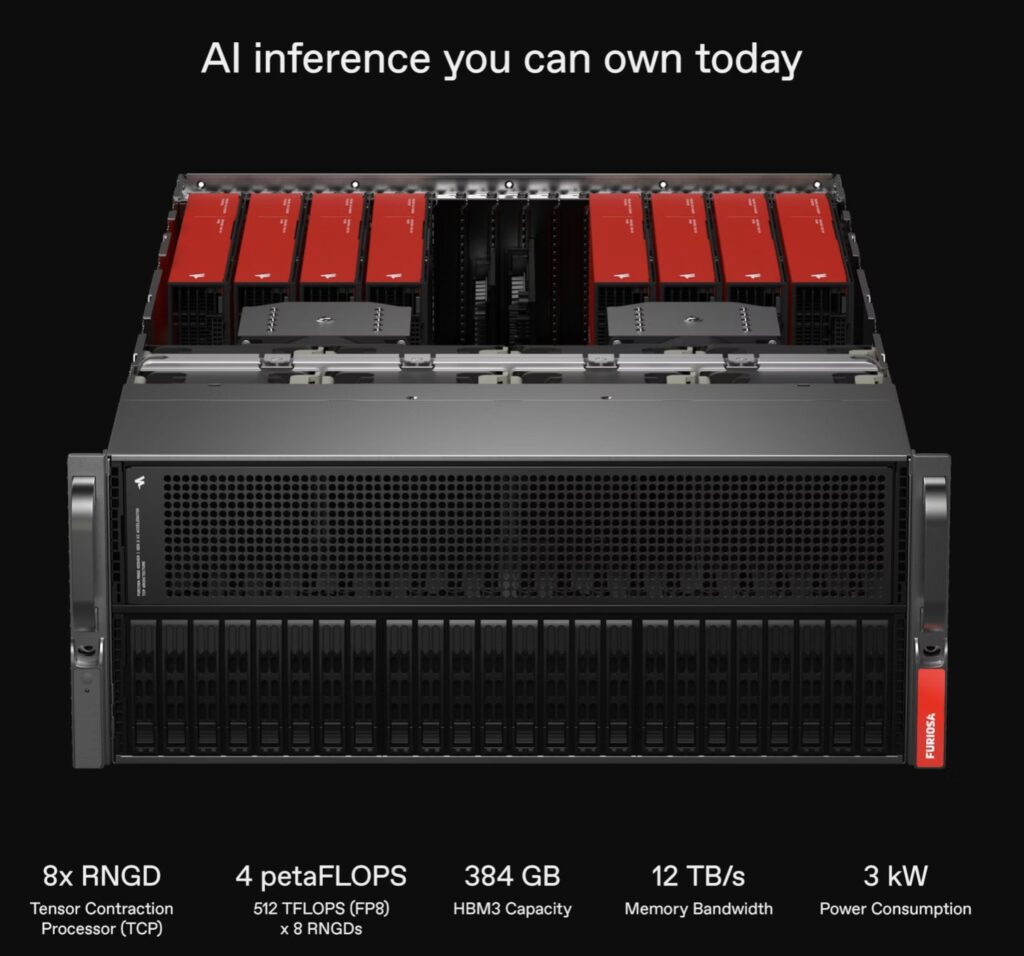

- Seamless Integration: The NXT RNGD Server operates at just 3 kW and uses standard air cooling, allowing enterprises to deploy “AI factories” within existing facilities without expensive liquid-cooling upgrades.

- Massive Performance: Packing up to 8 RNGD accelerators and 384 GB of HBM3 memory, the system delivers 4 petaFLOPS of FP8 compute, and the RNGD accelerator has already been adopted by major players like LG AI Research.

- Instant Scalability: Designed to move from unboxing to serving traffic immediately, the server ships with preinstalled SDKs and standard PCIe interconnects, significantly lowering the Total Cost of Ownership (TCO).

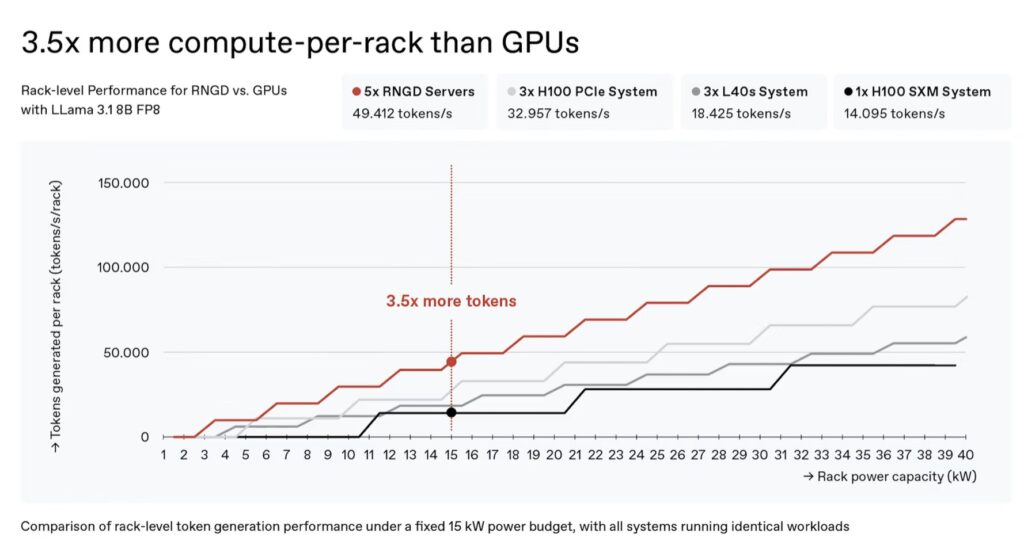

The global appetite for artificial intelligence is insatiable, with data center demand projected to hit 60 GW in 2024 and triple by the end of the decade. Yet, the industry faces a physical bottleneck: power and heat. While next-generation GPU clusters often demand liquid cooling and upwards of 10 kW per server, over 80% of today’s data centers are air-cooled and capped at 8 kW per rack. Enter FuriosaAI, which has just announced a game-changing solution designed to bridge this gap: the NXT RNGD Server.

This new release is FuriosaAI’s first branded, turnkey solution for AI inference. It promises to liberate enterprises from the need for proprietary fabrics or massive facility overhauls, offering a path to high-performance AI that fits within the power and cooling limits of existing facilities.

Democratizing the AI Factory

The core philosophy behind the NXT RNGD Server is accessibility through efficiency. FuriosaAI has engineered the system to be “plug-and-play,” building the platform around standard PCIe interconnects rather than requiring exotic, high-maintenance infrastructure.

The result is a powerhouse system that runs at a modest 3 kW, using standard redundant 2,000 W Titanium PSUs and air cooling. Because that draw sits well under the 8 kW cap of a typical air-cooled rack, the NXT RNGD Server can be deployed in the vast majority of existing data centers immediately. By avoiding the “energy tax” of liquid cooling and high-voltage retrofits, FuriosaAI is enabling organizations to build out practical, cost-effective AI factories on-premises.
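As a rough illustration of why the 3 kW figure matters, the sketch below counts how many servers of a given draw fit under a rack power cap, using the 8 kW air-cooled limit and the 10 kW next-generation GPU server figure quoted earlier. It assumes 3 kW is the sustained per-server draw and ignores switches, PDUs, and safety headroom, so treat it as back-of-the-envelope arithmetic rather than a capacity plan; `servers_per_rack` is a hypothetical helper.

```python
def servers_per_rack(server_kw: float, rack_cap_kw: float) -> int:
    """Number of whole servers whose combined draw stays within the rack cap.

    Simplifying assumption: per-server draw is constant and nothing else
    in the rack (switches, PDUs, safety headroom) consumes power.
    """
    if server_kw <= 0:
        raise ValueError("server_kw must be positive")
    return int(rack_cap_kw // server_kw)


RACK_CAP_KW = 8.0     # typical air-cooled rack cap cited in the article
NXT_RNGD_KW = 3.0     # NXT RNGD Server power figure
GPU_SERVER_KW = 10.0  # "upwards of 10 kW" next-generation GPU server

for name, kw in [("NXT RNGD Server", NXT_RNGD_KW), ("10 kW GPU server", GPU_SERVER_KW)]:
    n = servers_per_rack(kw, RACK_CAP_KW)
    print(f"{name}: {n} per {RACK_CAP_KW:g} kW rack ({n * kw:g} kW used)")
```

Under these assumptions two NXT RNGD Servers fit in an 8 kW rack, while a single 10 kW server already exceeds it, which is the gap the article describes.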

This is a critical development for businesses with sensitive workloads. For sectors like finance, telecommunications, and biotechnology, where regulatory compliance and privacy are paramount, the NXT RNGD Server allows for complete control over enterprise data, keeping model weights entirely on local infrastructure.

Under the Hood: A Technical Marvel

Despite its modest power footprint, the NXT RNGD Server does not compromise on capability. It is built to handle the most demanding AI workloads available today.

The system is powered by dual AMD EPYC processors and features up to eight RNGD accelerators. This configuration delivers a staggering 4 petaFLOPS of FP8 compute per server. To feed these hungry processors, the system is equipped with 384 GB of aggregate HBM3 memory offering 12 TB/s of combined bandwidth, alongside an additional 1 TB of DDR5 system memory.
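The headline figures are server-wide aggregates. Assuming they are split evenly across a fully populated eight-accelerator configuration, a quick division gives approximate per-card numbers. Because the 4 petaFLOPS figure is rounded, the per-card compute result is approximate as well. `ServerSpec` is a hypothetical helper used only for this arithmetic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerSpec:
    accelerators: int
    hbm_gb: float      # aggregate HBM3 capacity across all accelerators
    hbm_tb_s: float    # aggregate HBM3 bandwidth
    fp8_pflops: float  # aggregate FP8 compute (headline figure, rounded)

    def per_accelerator(self) -> dict:
        """Split the aggregate figures evenly across accelerators (an assumption)."""
        n = self.accelerators
        return {
            "hbm_gb": self.hbm_gb / n,
            "hbm_tb_s": self.hbm_tb_s / n,
            "fp8_tflops": self.fp8_pflops * 1000 / n,
        }


nxt_rngd = ServerSpec(accelerators=8, hbm_gb=384, hbm_tb_s=12, fp8_pflops=4)
print(nxt_rngd.per_accelerator())
# {'hbm_gb': 48.0, 'hbm_tb_s': 1.5, 'fp8_tflops': 500.0}
```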

FuriosaAI has built in flexibility on precision, with support for BF16, FP8, INT8, and INT4 formats. Storage, networking, and security are equally robust:

- Storage: 2 × 960 GB NVMe M.2 for the OS and 2 × 3.84 TB NVMe U.2 for internal data.

- Networking: A 1G management NIC paired with 2 × 25G data NICs.

- Security: Enterprise-grade protection including Secure Boot, TPM, and BMC attestation.
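The choice of precision directly determines how much HBM a model's weights occupy. The minimal sketch below estimates the weight footprint of a 32-billion-parameter model, such as EXAONE 3.5 32B, in each supported format. It assumes 48 GB of HBM per card (384 GB split evenly over eight accelerators) and deliberately ignores KV cache, activations, quantization scales, and runtime overhead, so the card counts are lower bounds. `weight_footprint_gb` is a hypothetical helper.

```python
import math

# Bytes needed to store one weight in each supported format.
BYTES_PER_PARAM = {"BF16": 2.0, "FP8": 1.0, "INT8": 1.0, "INT4": 0.5}


def weight_footprint_gb(num_params: float, fmt: str) -> float:
    """Approximate weight memory in GB (10**9 bytes) for a given format.

    Ignores KV cache, activations, quantization scales, and runtime overhead.
    """
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


PARAMS = 32e9          # a 32-billion-parameter model
HBM_PER_CARD_GB = 48   # 384 GB / 8 accelerators, assuming an even split

for fmt in BYTES_PER_PARAM:
    gb = weight_footprint_gb(PARAMS, fmt)
    cards = math.ceil(gb / HBM_PER_CARD_GB)  # minimum cards for the weights alone
    print(f"{fmt}: {gb:.0f} GB of weights, at least {cards} card(s)")
```

In practice the KV cache grows with context length, so long-context serving needs HBM headroom beyond these weight-only estimates.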

On the software side, the system is designed to serve traffic immediately upon installation. It ships with the Furiosa SDK and Furiosa LLM runtime preinstalled, with native Kubernetes and Helm integration and support for the OpenAI API, allowing developers to deploy and scale AI workloads from day one without wrestling with complex setup procedures.
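Because the runtime advertises OpenAI API support, a client should be able to reach it with a standard chat-completions request. The sketch below only builds such a request with the Python standard library and does not send it. The host, port, and model name are hypothetical placeholders, and it assumes the server exposes the conventional `/v1/chat/completions` path; the Furiosa LLM documentation is the authority on the actual endpoint and model identifiers.

```python
import json
import urllib.request

# Hypothetical values: replace with the address and model your deployment exposes.
BASE_URL = "http://localhost:8000/v1"
MODEL = "example-model"


def build_chat_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("Summarize our Q3 compliance report.")
print(req.get_method(), req.full_url)
# To actually send it against a running server:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Any OpenAI-compatible client library pointed at the same base URL should work equally well; the standard library is used here only to keep the example dependency-free.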

Proven in the Real World: The LG AI Research Case Study

The efficiency and performance claims are already being validated by global heavyweights. In July, LG AI Research announced the adoption of RNGD for inference computing on its massive EXAONE models.

The reported results are significant. Running the EXAONE 3.5 32B model on a single server equipped with four RNGD cards, LG achieved:

- 60 tokens/second with a 4K context window.

- 50 tokens/second with a 32K context window.
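To put these rates in user-facing terms, the sketch below converts them into per-token latency and the time to stream a 500-token answer. It assumes the reported figures are steady single-stream decode rates and excludes prompt processing (prefill); the announcement does not specify either point, and the 500-token answer length is an arbitrary illustration. `generation_time_s` is a hypothetical helper.

```python
def generation_time_s(num_tokens: int, tokens_per_s: float) -> float:
    """Time to generate num_tokens at a steady decode rate (prefill excluded)."""
    return num_tokens / tokens_per_s


# Reported EXAONE 3.5 32B rates on a four-card RNGD server,
# assumed here to be single-stream decode rates.
REPORTED = {"4K context": 60.0, "32K context": 50.0}

for label, rate in REPORTED.items():
    per_token_ms = 1000.0 / rate
    print(f"{label}: {per_token_ms:.1f} ms/token, "
          f"{generation_time_s(500, rate):.1f} s for a 500-token answer")
```

Under that assumption, moving from a 4K to a 32K context raises per-token latency from about 16.7 ms to 20 ms.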

This real-world validation has led to an expanded partnership, with FuriosaAI now working to supply NXT RNGD servers to enterprises across the electronics, finance, and biotech sectors that use LG’s EXAONE models.

The Future of Efficient Inference

As the industry faces a “once-in-a-generation transformation,” the NXT RNGD Server represents a practical path forward. It addresses the TCO crisis by allowing businesses to leverage their current infrastructure, whether on-prem servers or cloud data centers, rather than waiting for new, power-dense facilities to be built.

By combining silicon innovation with smart system design, FuriosaAI is proving that the future of AI infrastructure doesn’t necessarily mean “bigger and hotter.” Instead, it means smarter, cooler, and more compatible.