The tech giant makes its latest “frontier intelligence” model the default standard, heating up the AI arms race against OpenAI.

- The New Standard: Google has replaced Gemini 2.5 Flash with Gemini 3 Flash as the default engine for the Gemini app and AI Mode in Search, offering a significant upgrade in speed and multimodal understanding.

- Benchmark Breakthroughs: The new model outperforms competitors on the MMMU-Pro reasoning benchmark and nearly matches the much larger Gemini 3 Pro on the “Humanity’s Last Exam” test.

- Strategic Pricing: While slightly more expensive than its predecessor, Gemini 3 Flash is positioned as an enterprise “workhorse,” offering 3x the speed of Gemini 2.5 Pro and using about 30% fewer tokens on average for thinking tasks.

In a move designed to democratize frontier-level AI, Google has officially released Gemini 3 Flash, immediately establishing it as the default model within the global Gemini app and AI Mode in Search. Coming just a month after the release of the heavy-hitting Gemini 3, the new Flash variant represents a strategic pivot: bringing high-end reasoning capabilities to users at high speed and at a lower price than the Pro tier.

This release arrives roughly six months after the debut of Gemini 2.5 Flash, but the performance leap suggests a much longer developmental stride. By making this the default experience, Google is aggressively looking to steal OpenAI’s thunder in a market that is becoming increasingly defined by speed and utility rather than just raw computing power.

Redefining “Lightweight” Intelligence

Historically, “Flash” or “Turbo” models in the AI industry have meant trading intelligence for speed. Gemini 3 Flash appears to challenge that trade-off. According to Google’s technical report, the model is not just fast; it is remarkably smart.

On the Humanity’s Last Exam (HLE) benchmark—a rigorous test designed to evaluate expertise across diverse domains without tool assistance—Gemini 3 Flash scored 33.7%. To put that in perspective, the heavyweight Gemini 3 Pro scored 37.5%, and OpenAI’s newly released GPT-5.2 scored 34.5%. Perhaps most tellingly, it completely eclipsed its predecessor, Gemini 2.5 Flash, which scored only 11%.

Furthermore, on the MMMU-Pro benchmark, which tests multimodal understanding and reasoning, Gemini 3 Flash secured the top spot with a score of 81.2%, outscoring all current competitors. This data suggests that the gap between “lightweight” and “pro” models is closing rapidly.
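To make the size of those gaps concrete, the short sketch below works through the arithmetic on the HLE scores quoted above. The scores are the ones reported in this article; the dictionary and the helper name `gap_points` are illustrative only, and the 2.5 Flash figure is treated as exactly 11.0 for the sake of the calculation.

```python
# HLE scores (percent) as quoted in the article.
hle_scores = {
    "Gemini 3 Pro": 37.5,
    "GPT-5.2": 34.5,
    "Gemini 3 Flash": 33.7,
    "Gemini 2.5 Flash": 11.0,  # article says "only 11%"; treated as exact here
}


def gap_points(a: str, b: str) -> float:
    """Difference between two models' scores, in percentage points."""
    return round(hle_scores[a] - hle_scores[b], 1)


# How far Flash trails the Pro model, and how far it leads its predecessor.
gap_to_pro = gap_points("Gemini 3 Pro", "Gemini 3 Flash")
gain_over_predecessor = gap_points("Gemini 3 Flash", "Gemini 2.5 Flash")

# Relative improvement over Gemini 2.5 Flash, as a multiple.
improvement_ratio = hle_scores["Gemini 3 Flash"] / hle_scores["Gemini 2.5 Flash"]

print(f"Gap to Gemini 3 Pro: {gap_to_pro} points")
print(f"Gain over Gemini 2.5 Flash: {gain_over_predecessor} points")
print(f"Improvement ratio: {improvement_ratio:.2f}x")
```

On these figures, Flash trails Pro by under four percentage points while scoring roughly three times what its predecessor did.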

A Multimodal Consumer Experience

For the everyday user, this upgrade transforms the Gemini app into a more versatile assistant. Google emphasizes that the new model excels at identifying and processing multimodal content—meaning it can seamlessly handle text, audio, images, and video simultaneously.

Users can now leverage the model for highly specific, real-world tasks:

- Video Analysis: Upload a short video of a pickleball game and ask for coaching tips.

- Sketch Interpretation: Draw a rough sketch and have the model identify the object or complete the concept.

- Audio Interaction: Upload a lecture recording to generate a quiz or receive a summary analysis.

The interface is also evolving to match the model’s capabilities. Gemini 3 Flash is designed to generate visual answers, including tables and images, and can even help create app prototypes directly via prompts. While Flash takes over as the default “Fast” option, users can still access the “Pro” model (Gemini 3 Pro) via the model picker for complex coding and advanced mathematics.

Note: The rollout also expands access to other tools. Gemini 3 Pro is now available to everyone in the U.S. for search, and the Nano Banana Pro image model is seeing wider availability in search results.

The Enterprise Workhorse

Beyond consumer apps, Google is positioning Gemini 3 Flash as the engine of choice for developers and enterprise. Major industry players like JetBrains, Figma, Cursor, Harvey, and Latitude are already integrating the model via Vertex AI and Gemini Enterprise.

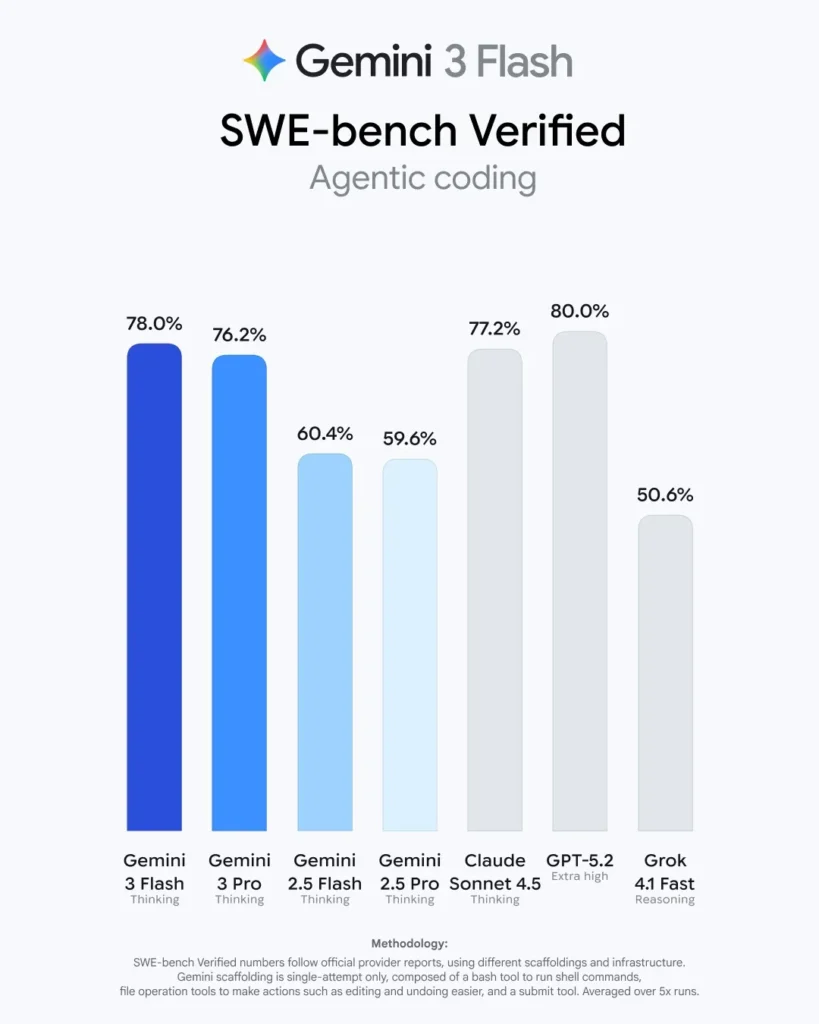

For developers, the model is available in preview via the API and in Antigravity, Google’s recently released coding tool. The coding capabilities are robust; Gemini 3 Flash scores 78% on the SWE-bench Verified benchmark, a score bested only by GPT-5.2.

Pricing and Efficiency

Google’s pricing strategy reflects confidence in the model’s value.

- Input Cost: $0.50 per 1 million tokens.

- Output Cost: $3.00 per 1 million tokens.

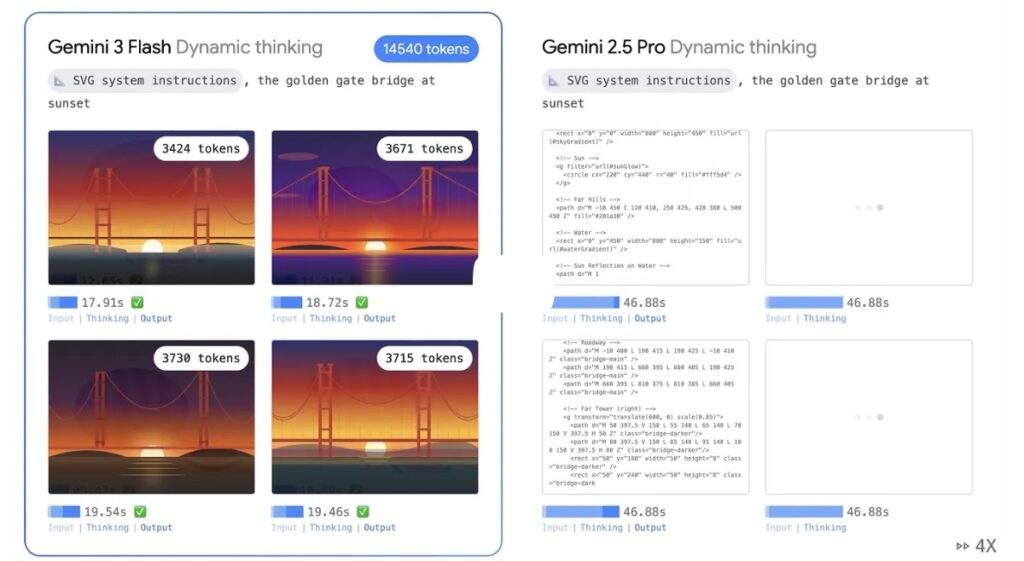

While this is slightly higher than the Gemini 2.5 Flash rates ($0.30 input / $2.50 output), Google argues the efficiency gains are worth it. The new model is three times faster than the Gemini 2.5 Pro model and uses 30% fewer tokens on average for thinking tasks.
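A rough sketch of how that trade-off could play out is shown below. The per-token prices are the ones quoted above; everything else is an assumption made for illustration: the workload size is hypothetical, the model keys are just labels (not confirmed API model IDs), and the 30% token reduction is applied to output tokens relative to Gemini 2.5 Flash, which the article does not state.

```python
# Prices in USD per 1 million tokens, as quoted in the article.
# The dictionary keys are illustrative labels, not confirmed API model IDs.
PRICES = {
    "gemini-2.5-flash": {"input": 0.30, "output": 2.50},
    "gemini-3-flash": {"input": 0.50, "output": 3.00},
}


def job_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of a job with the given token counts."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload: 1M input tokens and 1M output tokens.
input_tokens = 1_000_000
output_tokens = 1_000_000

# ASSUMPTION: the new model emits 30% fewer output tokens for the same job.
reduced_output_tokens = output_tokens * 70 // 100

old_cost = job_cost("gemini-2.5-flash", input_tokens, output_tokens)
new_cost_same_tokens = job_cost("gemini-3-flash", input_tokens, output_tokens)
new_cost_fewer_tokens = job_cost(
    "gemini-3-flash", input_tokens, reduced_output_tokens
)

print(f"Gemini 2.5 Flash:                 ${old_cost:.2f}")
print(f"Gemini 3 Flash, same tokens:      ${new_cost_same_tokens:.2f}")
print(f"Gemini 3 Flash, 30% fewer output: ${new_cost_fewer_tokens:.2f}")
```

Under these assumptions the new model costs $3.50 against $2.80 at identical token counts, but $2.60 once the output reduction is applied. If the actual savings are smaller or the workload is dominated by input tokens, the higher list price wins out.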

“We really position flash as more of your workhorse model,” said Tulsee Doshi, Google’s head of Product for Gemini Models. “Flash is just a much cheaper offering from an input and output price perspective… it actually allows for, for many companies, bulk tasks.”

The “Code Red” Competition

This release occurs against a backdrop of fierce rivalry. Google claims to be processing over 1 trillion tokens per day on its API, a metric that highlights its massive scale.

The pressure is evidently being felt at OpenAI. Reports indicate that Sam Altman recently issued an internal “Code Red” memo following a dip in ChatGPT traffic that coincided with Google’s rising market share. In response, OpenAI has released GPT-5.2 and touted an 8x growth in enterprise message volume since late 2024.

While Google executives refrained from directly naming their competitor, the subtext of the launch is clear. “Just about what’s happening across the industry is like all of these models are continuing to be awesome, challenge each other, push the frontier,” Doshi noted, adding that the introduction of new benchmarks is encouraging the company to push further.

With Gemini 3 Flash, Google isn’t just releasing a model; it is attempting to set a new baseline for what users should expect from a “default” AI experience—instantaneous, multimodal, and incredibly intelligent.