Mastering complex agents, visual design, and adaptive reasoning with a new level of control.

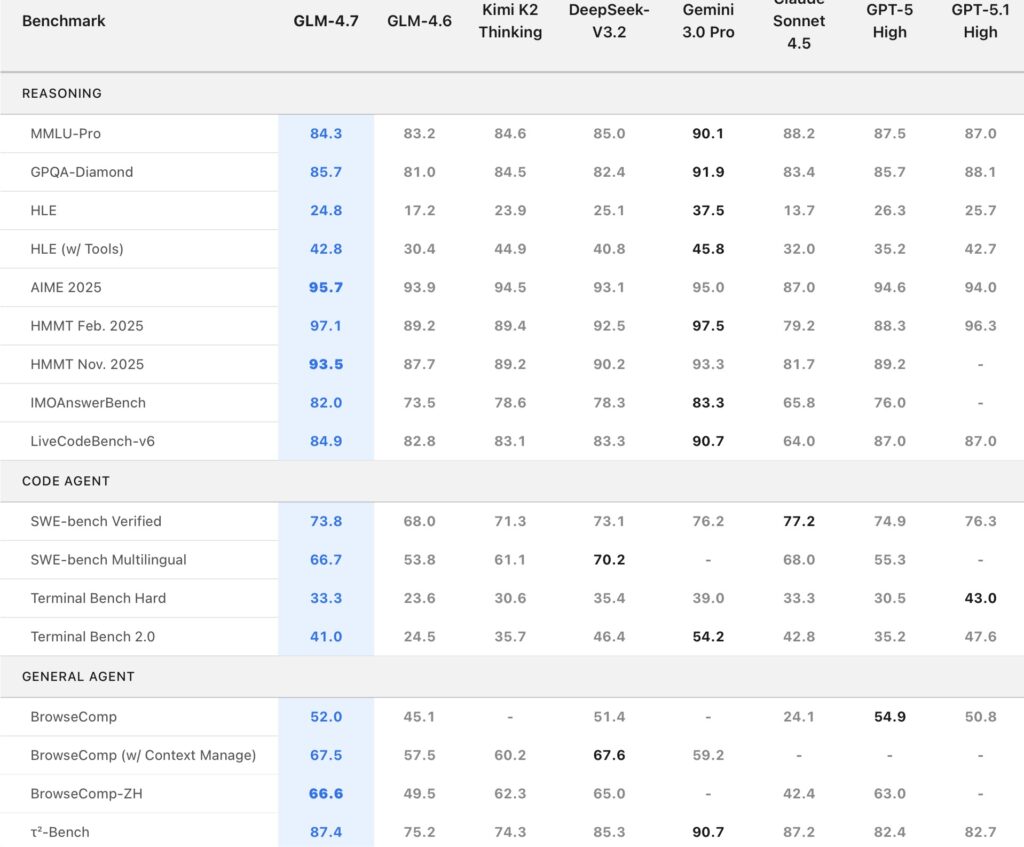

- Agentic Powerhouse: GLM-4.7 delivers massive performance leaps in agentic coding and terminal tasks, showing significant gains on benchmarks like SWE-bench and Terminal Bench 2.0 while integrating seamlessly with frameworks like Claude Code and Cline.

- “Vibe” & Logic: Beyond raw code, the model introduces “Vibe Coding” for superior UI and slide generation, paired with a substantial boost in complex mathematical reasoning on the Humanity’s Last Exam (HLE) benchmark.

- Adaptive Thinking: Features like Preserved Thinking and Turn-level Thinking allow users to dynamically toggle reasoning on or off per turn, balancing cost and latency while maintaining context across long, complex sessions.

The landscape of AI-assisted development is shifting from simple code completion to full-fledged autonomous agents. With the release of GLM-4.7, we are witnessing a pivotal step in this evolution. This isn’t just an incremental update over its predecessor, GLM-4.6; it is a reimagining of how an AI interacts with code, visual design, and complex reasoning tasks. By combining raw computational power with nuanced, controllable thinking processes, GLM-4.7 positions itself not just as a tool, but as a genuine coding partner.

A New Standard in Core Coding and Agents

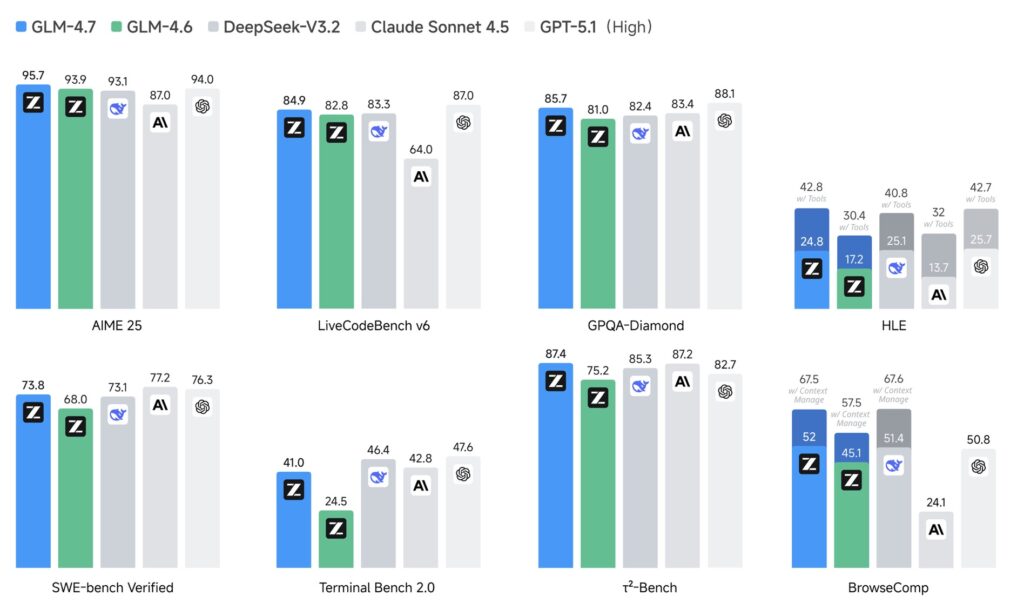

At the heart of GLM-4.7 is a robust improvement in Core Coding capabilities. The model has been fine-tuned to excel in multilingual agentic coding and terminal-based tasks, areas where previous generations often struggled to maintain context or accuracy. The numbers speak for themselves: GLM-4.7 scores 73.8% on SWE-bench (+5.8 percentage points over GLM-4.6) and 66.7% on SWE-bench Multilingual (+12.9 points). Perhaps most impressive is its performance on Terminal Bench 2.0, where it reaches 41%, a gain of 16.5 points.
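To put those gains in perspective, the short sketch below backs out the previous scores they imply and restates each gain in relative terms. It assumes the quoted gains are absolute differences in percentage points; the helper names are illustrative only.

```python
# Reported GLM-4.7 scores and gains over GLM-4.6, as quoted in the text.
# Assumption: each gain is an absolute difference in percentage points.
reported = {
    "SWE-bench": (73.8, 5.8),
    "SWE-bench Multilingual": (66.7, 12.9),
    "Terminal Bench 2.0": (41.0, 16.5),
}


def implied_baseline(score: float, gain_points: float) -> float:
    """Back out the previous score implied by a percentage-point gain."""
    return round(score - gain_points, 1)


def relative_gain(score: float, gain_points: float) -> float:
    """Express the gain as a percentage of the implied previous score."""
    return round(100.0 * gain_points / (score - gain_points), 1)


for name, (score, gain) in reported.items():
    print(
        f"{name}: {implied_baseline(score, gain)}% -> {score}% "
        f"(+{gain} points, about {relative_gain(score, gain)}% relative)"
    )
```

Read this way, the Terminal Bench 2.0 result is the largest jump in both absolute and relative terms.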

These statistics translate into real-world utility. GLM-4.7 supports “thinking before acting,” a critical trait for solving complex problems in mainstream agent frameworks. Whether you are using Claude Code, Kilo Code, Cline, or Roo Code, GLM-4.7 integrates seamlessly to handle heavy lifting in software development logic.

Vibe Coding: Where Logic Meets Design

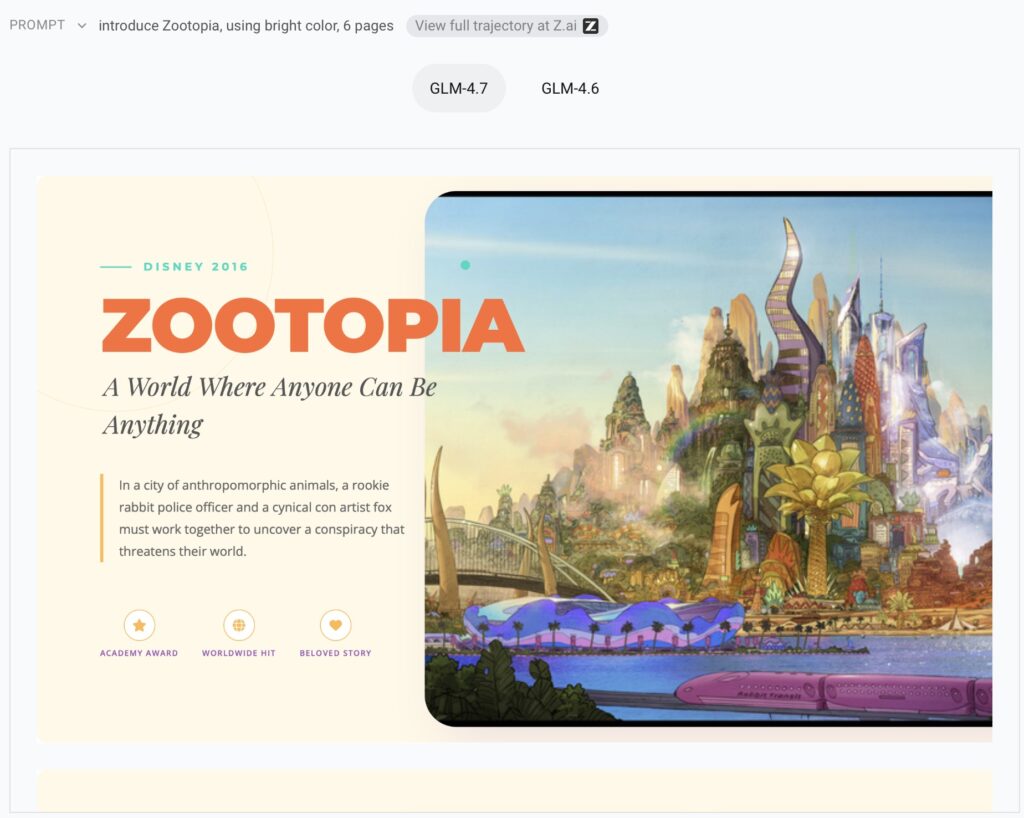

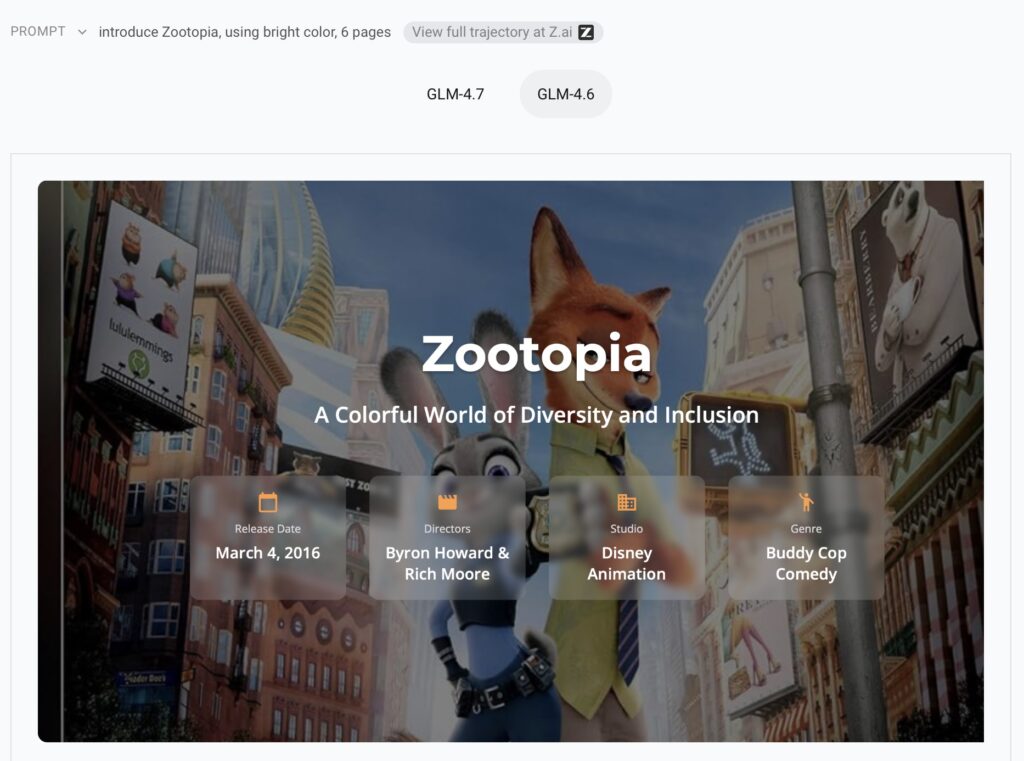

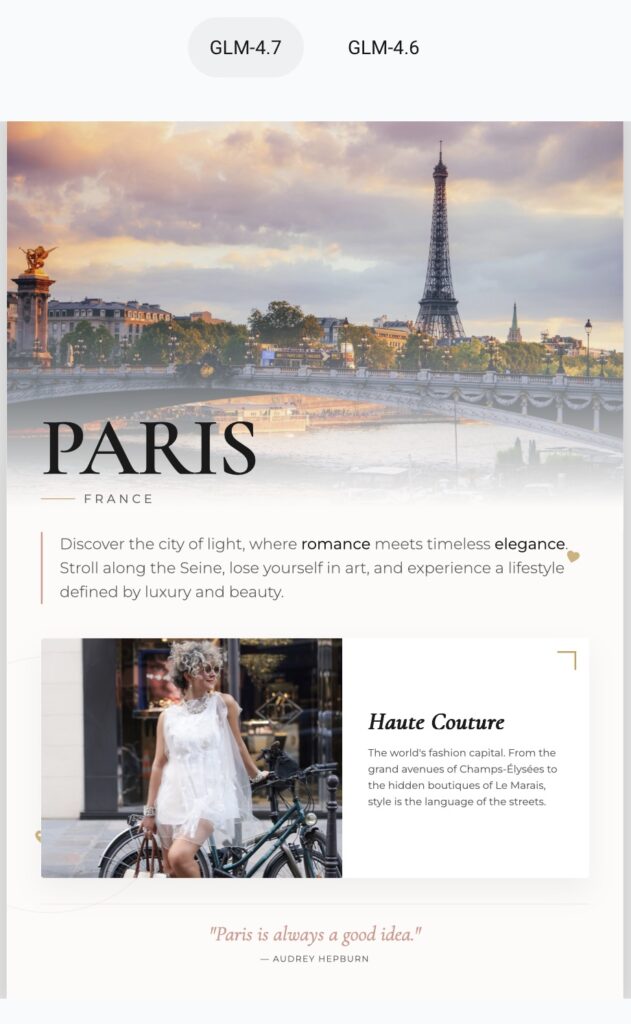

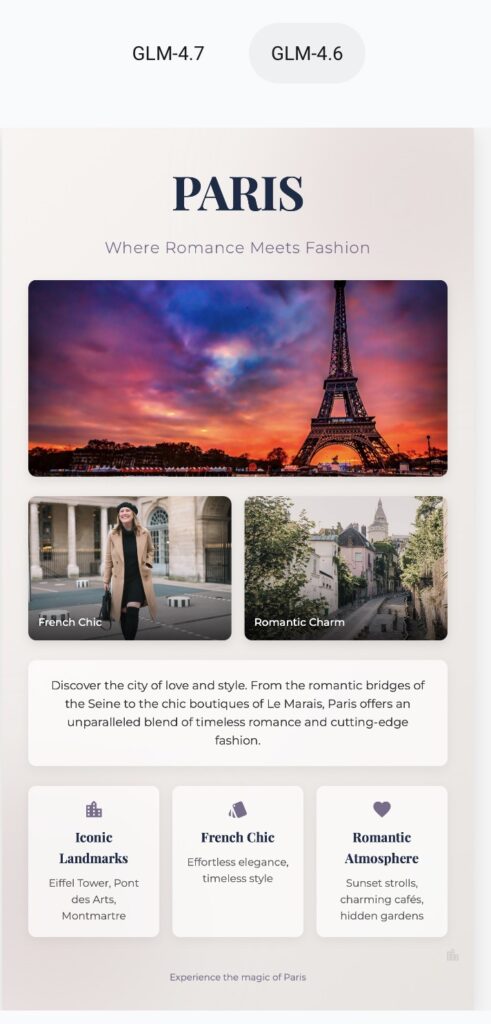

Writing functional code is one thing; creating a product that looks and feels good is another. GLM-4.7 introduces a concept called Vibe Coding, representing a major step forward in User Interface (UI) quality. Developers and designers can expect cleaner, more modern webpages generated on the fly.

Furthermore, this visual intelligence extends to presentations. The model is capable of generating better-looking slides with accurate layout and sizing, bridging the gap between back-end logic and front-end presentation. Combined with improved Tool Using capabilities, reflected in significantly better results on τ2-Bench and on web browsing via BrowseComp, the model becomes a versatile assistant capable of navigating the web and designing the results.

Complex Reasoning and Versatility

Under the hood, GLM-4.7 has sharpened its cognitive abilities. In the realm of Complex Reasoning, the model delivers a substantial boost in mathematics. On the HLE (Humanity’s Last Exam) benchmark, it achieved 42.8%, a 12.4-point improvement over GLM-4.6. This mathematical rigor helps the model keep up when the coding gets tough and requires deep algorithmic thinking.

However, GLM-4.7 is not limited to technical work. Users will notice significant improvements in chat fluidity, creative writing, and role-play scenarios, making it a well-rounded model for diverse conversational needs.

The Thinking Revolution: Interleaved, Preserved, and Turn-level

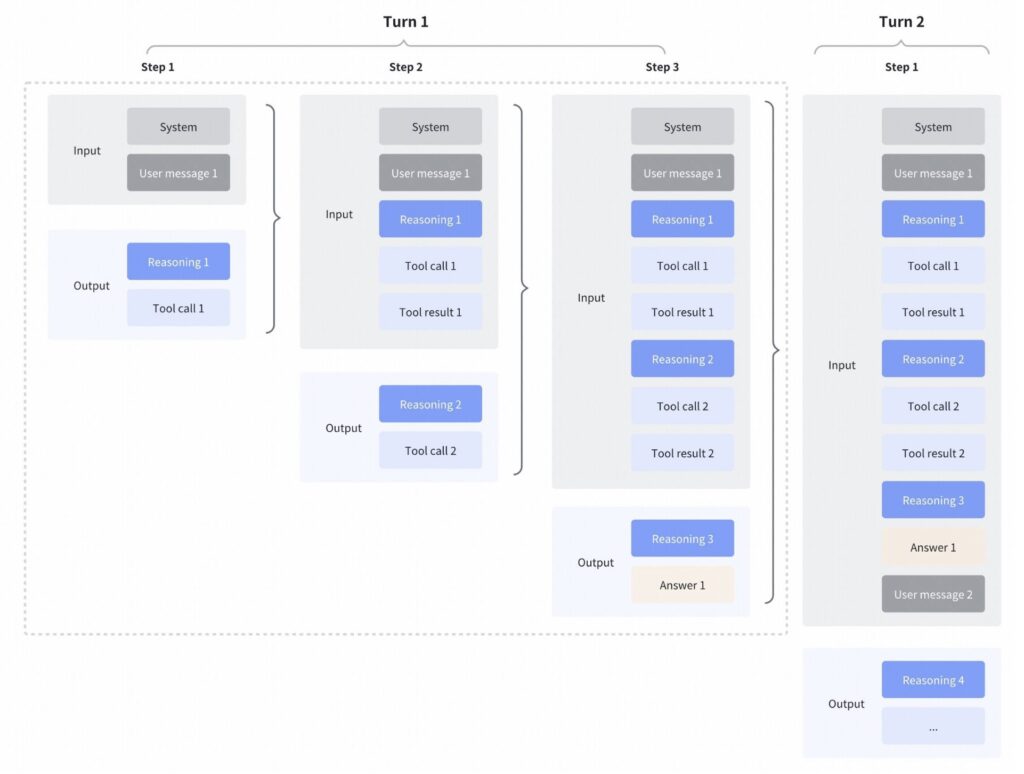

Perhaps the most innovative aspect of GLM-4.7 is how it manages its own “thought process.” The model enhances Interleaved Thinking (thinking before every response and tool call) and introduces two game-changing features: Preserved Thinking and Turn-level Thinking.

Preserved Thinking solves the memory issue in long coding sessions. In agent scenarios, the model automatically retains all thinking blocks across multi-turn conversations. Instead of re-deriving logic from scratch every time you send a new message, it reuses existing reasoning. This reduces information loss and ensures consistency in long-horizon tasks.
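One way to picture Preserved Thinking from the client side is a conversation history that keeps each assistant turn's reasoning next to its visible reply. The sketch below is a toy data structure, not the provider's API: the `Turn` and `Conversation` classes, the `preserve_thinking` flag, and the `reasoning_content` key are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Turn:
    role: str                        # "user", "assistant", or "tool"
    content: str                     # visible message text
    reasoning: Optional[str] = None  # hypothetical slot for a thinking block


@dataclass
class Conversation:
    preserve_thinking: bool = True
    turns: List[Turn] = field(default_factory=list)

    def add(self, role: str, content: str, reasoning: Optional[str] = None) -> None:
        self.turns.append(Turn(role, content, reasoning))

    def to_messages(self) -> List[Dict[str, str]]:
        """Serialize the history, keeping or dropping earlier reasoning."""
        messages = []
        for turn in self.turns:
            message = {"role": turn.role, "content": turn.content}
            if self.preserve_thinking and turn.reasoning is not None:
                # Assumed key name; a real API may use a different field.
                message["reasoning_content"] = turn.reasoning
            messages.append(message)
        return messages


chat = Conversation(preserve_thinking=True)
chat.add("user", "Why does the cache test fail intermittently?")
chat.add(
    "assistant",
    "The eviction timer races with the write path.",
    reasoning="Timer fires on a separate thread; writes are not locked.",
)
chat.add("user", "Propose a fix.")

preserved = chat.to_messages()
chat.preserve_thinking = False
stripped = chat.to_messages()
```

With the flag on, the second request still carries the earlier diagnosis, so the follow-up can build on it; with the flag off, only the visible replies are resent and the reasoning would have to be re-derived.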

Turn-level Thinking offers the user unprecedented control. It allows you to toggle the “thinking switch” for specific requests within the same session.

- Need speed? Disable thinking for lightweight requests, such as asking for a fact or tweaking wording, to reduce latency and cost.

- Need brainpower? Enable thinking for heavy tasks like complex planning, multi-constraint reasoning, or deep debugging.

This dynamic approach ensures the model is “smarter when things are hard, and faster when things are simple,” providing a smooth, cost-effective, and highly intelligent user experience.
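The per-turn switch can be sketched as a request builder that sets a thinking flag for each message in a session. This is a minimal illustration under stated assumptions: the `thinking` field and its `enabled`/`disabled` values, the `glm-4.7` model identifier, and the keyword heuristic are hypothetical and should be checked against the provider's API reference. No network call is made.

```python
from typing import Any, Dict, List


def build_request(
    messages: List[Dict[str, str]], think: bool, model: str = "glm-4.7"
) -> Dict[str, Any]:
    """Build a chat request body with thinking toggled for this turn only."""
    return {
        "model": model,  # assumed model identifier
        "messages": messages,
        # Assumed field shape for the per-turn thinking switch.
        "thinking": {"type": "enabled" if think else "disabled"},
    }


def needs_thinking(prompt: str) -> bool:
    """Toy heuristic: long prompts or 'heavy' keywords get thinking."""
    heavy_markers = ("debug", "plan", "refactor", "prove", "constraint")
    text = prompt.lower()
    return len(text) > 400 or any(marker in text for marker in heavy_markers)


history: List[Dict[str, str]] = []
requests = []
for prompt in (
    "Reword this sentence to sound friendlier.",
    "Debug the race condition in this worker pool and plan a fix.",
):
    history.append({"role": "user", "content": prompt})
    # Copy the history so each request is a snapshot of the session so far.
    requests.append(build_request(list(history), think=needs_thinking(prompt)))
```

In practice the decision would more likely come from the user or the agent framework than from a keyword list; the point is only that the switch is set per request while the session history carries over.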