Here’s How We’re Making AI More Helpful with Gemini

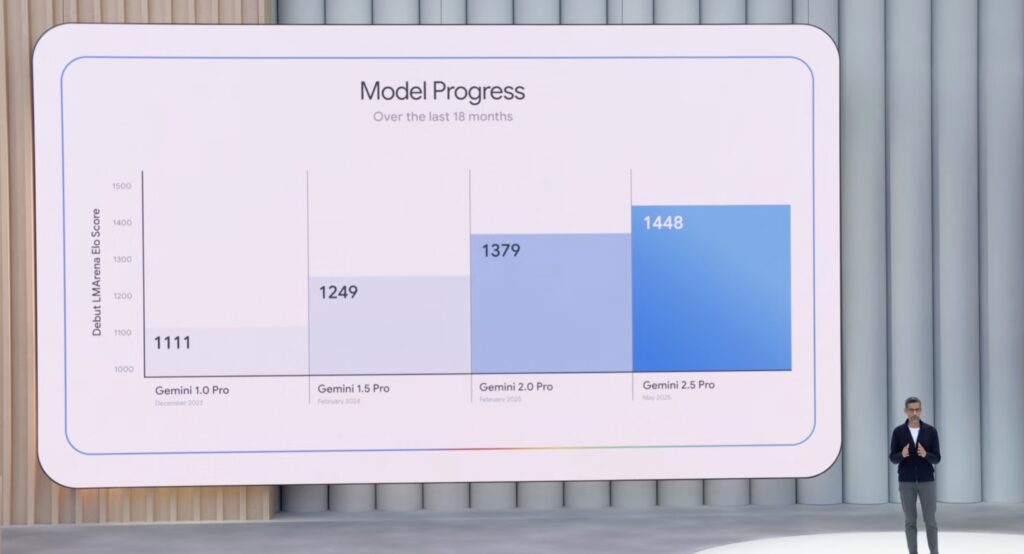

- Rapid Model Progress: Gemini 2.5 Pro tops the LMArena leaderboard, and new infrastructure such as the Ironwood TPU is making AI models faster and more affordable.

- Global AI Adoption: Usage has skyrocketed, with more than 480 trillion tokens processed monthly and over 7 million developers building with Gemini, while projects like Google Beam and Gemini Live are changing how people communicate and get help.

- Personalization and Innovation: From personalized Smart Replies in Gmail to AI Mode in Search and advanced generative media models like Veo 3, Google is bringing AI into everyday life with powerful, user-centric tools.

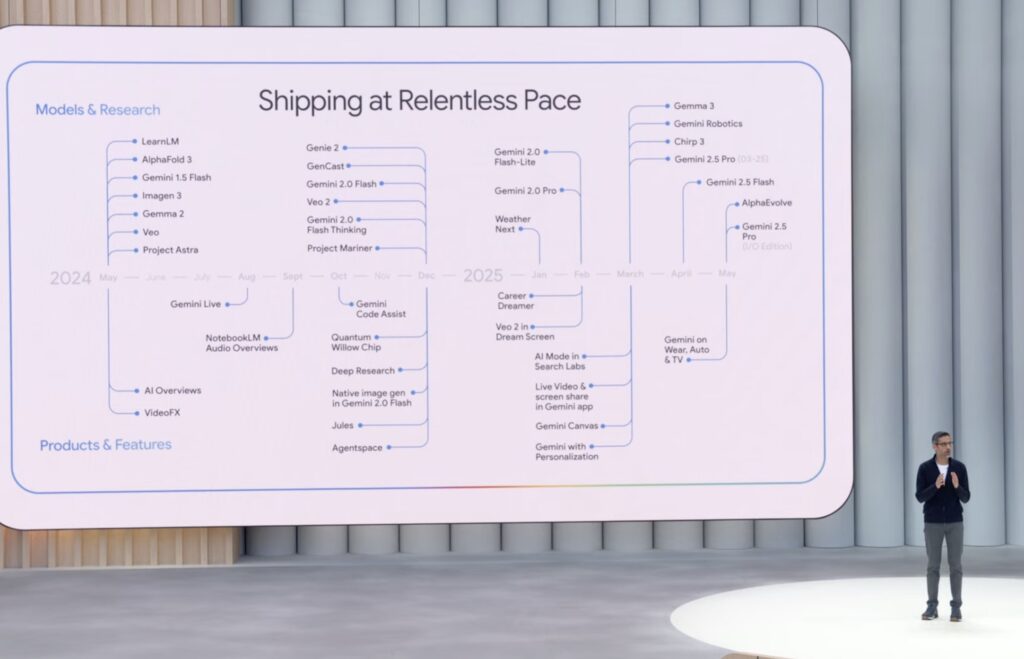

At Google I/O 2025, the theme is clear: AI is no longer just a concept in research labs; it is a tangible reality shaping how we live, work, and connect. Traditionally, the weeks before I/O were quiet, with major announcements saved for the big stage. In the Gemini era, Google is breaking that mold, shipping intelligent models on a random Tuesday in March or unveiling breakthroughs like AlphaEvolve just days before the event. The goal is speed: getting the best models and products into users’ hands as quickly as possible. That pace shows up across every announcement, from model advancements to new applications.

One of the most striking highlights is the rapid progress of Gemini models. Elo scores, a key measure of model performance on LMArena, have risen more than 300 points since the first-generation Gemini Pro. Today, Gemini 2.5 Pro leads the LMArena leaderboard in every category, progress that rests in large part on Google’s infrastructure. The seventh-generation TPU, Ironwood, is designed specifically for thinking and inference workloads at scale. It delivers 10 times the performance of its predecessor and 42.5 exaflops of compute per pod, enabling dramatically faster models while driving down costs. As a result, Google is pushing out the Pareto frontier of AI efficiency, the best available trade-off between model quality and cost, and delivering top-tier models at increasingly accessible price points.
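To give a rough sense of what a 300-point Elo gap means, the standard Elo model maps a rating difference of d points to an expected win probability of 1 / (1 + 10^(-d/400)) for the higher-rated side. The sketch below assumes that textbook formula; LMArena’s exact rating methodology may differ, and the function name `elo_expected_score` is purely illustrative.

```python
def elo_expected_score(rating_gap: float) -> float:
    """Expected score (win probability, ties counted as half) for the
    higher-rated side under the textbook Elo logistic model (scale 400).
    Illustrative assumption: this is not LMArena's own code."""
    return 1.0 / (1.0 + 10 ** (-rating_gap / 400.0))


if __name__ == "__main__":
    # A 300-point gap implies the higher-rated model is preferred
    # roughly 85% of the time in head-to-head comparisons.
    print(f"{elo_expected_score(300):.3f}")
```

This is only an illustration: it treats the two Gemini generations as if they were rated on one comparable scale, which the announcement does not spell out.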

The world is adopting AI at an unprecedented rate. A year ago, Google processed 9.7 trillion tokens a month across its products and APIs; now it processes more than 480 trillion, roughly a 50-fold increase. More than 7 million developers are building with Gemini, five times as many as a year ago, and usage on Vertex AI has grown 40-fold. The Gemini app has more than 400 million monthly active users, and engagement is up 45% among those using the 2.5 Pro model. This adoption marks a new phase of the platform shift, in which decades of research are becoming practical tools for individuals, businesses, and communities worldwide.

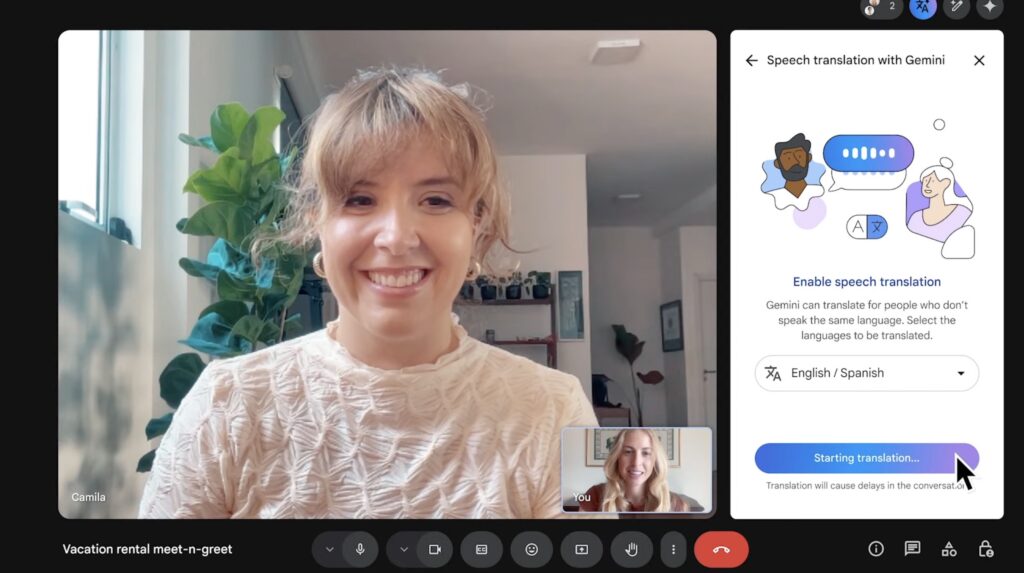

A prime example of this shift from research to reality is Google Beam, the evolution of Project Starline, which debuted at a previous I/O. Starline aimed to recreate the feeling of being in the same room as someone, no matter the distance. Google Beam takes that vision further as an AI-first video communications platform. It combines a state-of-the-art video model with six cameras, using AI to merge the 2D streams into a realistic 3D experience on a lightfield display, with near-perfect head tracking and real-time rendering at 60 frames per second. The result is an immersive conversational experience, set to launch with HP for early customers later this year. Meanwhile, Google Meet is breaking down language barriers with near real-time speech translation that preserves the speaker’s voice, tone, and expressions. It is rolling out in beta for English and Spanish to Google AI Pro and Ultra subscribers, with more languages coming soon, and will reach Workspace business customers for early testing in 2025.

Another leap is Gemini Live, which grew out of Project Astra’s exploration of a universal AI assistant that understands the world around you. With camera and screen sharing, people are using Gemini Live for everything from interview prep to marathon training. It is already available on Android and rolling out to iOS, offering a glimpse of where AI assistance is headed. Similarly, Project Mariner brings agentic capabilities to the Gemini app through “Agent Mode.” In an apartment search, for example, the agent can adjust filters on sites such as Zillow and schedule tours, using the Model Context Protocol (MCP). With compatibility for MCP tools in the Gemini API and SDK, partnerships with companies such as Automation Anywhere and UiPath, and agentic capabilities extending into Chrome and Search, Google is laying the groundwork for a flourishing agent ecosystem.

Personalization is at the heart of making AI truly useful, and Google is pursuing it through “personal context.” With the user’s permission, Gemini models can draw on relevant data across Google apps in a private, transparent way. Imagine a friend emails you asking for road-trip advice: personalized Smart Replies in Gmail can search your past emails and Google Drive files to draft a response with specific itinerary details, written in your own tone and style. The feature is set to launch for subscribers later this year and hints at personalized experiences across Search and beyond.

Search itself is changing quickly. AI Overviews have reached 1.5 billion users in 200 countries and are driving significant query growth in markets such as the U.S. and India. The new AI Mode, a complete reimagining of Search, handles longer, more complex queries and follow-up questions. It is available now in the U.S. as a dedicated tab, with Gemini 2.5 integration for faster, higher-quality responses.

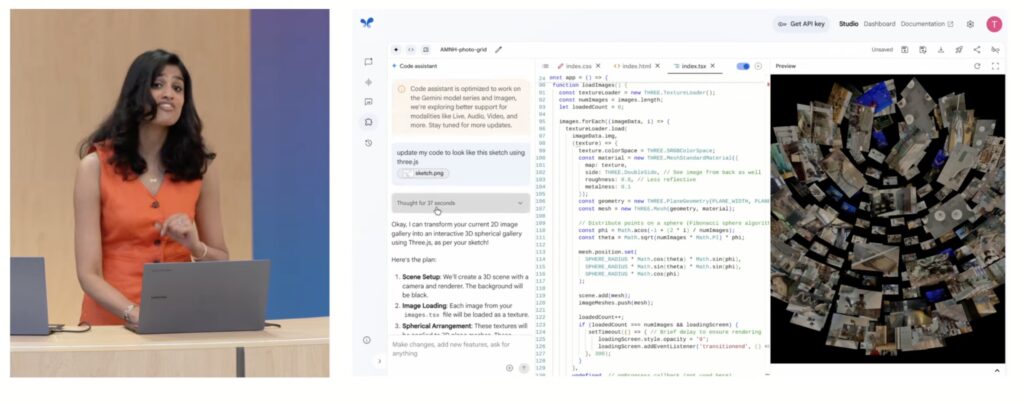

Gemini 2.5 underpins much of this. The efficient 2.5 Flash model, popular with developers for its speed and cost-effectiveness, has improved across reasoning, multimodality, and long-context benchmarks. The 2.5 Pro model gains “Deep Think,” an enhanced reasoning mode that uses parallel thinking techniques. The Gemini app is also becoming more personal and proactive: Deep Research now accepts file uploads and will soon connect to Google Drive and Gmail for custom reports, and Canvas can create infographics, quizzes, and podcasts. Gemini Live’s camera and screen-sharing features are now free for everyone, including iOS users, with deeper integration with Google apps on the horizon.

Creativity gets a boost from new generative media models: Veo 3, a video model with native audio generation, and Imagen 4, Google’s most capable image generation model yet. Both are available in the Gemini app, and a new tool called Flow lets filmmakers craft cinematic clips or extend short scenes.

These tools open up new possibilities for creators and mirror the broader opportunity AI presents to improve lives. From robotics to quantum computing, and from AlphaFold to Waymo, today’s research is poised to become tomorrow’s reality. A personal anecdote shared at I/O 2025 captures this impact: the speaker watched his 80-year-old father marvel at a Waymo ride in San Francisco, and seeing technological progress through fresh eyes served as a reminder of AI’s power to inspire and transform.

The opportunity with AI is immense, and Google I/O 2025 underscores a collective responsibility to ensure its benefits reach everyone. As developers, builders, and problem-solvers put these tools to work, the focus remains on a future in which technology continues to inspire awe and move humanity forward. With Gemini leading the charge, Google is not just keeping pace with AI’s evolution but accelerating it, turning research into reality one breakthrough at a time.