Instant Answers, Unmatched Speed, and Cutting-Edge Technology

- Cerebras Inference now powers Mistral’s Le Chat, delivering instant responses with its new Flash Answers feature.

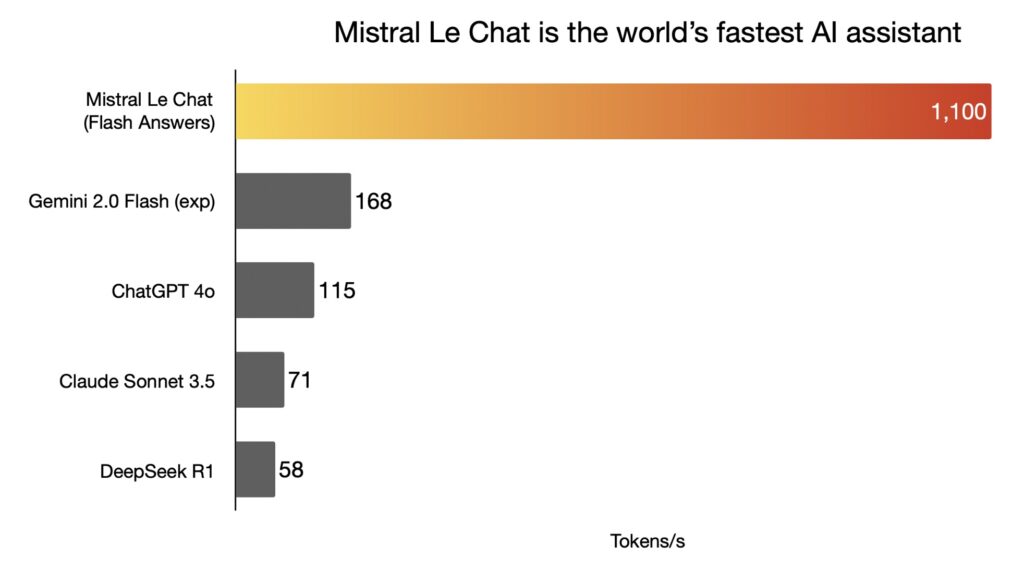

- At over 1,100 tokens per second, Le Chat is 10x faster than leading AI models like ChatGPT 4o, Sonnet 3.5, and DeepSeek R1.

- This breakthrough is made possible by Cerebras’ Wafer Scale Engine 3 and innovative speculative decoding techniques, setting a new standard for AI performance.

In artificial intelligence, speed and efficiency are paramount. Mistral’s Le Chat, now powered by Cerebras Inference, sets a new benchmark for AI performance with its Flash Answers feature. At more than 1,100 tokens per second, Le Chat is the fastest AI assistant in the world, about 10x faster than competitors such as ChatGPT 4o, Sonnet 3.5, and DeepSeek R1. This partnership between Cerebras and Mistral redefines what users can expect from AI-powered chat and code generation tools.

The Technology Behind the Speed

At the heart of this breakthrough is Cerebras’ Wafer Scale Engine 3 (WSE-3), a cutting-edge hardware innovation that enables unparalleled AI inference performance. Unlike traditional architectures, the WSE-3 leverages an SRAM-based inference design, which minimizes latency and maximizes throughput. This is further enhanced by speculative decoding techniques, developed in collaboration with Mistral’s researchers, to optimize the processing of text-based queries.
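
To make the idea of speculative decoding concrete, here is a minimal sketch of its greedy variant. It is not the Cerebras or Mistral implementation, whose details are not public in this article. The function names, the toy models, and the draft length `k` are all assumptions made for illustration. A small draft model guesses a few tokens ahead, the large target model checks them, and every guess the target agrees with is kept.

```python
from typing import Callable, List

# A "model" here is any function mapping a token context to the next token.
NextToken = Callable[[List[int]], int]


def greedy_decode(model: NextToken, prompt: List[int], max_new_tokens: int) -> List[int]:
    """Baseline: one sequential call to the large model per generated token."""
    out = list(prompt)
    for _ in range(max_new_tokens):
        out.append(model(out))
    return out


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       max_new_tokens: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding: draft k tokens, keep those the target agrees with."""
    if k < 1:
        raise ValueError("k must be at least 1")
    out = list(prompt)
    end = len(prompt) + max_new_tokens
    while len(out) < end:
        # 1. The cheap draft model proposes up to k tokens, one after another.
        proposal: List[int] = []
        for _ in range(min(k, end - len(out))):
            proposal.append(draft(out + proposal))
        # 2. The target model checks each proposed position. A real system
        #    scores all positions in one batched pass; this toy loop calls
        #    the target once per position for clarity.
        for i, guess in enumerate(proposal):
            expected = target(out + proposal[:i])
            if expected != guess:
                # Keep the agreed prefix, then substitute the target's token.
                proposal = proposal[:i] + [expected]
                break
        out.extend(proposal)
    return out


# Hypothetical toy models: the draft agrees with the target most of the time.
def toy_target(ctx: List[int]) -> int:
    return (sum(ctx) * 31 + len(ctx)) % 50


def toy_draft(ctx: List[int]) -> int:
    token = toy_target(ctx)
    return token if len(ctx) % 3 else (token + 1) % 50


# The speculative output matches plain greedy decoding with the target model.
assert speculative_decode(toy_target, toy_draft, [1, 2, 3], 20) == \
    greedy_decode(toy_target, [1, 2, 3], 20)
```

In this greedy form the output is identical to what the target model would produce on its own, so the gain is purely in speed: when the draft guesses well, several tokens are confirmed per verification step instead of one.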

The result? Le Chat can process over 1,100 tokens per second, a speed that is 10 times faster than its closest competitors. This means users no longer have to wait for lengthy responses to their queries or coding prompts. Whether it’s generating complex code or answering intricate questions, Le Chat delivers results instantly, transforming the user experience.
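
As a rough back-of-the-envelope illustration of what these figures mean for waiting time, the sketch below divides response length by generation speed. The 1,100 tokens per second figure and the 10x ratio come from this article. The response lengths are hypothetical, and the calculation ignores time to first token, network latency, and queueing.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream a response of num_tokens at a steady generation rate."""
    return num_tokens / tokens_per_second


FLASH_TPS = 1100.0             # throughput quoted in the article
BASELINE_TPS = FLASH_TPS / 10  # assumed: a system 10x slower, per the 10x claim

# Hypothetical response lengths: a short answer, a long answer, a code file.
for num_tokens in (100, 500, 2000):
    fast = generation_seconds(num_tokens, FLASH_TPS)
    slow = generation_seconds(num_tokens, BASELINE_TPS)
    print(f"{num_tokens:>5} tokens: {fast:.1f} s at 1,100 tok/s vs {slow:.1f} s at 110 tok/s")
```

The ratio between the two columns is always 10, but the absolute gap grows with response length, which is why the difference is most noticeable on long code generations.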

A New Era for AI Assistants

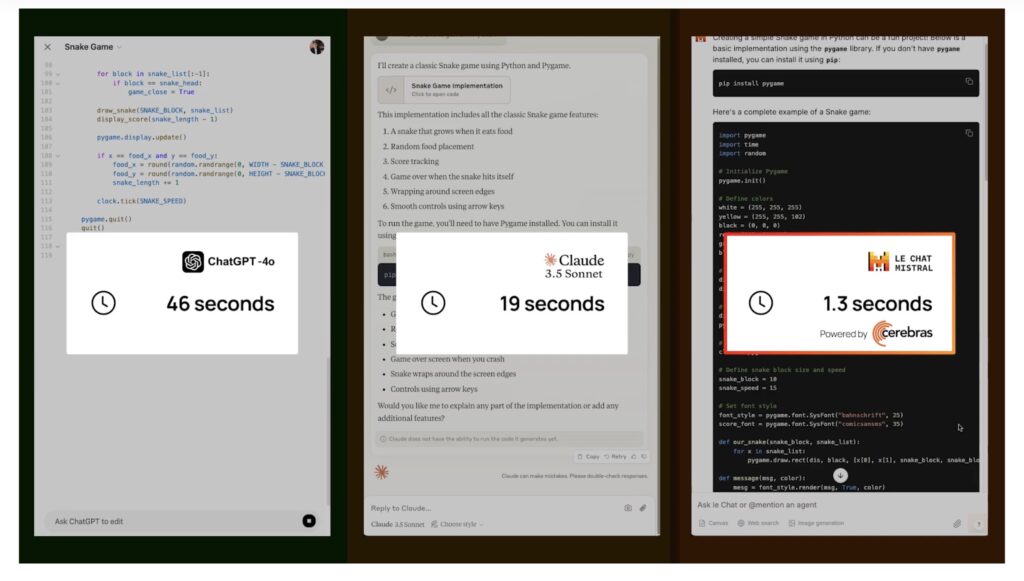

The implications of this speed are profound. AI assistants are increasingly being used for tasks like chat-based interactions and code generation, two areas where response time is critical. With Cerebras Inference powering Le Chat, users can now enjoy a seamless, real-time experience that feels almost like conversing with a human expert.

For example, in coding scenarios, where other AI models might take up to 50 seconds to complete a prompt, Le Chat finishes the task in the blink of an eye. This not only saves time but also enhances productivity, making it an invaluable tool for developers, researchers, and professionals across industries.

Mistral and Cerebras: A Perfect Partnership

Mistral, Europe’s leading AI startup, has been at the forefront of developing advanced foundation models. Its flagship Mistral Large 2 model, with 123 billion parameters, is a testament to the company’s expertise in building state-of-the-art AI systems. By integrating Cerebras’ technology, Mistral has taken Le Chat to the next level, combining its robust model architecture with the world’s fastest AI inference engine.

This collaboration also highlights the growing importance of partnerships in the AI ecosystem. By pooling resources and expertise, companies like Cerebras and Mistral are pushing the boundaries of what AI can achieve, setting new standards for performance and usability.

What’s Next for Le Chat?

For its initial release, Cerebras is focusing on serving text-based queries for the Mistral Large 2 model. Users will notice a “Flash Answer” icon in the chat interface, signaling the use of Cerebras Inference for instant responses. This feature is just the beginning, as both companies plan to expand their collaboration in 2025, bringing even more advanced capabilities to Le Chat and other models.

The future of AI is fast, efficient, and user-centric, and Cerebras is leading the charge. With its record-breaking performance in models like Llama 3.3 70B, Llama 3.1 405B, and DeepSeek R1 70B, Cerebras Inference is poised to become the gold standard for AI inference.

Le Chat, powered by Cerebras, is more than just an AI assistant. It is a glimpse into the future of artificial intelligence. By combining speed, accuracy, and cutting-edge technology, it delivers a user experience that sets it apart from the competition.

Whether you’re a developer looking for instant code generation or a user seeking quick, accurate answers, Le Chat is ready to redefine your expectations. Try the new Mistral Le Chat today and experience the power of instant inference.