Meta’s latest release gives developers customizable models that bring vision and text AI to edge and mobile devices.

- Llama 3.2 introduces small and medium-sized vision models (11B and 90B) and lightweight text-only models (1B and 3B) sized for edge and mobile devices.

- The release includes a context length of 128K tokens, image reasoning capabilities, and a simplified deployment process for developers.

- By promoting openness and collaboration, Meta aims to drive innovation in AI and give developers the tools to build their own applications.

Meta has unveiled Llama 3.2, a release focused on edge AI and vision capabilities. It introduces small and medium-sized vision large language models (LLMs) and lightweight text-only models tailored for mobile and edge devices. With an emphasis on flexibility and usability, Llama 3.2 is meant to let developers build AI features into a wider range of applications.

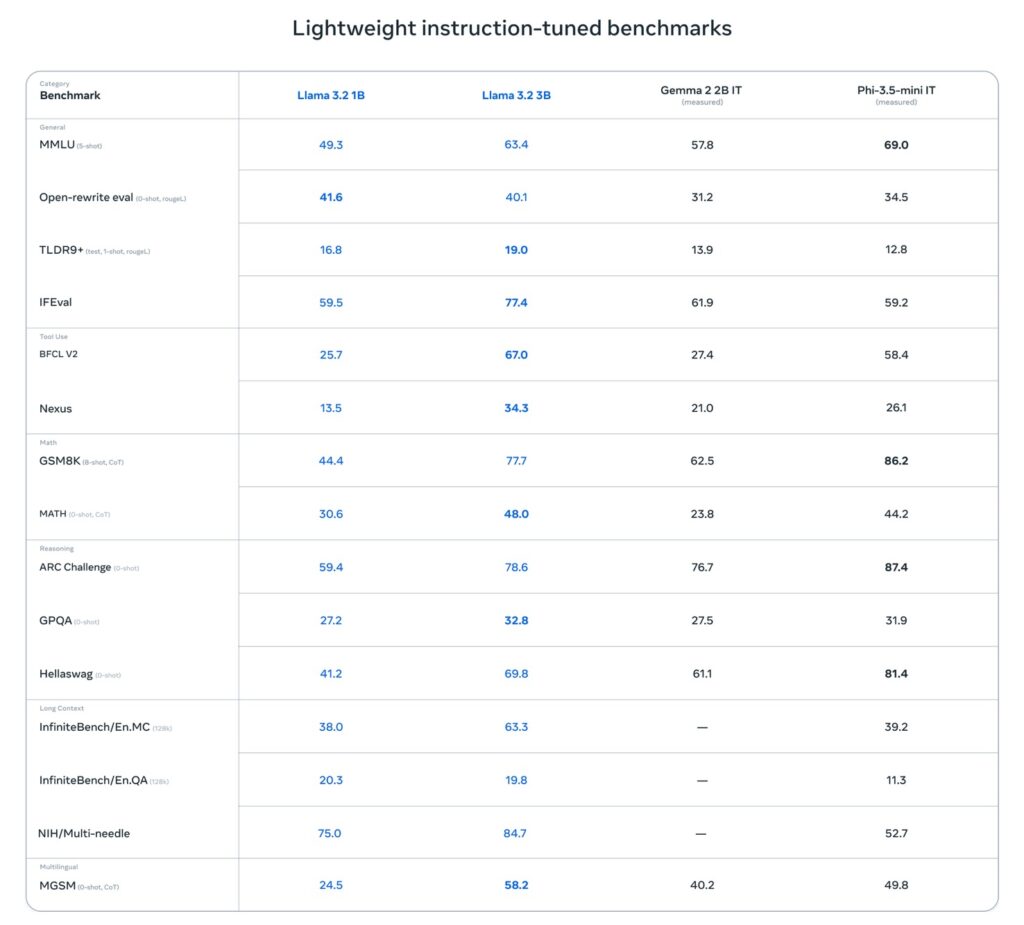

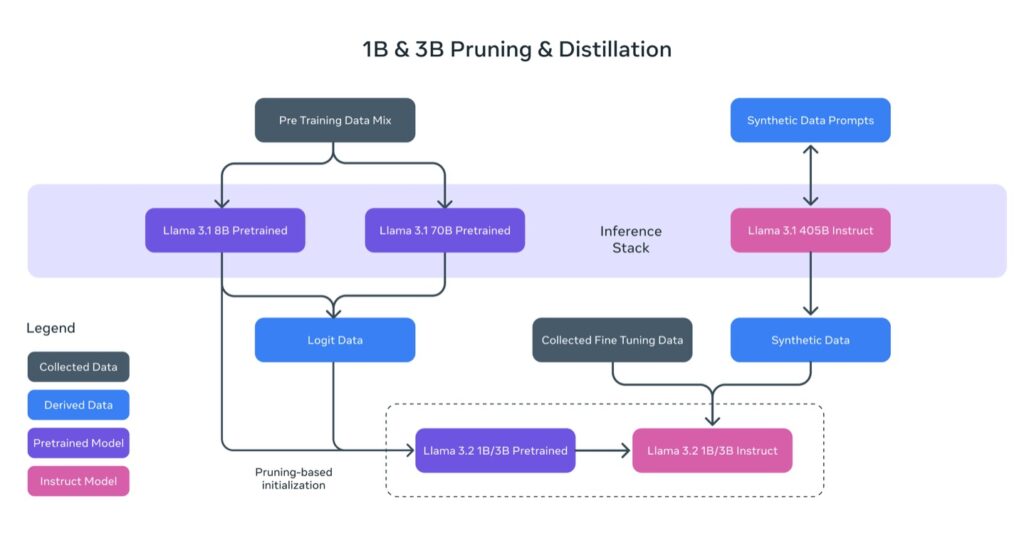

One of the key highlights of Llama 3.2 is the introduction of the 1B and 3B models, which support a context length of 128K tokens. These models are suited to on-device tasks like summarization, instruction following, and rewriting. They are optimized for Qualcomm and MediaTek hardware, so developers can run AI features directly on edge devices. Processing locally avoids a round trip to a server, which reduces latency and keeps user data on the device.
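To make the 128K-token figure concrete, here is a rough planning sketch for deciding whether a long document fits in one request or has to be split before summarization. The four-characters-per-token ratio and the reserved-token margin are assumptions for illustration, not measurements from the Llama tokenizer, and the helper names are hypothetical.

```python
# Rough planning sketch: does a document fit a 128K-token context window?
CONTEXT_TOKENS = 128_000   # the article's "128K" figure, taken at face value
CHARS_PER_TOKEN = 4        # assumed rule of thumb, not a tokenizer measurement


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def split_for_context(text: str, reserved_tokens: int = 2_000) -> list[str]:
    """Split text into pieces that each fit the window, leaving
    reserved_tokens free for the instruction and the model's reply."""
    budget_chars = (CONTEXT_TOKENS - reserved_tokens) * CHARS_PER_TOKEN
    return [text[i:i + budget_chars] for i in range(0, len(text), budget_chars)]


document = "word " * 200_000   # 1,000,000 characters of dummy text
chunks = split_for_context(document)
print(estimate_tokens(document), len(chunks))
```

A real application would count tokens with the model's own tokenizer and split on paragraph or sentence boundaries rather than at fixed character offsets.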

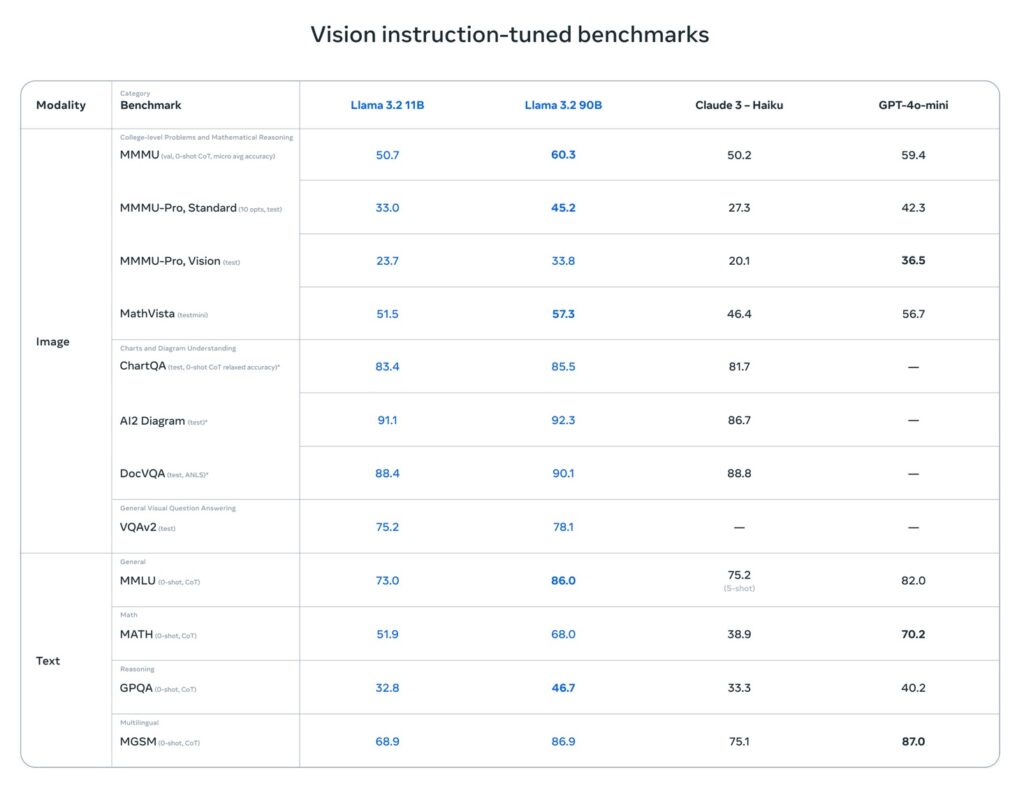

In addition to text capabilities, Llama 3.2 includes vision models that Meta says are competitive with leading closed models such as Claude 3 Haiku. The 11B and 90B vision models handle image understanding tasks, including document-level comprehension, chart interpretation, and visual grounding. For instance, a user could ask the model about a sales graph and receive an answer based on the data shown in it. This combination of language and vision makes the models more useful and opens up new possibilities for interactive applications.
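The sales-graph scenario can be sketched as a chat request that pairs one image with one question. The message layout below (a `content` list mixing an image slot and a text part) follows a convention used by several multimodal toolkits; it is an assumption here, not something the release notes specify, and `build_chart_question` is a hypothetical helper. No model is called.

```python
def build_chart_question(question: str) -> list[dict]:
    """Return a single-turn chat with one image slot followed by the text question."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image"},                   # slot only; the image itself is supplied separately
                {"type": "text", "text": question},  # the user's question about that image
            ],
        }
    ]


messages = build_chart_question("Which month had the highest sales in this chart?")
print(messages[0]["content"][1]["text"])
```

How the image bytes are attached depends on the runtime, so check the documentation of whichever library or hosted service serves the model.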

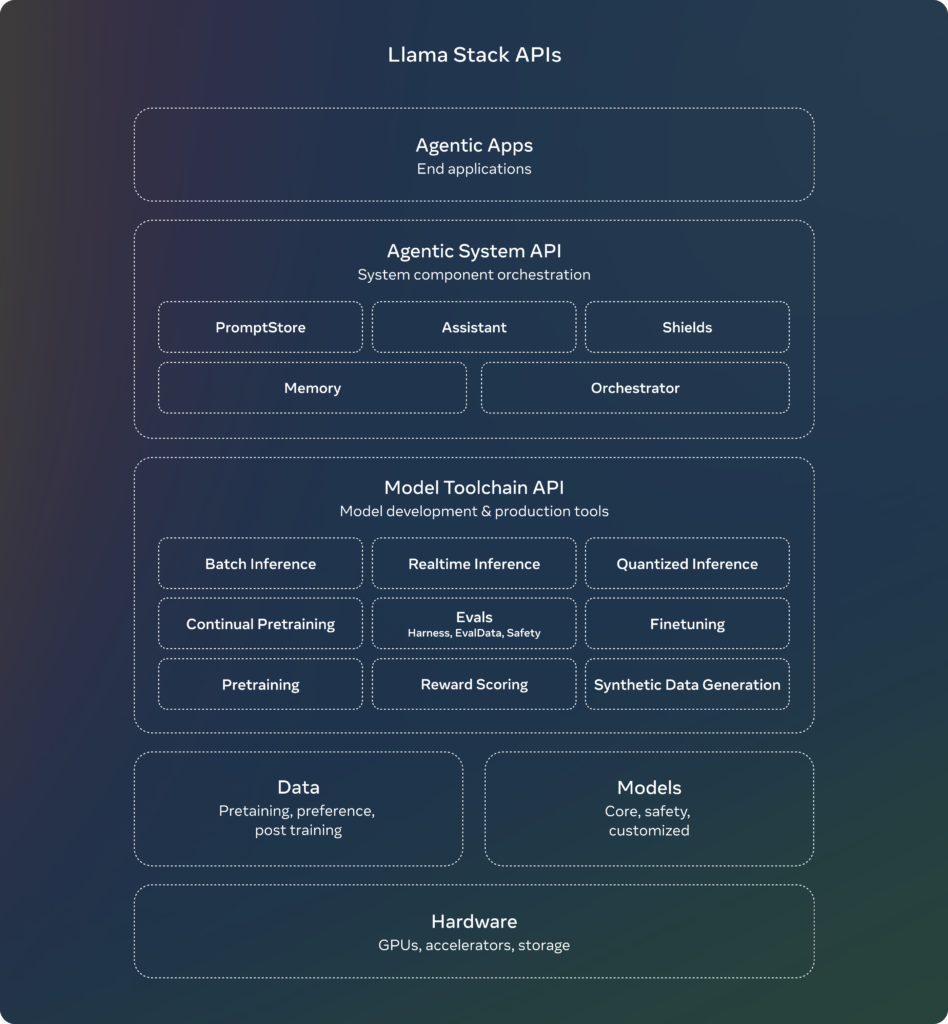

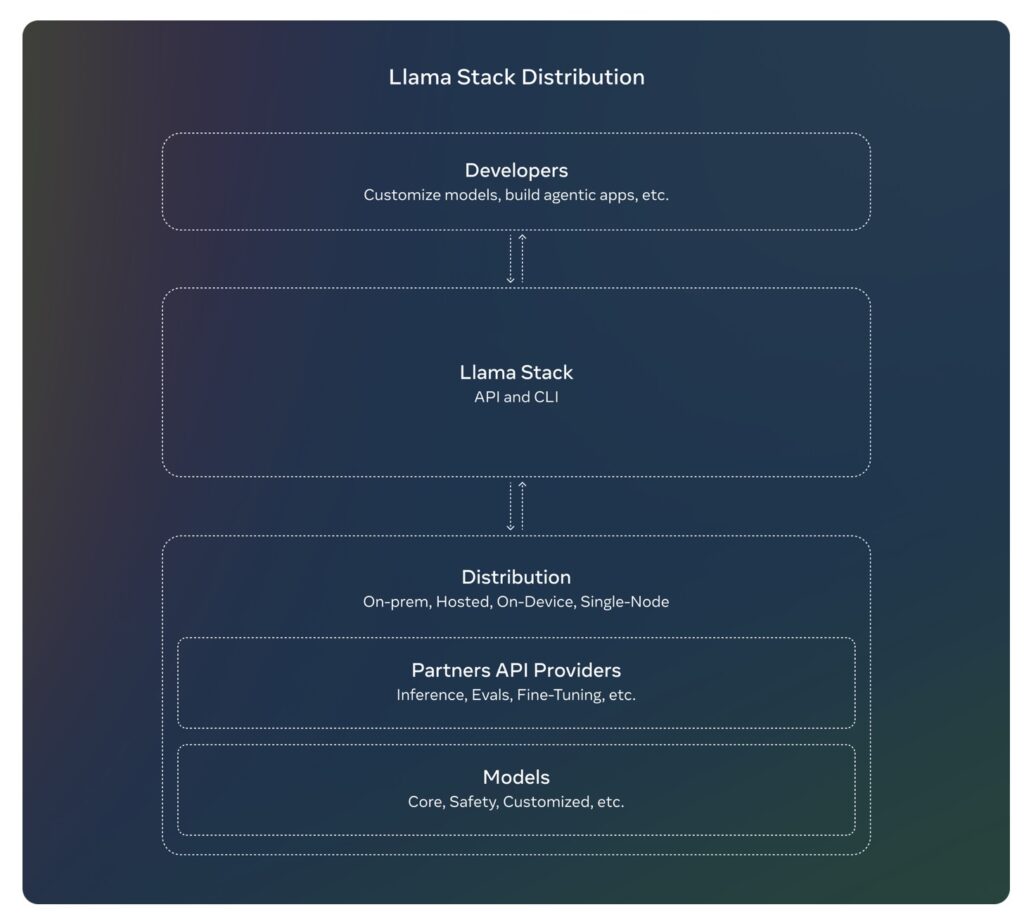

Meta has prioritized ease of use in Llama 3.2 by providing the Llama Stack, which simplifies deploying these models on-premises, in the cloud, or on-device. This infrastructure lets developers build retrieval-augmented generation (RAG) and other tool-enabled applications with less integration work. Collaborations with industry leaders like AWS, Databricks, and Dell Technologies further strengthen the ecosystem surrounding Llama, making it easier for businesses to integrate these models into their existing systems.
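For readers unfamiliar with RAG, the idea is to fetch relevant text first and then place it in the prompt so the model answers from it. The sketch below is a toy version that uses word overlap for retrieval. It does not use the Llama Stack API, and every function name in it is hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Assemble a prompt that places the retrieved passages before the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "Llama 3.2 includes 1B and 3B text models for on-device use.",
    "The 11B and 90B models accept image inputs.",
    "Llama Guard 3 filters text and image inputs.",
]
prompt = build_prompt("Which models accept image inputs?", docs, k=1)
print(prompt)
```

Production systems usually replace the word-overlap ranking with embedding similarity over a vector index, but the retrieve-then-prompt shape stays the same.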

The release of Llama 3.2 comes at a time when the demand for open, customizable AI solutions is growing. Meta’s commitment to openness and collaboration is evident in its partnerships with over 25 companies, including major players such as AMD, Google Cloud, and Microsoft Azure. By enabling a wide array of services at launch, Meta is fostering a community where developers can innovate without the barriers often associated with proprietary systems.

Beyond functionality, Llama 3.2 also incorporates safety features designed to support responsible use. Llama Guard 3 provides filtering capabilities for text and image inputs, helping developers keep their applications within safety and compliance requirements. With Llama Guard optimized for efficiency, developers can deploy these safeguards without compromising on performance.
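One common way to use an input filter is to classify the prompt first and call the main model only if it passes. The sketch below assumes the classifier replies with `safe`, or with `unsafe` followed by a line of category codes. That reply format is an assumption to verify against the Llama Guard model card, and the classifier and generator here are stand-in functions, not real model calls.

```python
from typing import Callable


def parse_verdict(raw: str) -> tuple[bool, list[str]]:
    """Parse a reply of 'safe' or 'unsafe' plus a comma-separated category line."""
    lines = [ln.strip() for ln in raw.strip().splitlines() if ln.strip()]
    if not lines:
        return False, []  # fail closed when the classifier returns nothing
    is_safe = lines[0].lower() == "safe"
    if is_safe or len(lines) < 2:
        return is_safe, []
    return False, [c.strip() for c in lines[1].split(",") if c.strip()]


def guarded_generate(
    prompt: str,
    classify: Callable[[str], str],
    generate: Callable[[str], str],
) -> str:
    """Run the classifier first; call the generator only for safe prompts."""
    is_safe, categories = parse_verdict(classify(prompt))
    if not is_safe:
        return "Request blocked (" + (", ".join(categories) or "unspecified") + ")"
    return generate(prompt)


# Stand-ins for illustration only; a real setup would call the guard and chat models.
def fake_classify(text: str) -> str:
    return "unsafe\nS1" if "attack" in text else "safe"


def fake_generate(text: str) -> str:
    return f"echo: {text}"


print(guarded_generate("hello", fake_classify, fake_generate))
print(guarded_generate("plan an attack", fake_classify, fake_generate))
```

The same wrapper can also be applied to the model's output before it is shown to the user.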

Llama 3.2 is a notable step forward for AI in edge computing and vision applications. By combining these capabilities with an open approach, Meta is giving developers the means to build user-centric applications. As Llama 3.2 reaches a broader audience, the range of applications built on it should grow, to the benefit of developers and users alike.