Breaking Through Length Limitations in AI Text Generation with New Agent-Based Techniques

- Extended Output Capability: LongWriter enables large language models (LLMs) to generate coherent text outputs exceeding 10,000 words, well beyond the roughly 2,000 words at which existing models typically top out.

- Innovative AgentWrite Pipeline: The new AgentWrite pipeline decomposes ultra-long generation tasks into manageable subtasks, enabling LLMs to produce extended outputs without compromising coherence.

- New Benchmarking Standards: The introduction of LongBench-Write provides a comprehensive evaluation framework for assessing ultra-long text generation capabilities in LLMs.

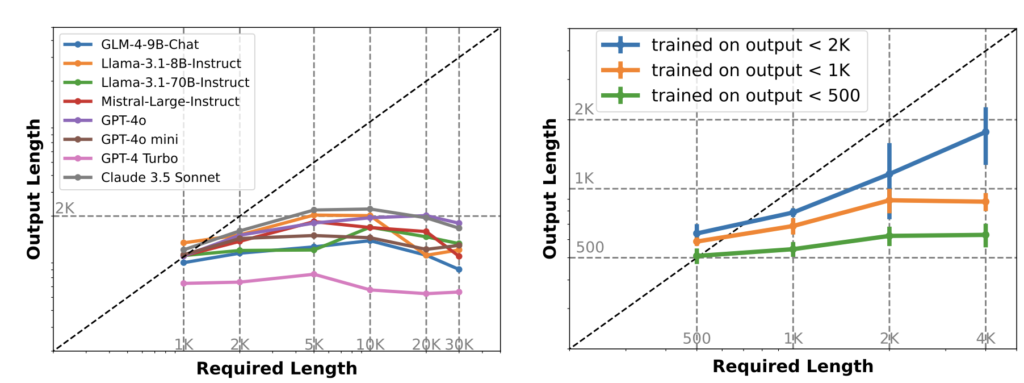

As large language models (LLMs) continue to evolve, they have become remarkably good at handling long inputs. Generating equally long outputs is another matter: models often top out at around 2,000 words even though they can process much longer sequences. This limitation has been a significant hurdle in fields that require extended, coherent text, such as academic writing, detailed reporting, and comprehensive content creation.

LongWriter: Extending the Frontier

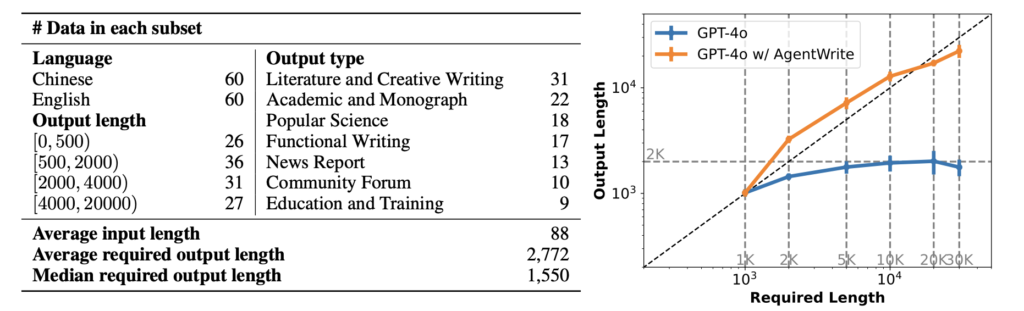

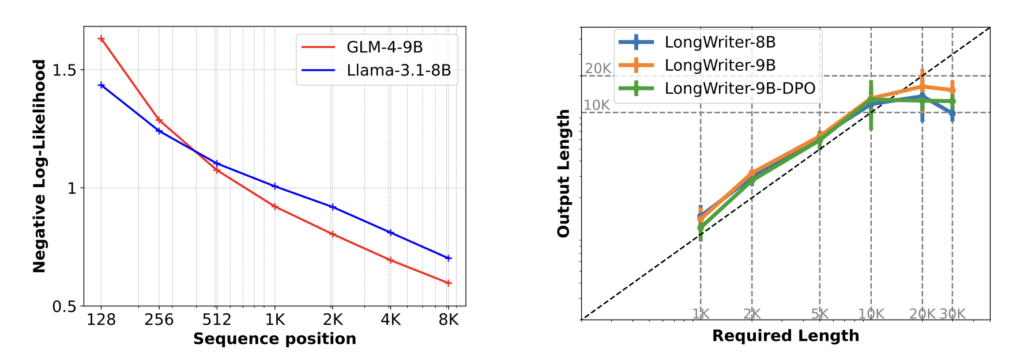

Enter LongWriter, an approach designed to unlock the potential of LLMs to generate outputs exceeding 10,000 words. The team behind LongWriter identified that the output-length limit in existing models stems not from the models’ inherent capabilities but from the training data used during supervised fine-tuning (SFT). Most SFT datasets contain few, if any, examples with outputs longer than about 2,000 words, so models fine-tuned on them rarely learn to write past that length.
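The claim about SFT data can be checked on any dataset by measuring how many examples have long outputs. The snippet below is a minimal sketch, not the team's tooling: the function name `share_of_long_outputs`, the `"output"` field, and the toy dataset are all assumptions made for illustration, and words are counted by splitting on whitespace, which is only an approximation.

```python
from typing import Iterable, Mapping


def share_of_long_outputs(
    examples: Iterable[Mapping[str, str]],
    threshold_words: int = 2000,
    output_key: str = "output",  # hypothetical field name; adapt to your dataset
) -> float:
    """Return the fraction of examples whose output exceeds threshold_words."""
    # Whitespace splitting is a rough word count, good enough for an audit.
    lengths = [len(example[output_key].split()) for example in examples]
    if not lengths:
        return 0.0
    return sum(n > threshold_words for n in lengths) / len(lengths)


# Toy stand-in for an SFT dataset: only one of four outputs passes 2,000 words.
toy_dataset = [
    {"prompt": "Summarize the report.", "output": "word " * 300},
    {"prompt": "Write a short essay.", "output": "word " * 1500},
    {"prompt": "Write a long guide.", "output": "word " * 2500},
    {"prompt": "Explain the result.", "output": "word " * 800},
]

print(share_of_long_outputs(toy_dataset))
```

A very small share on a real dataset would be consistent with the explanation above: a model that almost never sees long outputs during fine-tuning has little to imitate when asked for one.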

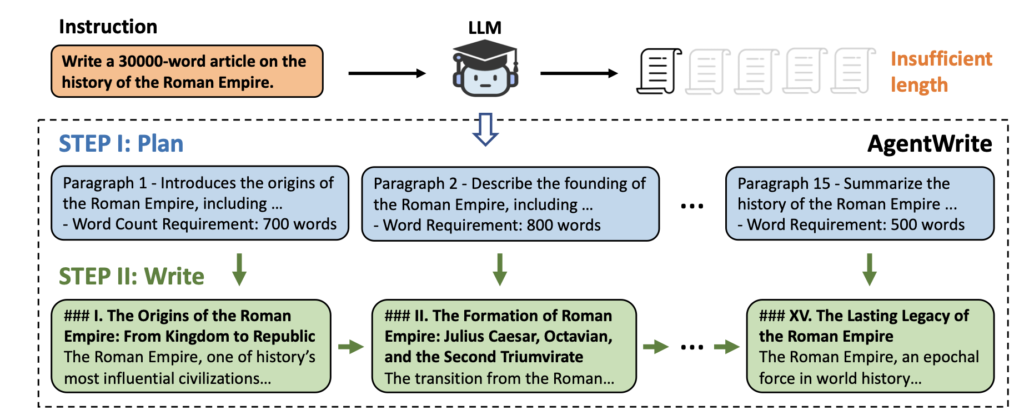

To address this, the team introduced AgentWrite, an agent-based pipeline that decomposes ultra-long generation tasks into smaller, manageable subtasks. This lets off-the-shelf LLMs produce coherent, extended outputs, effectively expanding the “output window” beyond its usual limit. Using AgentWrite, the team built a new dataset, LongWriter-6k, which includes 6,000 supervised fine-tuning examples with outputs ranging from 2,000 to 32,000 words. This dataset has been instrumental in training models capable of generating texts well over 10,000 words.

AgentWrite: The Backbone of LongWriter

AgentWrite is the cornerstone of LongWriter’s success. By breaking down large-scale text generation tasks into subtasks, it enables LLMs to maintain coherence across vast stretches of text. The pipeline leverages existing LLMs to construct extended, high-quality outputs, which are then used to fine-tune the models for long-text generation. This method not only extends the length of generated text but also ensures that the quality remains high, avoiding the pitfalls of redundancy or loss of coherence that often plague long-form text generation.
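The article does not spell out the individual steps of AgentWrite, so the following is only a rough sketch of the general idea of splitting one long writing task into subtasks. It assumes a plan-then-write split (ask for an outline, then write one section at a time while passing along the tail of the text so far). The names `plan_sections`, `write_long`, and `fake_llm` are hypothetical, and `llm` stands for any function that maps a prompt string to a completion string.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # any prompt -> completion function


def plan_sections(task: str, llm: LLM) -> List[str]:
    """Ask the model for an outline and return one brief per non-empty line."""
    outline = llm(f"Write an outline, one section per line, for: {task}")
    return [line.strip() for line in outline.splitlines() if line.strip()]


def write_long(task: str, llm: LLM, context_chars: int = 2000) -> str:
    """Write each planned section in order and join the sections."""
    written: List[str] = []
    for brief in plan_sections(task, llm):
        # Pass only the tail of the draft so each prompt stays small.
        so_far = "\n\n".join(written)[-context_chars:]
        prompt = (
            f"Task: {task}\n"
            f"Text so far (tail):\n{so_far}\n"
            f"Write only the next section: {brief}"
        )
        written.append(llm(prompt).strip())
    return "\n\n".join(written)


def fake_llm(prompt: str) -> str:
    """Deterministic stand-in for a real model, used only to run the sketch."""
    if prompt.startswith("Write an outline"):
        return "Introduction\nMethods\nConclusion"
    brief = prompt.rsplit("Write only the next section: ", 1)[1]
    return f"[{brief}] placeholder text."


print(write_long("a history of tea", fake_llm))
```

Because each call only has to produce one section, no single generation needs to exceed the length the underlying model is comfortable with; the total length comes from the number of sections in the plan.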

Introducing LongBench-Write

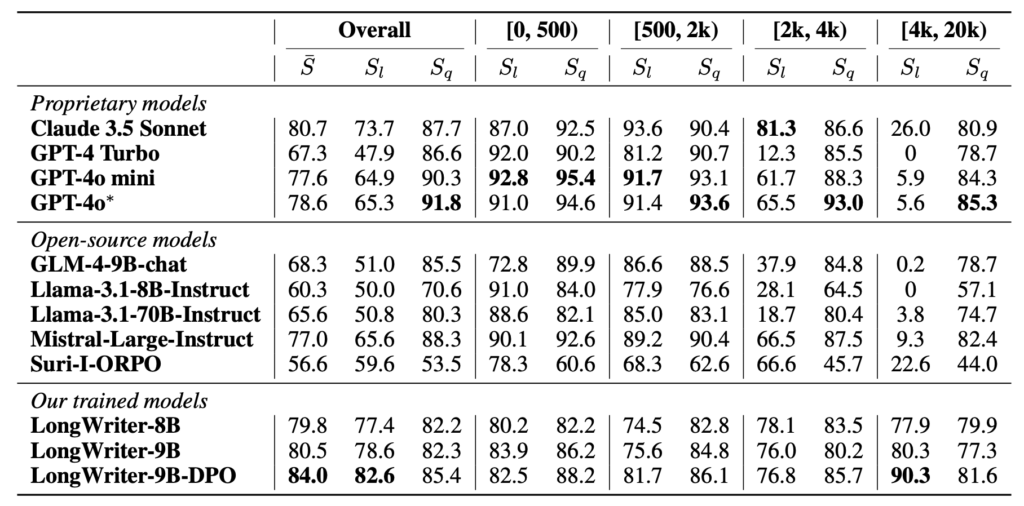

With the ability to generate longer texts comes the need for new benchmarking tools to evaluate performance. The team behind LongWriter developed LongBench-Write, a comprehensive benchmark designed to assess ultra-long text generation capabilities. This new benchmark provides a standardized way to measure the quality, coherence, and relevance of long-form outputs, setting a new standard for evaluating LLM performance in extended text generation.
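To make the idea of scoring along several dimensions concrete, here is a small sketch of how per-output ratings for quality, coherence, and relevance might be averaged into a summary. This is not LongBench-Write's actual scoring method: the `JudgedOutput` class, the `summarize` function, the 1-to-5 scale, and the unweighted average are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class JudgedOutput:
    """Ratings for one generated text on a hypothetical 1-5 scale."""
    quality: float
    coherence: float
    relevance: float


def summarize(results: List[JudgedOutput]) -> Dict[str, float]:
    """Average each dimension across outputs, then average the dimensions."""
    if not results:
        raise ValueError("need at least one judged output")
    summary = {
        "quality": mean(r.quality for r in results),
        "coherence": mean(r.coherence for r in results),
        "relevance": mean(r.relevance for r in results),
    }
    # Unweighted mean of the three dimensions; the weighting is an assumption.
    summary["overall"] = mean(summary.values())
    return summary


ratings = [JudgedOutput(4.0, 3.0, 5.0), JudgedOutput(2.0, 5.0, 3.0)]
print(summarize(ratings))
```

Reporting the dimensions separately as well as together keeps a weakness in one area, such as coherence over long stretches, from being hidden by strong scores elsewhere.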

Implications and Future Directions

The advancements brought by LongWriter have significant implications for various industries, from content creation to academic research, where the need for coherent, lengthy outputs is crucial. The ability to generate texts exceeding 10,000 words opens up new possibilities for using AI in tasks that were previously challenging due to length constraints.

Looking ahead, the team suggests several areas for future research, including further expanding the output capabilities of LLMs, refining the quality of long-form data generated by AgentWrite, and improving inference efficiency for longer texts. These directions aim to continue pushing the boundaries of what LLMs can achieve, ultimately leading to more powerful and versatile AI systems capable of handling complex, long-duration tasks.

LongWriter represents a significant step forward in AI-driven text generation. By overcoming the roughly 2,000-word ceiling on LLM output, it opens up applications that require extended, coherent text. With AgentWrite and LongBench-Write, it not only enhances the capabilities of existing models but also gives future research on long-form generation a shared pipeline and benchmark to build on.