A New Method Using Attention Maps to Detect and Mitigate Hallucinations

- Detection Through Attention Maps: Lookback Lens identifies contextual hallucinations in LLMs by analyzing the ratio of attention weights on the input context versus generated tokens.

- Transferability Across Models: The method is effective across different tasks and LLM sizes without the need for retraining.

- Mitigation Strategy: Lookback Lens Guided Decoding reduces hallucinations by adjusting the LLM’s decoding process based on detected hallucinations.

Large language models (LLMs) often hallucinate details or give unsubstantiated responses, which undermines their reliability. A contextual hallucination is the case where the correct facts are present in the input context but the model still generates content that the context does not support. To address this issue, researchers have developed Lookback Lens, an approach that detects and mitigates contextual hallucinations using only attention maps.

Detection Through Attention Maps

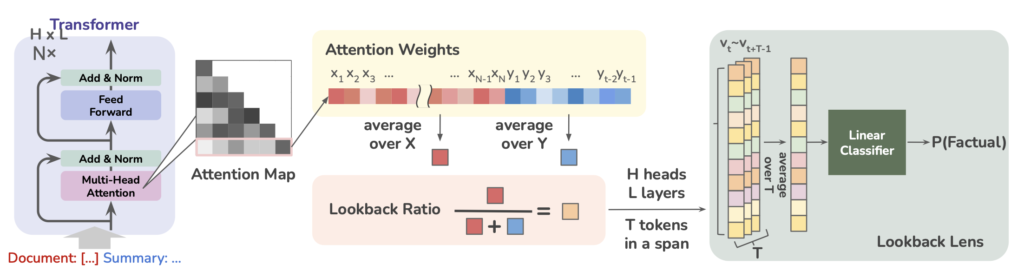

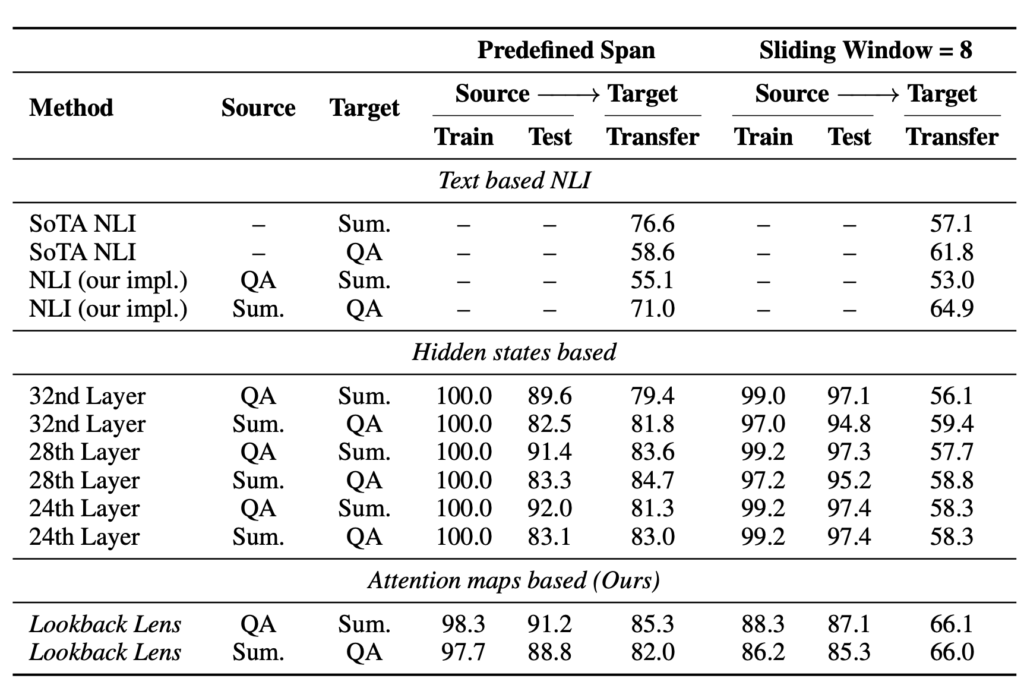

The core hypothesis behind Lookback Lens is that contextual hallucinations occur when an LLM attends more to its own generated tokens than to the input context. For each attention head, Lookback Lens computes a lookback ratio: the attention weight placed on the context relative to the weight placed on the generated tokens. A linear classifier trained on these per-head ratios then flags hallucinations, and it is reported to be as effective as more complex detectors that rely on the full hidden states or on text-based entailment models.
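The lookback ratio and the linear classifier can be illustrated with a minimal sketch. The exact normalization is an assumption here (context attention divided by context plus generated attention, using the mean weight per token), as is averaging each head's ratio over a text span before classification. The function names and the `weights` and `bias` parameters are hypothetical, not taken from the authors' code.

```python
import math


def lookback_ratio(attn_row, n_context):
    """Lookback ratio for one attention head at one decoding step.

    attn_row:  attention weights from the current token to every earlier
               token, with context tokens first and generated tokens after.
    n_context: number of context tokens at the start of attn_row.
    """
    context = attn_row[:n_context]
    generated = attn_row[n_context:]
    # Use the mean weight per token on each side so that the longer span
    # does not dominate simply because it has more tokens.
    a_ctx = sum(context) / len(context) if context else 0.0
    a_new = sum(generated) / len(generated) if generated else 0.0
    total = a_ctx + a_new
    # Assumed normalization: 1.0 means all attention is on the context.
    return a_ctx / total if total > 0 else 0.0


def span_features(ratios_per_step):
    """Average each head's ratio over the decoding steps of a text span."""
    n_steps = len(ratios_per_step)
    n_heads = len(ratios_per_step[0])
    return [sum(step[h] for step in ratios_per_step) / n_steps
            for h in range(n_heads)]


def factual_probability(features, weights, bias):
    """Linear (logistic) classifier over the per-head features."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))
```

In practice the feature vector would hold one ratio per head across all layers, and the weights would be fit on annotated spans rather than set by hand.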

Transferability Across Models

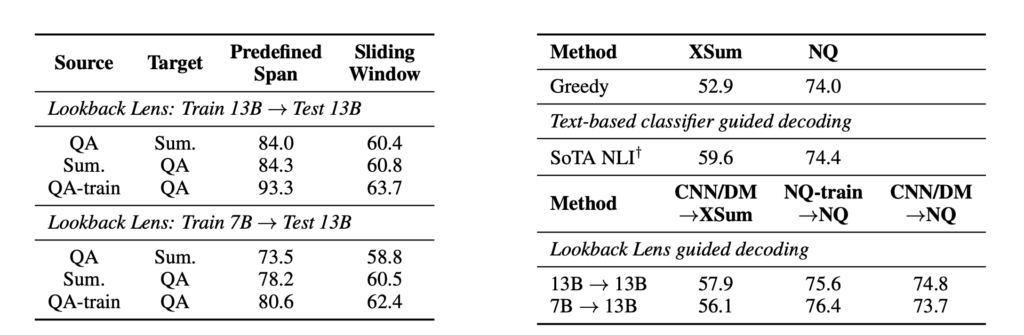

One significant advantage of Lookback Lens is its transferability. A detector trained on a smaller 7B LLM can be applied to a larger 13B LLM without retraining. This is made possible by mapping attention heads between the two models, so that the classifier’s inputs remain meaningful on the new model. The detector also carries over across different text generation tasks.
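One way to picture the head mapping is as a linear projection from the larger model's per-head ratios into the smaller model's head space, after which the already-trained classifier is reused unchanged. This is only a sketch under that assumption: `map_heads`, `linear_score`, the `mapping` matrix, and all numbers below are hypothetical, and how such a mapping is actually fit is not covered here.

```python
def map_heads(target_ratios, mapping):
    """Project the target model's head ratios into the source head space.

    mapping[i][j] is the (assumed) weight of target head j when
    reconstructing source head i.
    """
    return [sum(w * r for w, r in zip(row, target_ratios))
            for row in mapping]


def linear_score(features, weights, bias):
    """Score from a linear classifier trained on the source model."""
    return bias + sum(w * x for w, x in zip(weights, features))


# Toy example: 3 heads in the larger model, 2 heads in the smaller one.
target_ratios = [0.2, 0.8, 0.5]
mapping = [
    [1.0, 0.0, 0.0],  # source head 0 copies target head 0
    [0.0, 0.5, 0.5],  # source head 1 averages target heads 1 and 2
]
source_like = map_heads(target_ratios, mapping)

# Classifier weights stay exactly as trained on the smaller model.
score = linear_score(source_like, weights=[1.0, 2.0], bias=-1.0)
```

The point of the design is that only the mapping depends on the new model; the classifier weights are not retrained.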

Mitigation Strategy

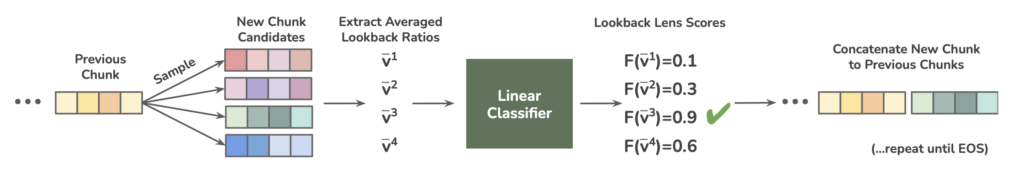

Beyond detection, Lookback Lens also provides a mitigation strategy, Lookback Lens Guided Decoding. This approach samples several candidate continuations from the LLM, scores each with the classifier, and keeps the candidate judged least likely to be hallucinated, which steers generation toward content grounded in the context. For example, in the XSum summarization task, this method reduced hallucinations by 9.6%.
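The guided decoding loop can be sketched roughly as follows, assuming generation proceeds chunk by chunk and that each candidate chunk comes with its per-head lookback ratio features. `guided_decode`, `toy_sampler`, and `toy_score` are hypothetical stand-ins for a real LLM sampler and a trained classifier, and the chunking scheme is an assumption of this sketch.

```python
def guided_decode(sample_chunks, score, n_chunks, n_candidates=4):
    """Pick, at each step, the candidate chunk the classifier scores highest.

    sample_chunks(prefix, k) -> list of (text, features) candidates
    score(features)          -> higher means more likely to be grounded
    """
    chosen = []
    for _ in range(n_chunks):
        candidates = sample_chunks(chosen, n_candidates)
        best_text, _ = max(candidates, key=lambda c: score(c[1]))
        chosen.append(best_text)
    return chosen


def toy_sampler(prefix, k):
    # Stand-in for sampling k continuations from an LLM and extracting
    # their lookback ratio features; values are made up for illustration.
    pool = [
        ("drifting chunk", [0.2, 0.1]),
        ("grounded chunk", [0.9, 0.8]),
    ]
    return pool[:k]


def toy_score(features):
    # Stand-in for the trained linear classifier.
    return sum(features) / len(features)


result = guided_decode(toy_sampler, toy_score, n_chunks=2, n_candidates=2)
```

Because every step samples `n_candidates` continuations and scores each one, the cost grows with the number of candidates, which is the inference-time overhead the method trades for fewer hallucinations.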

Ideas for Further Exploration

- Optimized Inference Techniques: Exploring faster inference methods that integrate Lookback Lens signals directly into the attention map mechanism could enhance efficiency.

- Extended Training Data: Increasing the number of annotated training examples beyond the 1,000 to 2,000 range could improve the robustness and accuracy of the Lookback Lens classifier.

- Real-Time Applications: Implementing Lookback Lens in real-time applications such as conversational agents and automated summarization tools to enhance reliability and user trust.

Despite its effectiveness, Lookback Lens has some limitations. Guided decoding requires multiple candidate samples from the LLM, which increases total inference time, and the method relies on annotated examples to train the classifier. However, the potential for faster, more integrated approaches and the feasibility of acquiring training data make Lookback Lens a promising solution for improving the reliability of large language models.

Overall, Lookback Lens represents a significant advance in combating hallucinations in LLMs: it uses attention map information to detect and reduce contextual hallucinations, making generated text more accurate and dependable.