A physics-based approach lets virtual guitarists play complex rhythms and chords with precise, natural-looking hand motion.

- Researchers present a method for synthesizing dexterous hand motions in virtual guitar playing using cooperative learning and latent space manipulation.

- The new approach decouples control for each hand, allowing for improved training efficiency and high precision in bimanual coordination.

- The system interprets guitar tabs to perform a diverse range of songs, and the researchers have released motion capture data for further exploration.

The world of virtual music performance is rapidly evolving, fueled by advancements in computer graphics and VR/AR technologies. Among the many instruments that challenge digital representation, the guitar stands out as particularly complex because it demands precision from each hand and tight coordination between the two. To address these challenges, researchers have developed an approach that synchronizes both hands for physically simulated guitar playing, allowing for high-quality and realistic performances.

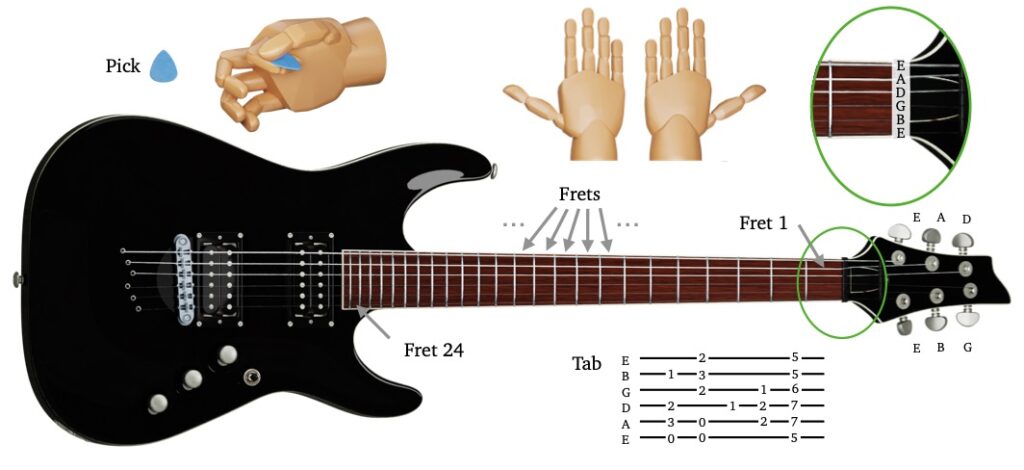

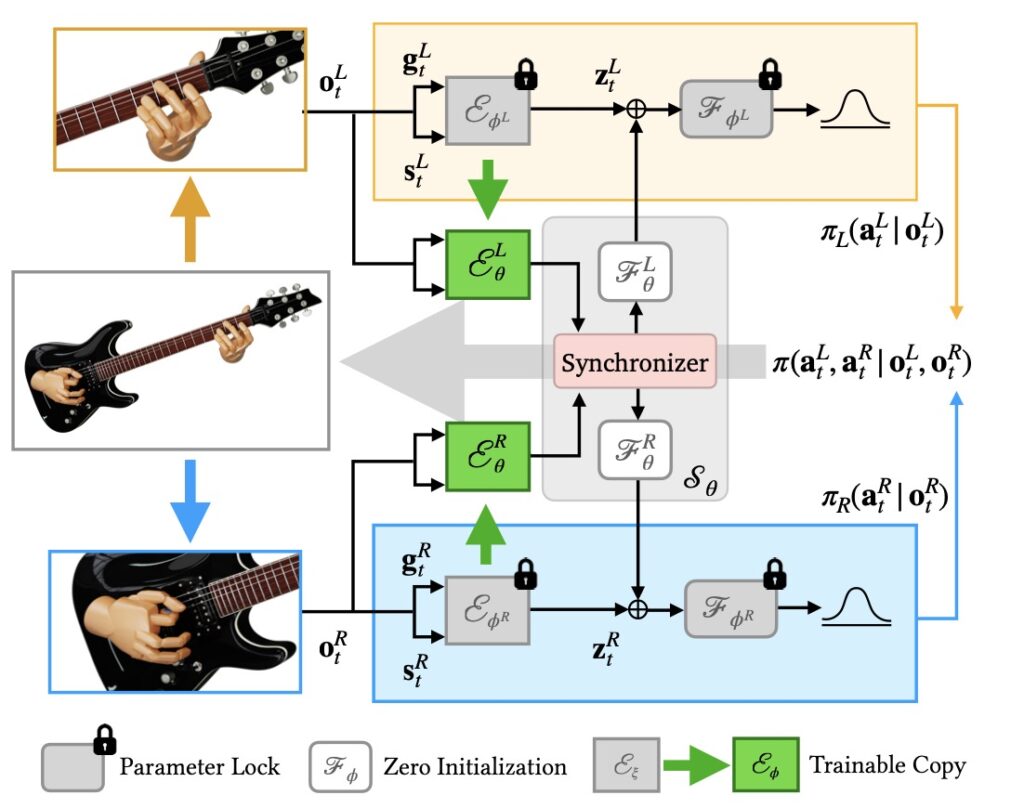

Traditionally, replicating the intricate motions of guitar playing through virtual avatars has posed significant hurdles. The coordination required between the left hand, which frets the chords, and the right hand, which plucks the strings, demands not only dexterity but also exact timing and spatial awareness. Prior methods often rely on motion capture data, which is labor-intensive to collect and does not adapt to new songs or techniques. This new research seeks to overcome these limitations by leveraging cooperative learning, treating each hand as an independent agent to enhance training efficiency.

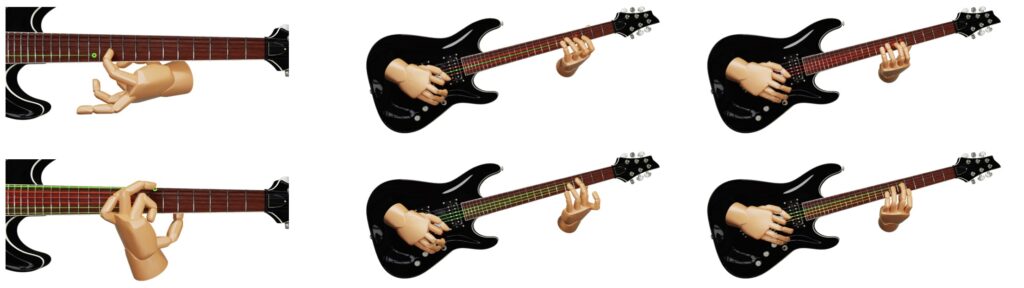

The core innovation of this approach lies in the synthesis of individual policies for each hand, trained separately before being synchronized through latent space manipulation. This decoupled method allows for greater flexibility in generating motions, avoiding the complexities associated with directly learning in a high-dimensional joint state-action space. By streamlining the training process, researchers can produce realistic and responsive hand movements that reflect the nuanced dynamics of human guitarists.
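The decoupled structure described above can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the `HandPolicy` and `LatentSynchronizer` classes, the linear encoder and decoder, and the additive latent correction are hypothetical and are not taken from the researchers' implementation. The sketch only shows the general shape of the idea, where each hand keeps its own policy and a small coordinator adjusts the two latent codes before they are decoded into actions.

```python
import numpy as np


class HandPolicy:
    """Toy single-hand policy: observation -> latent code -> joint targets."""

    def __init__(self, obs_dim, latent_dim, act_dim, seed):
        rng = np.random.default_rng(seed)
        # Random weights stand in for a policy trained separately per hand.
        self.enc = rng.standard_normal((latent_dim, obs_dim)) * 0.1
        self.dec = rng.standard_normal((act_dim, latent_dim)) * 0.1

    def encode(self, obs):
        return np.tanh(self.enc @ obs)

    def decode(self, z):
        return self.dec @ z


class LatentSynchronizer:
    """Hypothetical coordinator that nudges each latent using the other hand's."""

    def __init__(self, latent_dim):
        # Zero-initialized: before any cooperative training, each hand
        # behaves exactly like its separately trained policy.
        self.left_from_right = np.zeros((latent_dim, latent_dim))
        self.right_from_left = np.zeros((latent_dim, latent_dim))

    def __call__(self, z_left, z_right):
        return (z_left + self.left_from_right @ z_right,
                z_right + self.right_from_left @ z_left)


def bimanual_step(left, right, sync, obs_left, obs_right):
    """Encode per hand, synchronize in latent space, decode per hand."""
    z_l, z_r = sync(left.encode(obs_left), right.encode(obs_right))
    return left.decode(z_l), right.decode(z_r)


left = HandPolicy(obs_dim=8, latent_dim=4, act_dim=5, seed=0)
right = HandPolicy(obs_dim=8, latent_dim=4, act_dim=5, seed=1)
sync = LatentSynchronizer(latent_dim=4)
obs_l, obs_r = np.ones(8), np.ones(8)
act_l, act_r = bimanual_step(left, right, sync, obs_l, obs_r)
```

In this sketch only the small coordinator matrices would need to be learned in the joint stage, which is one way to avoid searching the full two-hand state-action space directly.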

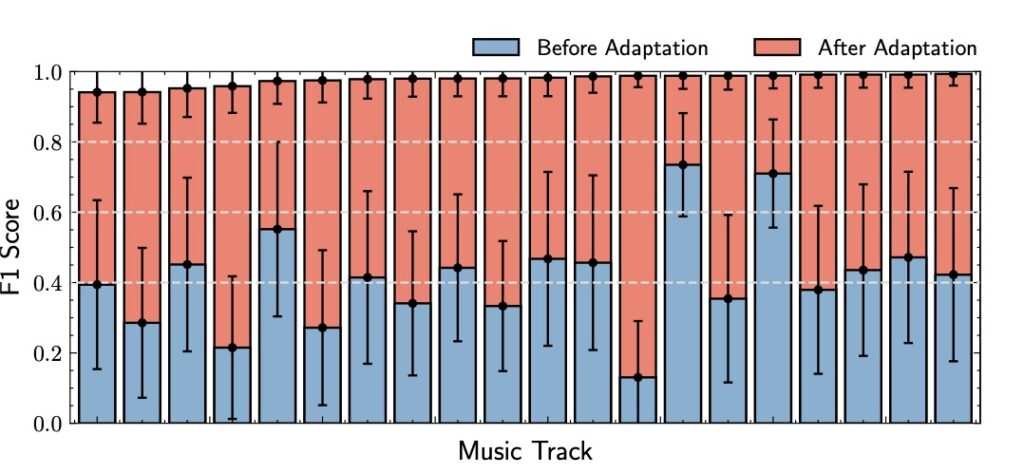

One of the significant achievements of this method is its ability to use unstructured reference data, enabling the virtual guitarist to accurately perform various rhythms, complex chord transitions, and intricate fingerpicking patterns. For instance, the system interprets input guitar tabs whether or not they appear in the training data, so it can play songs it has never seen before. This capability not only enhances the realism of the performance but also opens up new avenues for musicians and developers to explore creative possibilities in virtual environments.
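To make the idea of tab-driven input concrete, here is a minimal sketch that turns a plain ASCII tab into a list of note events. The tab layout, the `parse_tab` function, and the `(column, string, fret)` event format are assumptions for illustration only; the article does not describe the input format the system actually consumes.

```python
def parse_tab(tab: str):
    """Parse an ASCII guitar tab into (column, string_label, fret) events.

    The column index is used as a rough time step (an assumption); events
    sharing a column are meant to be played together.
    """
    events = []
    for line in tab.strip().splitlines():
        label, _, body = line.strip().partition("|")
        body = body.rstrip("|")
        col = 0
        while col < len(body):
            if body[col].isdigit():
                # Read consecutive digits as one fret number (e.g. "12").
                end = col
                while end < len(body) and body[end].isdigit():
                    end += 1
                events.append((col, label, int(body[col:end])))
                col = end
            else:
                col += 1
    # Stable sort: events in the same column keep their string order.
    return sorted(events, key=lambda e: e[0])


example_tab = """
e|--0---3--|
B|--1---0--|
G|--0---0--|
"""
events = parse_tab(example_tab)
```

Each event could then serve as a target for the two hands: the fret number for the fretting hand and the string label for the plucking hand.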

The research highlights the challenges inherent in creating a complete guitar-playing experience. Guitarists often employ advanced techniques, such as natural harmonics, which require a seamless interaction between the hands. The new bimanual control method effectively manages these interactions, synchronizing the fretting and strumming actions to produce an authentic sound output. By integrating sound generation based on the dynamics of the two hands, the system ensures that the auditory experience aligns closely with the visual performance, capturing the essence of live music.
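The dependence of the sound on both hands can be shown with a small pitch calculation. This is not the researchers' sound model, which the article does not describe. It is a sketch that uses standard equal-temperament tuning and the well-known natural harmonic nodes at frets 12, 7, and 5; the function name `note_frequency` and the `plucked` flag are hypothetical.

```python
# Open-string frequencies in Hz for standard tuning, string 6 (low E) to 1 (high E).
OPEN_HZ = {6: 82.41, 5: 110.00, 4: 146.83, 3: 196.00, 2: 246.94, 1: 329.63}

# Natural harmonics: lightly touching the string at these frets selects
# an integer multiple of the open-string frequency.
HARMONIC_MULTIPLE = {12: 2, 7: 3, 5: 4}


def note_frequency(string, fret, harmonic=False, plucked=True):
    """Return the sounding frequency in Hz, or None if no note sounds.

    The left hand sets the pitch (fret or harmonic node); the right hand
    decides whether the string sounds at all (plucked or not).
    """
    if not plucked:
        return None
    open_hz = OPEN_HZ[string]
    if harmonic:
        return open_hz * HARMONIC_MULTIPLE[fret]
    # Each fret raises the pitch by one equal-tempered semitone.
    return open_hz * 2 ** (fret / 12)


fretted = note_frequency(5, 7)                  # A string pressed at fret 7
harmonic = note_frequency(5, 7, harmonic=True)  # A string touched at fret 7
```

The same fret gives a different pitch depending on whether the left hand presses or only touches the string, which is why harmonics require the two hands to be tightly coordinated.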

In addition to its technical advancements, the project has also made strides in accessibility for further research. A collection of motion capture data from professional guitarists has been made publicly available, providing a valuable resource for other researchers and developers interested in advancing virtual music performance. This commitment to sharing data exemplifies a broader trend in the tech community toward openness and collaboration, fostering innovation and exploration in AI-driven applications.

The synchronization of two hands for physics-based guitar playing marks a significant step for virtual music performance. By combining cooperative learning with realistic motion synthesis, this research enhances what virtual avatars can do and enriches the experience of creating and appreciating music. As the technology continues to evolve, the prospect of virtual guitarists performing complex pieces with accuracy and flair becomes increasingly tangible, promising exciting developments for musicians and tech enthusiasts alike.