New Architecture Enhances LLM Performance and Reduces Computational Costs

- Explicit Memory Integration: Memory3 adds an explicit memory mechanism to LLMs to reduce computational cost.

- Superior Performance: Despite having fewer parameters, Memory3 outperforms larger LLMs and RAG models on benchmarks.

- Scalability and Adaptability: The architecture is designed to be compatible with existing Transformer-based models, facilitating widespread adoption.

Language modeling has made significant strides toward systems that can understand, interpret, and generate human language. These advances have produced increasingly large and complex models that require substantial computational resources. Memory3, a novel architecture for large language models (LLMs) that incorporates an explicit memory mechanism, marks a significant shift in how these costs are addressed.

Explicit Memory Integration: A New Paradigm

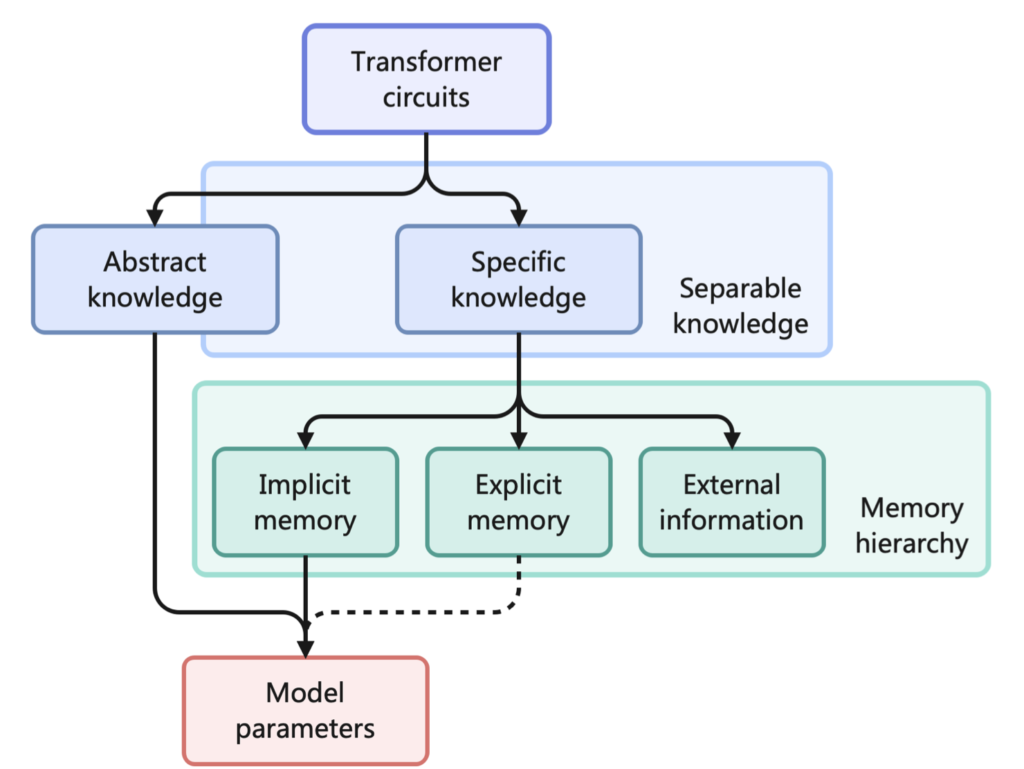

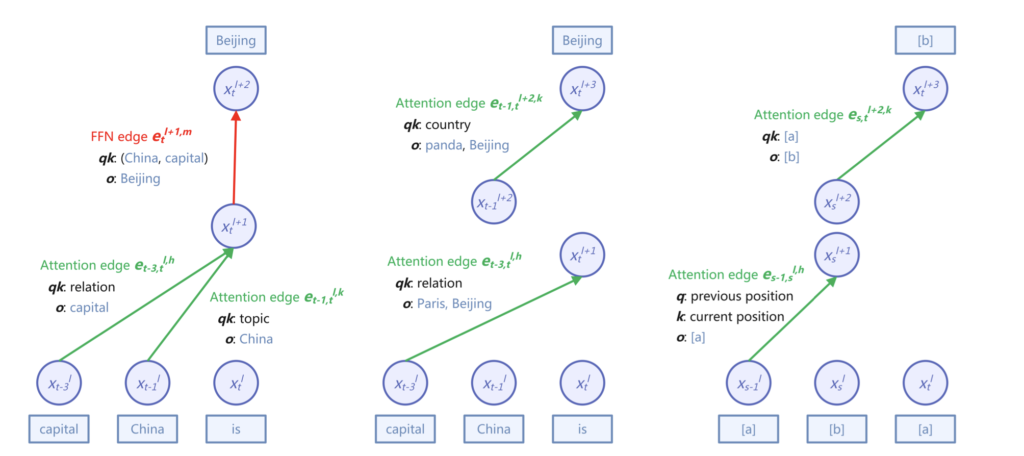

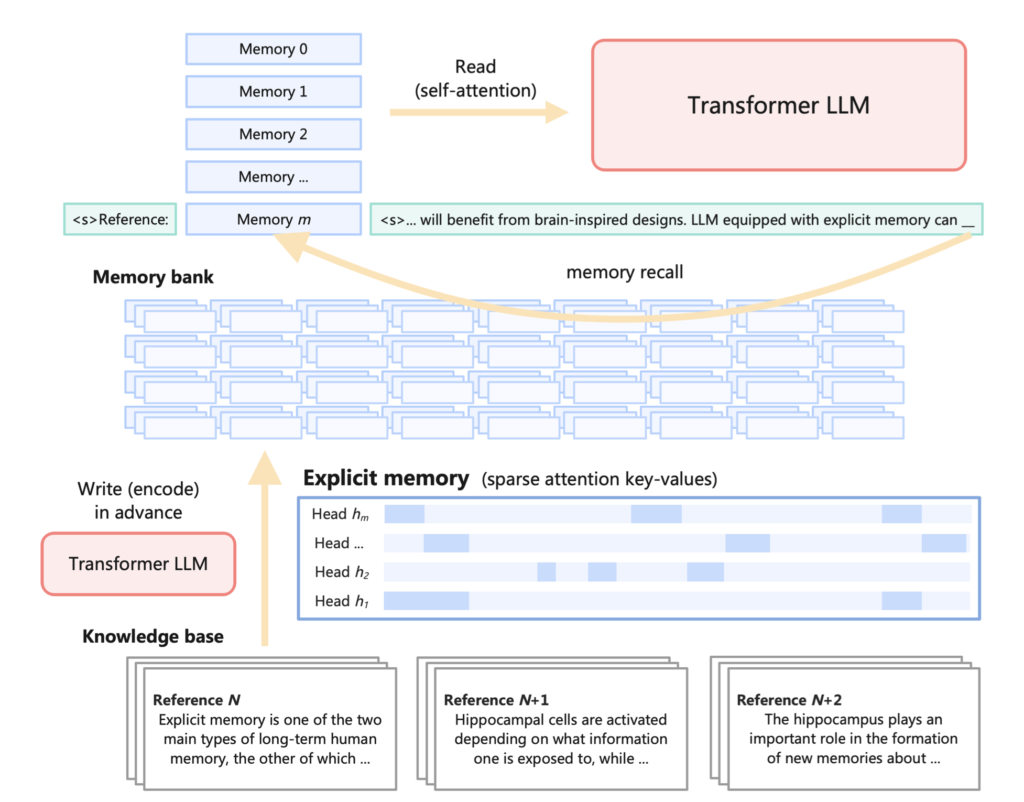

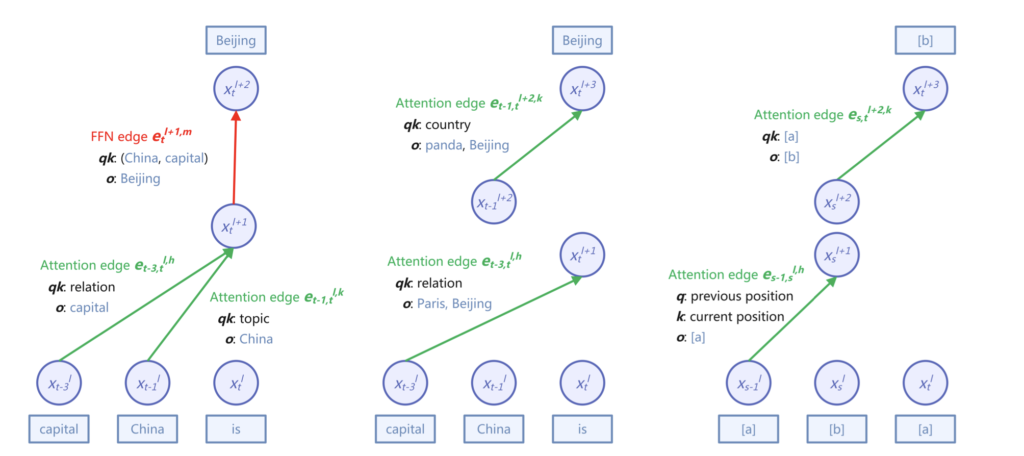

Developed by researchers from the Institute for Advanced Algorithms Research in Shanghai, Moqi Inc., and the Center for Machine Learning Research at Peking University, the Memory3 model introduces explicit memory into LLMs. This innovation externalizes a significant portion of the model’s knowledge, allowing it to maintain a smaller parameter size. By storing and retrieving knowledge as explicit memories, the model can achieve greater efficiency and reduce computational costs. This design includes a memory sparsification mechanism and a two-stage pretraining scheme to facilitate efficient memory formation.
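The idea of storing knowledge outside the parameters and reading it back at inference time can be pictured with a toy sketch. Everything in it is an illustrative assumption rather than the authors' implementation: memories are held as lists of (key, value) vectors, `sparsify` keeps only the highest-scoring pairs, `MemoryBank` retrieves by cosine similarity, and `attend` is a plain single-query softmax attention. None of these names come from the Memory3 work.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def sparsify(kv_pairs, scores, keep):
    """Keep the `keep` highest-scoring (key, value) pairs, in their original order."""
    top = sorted(range(len(kv_pairs)), key=lambda i: scores[i], reverse=True)[:keep]
    return [kv_pairs[i] for i in sorted(top)]

class MemoryBank:
    """Hypothetical external store: one sparsified key-value list per reference text."""

    def __init__(self):
        self.entries = []  # list of (embedding, sparse_kv_pairs)

    def write(self, embedding, kv_pairs, scores, keep=2):
        # Sparsify before storing, so only a fraction of the pairs is kept.
        self.entries.append((embedding, sparsify(kv_pairs, scores, keep)))

    def retrieve(self, query, top_n=1):
        # Rank stored memories by similarity between the query and their embedding.
        ranked = sorted(self.entries, key=lambda e: cosine(query, e[0]), reverse=True)
        return [kv for _, kv in ranked[:top_n]]

def attend(query, kv_pairs):
    """Softmax attention of a single query vector over (key, value) pairs."""
    logits = [sum(q * k for q, k in zip(query, key)) for key, _ in kv_pairs]
    peak = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(l - peak) for l in logits]
    total = sum(weights)
    dim = len(kv_pairs[0][1])
    return [sum(w / total * value[d] for w, (_, value) in zip(weights, kv_pairs))
            for d in range(dim)]

# Toy data: two "reference texts", each already encoded as key-value pairs.
doc_a = [([1.0, 0.0], [1.0, 0.0]), ([0.0, 1.0], [0.0, 1.0]), ([1.0, 1.0], [2.0, 2.0])]
doc_b = [([0.0, 1.0], [5.0, 5.0]), ([0.0, 2.0], [6.0, 6.0])]

bank = MemoryBank()
bank.write([1.0, 0.0], doc_a, scores=[0.9, 0.1, 0.5], keep=2)
bank.write([0.0, 1.0], doc_b, scores=[0.3, 0.7], keep=1)

query = [0.9, 0.1]
retrieved = bank.retrieve(query, top_n=1)[0]   # the memory closest to the query
local_kv = [([0.5, 0.5], [0.0, 1.0])]          # stands in for the model's own context
output = attend(query, local_kv + retrieved)   # attention reads context and memory together
```

The point of the sketch is the division of labor: the expensive encoding of reference text happens once at write time, sparsification shrinks what is stored, and at inference the model only retrieves and attends over a few precomputed pairs.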

Superior Performance: Outperforming Larger Models

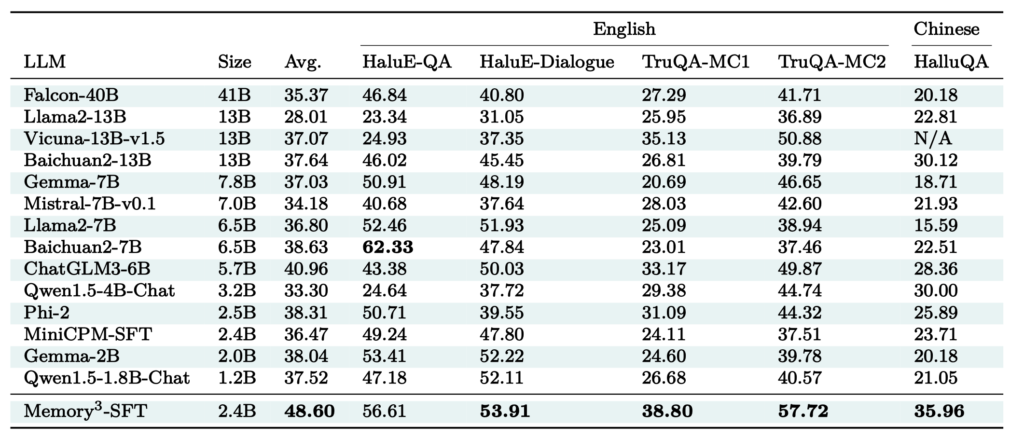

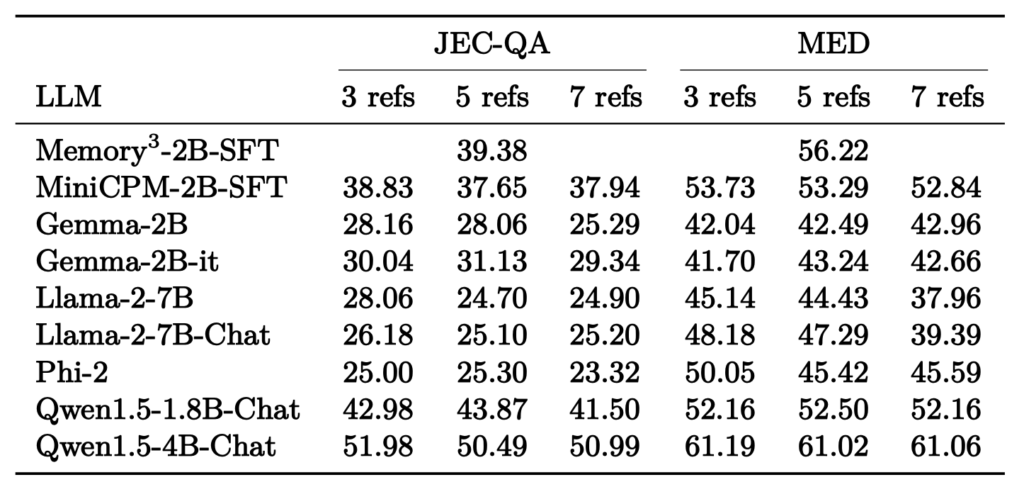

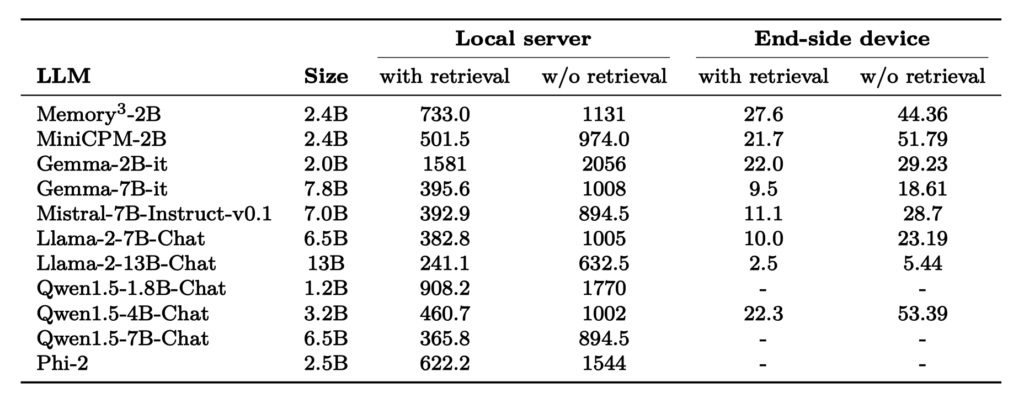

The Memory3 model, with 2.4 billion non-embedding parameters, has performed strongly on a range of benchmarks, outperforming larger LLMs and Retrieval-Augmented Generation (RAG) models. It also decodes faster than RAG models because it relies less on extensive text retrieval. Its robustness and adaptability were particularly evident in professional tasks that involve high-frequency retrieval of explicit memories. For instance, on the HellaSwag benchmark, Memory3 scored 83.3, surpassing a larger 9.1B-parameter model that scored 70.6. Similarly, on the BoolQ benchmark, Memory3 scored 80.4 compared to the larger model’s 70.7.

Scalability and Adaptability: Designed for Widespread Use

One of the key advantages of the Memory3 architecture is its compatibility with existing Transformer-based LLMs, which need only minimal fine-tuning to accommodate explicit memories. This adaptability means Memory3 can be adopted without extensive modifications to existing systems. The explicit memory mechanism significantly reduces the computational load, allowing for faster and more efficient processing. Moreover, memory sparsification reduces the total storage required for the explicit memories from 7.17 PB to 45.9 TB, making the approach more practical for large-scale applications.
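To put the storage figures in perspective, the reduction can be worked out directly. The short calculation below assumes decimal units (1 PB = 1000 TB); with binary units the ratio would come out slightly higher.

```python
# Storage figures quoted above, converted to bytes using decimal prefixes (an assumption).
PB = 10**15
TB = 10**12

before_bytes = 7.17 * PB
after_bytes = 45.9 * TB

ratio = before_bytes / after_bytes          # how many times smaller the store becomes
remaining = after_bytes / before_bytes      # fraction of the original footprint left

print(f"reduction factor: about {ratio:.0f}x")
print(f"remaining footprint: about {remaining:.2%} of the original")
```

Under that assumption the memory store shrinks by roughly 156 times, leaving well under 1% of the original footprint.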

Ideas for Further Exploration

- Medical Applications: Implementing Memory3 in medical AI systems to provide efficient and accurate diagnostic support while reducing computational overhead.

- Educational Tools: Developing advanced educational platforms that leverage Memory3 to offer personalized learning experiences and real-time assistance.

- Content Creation: Utilizing Memory3 for generating high-quality content in journalism, marketing, and entertainment, ensuring faster production with lower costs.

Memory3 represents a significant advancement in the sustainable development of language modeling technologies. By incorporating explicit memory, the model addresses the pressing issue of computational costs, paving the way for more efficient, scalable, and accessible AI technologies. As the demand for powerful models continues to grow, innovations like Memory3 will be crucial in managing the balance between performance and resource efficiency, ultimately advancing the capabilities of artificial intelligence in understanding and generating human language.