How Meta’s Latest AI Innovations Are Shaping the Future of Virtual Worlds

- AI-Powered Realism: Meta Motivo introduces lifelike movement and behavior in digital humanoids, addressing key limitations of current Metaverse avatars.

- Zero-Shot Versatility: The model can perform tasks like motion tracking, pose reaching, and reward optimization without additional training.

- Open Development: Meta’s open release of models, benchmarks, and training code fosters collaboration and innovation in AI-driven character animation.

Meta has unveiled Meta Motivo, an AI model designed to control human-like digital agents in the Metaverse. Built on an unsupervised reinforcement learning framework, this behavioral foundation model is pre-trained once and then enables digital avatars to execute realistic, physics-based movements and adapt to a wide variety of tasks without additional task-specific training.

Meta describes the innovation as a significant step toward creating fully embodied agents capable of enhancing immersive experiences. By improving avatar movement and interaction, Meta Motivo aims to redefine how users engage with the Metaverse.

The Technology Behind Meta Motivo

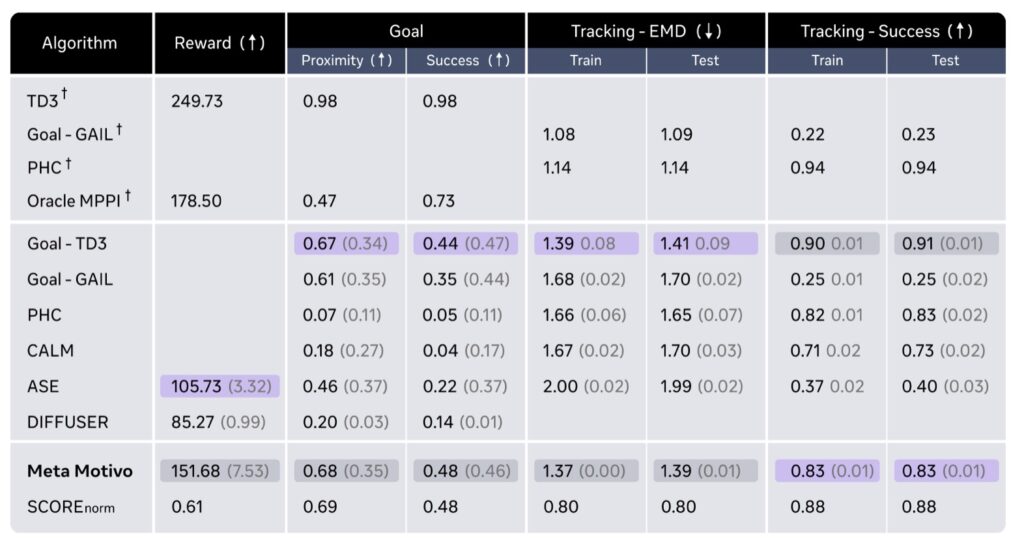

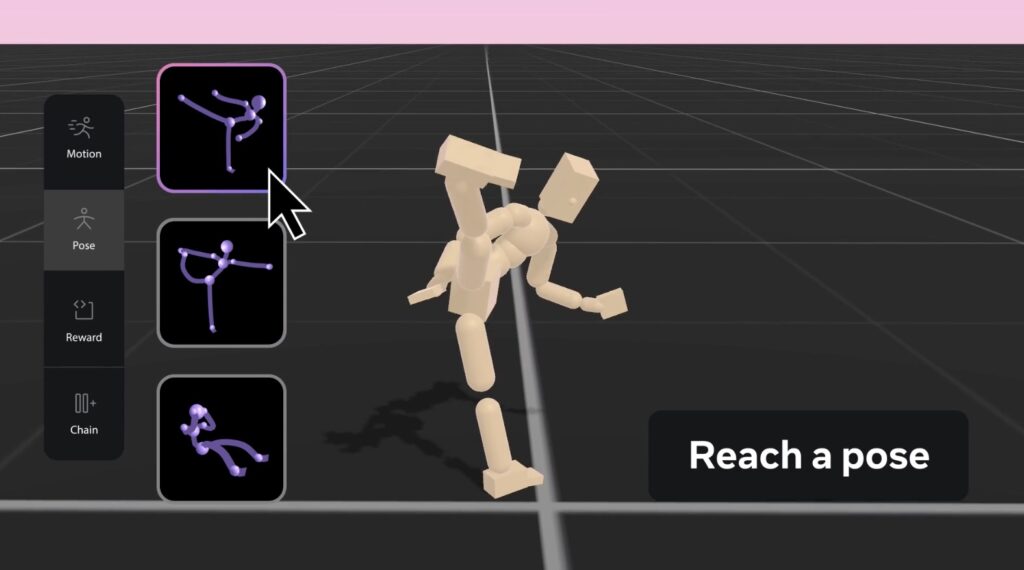

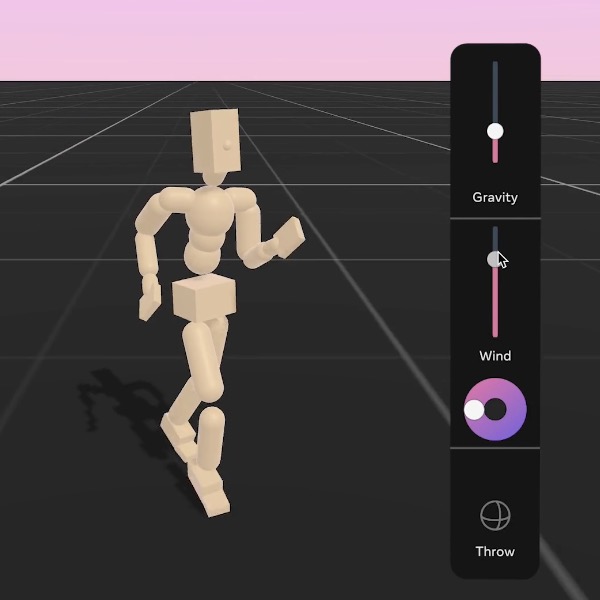

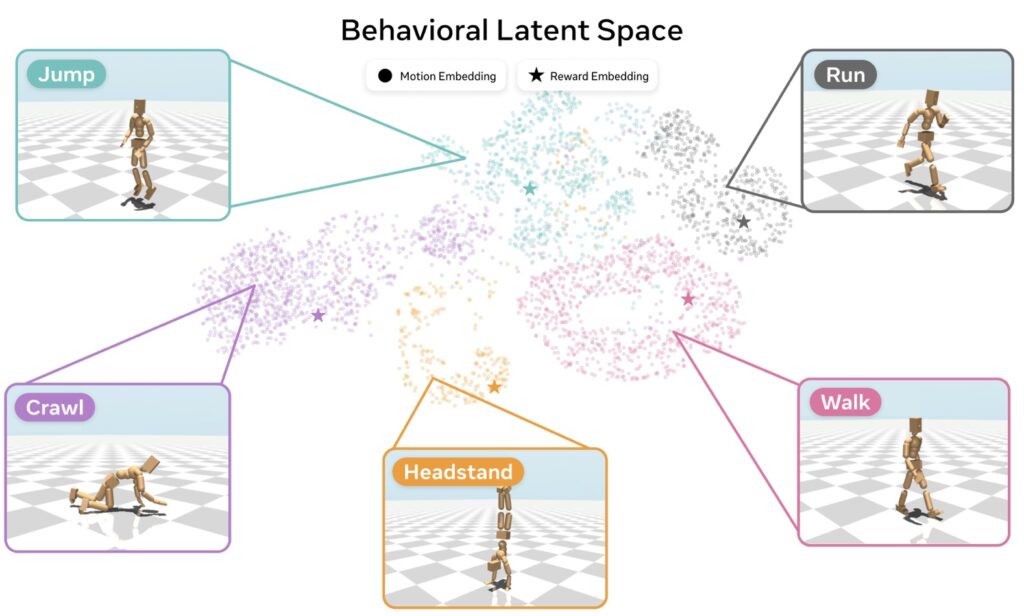

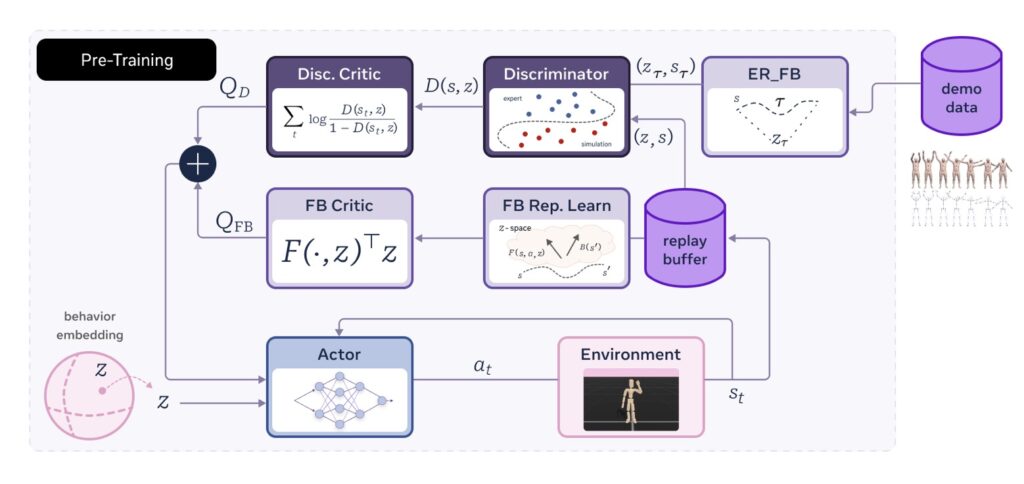

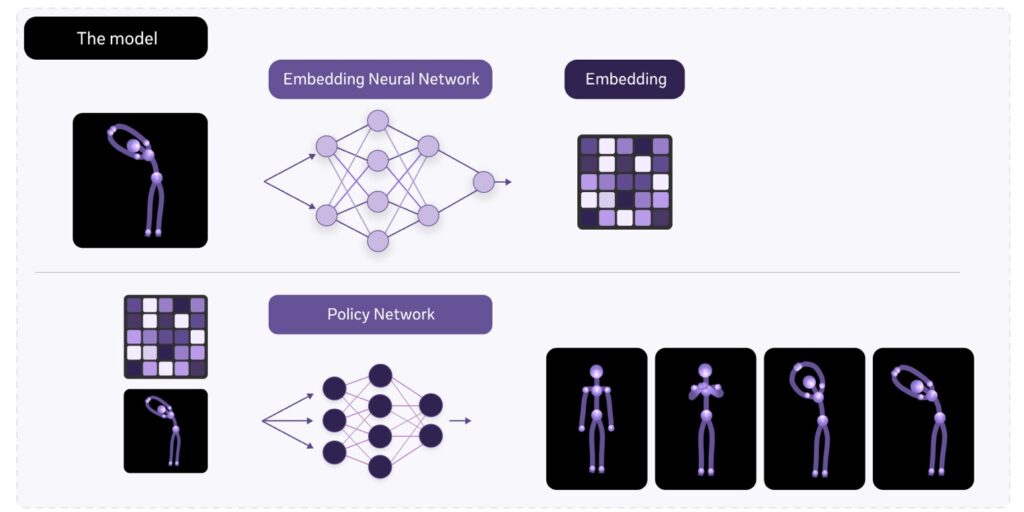

At its core, Meta Motivo uses an algorithm called Forward-Backward representations with Conditional Policy Regularization (FB-CPR), which combines unsupervised reinforcement learning with imitation of unlabeled motion data. The approach embeds states, motions, and rewards in a shared latent space, so a task can be specified at inference time by a single latent vector. This enables zero-shot inference across tasks such as:

- Motion Tracking: Accurately mimicking dynamic movements like cartwheels.

- Pose Reaching: Achieving stable, complex poses such as an arabesque.

- Reward Optimization: Executing goal-oriented behaviors like running.
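The three prompt types above can be sketched in the general forward-backward style, where each prompt is converted into a latent vector `z` that would then condition a single policy. This is a minimal illustration, not Meta Motivo's actual code: the backward embedding here is a random linear map standing in for a trained network, and the names `backward_embed`, `z_for_reward`, `z_for_goal`, `z_for_tracking`, the dimensions, and the sphere normalization are all assumptions made for the example.

```python
import numpy as np

STATE_DIM = 5    # hypothetical size of a humanoid state vector
LATENT_DIM = 8   # hypothetical size of the shared latent space

rng = np.random.default_rng(0)
# Stand-in for a trained backward embedding B(s); a real model would be a neural network.
_B_WEIGHTS = rng.normal(size=(STATE_DIM, LATENT_DIM))


def backward_embed(states):
    """Map states of shape (n, STATE_DIM) to latents of shape (n, LATENT_DIM)."""
    return np.atleast_2d(states) @ _B_WEIGHTS


def normalize(z):
    """Rescale latents to norm sqrt(LATENT_DIM) (an assumed convention)."""
    return np.sqrt(z.shape[-1]) * z / np.linalg.norm(z, axis=-1, keepdims=True)


def z_for_reward(states, rewards):
    """Reward optimization: reward-weighted average of state embeddings."""
    embedded = backward_embed(states)
    return normalize((rewards[:, None] * embedded).mean(axis=0))


def z_for_goal(goal_state):
    """Pose reaching: the embedding of the target pose itself."""
    return normalize(backward_embed(goal_state)[0])


def z_for_tracking(motion, horizon=3):
    """Motion tracking: one latent per step, averaging the next `horizon` frames."""
    embedded = backward_embed(motion)
    latents = [embedded[t + 1 : t + 1 + horizon].mean(axis=0)
               for t in range(len(motion) - 1)]
    return normalize(np.stack(latents))


# Toy prompts built from random data (not real motion capture).
sample_states = rng.normal(size=(100, STATE_DIM))
sample_rewards = sample_states[:, 0]        # pretend reward: first state coordinate
print(z_for_reward(sample_states, sample_rewards).shape)
print(z_for_goal(sample_states[0]).shape)
print(z_for_tracking(sample_states[:10]).shape)
```

The point of the sketch is that all three prompt types end up as vectors in the same space, which is why one pre-trained policy could serve them without retraining.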

By training on datasets such as AMASS motion capture in a simulated, physics-based humanoid environment, Meta Motivo achieves results competitive with specialized, task-specific models.

Beyond Meta Motivo: Large Concept Model (LCM)

In tandem with Meta Motivo, Meta is introducing the Large Concept Model (LCM), a language model designed to decouple reasoning from language representation. Unlike traditional language models, LCM predicts high-level concepts, roughly sentence-level ideas, rather than individual tokens, and it operates in a multimodal, multilingual embedding space. Meta expects this to enhance reasoning capabilities in applications ranging from virtual worlds to natural language processing.
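To make the contrast with token-level models concrete, the sketch below shows only the data flow: text is split into sentences, each sentence becomes one fixed-size vector, and a model would then operate on that shorter sequence of vectors. The encoder here is a toy hashed bag-of-words, not the embedding space LCM uses, and `split_into_concepts`, `toy_embed`, and `nearest_concept` are hypothetical names introduced for illustration.

```python
import re
import numpy as np

EMBED_DIM = 16  # hypothetical embedding size


def split_into_concepts(text):
    """Treat each sentence as one 'concept' (a simplifying assumption)."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def toy_embed(sentence, dim=EMBED_DIM):
    """Toy stand-in for a sentence encoder: hashed bag of words, L2-normalized."""
    vec = np.zeros(dim)
    for word in re.findall(r"\w+", sentence.lower()):
        vec[sum(map(ord, word)) % dim] += 1.0  # deterministic toy hash
    norm = np.linalg.norm(vec)
    return vec / norm if norm else vec


def nearest_concept(vector, candidates):
    """Decode a vector back to text by cosine similarity over candidate sentences."""
    bank = np.stack([toy_embed(c) for c in candidates])
    return candidates[int(np.argmax(bank @ vector))]


text = ("Avatars move stiffly today. Better control models could fix that. "
        "Users would notice the difference.")
concepts = split_into_concepts(text)
tokens = re.findall(r"\w+", text)
sequence = np.stack([toy_embed(c) for c in concepts])

# A token-level model would take one step per token;
# a concept-level model would take one step per sentence vector.
print(len(tokens), "token-level steps vs", len(concepts), "concept-level steps")
print(sequence.shape)
```

A real concept-level model would replace `toy_embed` with a learned encoder and predict the next vector in `sequence`; the sketch deliberately leaves that predictor out.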

Democratizing AI for the Metaverse

Meta has consistently championed an open-source approach, releasing its AI models and benchmarks to developers. By sharing pre-trained models and training code, the company hopes to spur innovation in behavioral foundation models and advance AI research in character animation.

Meta’s investments in AI and augmented reality, projected to reach up to $40 billion in 2024, underscore its commitment to driving Metaverse innovation. According to Meta, the release of Motivo and LCM could democratize character animation and unlock new possibilities for lifelike NPCs and immersive virtual experiences.

Future Implications and Limitations

While Meta Motivo showcases impressive capabilities, its developers acknowledge several limitations, including performance gaps on certain tasks compared with specialized algorithms. Even so, the model’s ability to handle diverse prompts zero-shot positions it as a foundational step toward a fully interactive Metaverse.

By bridging the gap between realistic movement and intelligent behavior, Meta Motivo sets the stage for a future where virtual agents feel as dynamic and engaging as their human counterparts.