A Compact Powerhouse for Generative AI Tasks

- Unmatched Efficiency: Mistral Small 3 delivers 81% MMLU accuracy and generates 150 tokens per second, rivaling larger models like Llama 3.3 70B while running more than three times faster on the same hardware.

- Versatile Applications: From conversational AI to domain-specific fine-tuning, Mistral Small 3 is designed for low-latency, high-performance tasks across industries.

- Open-Source Commitment: Released under Apache 2.0, Mistral Small 3 is a robust foundation for innovation, offering flexibility for local deployment and customization.

AI is evolving rapidly, and Mistral Small 3 shows how much a compact model can do. Designed to handle 80% of generative AI tasks with high speed and accuracy, this 24-billion-parameter model is aimed at developers, researchers, and enterprises alike. Whether you’re building conversational agents, fine-tuning domain-specific models, or deploying AI locally, Mistral Small 3 offers a versatile and efficient starting point.

Why Mistral Small 3 Stands Out

1. Compact Yet Powerful

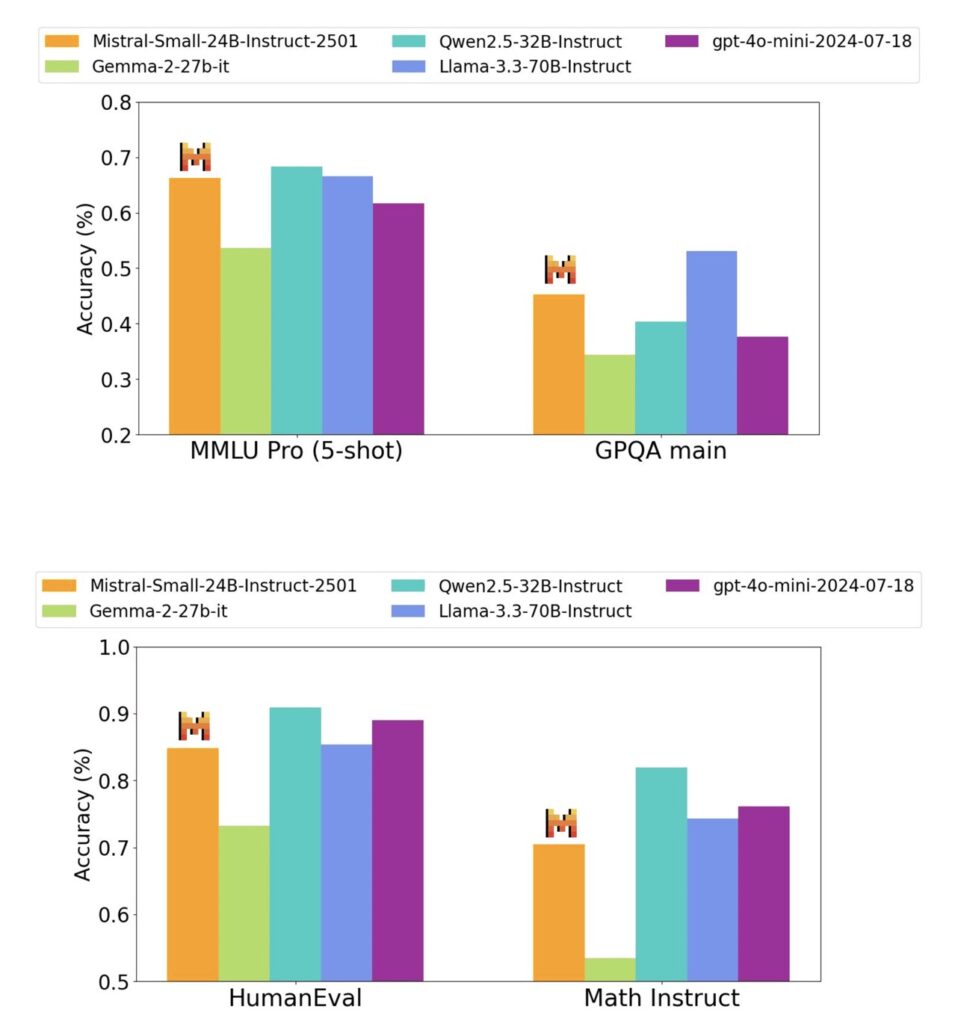

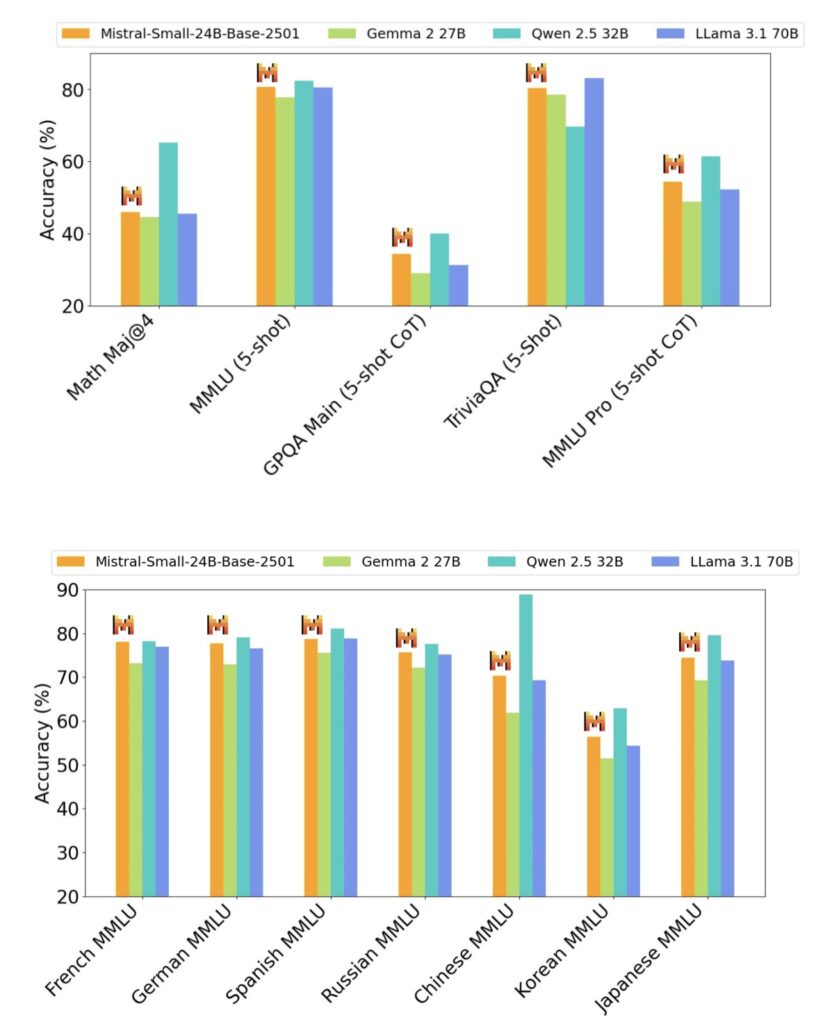

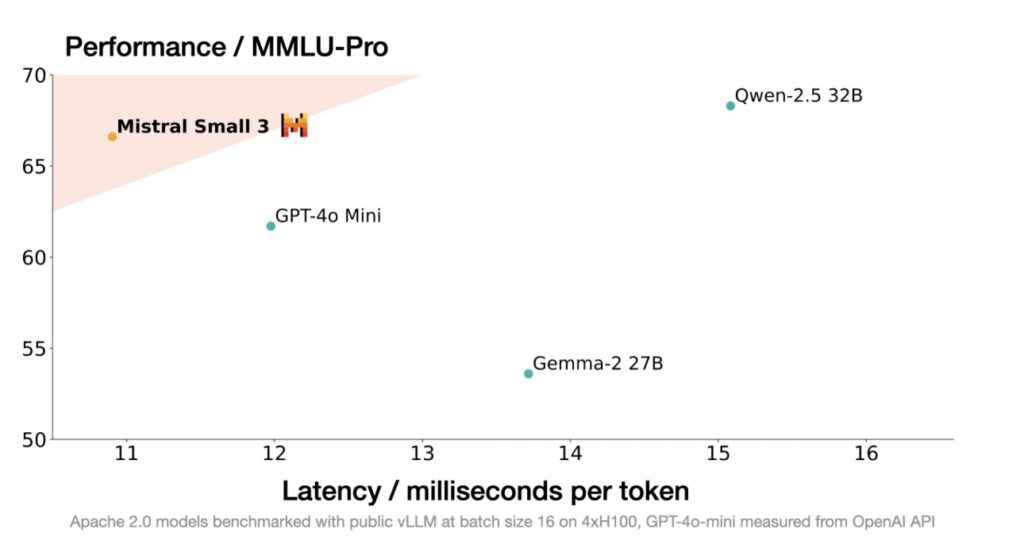

Mistral Small 3 achieves 81% accuracy on the MMLU benchmark, rivaling much larger models like Llama 3.3 70B and Qwen 32B. Despite its smaller size, it delivers competitive performance across tasks such as coding, math, general knowledge, and instruction following. This makes it an excellent open-source alternative to proprietary models like GPT-4o mini.

What truly sets Mistral Small 3 apart is its speed. It generates about 150 tokens per second, more than three times faster than larger models such as Llama 3.3 70B on the same hardware. This efficiency comes from a streamlined architecture with fewer layers, which reduces the time per forward pass without compromising accuracy.
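To make the speed figures concrete, here is a rough back-of-the-envelope sketch of what they mean for response time. It assumes a steady decode rate and ignores prompt processing and network overhead; the 500-token reply length is a hypothetical value chosen for illustration, and the baseline is simply "one third of the rate", not a measured number.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to emit num_tokens at a steady decode rate (prompt processing ignored)."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


REPLY_TOKENS = 500            # hypothetical reply length, for illustration only
SMALL_3_RATE = 150.0          # tokens per second, as quoted in the article
BASELINE_RATE = SMALL_3_RATE / 3  # assumed: a model exactly three times slower

small_3_seconds = generation_seconds(REPLY_TOKENS, SMALL_3_RATE)
baseline_seconds = generation_seconds(REPLY_TOKENS, BASELINE_RATE)

print(f"Mistral Small 3: {small_3_seconds:.1f} s")
print(f"3x slower model: {baseline_seconds:.1f} s")
```

Under these assumptions, a reply that takes a few seconds on Mistral Small 3 would take roughly three times as long on the slower model, which is the difference users notice in interactive chat.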

2. Versatility Across Use Cases

Mistral Small 3 is designed to excel in a wide range of applications, making it a go-to solution for diverse industries:

- Fast-Response Conversational AI: Ideal for virtual assistants and chatbots, Mistral Small 3 ensures quick, accurate responses for real-time interactions.

- Low-Latency Function Execution: Perfect for automated workflows, it handles rapid function calls with ease.

- Domain-Specific Fine-Tuning: The model can be customized to become a subject matter expert in fields like legal advice, medical diagnostics, and technical support.

- Local Deployment: When quantized, Mistral Small 3 can run efficiently on a single RTX 4090 or a MacBook with 32 GB of RAM, making it a great choice for privacy-conscious users and organizations.
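The local-deployment point follows from simple arithmetic on the weight size. The sketch below estimates the memory needed for the weights of a 24-billion-parameter model at different precisions. The 20% headroom for the KV cache and activations is an assumed, illustrative figure, not a measured one, and real quantization formats carry some extra per-block overhead that this ignores.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def fits(num_params: float, bits_per_weight: float, budget_gb: float,
         headroom: float = 0.20) -> bool:
    """True if weights plus an assumed headroom fraction fit in budget_gb."""
    return weight_memory_gb(num_params, bits_per_weight) * (1 + headroom) <= budget_gb


PARAMS = 24e9        # 24 billion parameters, as stated in the article
RTX_4090_GB = 24.0   # VRAM on an RTX 4090

for bits in (16, 8, 4):
    size = weight_memory_gb(PARAMS, bits)
    verdict = "fits" if fits(PARAMS, bits, RTX_4090_GB) else "does not fit"
    print(f"{bits:>2}-bit: {size:5.1f} GB of weights, {verdict} in {RTX_4090_GB:.0f} GB")
```

At 16-bit precision the weights alone need about 48 GB, which is why quantization to around 4 bits (about 12 GB) is what brings the model within reach of a single consumer GPU or a 32 GB laptop.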

3. Open-Source and Developer-Friendly

Mistral Small 3 is released under the Apache 2.0 license, ensuring maximum flexibility for developers. Both pre-trained and instruction-tuned checkpoints are available, providing a solid foundation for building advanced AI systems. Unlike some models, Mistral Small 3 is not trained with reinforcement learning or synthetic data, which makes it a clean base for further customization, including adding reasoning capabilities on top.

The model is already accessible on platforms like Hugging Face, Ollama, Kaggle, and Together AI, with more integrations coming soon on NVIDIA NIM, Amazon SageMaker, and Databricks. This broad availability ensures that developers can easily incorporate Mistral Small 3 into their preferred tech stacks.
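As one illustration of local integration, the sketch below builds a request for a locally running Ollama server using only the Python standard library. It assumes Ollama's default endpoint on port 11434 and its `/api/generate` route. The model tag `mistral-small:24b` is an assumption and should be checked against the tags actually installed (for example with `ollama list`).

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL_TAG = "mistral-small:24b"  # assumed tag; verify against your local install


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text (requires a running server)."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]
```

With the server running and the model pulled, calling `generate("Summarize the Apache 2.0 license in one sentence.")` should return the reply as a string. Because the request never leaves the machine, this pattern suits the privacy-sensitive deployments the article describes.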

Real-World Applications

Mistral Small 3 is already being evaluated across multiple industries, showcasing its potential to transform workflows and enhance productivity:

- Financial Services: Fraud detection and risk analysis.

- Healthcare: Customer triaging and diagnostics.

- Robotics and Manufacturing: On-device command and control for automation.

- Customer Service: Virtual assistants and sentiment analysis for improved user experiences.

Its ability to handle sensitive data locally makes it particularly appealing for organizations prioritizing privacy and security.

A Model Built for the Future

Mistral Small 3 is more than a single release; it reflects a vision for the future of open-source AI. By offering a compact, efficient, and versatile solution, it empowers developers and enterprises to innovate without the constraints of proprietary systems.

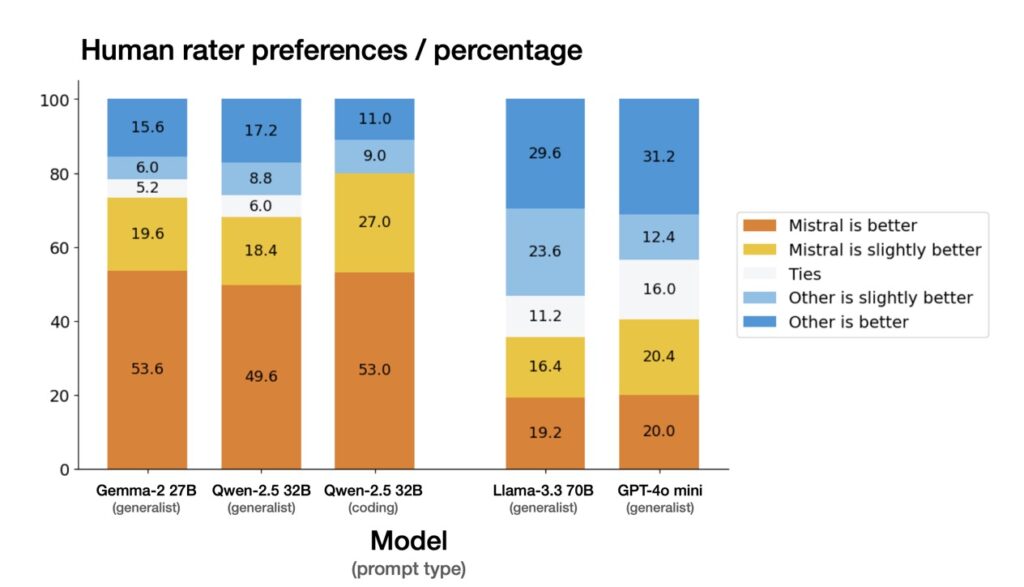

The model’s performance has been rigorously tested through side-by-side evaluations with over 1,000 proprietary coding and generalist prompts. These evaluations confirm that Mistral Small 3 holds its own against much larger models, delivering high-quality results across a variety of benchmarks.

The Road Ahead

Mistral Small 3 is just the beginning. The team at Mistral is committed to advancing open-source AI, with plans to release both small and large models featuring enhanced reasoning capabilities in the near future. By adhering to the Apache 2.0 license, Mistral ensures that its models remain accessible, modifiable, and free to use for any purpose.

For developers and enterprises seeking specialized capabilities, Mistral also offers commercial models tailored to specific tasks like code completion and domain-specific knowledge.

Mistral Small 3 represents a significant leap forward in AI technology, combining efficiency, versatility, and accessibility in a single package. Whether you’re a hobbyist, a researcher, or a business leader, this model opens up new possibilities for innovation and problem-solving.

Explore Mistral Small 3 today and be part of the journey to shape the future of AI. With its competitive performance and open-source ethos, it leaves plenty of room to build.