Streamlined, scalable, and precise—OmniControl reshapes how we generate and control images using AI.

- OmniControl introduces an efficient framework for image-conditioned control in diffusion models, requiring minimal additional parameters.

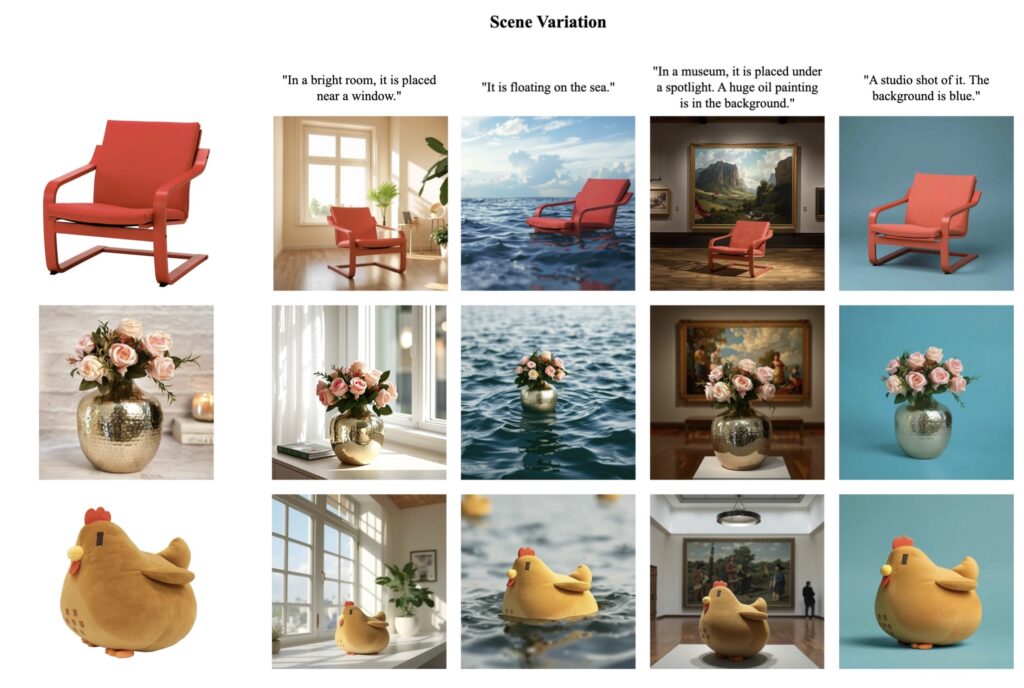

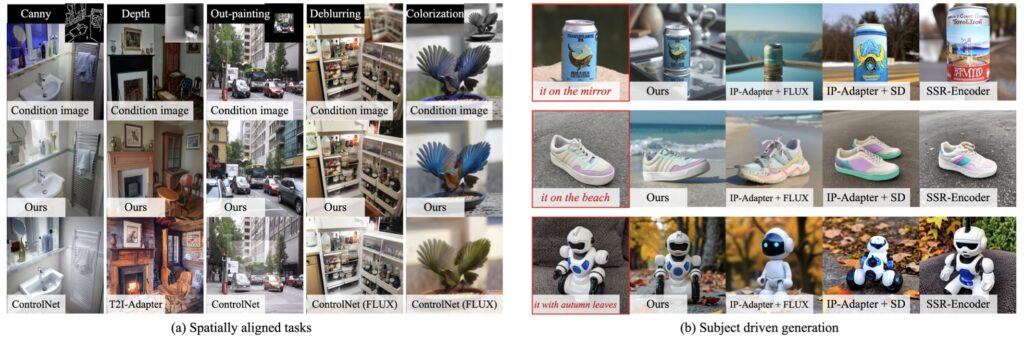

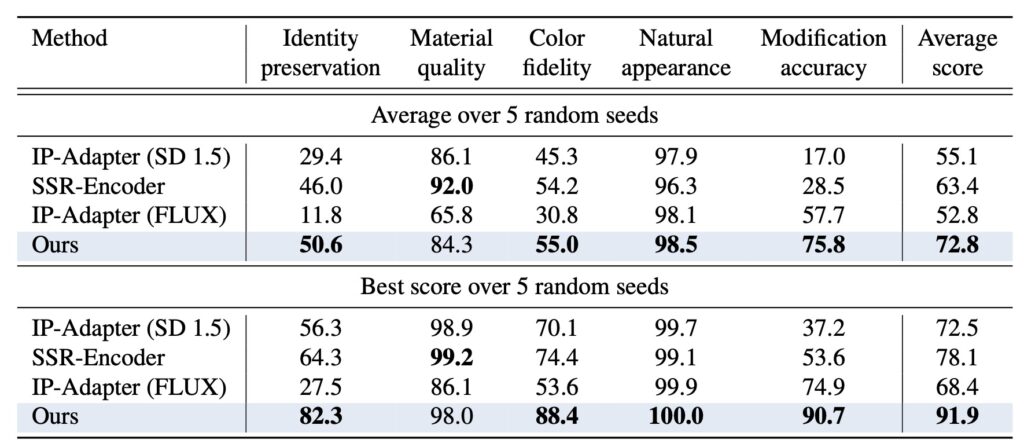

- It excels in diverse tasks, from subject-driven generation to spatially-aligned controls like depth and edges.

- The newly released Subjects200K dataset promises to advance research in subject-consistent image generation.

The field of AI-driven visual generation has advanced rapidly, with diffusion models leading the way in producing highly realistic images. Controlling these models with precision and flexibility, however, remains a challenge. OmniControl is a framework that addresses it by integrating image conditions into Diffusion Transformer (DiT) models efficiently and across a wide range of tasks.

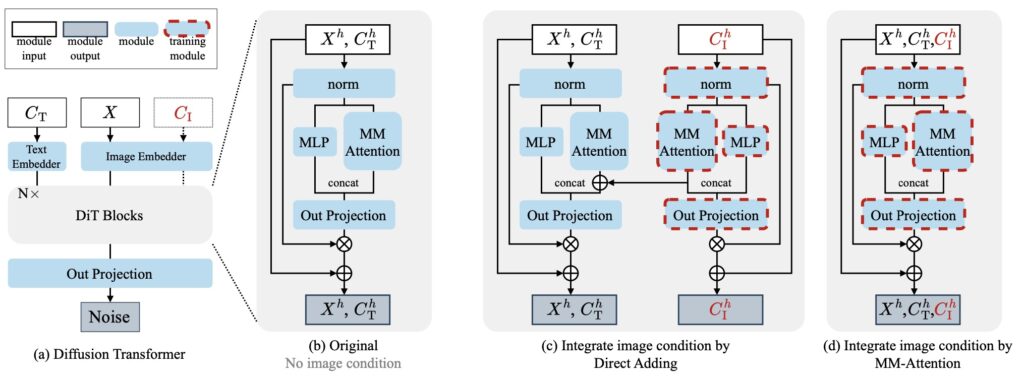

OmniControl adds only about 0.1% more parameters to the base model and needs no complex additional encoders. Instead, a parameter reuse mechanism lets the DiT itself serve as the backbone that encodes image conditions and processes them with multi-modal attention. The result is a single, unified framework that handles both spatial and subject-driven conditions and adapts to a variety of tasks.
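To make the idea of processing image conditions with multi-modal attention more concrete, here is a minimal sketch. It assumes that text tokens, noisy image tokens, and condition image tokens are concatenated into one sequence and passed through a single self-attention step. The token values, the `joint_attention` helper, and the use of identity projections (queries, keys, and values all equal to the tokens) are illustrative assumptions, not the OmniControl implementation.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def joint_attention(tokens: List[Vector]) -> List[Vector]:
    """Single-head self-attention with identity projections (Q = K = V = tokens).

    A real DiT block uses learned projections and many heads; this toy
    version only shows that every token attends to every other token.
    """
    dim = len(tokens[0])
    attended = []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        attended.append(
            [sum(w * value[d] for w, value in zip(weights, tokens)) for d in range(dim)]
        )
    return attended


# Hypothetical 2-dimensional tokens for each modality.
text_tokens = [[1.0, 0.0], [0.0, 1.0]]
noisy_image_tokens = [[0.5, 0.5], [0.2, 0.8], [0.9, 0.1]]
condition_tokens = [[0.3, 0.7], [0.6, 0.4], [0.1, 0.9]]

# One shared sequence: no separate encoder branch for the condition image.
sequence = text_tokens + noisy_image_tokens + condition_tokens
attended = joint_attention(sequence)

# Only the noisy image positions would be carried forward for denoising.
start = len(text_tokens)
image_out = attended[start:start + len(noisy_image_tokens)]
```

Because the condition tokens sit in the same sequence as everything else, the image tokens can read from them through ordinary attention weights, which is one plausible way a model can avoid a dedicated condition encoder.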

Expanding the Possibilities of Image-Conditioned Control

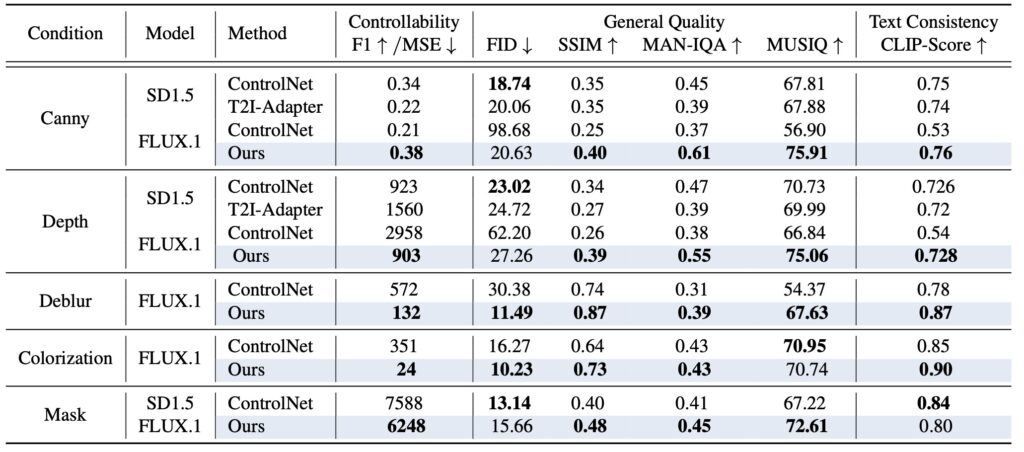

Traditional methods of guiding diffusion models rely heavily on text prompts or on additional architectural components. These approaches work up to a point, but they often struggle with spatial precision or add significant computational overhead. OmniControl instead uses a streamlined, image-based conditioning method that accurately supports tasks such as subject-driven generation, depth control, and edge-based structuring.

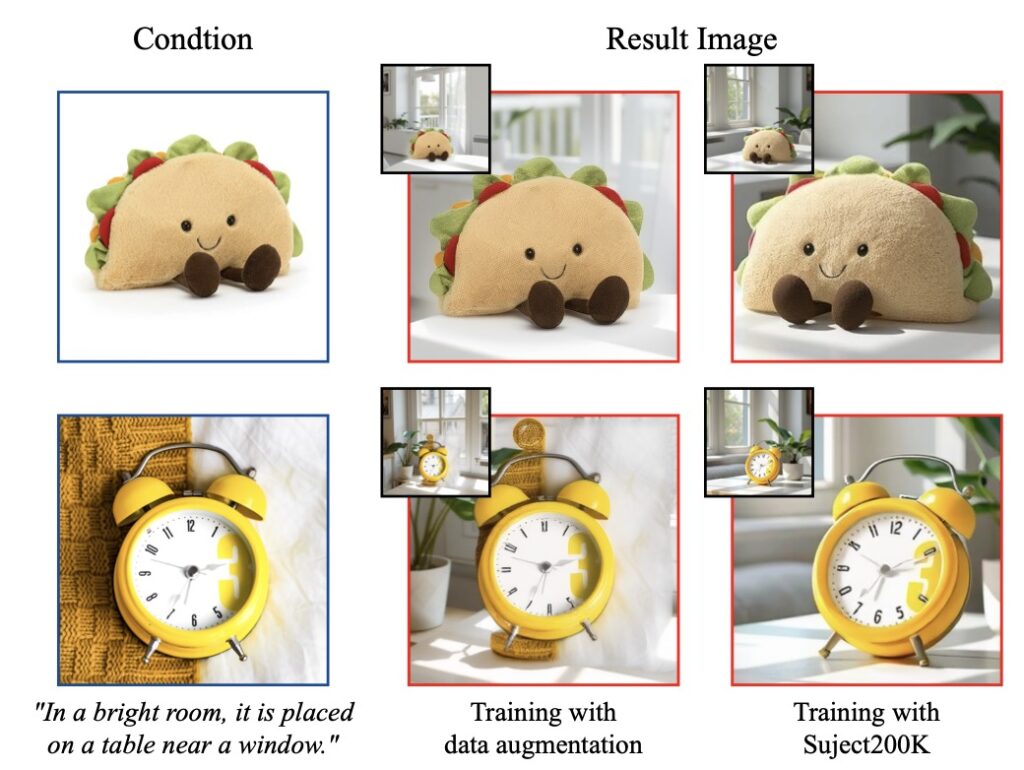

The model is trained on images generated by the DiT itself. This self-referential training strategy improves efficiency and helps keep subjects consistent in subject-driven tasks. Because OmniControl handles these requirements without additional encoder modules, it is a versatile tool for researchers and developers alike.

The Subjects200K Dataset: A Game-Changer for Research

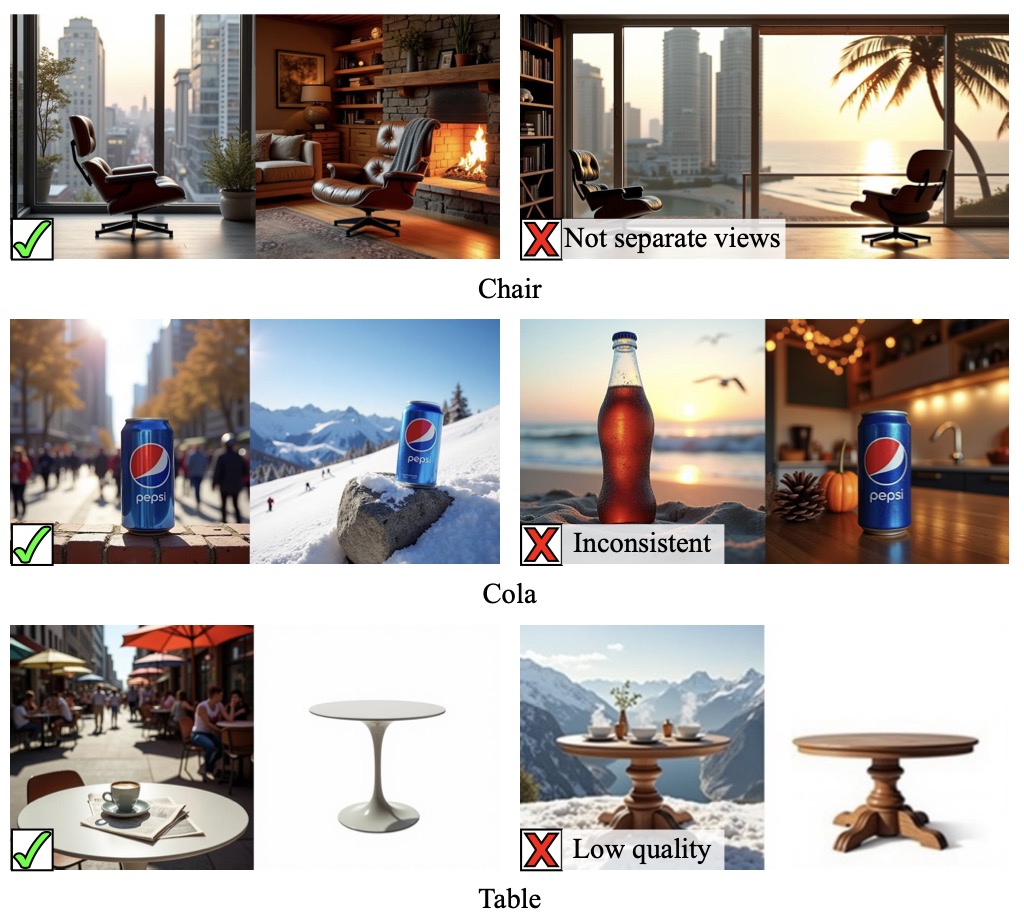

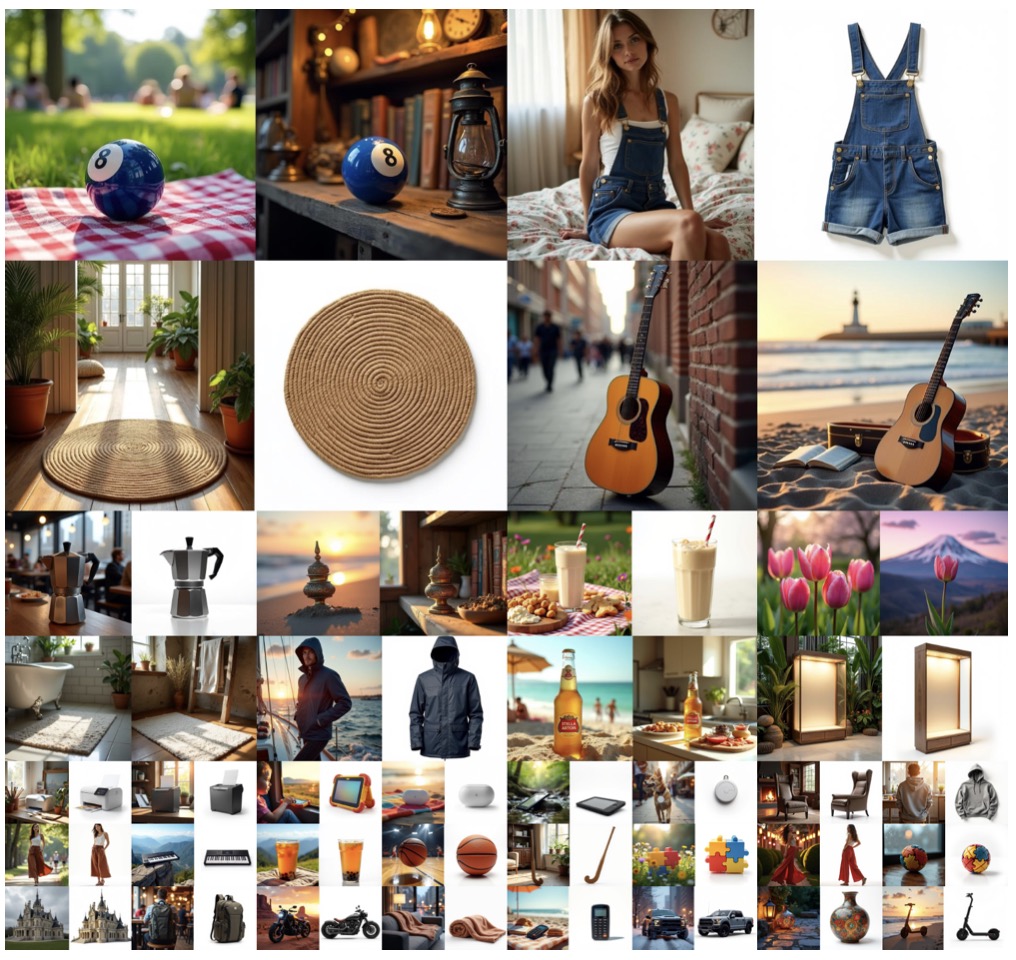

OmniControl is accompanied by the Subjects200K dataset, a collection of more than 200,000 identity-consistent images that gives researchers a high-quality resource for advancing subject-consistent image generation. The dataset also includes a data synthesis pipeline, so researchers can build on it to explore new directions in AI-driven visual creativity.
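As a rough illustration of how identity-consistent images might be used for subject-driven training, the sketch below groups images by subject and forms (condition, target) pairs within each group. The record layout, file names, and the `build_pairs` helper are hypothetical; the article does not describe the actual schema of Subjects200K.

```python
from collections import defaultdict
from itertools import permutations
from typing import Dict, List, Tuple

# Hypothetical records of the form (subject_id, image_path).
records: List[Tuple[str, str]] = [
    ("mug_001", "mug_001_a.png"),
    ("mug_001", "mug_001_b.png"),
    ("shoe_007", "shoe_007_a.png"),
    ("shoe_007", "shoe_007_b.png"),
    ("shoe_007", "shoe_007_c.png"),
]


def build_pairs(items: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return ordered (condition, target) pairs of images showing the same subject."""
    by_subject: Dict[str, List[str]] = defaultdict(list)
    for subject_id, path in items:
        by_subject[subject_id].append(path)

    pairs: List[Tuple[str, str]] = []
    for paths in by_subject.values():
        # Every image can condition the generation of every other image
        # of the same subject, so ordered pairs are used.
        pairs.extend(permutations(paths, 2))
    return pairs


pairs = build_pairs(records)
for condition, target in pairs:
    print(f"condition={condition} -> target={target}")
```

Pairs are never formed across subjects, which is the property that makes identity-consistent data useful for this kind of training.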

By releasing this dataset to the public, the OmniControl team both extends what diffusion models can achieve and encourages collaborative work. Researchers can now experiment with new techniques and use OmniControl’s framework to explore image-conditioned generation further.

Setting a New Standard for Diffusion Models

OmniControl marks a shift in how controllable image generation is approached. Its parameter-efficient design, combined with its ability to handle diverse tasks, makes it a strong foundation for the next generation of AI applications. From subject-driven animations to complex spatial controls, OmniControl’s scalability and precision set a high bar for diffusion models.

With its framework and the release of the Subjects200K dataset, OmniControl is more than a tool: it points to a direction for AI-driven creativity. As researchers and developers begin to explore its full potential, OmniControl promises to expand what is possible in visual generation.