New AI Model Converts Simple Sketches into Playable 3D Game Scenes, Pushing the Boundaries of Content Creation

- Sketch-Based 3D Scene Generation: Sketch2Scene leverages user sketches and text prompts to generate interactive 3D game scenes using advanced deep-learning models.

- Overcoming Data Limitations: The method utilizes pre-trained 2D diffusion models and procedural content generation to address the challenge of limited 3D scene training data.

- Seamless Game Integration: The resulting 3D scenes are fully compatible with game engines like Unity or Unreal, enabling easy integration into game development workflows.

Creating 3D content for video games, films, and virtual reality experiences has traditionally been labor-intensive, demanding significant expertise and time. Sketch2Scene, a new deep-learning approach, aims to cut that cost by turning casual user sketches and text prompts into interactive, playable 3D game scenes. By lowering the skill barrier, it could open 3D scene creation to non-experts and speed up game prototyping and design.

The Magic Behind Sketch2Scene

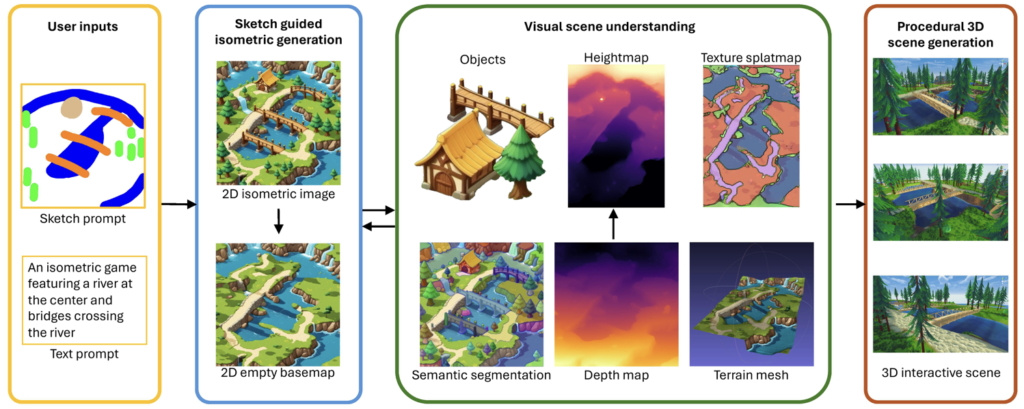

At its core, Sketch2Scene takes casual, hand-drawn sketches and text prompts as input and passes them through a multi-stage pipeline that produces a 3D game environment. The process begins with a pre-trained 2D denoising diffusion model, which converts the sketch into a conceptual 2D image. This image is rendered in isometric projection, which factors out the unknown camera angle so that the scene’s layout can be read directly from the image.
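To see why a fixed isometric view helps, consider how it maps ground positions to image positions: the mapping is linear and invertible, so a point in the image corresponds to exactly one spot on the ground. The sketch below illustrates that idea only. It is not the authors' code, the function names are hypothetical, and it assumes the common unscaled isometric drawing convention (horizontal axes at 30 degrees, no foreshortening factor).

```python
import math

COS30 = math.cos(math.radians(30))
SIN30 = math.sin(math.radians(30))


def project_isometric(x, y, z):
    """Map a 3D point (z is height) to 2D image coordinates (v grows downward)."""
    u = (x - y) * COS30
    v = (x + y) * SIN30 - z
    return u, v


def unproject_ground(u, v):
    """Recover the ground-plane (z = 0) position that projects to (u, v)."""
    diff = u / COS30   # equals x - y
    total = v / SIN30  # equals x + y
    return (diff + total) / 2, (total - diff) / 2


# Round trip: a ground point survives projection and un-projection.
u, v = project_isometric(3.0, 5.0, 0.0)
x, y = unproject_ground(u, v)
```

Because the camera is fixed by convention rather than estimated per image, no pose estimation step is needed before the layout is extracted.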

From this isometric image, Sketch2Scene uses image understanding techniques to segment the scene into meaningful components, such as buildings, trees, and other objects. These segments are then fed into a procedural content generation (PCG) engine, such as a 3D game engine like Unity or Unreal Engine, which builds a detailed 3D scene that closely matches the user’s original sketch.
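As a rough illustration of the hand-off from segmentation to procedural generation, the sketch below turns a per-pixel label map into a list of object placements that a PCG engine could instantiate. Everything here is an assumption made for the example: the label ids, the `extract_layout` name, and the placement format are hypothetical, and the actual system's segmentation and layout extraction are more involved.

```python
from collections import deque


def extract_layout(label_map, background=0):
    """Group same-label pixels into 4-connected regions, one placement per region."""
    rows, cols = len(label_map), len(label_map[0])
    seen = [[False] * cols for _ in range(rows)]
    placements = []
    for r in range(rows):
        for c in range(cols):
            label = label_map[r][c]
            if label == background or seen[r][c]:
                continue
            # Breadth-first flood fill over one connected region.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                pr, pc = queue.popleft()
                pixels.append((pr, pc))
                for nr, nc in ((pr + 1, pc), (pr - 1, pc), (pr, pc + 1), (pr, pc - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and not seen[nr][nc] and label_map[nr][nc] == label):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            center = (sum(p[0] for p in pixels) / len(pixels),
                      sum(p[1] for p in pixels) / len(pixels))
            placements.append({"label": label, "center": center, "area": len(pixels)})
    return placements


# Hypothetical label ids: 0 = ground, 1 = building, 2 = tree.
demo = [
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 2],
    [0, 0, 0, 0, 0],
    [2, 0, 0, 0, 0],
]
layout = extract_layout(demo)
```

Each placement records what to spawn, where, and roughly how large, which is the kind of structured input a procedural generator needs in place of raw pixels.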

Overcoming Challenges with Diffusion Models

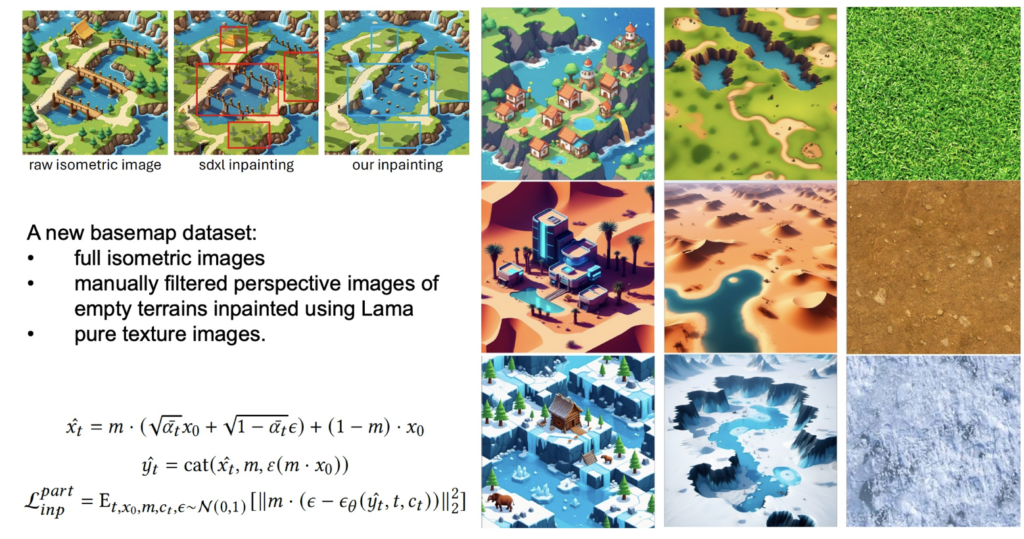

A major obstacle in 3D content generation is the lack of large-scale training data for 3D scenes. Sketch2Scene sidesteps this by building on pre-trained 2D diffusion models, which are trained on far more abundant image data, and enhancing them with specialized techniques such as SAL-enhanced ControlNet and step-unrolled diffusion inpainting. Combined with procedural generation, these techniques yield 3D scenes that are visually detailed, interactive, and ready for integration into existing game engines.

The multi-stage pipeline has drawbacks, however. Errors can accumulate from one stage to the next, sometimes forcing users to restart with a different noise seed. The developers have proposed several potential remedies, including generating multiple modalities concurrently (RGB, semantic, and depth data) and fusing them into a more coherent final result. In addition, terrain textures are currently retrieved from a pre-existing database, which restricts diversity; the team plans to address this by developing diffusion-based texture generation models.
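The restart-with-a-new-seed workaround can be pictured as a simple retry loop. The sketch below is illustrative only: `run_pipeline` and `is_acceptable` are hypothetical callables standing in for the real pipeline and for whatever check (manual or automatic) decides that a result is usable, and the demo pipeline just returns a seeded random score.

```python
import random


def generate_with_retries(run_pipeline, is_acceptable, seeds):
    """Try each seed in turn; return (seed, result) for the first acceptable result."""
    for seed in seeds:
        result = run_pipeline(seed)
        if is_acceptable(result):
            return seed, result
    return None, None  # every seed was rejected


def fake_pipeline(seed):
    """Stand-in for the real pipeline: a reproducible pseudo quality score in [0, 1)."""
    return random.Random(seed).random()


seed, score = generate_with_retries(fake_pipeline, lambda s: s > 0.8, range(100))
```

Since each attempt reruns every stage, this gets expensive quickly, which is one reason a more robust pipeline is preferable to repeated restarts.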

Game-Changing Potential

The ability to generate interactive 3D scenes from simple sketches could have far-reaching implications for the gaming industry. With Sketch2Scene, game designers can rapidly prototype ideas, artists can visualize concepts more effectively, and developers can streamline the creation process. Because the generated scenes are interactive from the start, they can be used directly in game development environments.

While still in its early stages, Sketch2Scene represents a significant step forward in the field of 3D content generation. As the technology matures, it could fundamentally change how games are developed, making it easier for creators of all skill levels to bring their ideas to life.