As Seoul races to become a top-three global AI powerhouse, a landmark law aims to balance safety with innovation, though local startups fear they may pay the price for being pioneers.

- Global Precedent: South Korea’s “AI Basic Act,” a comprehensive regulatory framework, has taken effect in full, ahead of the European Union’s phased rollout of its own rules, positioning the nation as a regulatory trailblazer.

- Mandatory Oversight: The new law mandates strict human oversight and transparency for “high-impact” AI in sectors such as nuclear safety, healthcare, and finance, and requires clear labeling of AI-generated content.

- Innovation vs. Regulation: While the government views the law as a foundation for trust and growth, the local tech industry has voiced “resentment” and concern that vague compliance requirements could stifle innovation, especially when set against the lighter-touch U.S. approach.

South Korea has staked its claim as a pioneer in AI regulation, bringing into force on Thursday what it describes as the world’s first comprehensive set of laws governing artificial intelligence. In a move designed to help the country become one of the world’s top three AI powers, Seoul is betting that a robust legal framework will foster the public trust needed for widespread adoption. However, this rapid push toward regulation has exposed a widening rift between government ambition and the anxieties of the country’s burgeoning startup ecosystem.

Defining “High-Impact” AI

At the heart of the new AI Basic Act is a focus on safety in critical sectors. The law introduces strict requirements for what it terms “high-impact” AI—systems deployed in sensitive fields such as nuclear safety, drinking water production, transportation, and healthcare. The scope also extends to financial services, specifically covering algorithms used for credit evaluation and loan screening.

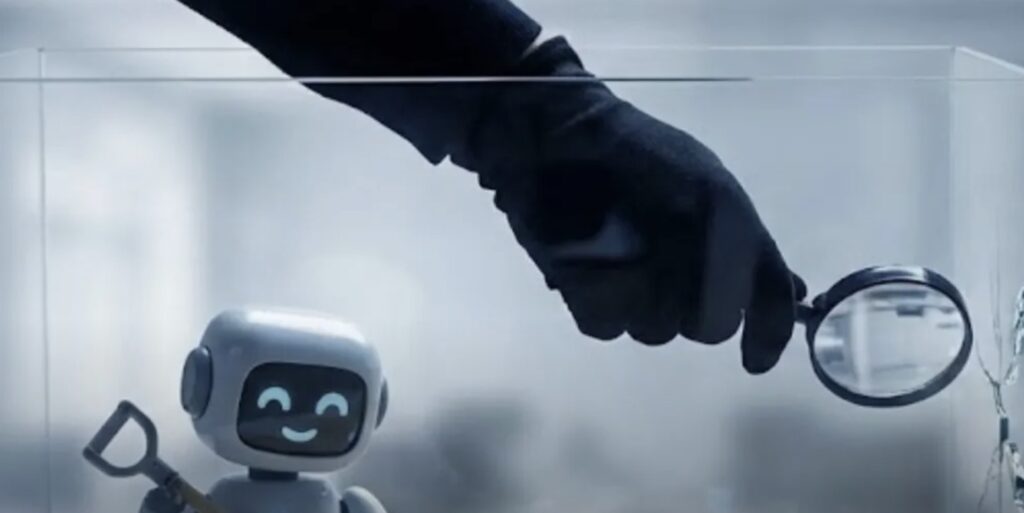

For companies operating in these spaces, the era of unmonitored automation is over. The legislation mandates human oversight of these high-stakes systems to prevent catastrophic errors. The law also tackles deepfakes and misinformation directly: companies must notify users in advance when they are interacting with high-impact or generative AI. Crucially, AI-generated output that is difficult to distinguish from reality must carry a visible label.

A Global Race for Regulation

South Korea’s legislative speed is notable on the world stage. By bringing its law into force all at once, Seoul has moved ahead of the European Union, where the comprehensive EU AI Act is still being rolled out in phases through 2027.

This move highlights the fragmented nature of global AI governance. While South Korea tightens the reins, the United States continues to favor a light-touch approach to avoid slowing Silicon Valley’s innovation engine. Meanwhile, China has introduced its own targeted rules and is advocating for a centralized body to coordinate regulation globally. South Korea is attempting to carve out a middle path, arguing that regulation can actually “promote AI adoption” by building a “foundation of safety and trust,” according to the Ministry of Science and ICT.

The Industry Pushback

Despite the government’s optimism, the mood among South Korean entrepreneurs is tense. Lim Jung-wook, co-head of South Korea’s Startup Alliance, voiced the frustration of many founders who feel they are being used as test subjects. “There’s a bit of resentment — why do we have to be the first to do this?” he asked, reflecting fears that compliance costs will hamstring Korean firms while their international competitors run free.

The primary complaint is ambiguity. Jeong Joo-yeon, a senior researcher at the alliance, warned that the law’s language remains “vague.” The fear is that, without clear definitions, companies will default to the most risk-averse approach to avoid penalties, stifling creativity and ambitious development.

Penalties and the Path Forward

The consequences for non-compliance are significant. Failing to properly label generative AI output, for instance, could result in administrative fines of up to 30 million won ($20,400). The government has built in a buffer: companies will be granted a grace period of at least one year before authorities begin levying these fines.

Science Minister Bae Kyung-hoon, a former head of AI research at LG, championed the law as a “critical institutional foundation.” To bridge the gap between policy and practice, the ministry has promised to launch a guidance platform and a dedicated support center. In a nod to industry concerns, a spokesperson noted that authorities are willing to extend the grace period if domestic and global market conditions warrant it, promising to “continue to review measures to minimize the burden on industry.”